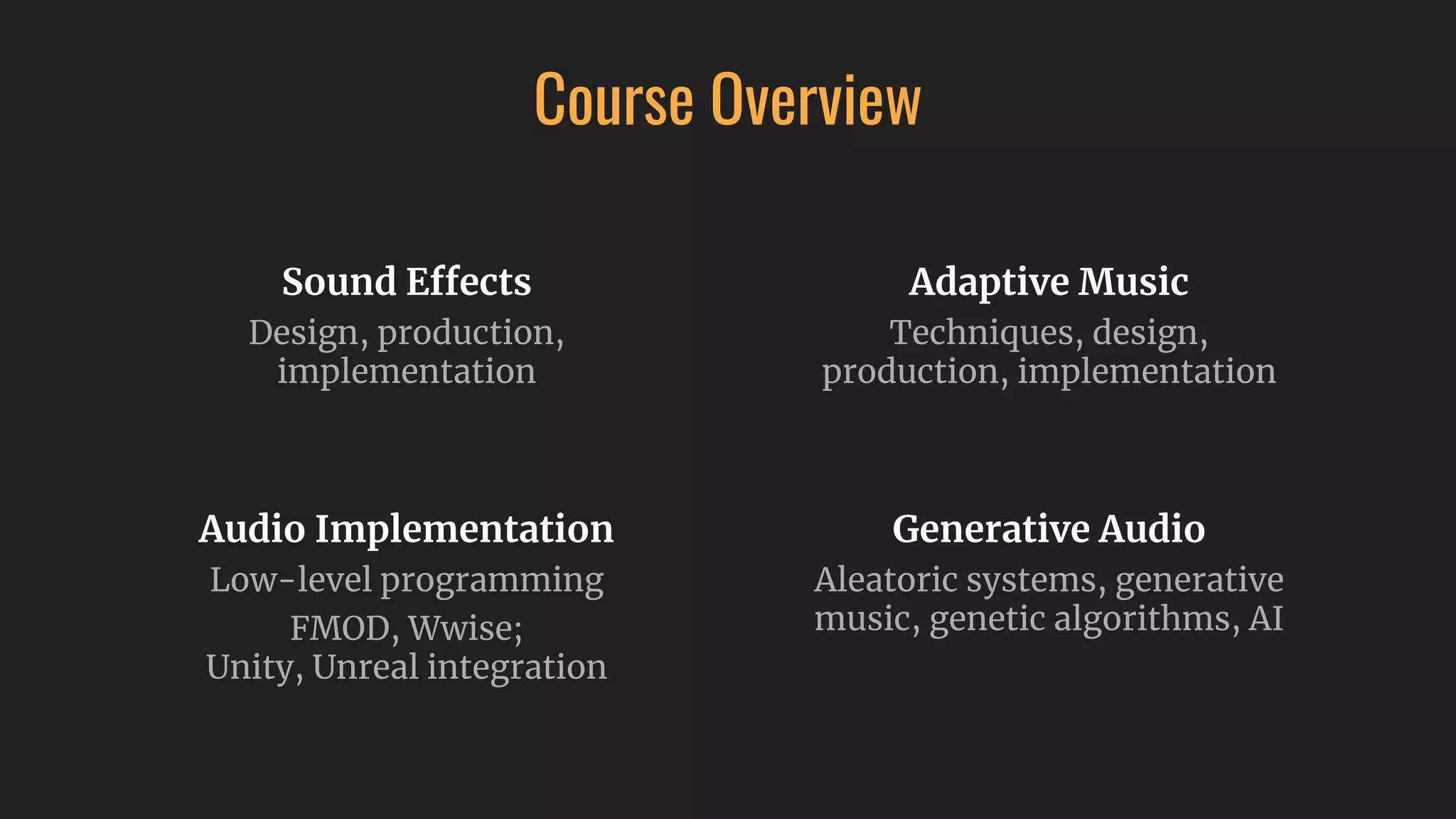

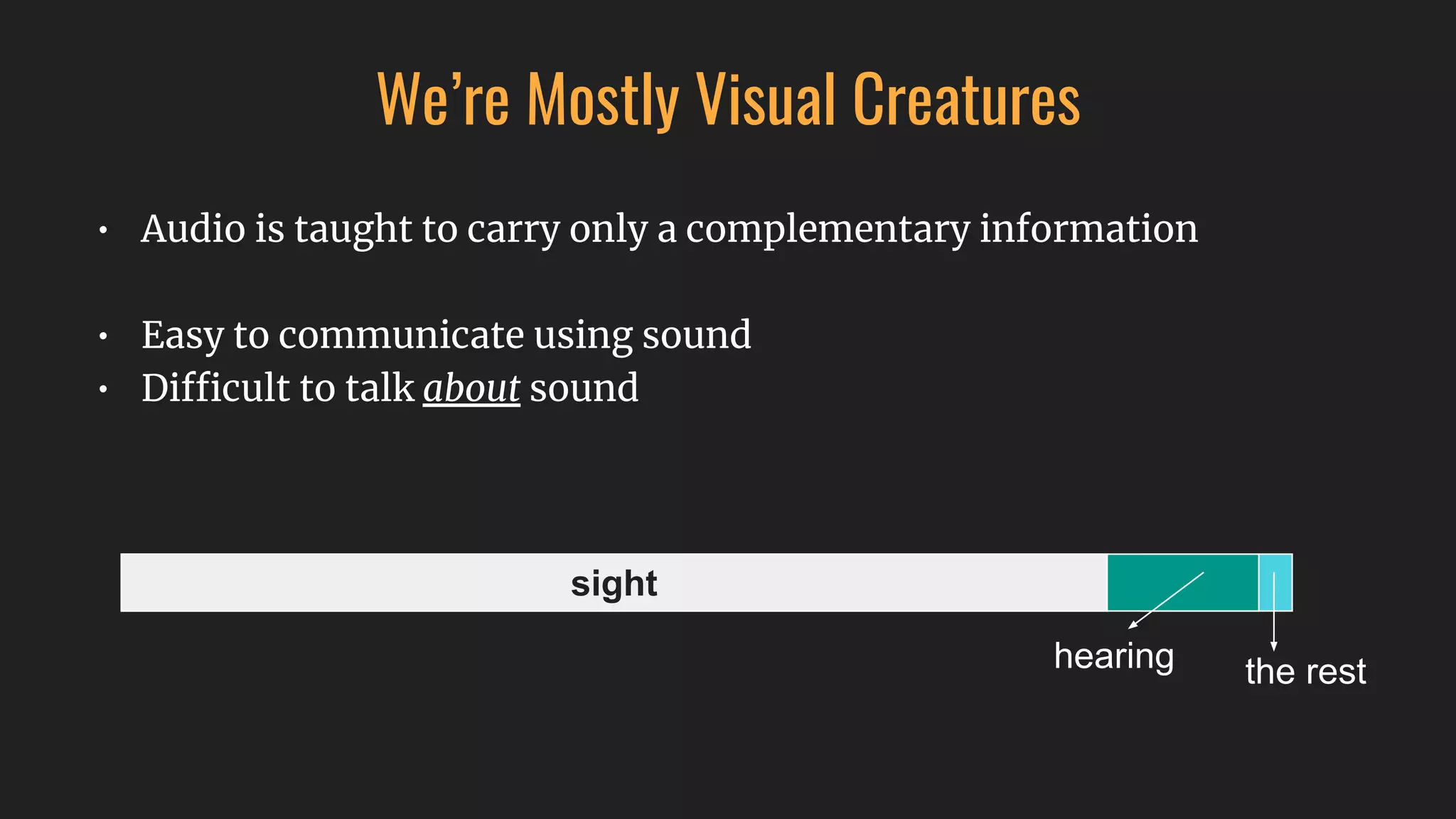

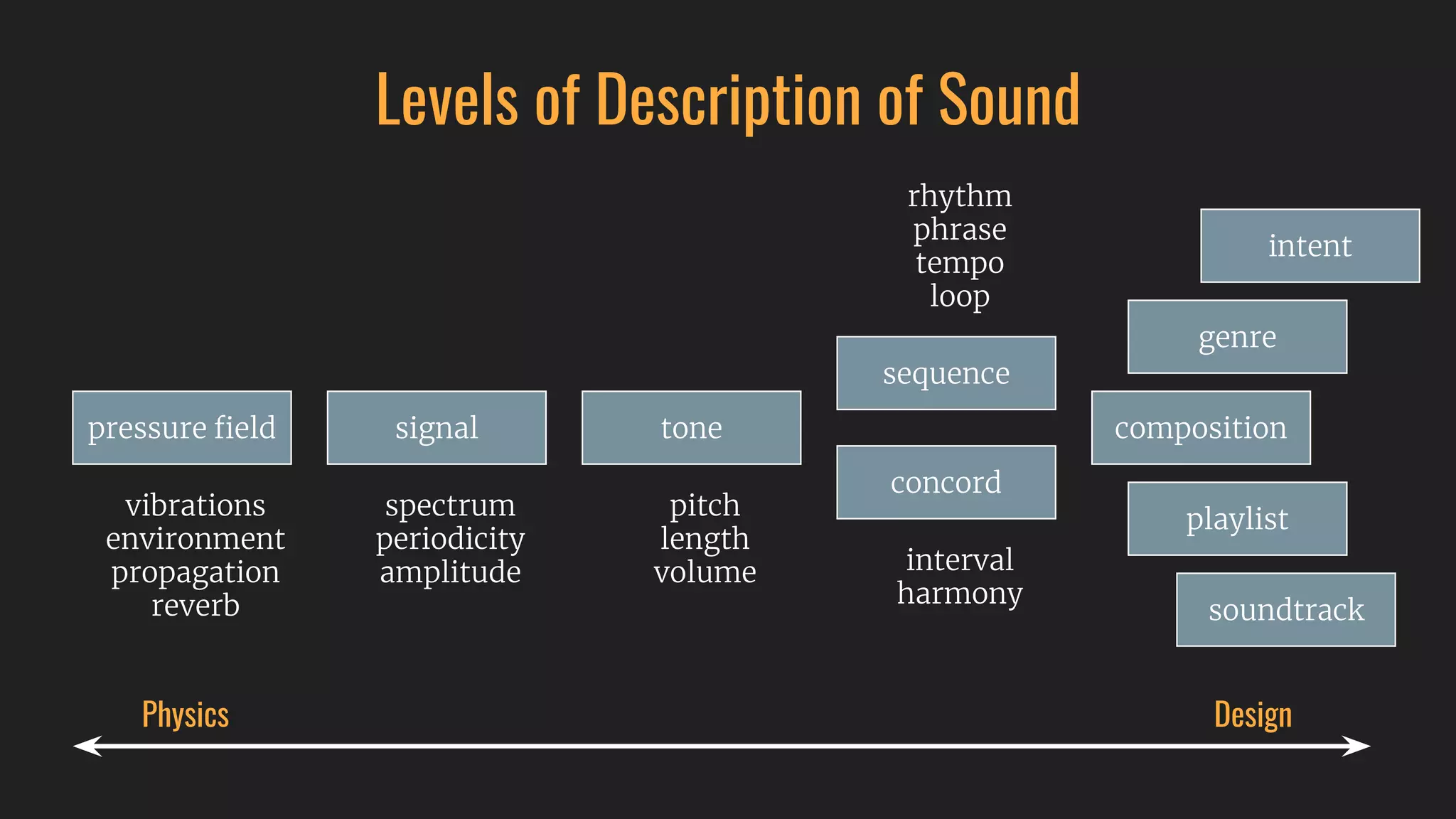

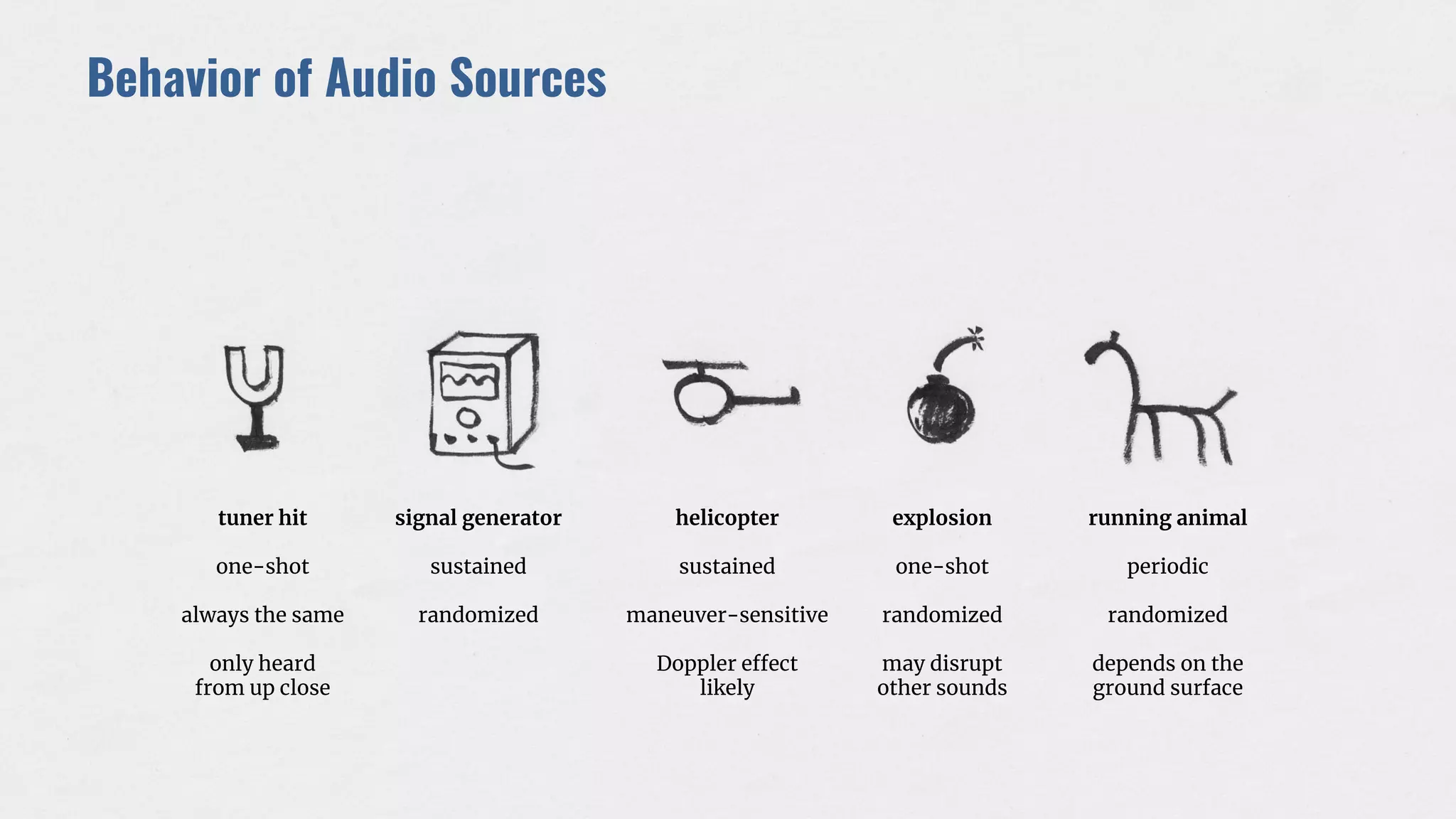

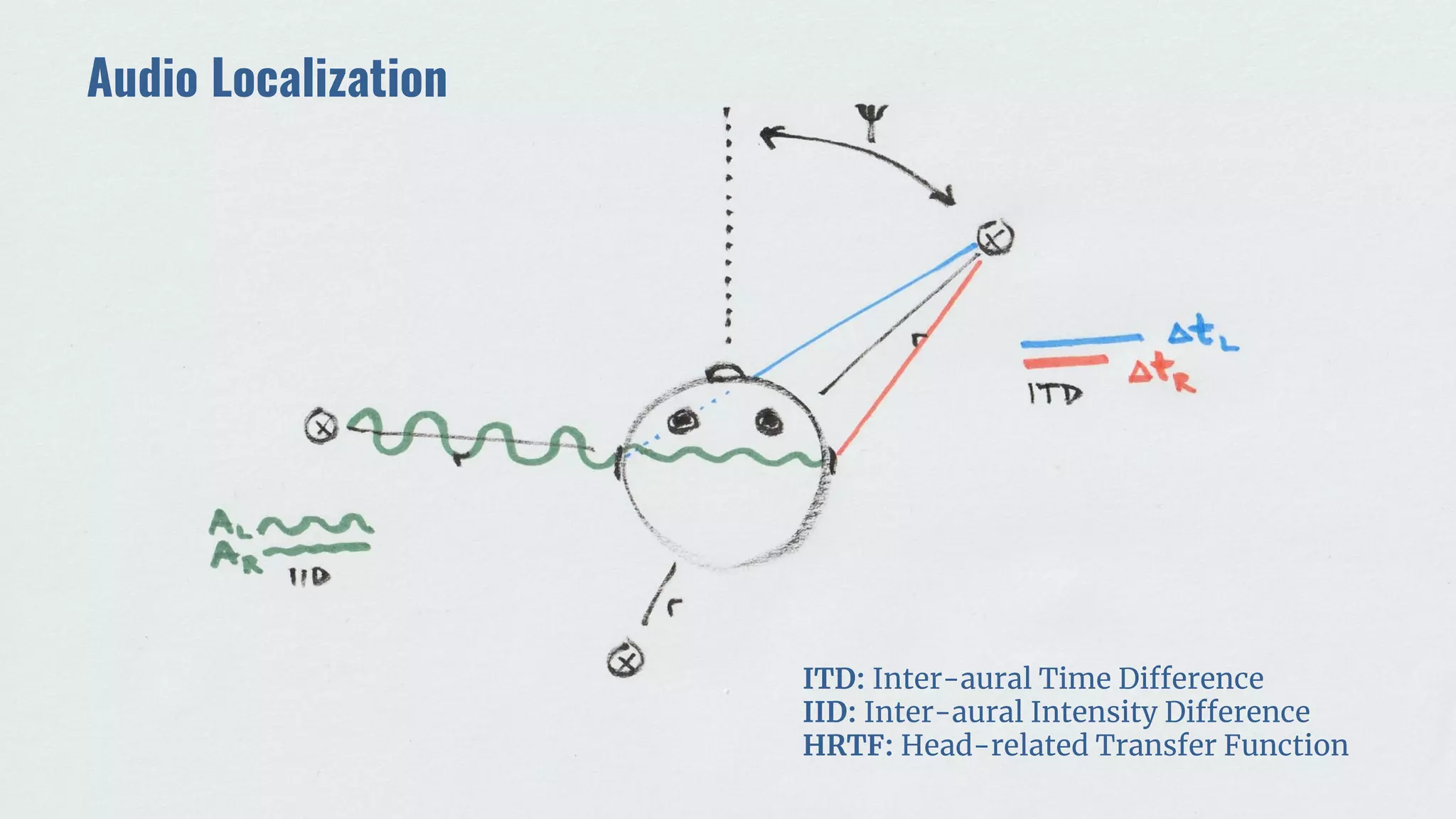

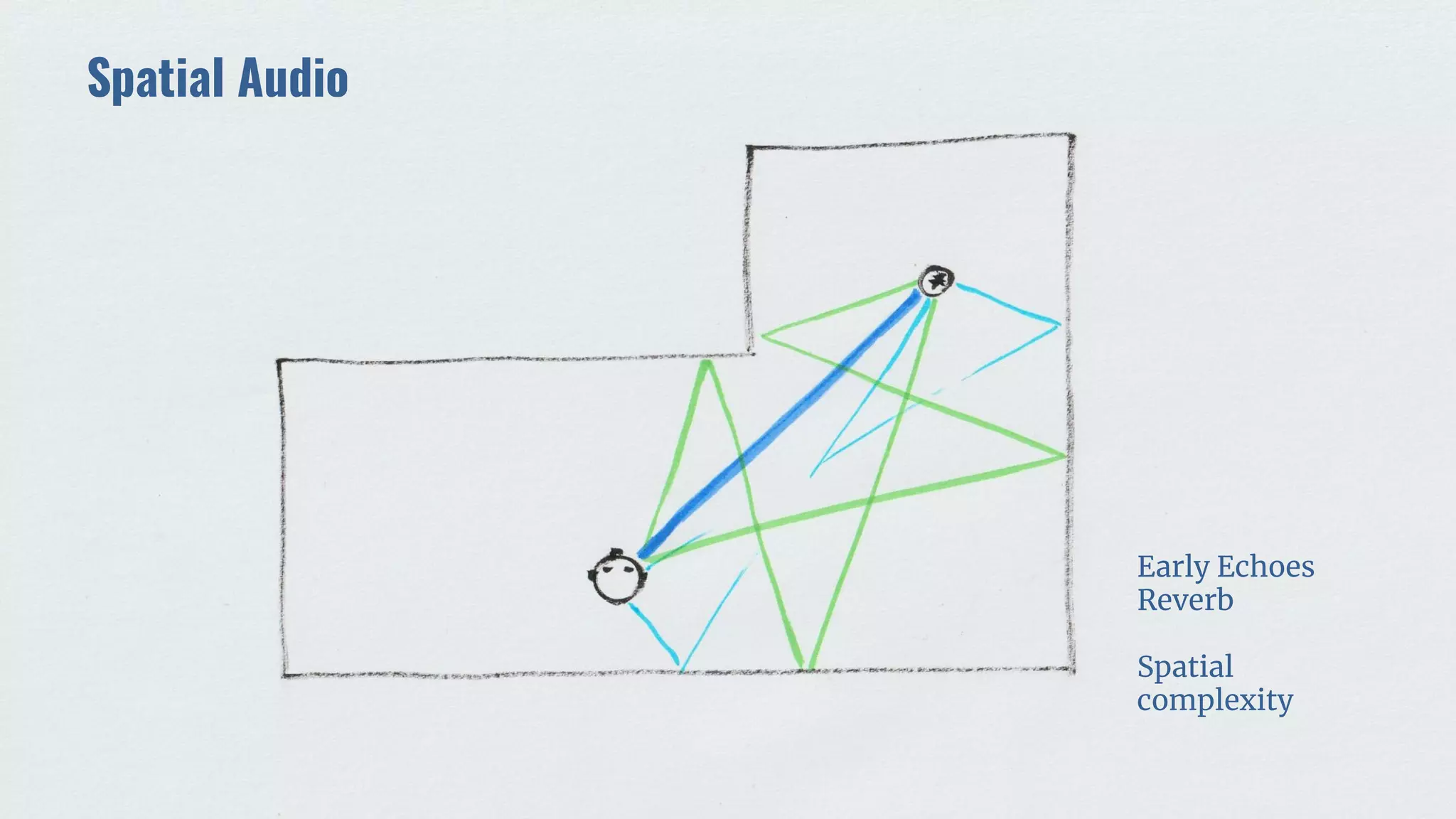

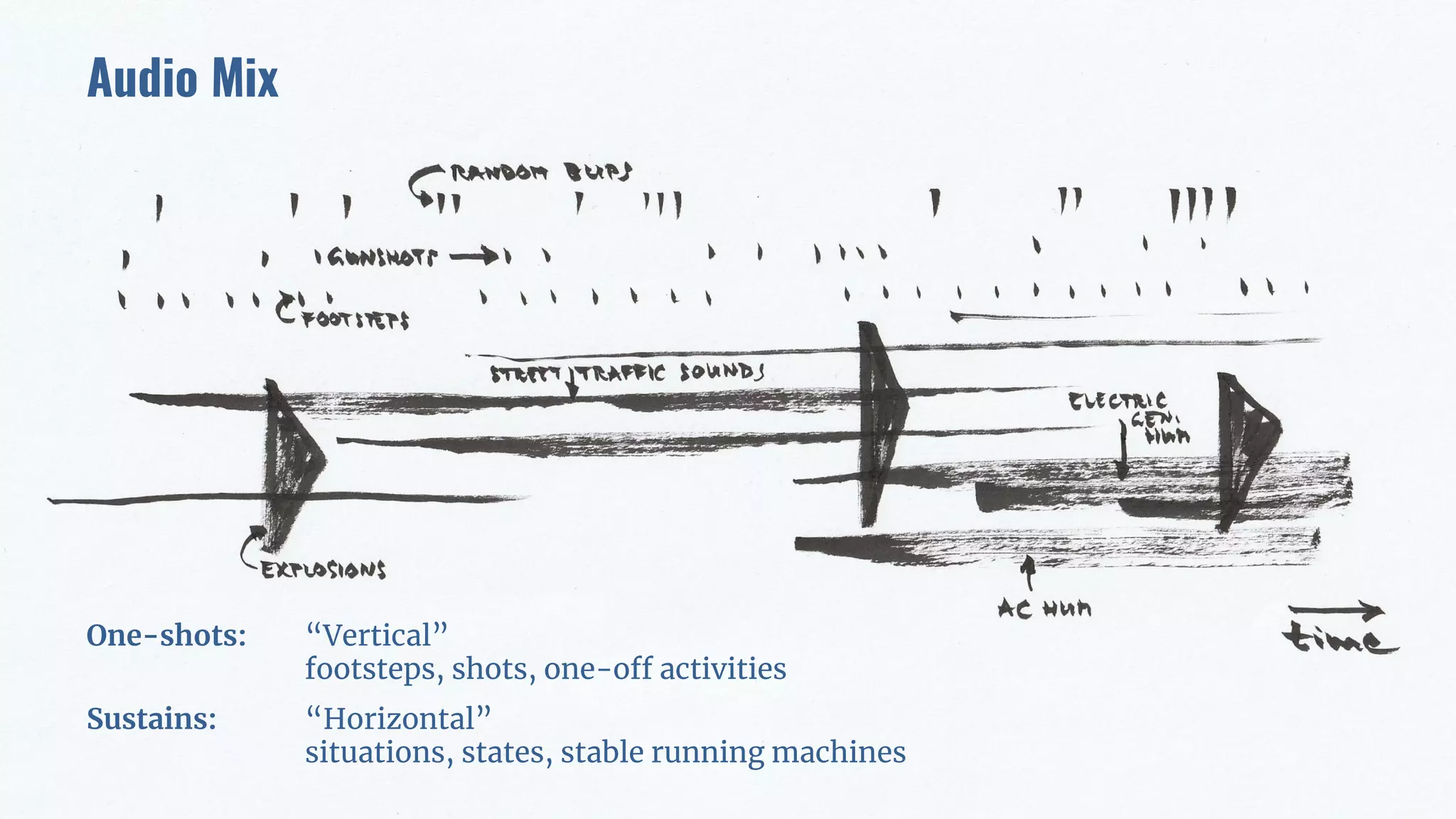

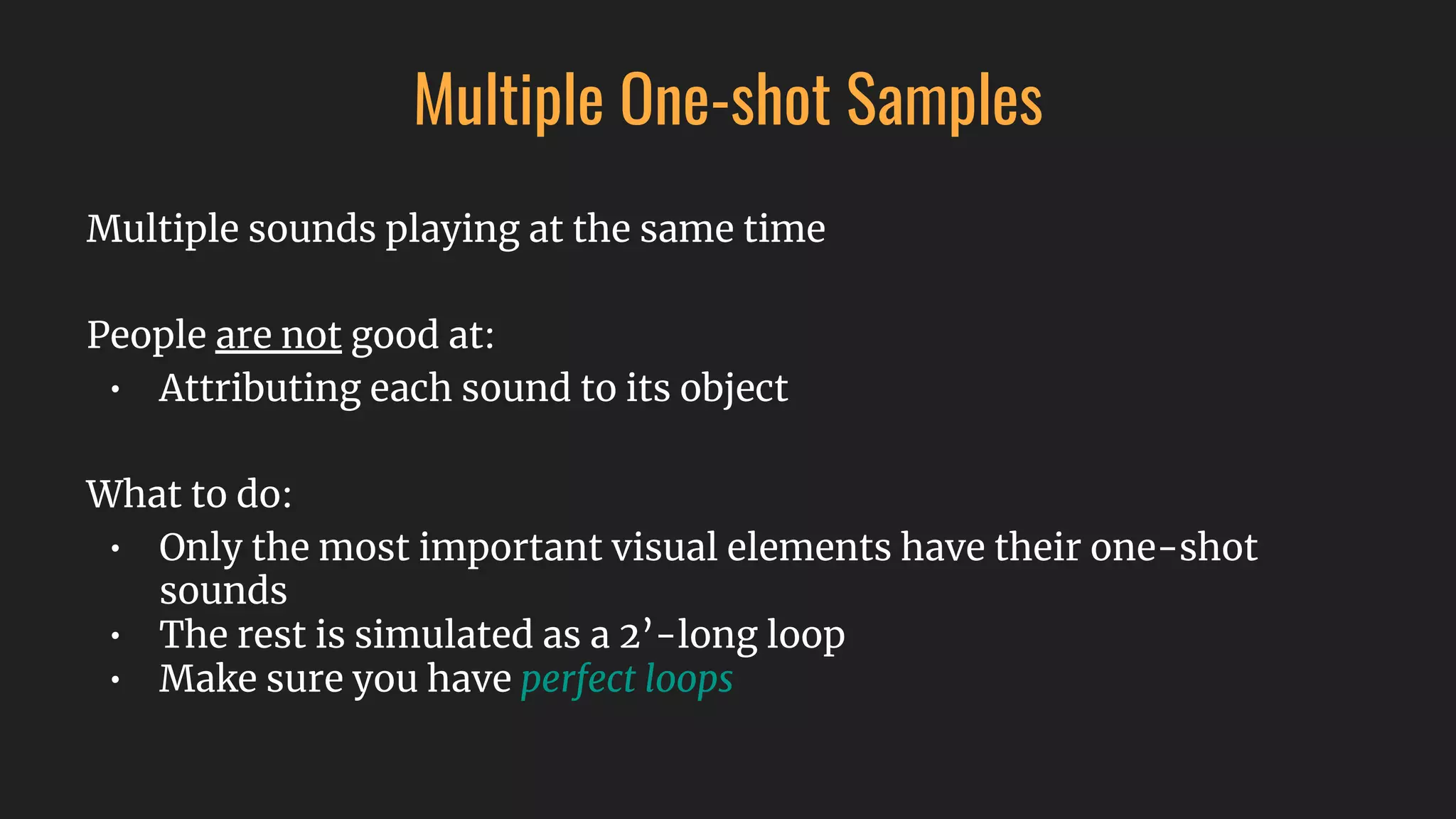

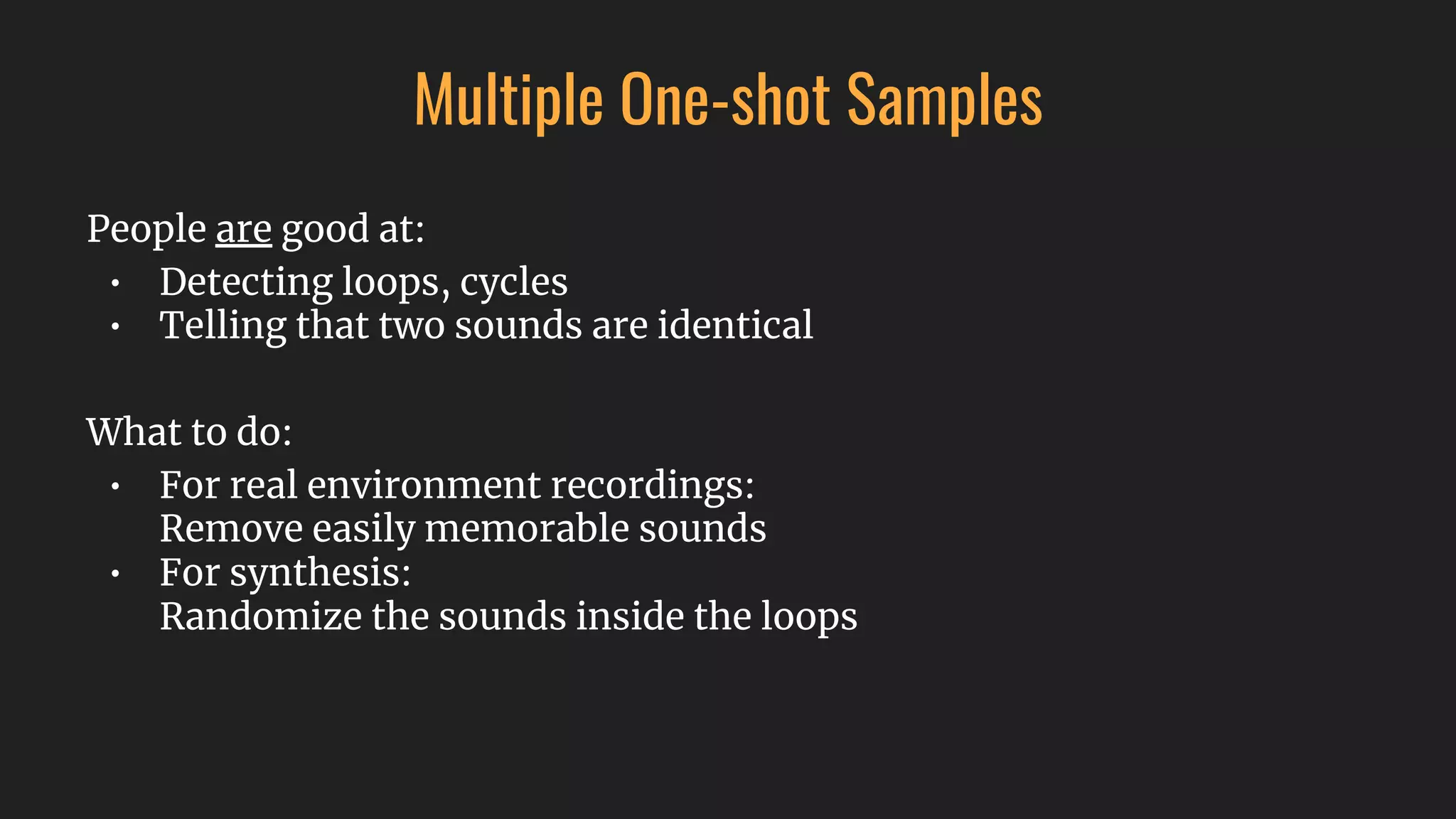

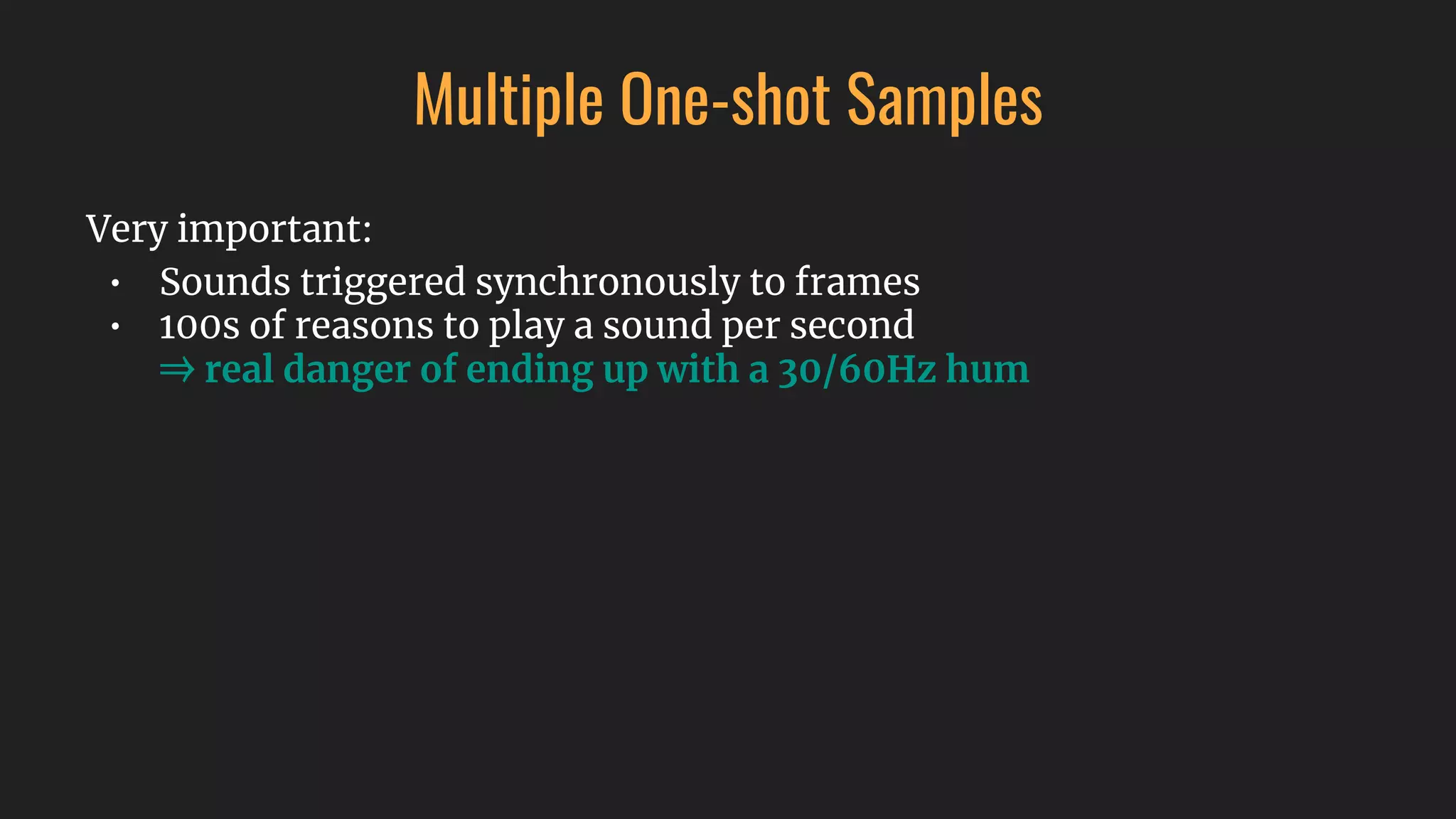

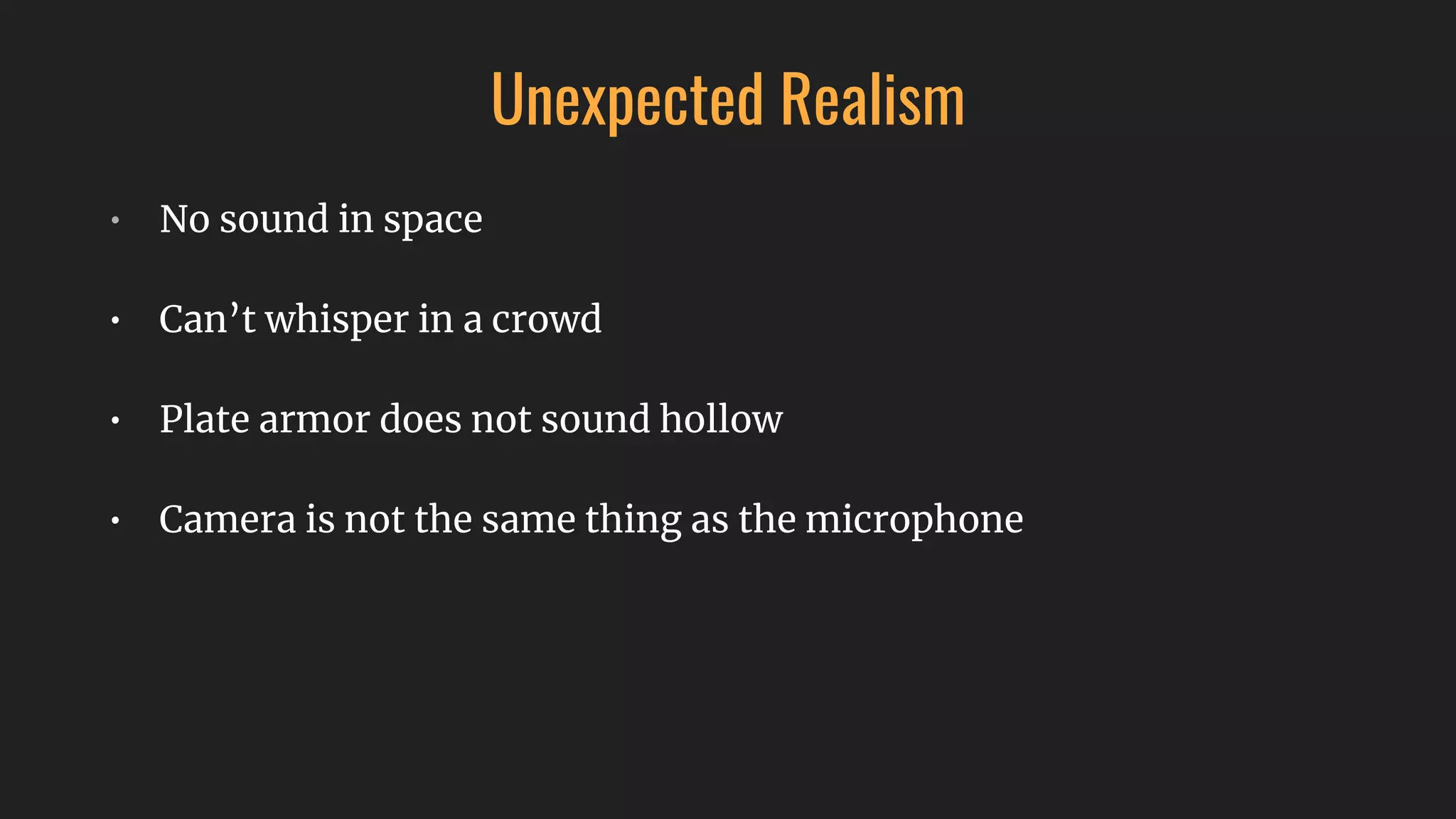

The document outlines a course on adaptive music and interactive audio at Charles University, covering sound design, generative music, and audio implementation in games and interactive media. It discusses the physics of sound, the role of sound in user interfaces, and several categories of audio effects, including diegetic and non-diegetic sounds. It also emphasizes the importance of sound in creating immersive environments, while noting the complexities of audio perception and localization.