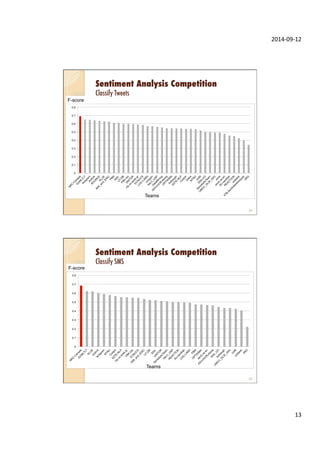

This document gives an overview of sentiment analysis and its applications. It discusses sentiment analysis tasks such as classifying the overall sentiment of a text and identifying aspect-level sentiment. It also covers social media texts and their characteristics. Several SemEval shared tasks on sentiment analysis are described, including tasks on classifying the sentiment of tweets and of aspects in reviews. The document concludes with a brief discussion of the features used in sentiment analysis.