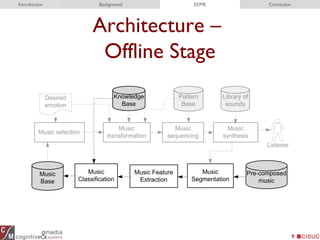

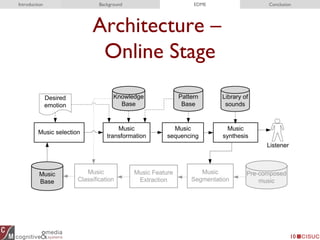

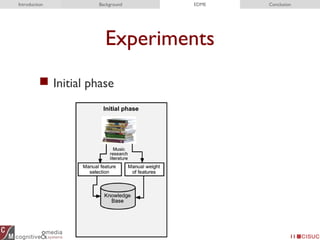

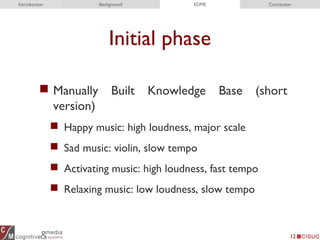

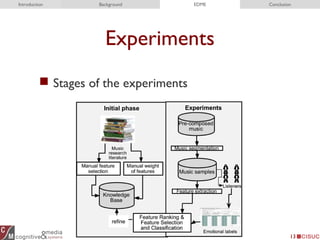

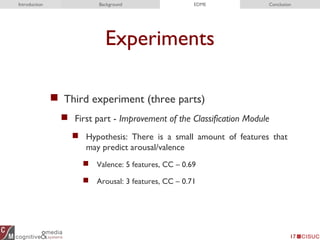

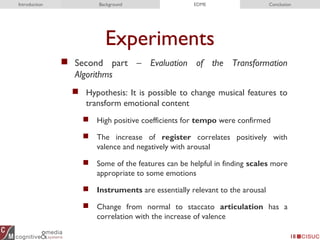

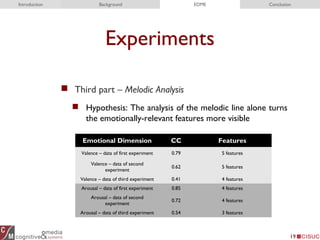

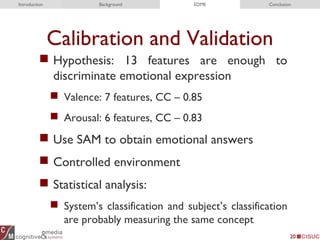

The document describes the Emotion-Driven Music Engine (EDME), a system that produces instrumental music with a specified emotional content. The system takes a hybrid approach, combining music selection/classification with music transformation. Experiments evaluated how accurately it classifies and transforms music features related to emotional dimensions such as valence and arousal. The results showed that the system could reliably discriminate between emotions and produce emotionally expressive music. Its stated contributions are flexibility, reliability achieved through experimental calibration, and the use of both discrete and dimensional emotion representations.
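
The hybrid idea of selecting a piece and then transforming it can be illustrated with a minimal sketch. This is not EDME's actual implementation: the `Piece` type, the `select_piece` and `transform_piece` functions, the [-1, 1] ranges for valence and arousal, the use of tempo as the transformed feature, and the `gain` parameter are all assumptions made for illustration only.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Piece:
    """Hypothetical record for a pre-classified piece of music."""
    name: str
    valence: float    # assumed range [-1, 1]
    arousal: float    # assumed range [-1, 1]
    tempo_bpm: float  # assumed stand-in for an arousal-related feature


def select_piece(library, target_valence, target_arousal):
    """Selection step: pick the piece nearest the target in valence/arousal space."""
    return min(
        library,
        key=lambda p: math.hypot(p.valence - target_valence,
                                 p.arousal - target_arousal),
    )


def transform_piece(piece, target_arousal, gain=0.25):
    """Transformation step (illustrative): scale tempo toward the target arousal.

    The linear tempo rule and the gain value are assumptions, not calibrated
    weights from the described experiments.
    """
    factor = 1.0 + gain * (target_arousal - piece.arousal)
    return Piece(piece.name, piece.valence, target_arousal,
                 piece.tempo_bpm * factor)


def generate(library, target_valence, target_arousal):
    """Hybrid pipeline: select the closest piece, then transform it."""
    chosen = select_piece(library, target_valence, target_arousal)
    return transform_piece(chosen, target_arousal)


# Made-up example library; the annotations are not real data.
LIBRARY = [
    Piece("calm", 0.5, -0.5, 80.0),
    Piece("lively", 0.6, 0.6, 120.0),
    Piece("somber", -0.6, -0.4, 70.0),
]
```

Calling `generate(LIBRARY, 0.7, 0.8)` picks the "lively" piece, since it lies closest to the target, and raises its tempo slightly because the requested arousal is higher than the piece's annotated arousal. In a real system the transformation would likely adjust several features, with weights obtained through calibration experiments of the kind the summary mentions.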