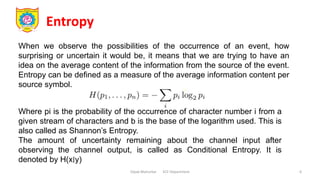

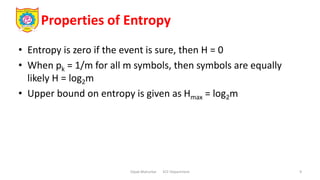

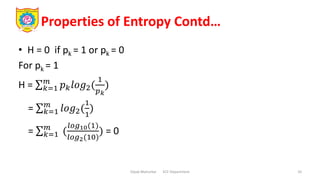

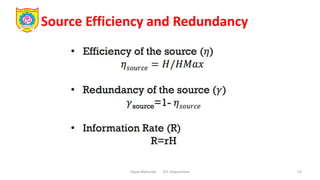

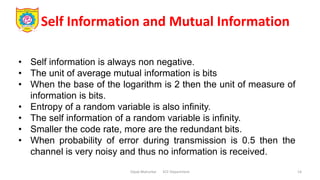

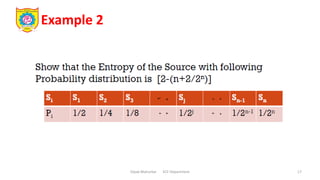

This document covers information sources and entropy, from a lecture on discrete mathematics and information theory. It defines information and shows how entropy quantifies the uncertainty of a data source as the average information per symbol. Several examples calculate the entropy of sources with different symbol probabilities. It also relates entropy to source efficiency and redundancy.

![Entropy Contd…

Dipak Mahurkar ECE Department 8

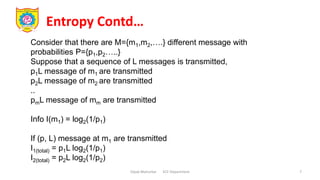

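If $p_1 L$ messages of $m_1$ are transmitted (out of $L$ messages in total), each carrying $\log_2(1/p_1)$ bits of information, then

$$I_1(\text{total}) = p_1 L \log_2(1/p_1)$$

$$I_2(\text{total}) = p_2 L \log_2(1/p_2)$$

$$\vdots$$

$$I_m(\text{total}) = p_m L \log_2(1/p_m)$$

$$I(\text{total}) = p_1 L \log_2(1/p_1) + p_2 L \log_2(1/p_2) + \cdots + p_m L \log_2(1/p_m)$$

Average Info = Total Info / No. of messages

$$= \frac{I(\text{total})}{L}$$

$$= \frac{p_1 L \log_2(1/p_1) + p_2 L \log_2(1/p_2) + \cdots + p_m L \log_2(1/p_m)}{L}$$

$$= p_1 \log_2(1/p_1) + p_2 \log_2(1/p_2) + \cdots + p_m \log_2(1/p_m)$$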

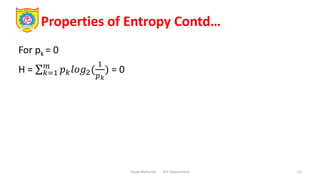

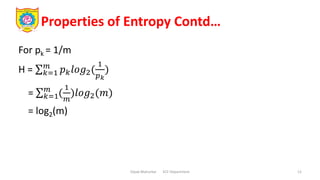

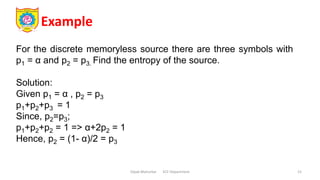

Hence, we can write,

$$\text{Entropy} = \sum_{k=1}^{m} p_k \log_2\left(\frac{1}{p_k}\right)$$](https://image.slidesharecdn.com/6-231214015148-3d7044ee/85/6-1-Information-Sources-and-Entropy-pptx-8-320.jpg)
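
The entropy formula above translates directly into a few lines of code. What follows is a minimal sketch, not part of the original slides: the function name `entropy` and the four-symbol probability list are hypothetical, chosen only to illustrate the sum of `p_k * log2(1/p_k)`.

```python
import math


def entropy(probs):
    """Return the entropy in bits per symbol: the sum of p_k * log2(1/p_k)."""
    if not math.isclose(sum(probs), 1.0, abs_tol=1e-9):
        raise ValueError("probabilities must sum to 1")
    # Symbols with p_k = 0 are skipped: p * log2(1/p) tends to 0 as p tends to 0.
    return sum(p * math.log2(1.0 / p) for p in probs if p > 0)


# Hypothetical four-symbol source, used only for illustration.
example_probs = [0.5, 0.25, 0.125, 0.125]
print(entropy(example_probs))  # 0.5*1 + 0.25*2 + 0.125*3 + 0.125*3 = 1.75
```

For comparison, four equally likely symbols give `entropy([0.25] * 4) == 2.0`, the largest value a four-symbol source can reach.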

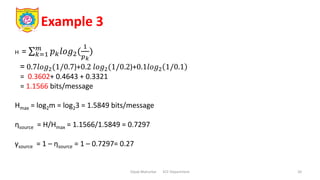

![Example 3

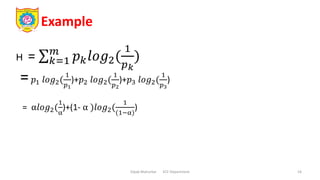

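$$H = \sum_{k=1}^{m} p_k \log_2\left(\frac{1}{p_k}\right)$$

$$= \frac{1}{2}\log_2(2) + \frac{1}{4}\log_2(4) + \frac{1}{8}\log_2(8) + \cdots + \frac{1}{2^n}\log_2(2^n)$$

$$= \frac{1}{2} + \frac{2}{4} + \frac{3}{8} + \cdots + \frac{n}{2^n}$$

$$= 2 - \frac{n+2}{2^n}$$](https://image.slidesharecdn.com/6-231214015148-3d7044ee/85/6-1-Information-Sources-and-Entropy-pptx-18-320.jpg)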
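
The last step of Example 3 replaces the series 1/2 + 2/4 + 3/8 + ... + n/2^n with the closed form 2 - (n+2)/2^n. As a quick sanity check, the sketch below compares the two for a few values of n using exact rational arithmetic. The function names `series_sum` and `closed_form` are illustrative and do not come from the slides.

```python
from fractions import Fraction


def series_sum(n):
    """Sum k / 2**k for k = 1..n, using exact rational arithmetic."""
    return sum(Fraction(k, 2**k) for k in range(1, n + 1))


def closed_form(n):
    """Closed form from Example 3: 2 - (n + 2) / 2**n."""
    return 2 - Fraction(n + 2, 2**n)


# Compare the term-by-term sum with the closed form for a few values of n.
for n in (1, 2, 3, 10):
    assert series_sum(n) == closed_form(n)
    print(n, series_sum(n))
```

As n grows, (n+2)/2^n shrinks toward zero, so the sum approaches 2 bits per symbol.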