Firefly Optimization Algorithm (FOA)

Introduction

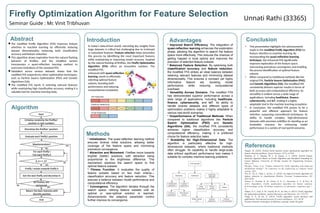

The Firefly Optimization Algorithm (FOA), more commonly known as the Firefly Algorithm (FA), is a nature-inspired metaheuristic based on the flashing behavior of fireflies. Developed by Xin-She Yang in 2008, it is used to solve optimization problems in domains such as engineering, machine learning, and data science.

Inspiration from Nature

Fireflies use bioluminescent signals to communicate and attract mates. In FOA, these flashes are modeled as an attractiveness function, where fireflies move towards brighter (better) solutions. The intensity of a firefly's glow is linked to the quality (fitness value) of the solution it represents.

Algorithmic Process

Initialize Population

Generate a population of fireflies (candidate solutions) at random positions in the search space.

Define an objective function that evaluates each firefly's brightness (fitness).
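The initialization step can be sketched in Python as below. This is a minimal illustration, not a reference implementation: the box bounds, the `sphere` test objective, and the names `init_population` and `brightness` are hypothetical, and brightness is taken as the negated objective value because the example minimizes.

```python
import random

def sphere(x):
    """Hypothetical test objective: sum of squares, minimized at the origin."""
    return sum(v * v for v in x)

def init_population(n, dim, lower, upper, seed=None):
    """Place n fireflies uniformly at random inside the box [lower, upper]^dim."""
    rng = random.Random(seed)
    return [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(n)]

def brightness(x, objective):
    """For minimization, a lower objective value means a brighter firefly."""
    return -objective(x)

population = init_population(n=5, dim=2, lower=-5.0, upper=5.0, seed=42)
scores = [brightness(x, sphere) for x in population]
brightest = population[scores.index(max(scores))]  # best candidate so far
print(len(population), len(population[0]))  # prints: 5 2
```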

Intensity Calculation

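A firefly's brightness is determined by its fitness: for maximization it can be taken as proportional to the objective value, and for minimization a lower objective value means a brighter firefly.

The light one firefly perceives from another, and hence its attractiveness, decreases with the distance r between them; attractiveness is commonly modeled as $\beta e^{-\gamma r^2}$.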
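A small sketch of how attractiveness fades with distance, assuming Euclidean distance and the decay $\beta_0 e^{-\gamma r^2}$; the function names and the defaults `beta0 = 1.0` and `gamma = 1.0` are illustrative assumptions.

```python
import math

def distance(xi, xj):
    """Euclidean distance r between two fireflies."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(xi, xj)))

def attractiveness(r, beta0=1.0, gamma=1.0):
    """beta0 * exp(-gamma * r^2): full strength at r = 0, fading faster
    for larger gamma (stronger light absorption)."""
    return beta0 * math.exp(-gamma * r * r)

for r in (0.0, 0.5, 1.0, 2.0):
    print(f"r = {r}: beta = {attractiveness(r):.4f}")
```

With these defaults the printed attractiveness falls from 1.0000 at r = 0 to 0.3679 at r = 1 and 0.0183 at r = 2, so distant fireflies exert almost no pull; a smaller γ widens that range.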

Movement Rule

Each firefly moves toward every firefly that is brighter (better) than itself.

The size of each move depends on the distance between the two fireflies, their attractiveness, and a random perturbation.

Equation:

$$x_i \leftarrow x_i + \beta e^{-\gamma r^2} (x_j - x_i) + \alpha \epsilon$$

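where:

$x_i$, $x_j$ = positions of fireflies i and j (firefly j is the brighter one)

$\beta$ = attractiveness coefficient (the attractiveness at r = 0, often written $\beta_0$)

$\gamma$ = light absorption coefficient

$r$ = distance between fireflies i and j, usually the Euclidean distance $\lVert x_i - x_j \rVert$

$\alpha$ = randomization factor (step size of the random move)

$\epsilon$ = random number (or vector), e.g. drawn uniformly from [−0.5, 0.5] or from a Gaussian distribution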
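The movement equation translates almost term by term into code. The sketch below assumes ϵ = rand − 0.5 with rand uniform on [0, 1), one common choice; the function name `move_toward` and the default parameter values are hypothetical.

```python
import math
import random

def move_toward(xi, xj, beta0=1.0, gamma=1.0, alpha=0.2, rng=random):
    """Return firefly i's new position after one move toward a brighter firefly j:
    x_i + beta0 * exp(-gamma * r^2) * (x_j - x_i) + alpha * (rand - 0.5)."""
    r_sq = sum((a - b) ** 2 for a, b in zip(xi, xj))  # squared Euclidean distance r^2
    beta = beta0 * math.exp(-gamma * r_sq)             # attractiveness at distance r
    # Attraction pulls each coordinate toward x_j; the random term is a fresh
    # uniform draw in [-alpha/2, alpha/2) per coordinate (assumed form of epsilon).
    return [a + beta * (b - a) + alpha * (rng.random() - 0.5) for a, b in zip(xi, xj)]

new_pos = move_toward([1.0, 1.0], [0.0, 0.0], alpha=0.0)
print([round(v, 4) for v in new_pos])  # [0.8647, 0.8647]
```

With α = 0 the update is deterministic: moving [1, 1] toward [0, 0] with β = γ = 1 gives r² = 2 and attractiveness e⁻² ≈ 0.1353, so each coordinate moves about 13.5% of the way toward firefly j.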

Update & Repeat

Recalculate brightness after movement.

Repeat the movement and brightness updates until a termination criterion (a maximum number of iterations or convergence) is met.
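Putting the steps together, a minimal Python sketch of the full loop for minimization could look like this. Assumptions: a fixed iteration budget as the only stopping rule, positions clipped to the box [lower, upper], a fixed α with ϵ = rand − 0.5, and the brightest firefly left in place (many implementations instead let it take a random step and gradually reduce α). The function name `firefly_minimize` and its defaults are hypothetical.

```python
import math
import random

def firefly_minimize(objective, dim, lower, upper, n=20, max_iter=100,
                     beta0=1.0, gamma=1.0, alpha=0.2, seed=None):
    """Minimal firefly algorithm sketch: minimize objective over [lower, upper]^dim."""
    rng = random.Random(seed)
    # Initialize population at random positions and evaluate brightness.
    pop = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(n)]
    cost = [objective(x) for x in pop]  # lower cost = brighter firefly

    for _ in range(max_iter):  # termination: fixed iteration budget
        for i in range(n):
            for j in range(n):
                if cost[j] < cost[i]:  # j is brighter, so i moves toward j
                    r_sq = sum((a - b) ** 2 for a, b in zip(pop[i], pop[j]))
                    beta = beta0 * math.exp(-gamma * r_sq)
                    pop[i] = [
                        # movement equation, clipped to stay inside the box
                        min(upper, max(lower, a + beta * (b - a) + alpha * (rng.random() - 0.5)))
                        for a, b in zip(pop[i], pop[j])
                    ]
                    cost[i] = objective(pop[i])  # recalculate brightness after the move

    best = min(range(n), key=lambda k: cost[k])
    return pop[best], cost[best]
```

For example, `firefly_minimize(lambda x: sum(v * v for v in x), dim=2, lower=-5.0, upper=5.0, seed=0)` returns the best position found and its cost. Because a firefly only moves when a strictly brighter one exists, the current best firefly is never displaced, so the returned cost is never worse than the best initial one.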

Advantages of FOA

✔ Simple & Easy to Implement

✔ Global Optimization Capability

✔ Handles Nonlinear Problems Well

✔ Designed for Continuous Problems, with Variants for Discrete Ones

Applications of FOA

🔹 Engineering Design Optimization

🔹 Neural Network Training

🔹 Image Processing & Feature Selection

🔹 Scheduling & Resource Allocation

🔹 Path Planning in Robotics

Comparison with Other Algorithms

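| Feature | Firefly Algorithm | Genetic Algorithm | Particle Swarm Optimization |
|---|---|---|---|
| Nature | Swarm Intelligence | Evolutionary | Swarm Intelligence |
| Convergence Speed | Fast | Moderate | Fast |
| Exploration Ability | High | High | Moderate |
| Exploitation Ability | High | Moderate | High |

These ratings are qualitative and problem-dependent; actual behavior varies with parameter settings and the objective function.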

Conclusion

The Firefly Algorithm is a population-based metaheuristic that mimics the flashing behavior of fireflies for global optimization. Its simple update rule, small number of parameters (β, γ, α), and adaptability make it a useful tool in many scientific and engineering fields.

🔹 References: Xin-She Yang, “Nature-Inspired Metaheuristic Algorithms” (2008)