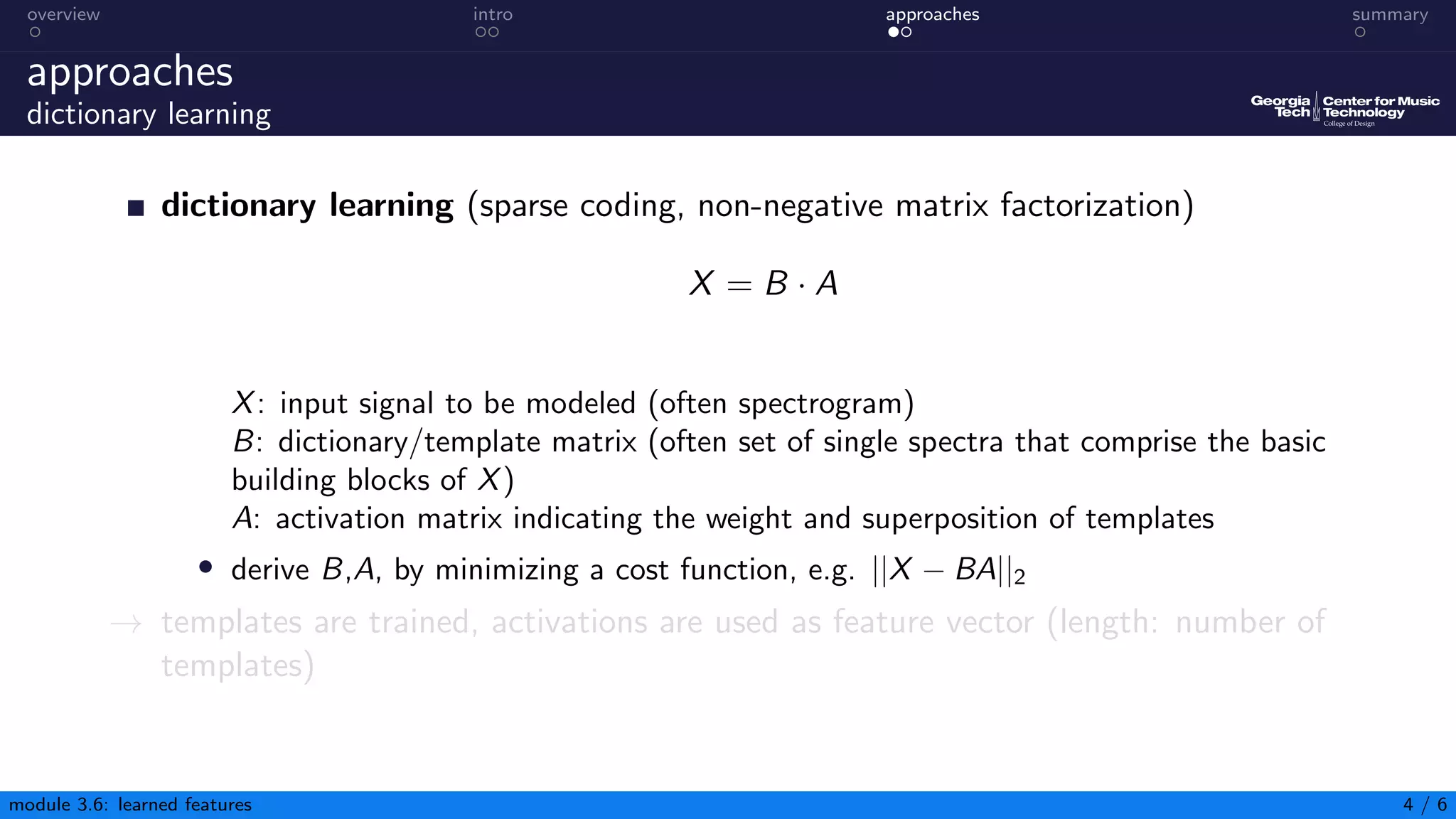

This document discusses feature learning techniques for audio content analysis. It introduces feature learning as an alternative to traditional hand-crafted features: instead of being designed by hand, the features are learned automatically from data. The two main approaches are dictionary learning, which learns a dictionary of templates to represent audio signals, and deep learning, which uses neural networks to learn high-level representations through multiple layers. Learned features may contain more useful information than hand-crafted ones, but learning them requires large amounts of data and computation.
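To make the dictionary learning idea concrete, here is a minimal sketch that assumes non-negative matrix factorization (NMF) with multiplicative updates as the learning method. The document does not say which algorithm it uses, so NMF is only one possible choice. The function name `learn_dictionary`, its parameters, and the synthetic stand-in for a magnitude spectrogram are all hypothetical and for illustration only.

```python
import numpy as np

def learn_dictionary(V, n_templates, n_iter=200, eps=1e-9, seed=0):
    """Approximate a non-negative matrix V (frequency x time) as W @ H.

    W holds the learned templates (one per column) and H holds their
    activations over time. Uses multiplicative updates for the
    squared Euclidean cost; this is an assumed method, not
    necessarily the one the document has in mind.
    """
    rng = np.random.default_rng(seed)
    n_freq, n_frames = V.shape
    W = rng.random((n_freq, n_templates)) + eps
    H = rng.random((n_templates, n_frames)) + eps
    for _ in range(n_iter):
        # Update activations, then templates; eps avoids division by zero.
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H

def relative_error(V, W, H):
    """Relative Frobenius reconstruction error of W @ H against V."""
    return np.linalg.norm(V - W @ H) / np.linalg.norm(V)

# Synthetic stand-in for a magnitude spectrogram: 64 frequency bins,
# 100 frames, built from 3 hidden templates (made-up data).
data_rng = np.random.default_rng(1)
V = data_rng.random((64, 3)) @ data_rng.random((3, 100))

W_start, H_start = learn_dictionary(V, n_templates=3, n_iter=1)
W, H = learn_dictionary(V, n_templates=3, n_iter=200)

err_start = relative_error(V, W_start, H_start)
err_final = relative_error(V, W, H)
print(f"relative error after 1 iteration:    {err_start:.4f}")
print(f"relative error after 200 iterations: {err_final:.4f}")
```

In this sketch the columns of `W` play the role of the learned templates, and each column of `H` is the feature vector for one frame. With real audio, `V` would be a magnitude spectrogram computed from the signal rather than synthetic data.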