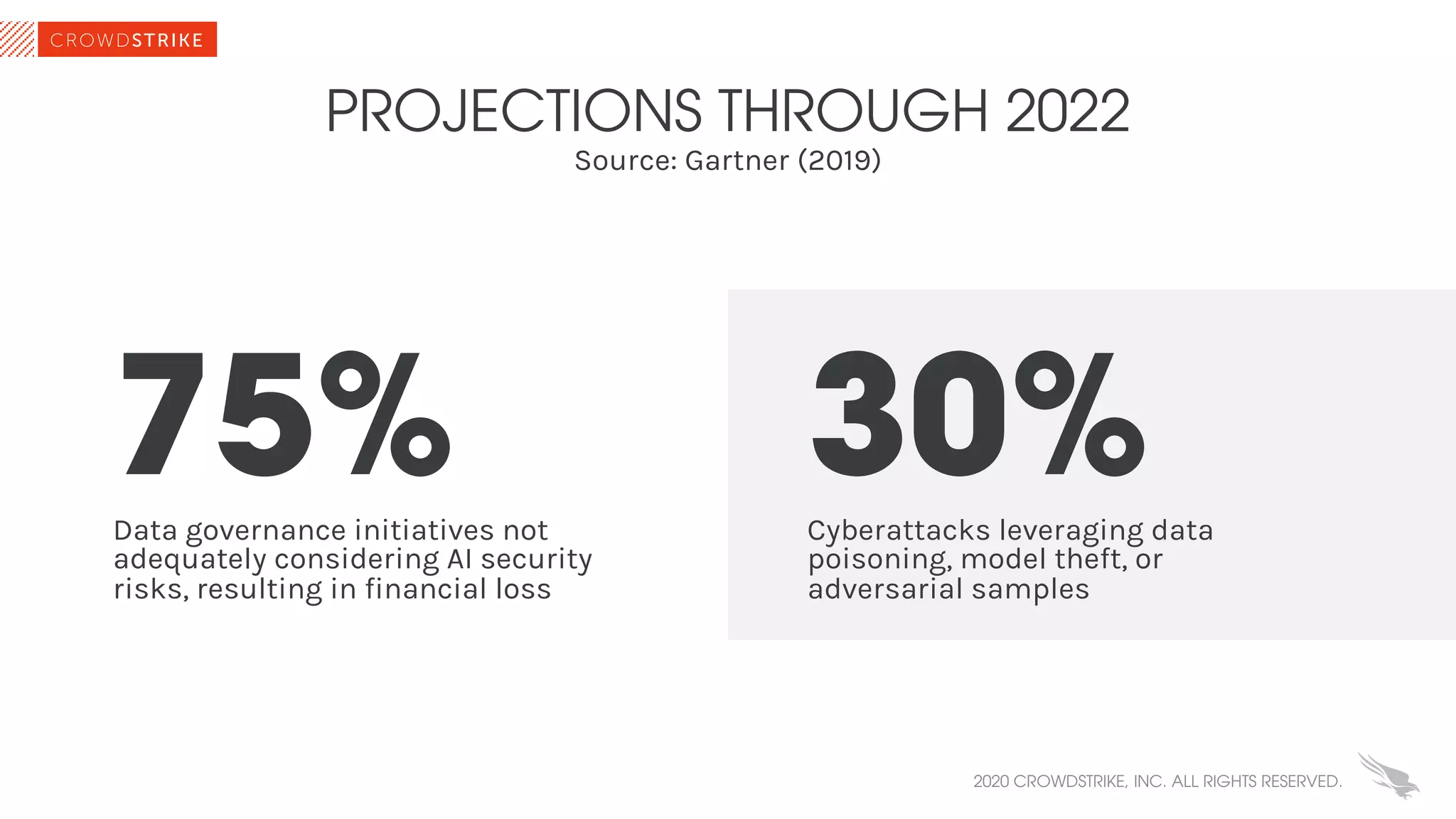

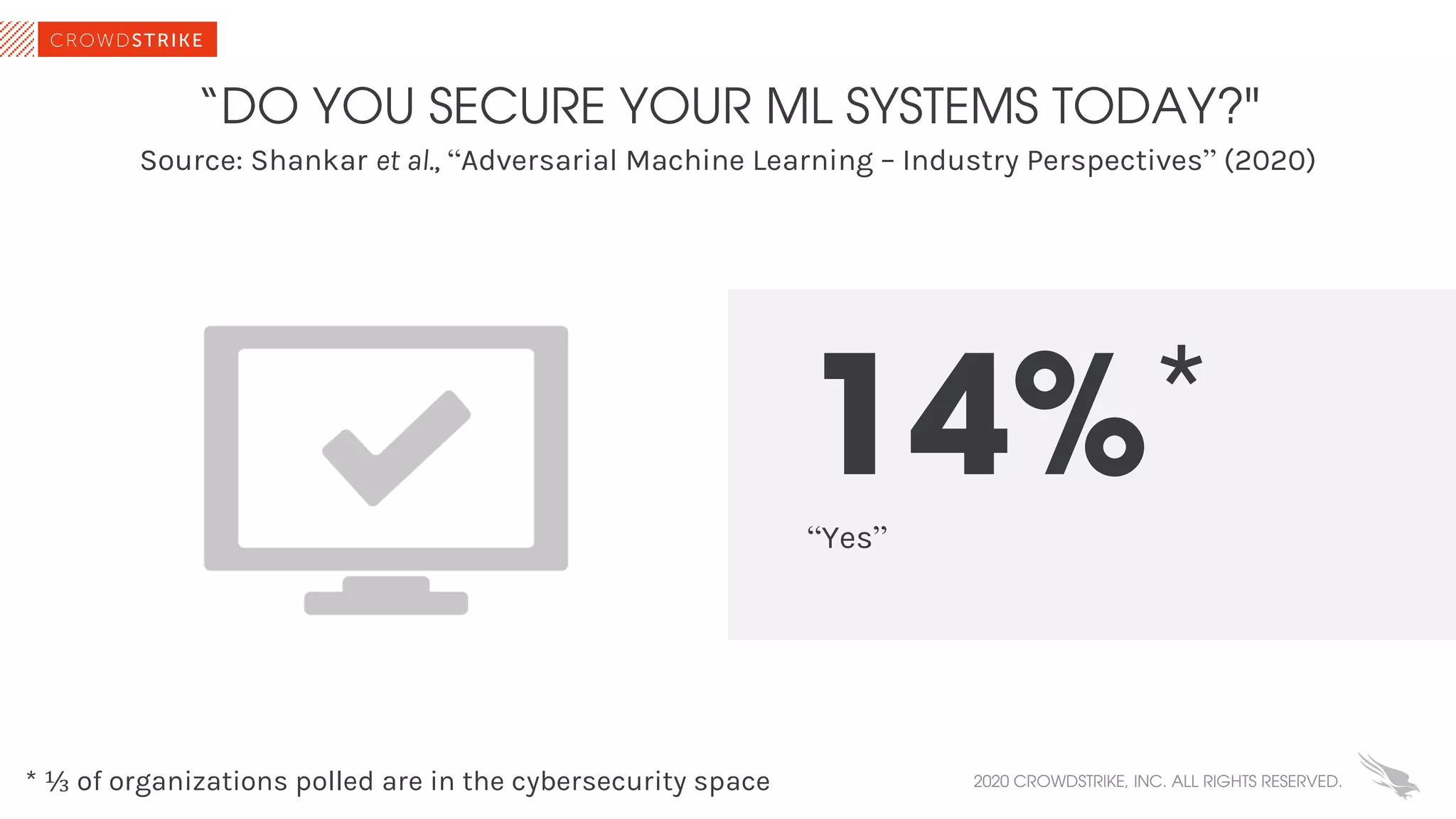

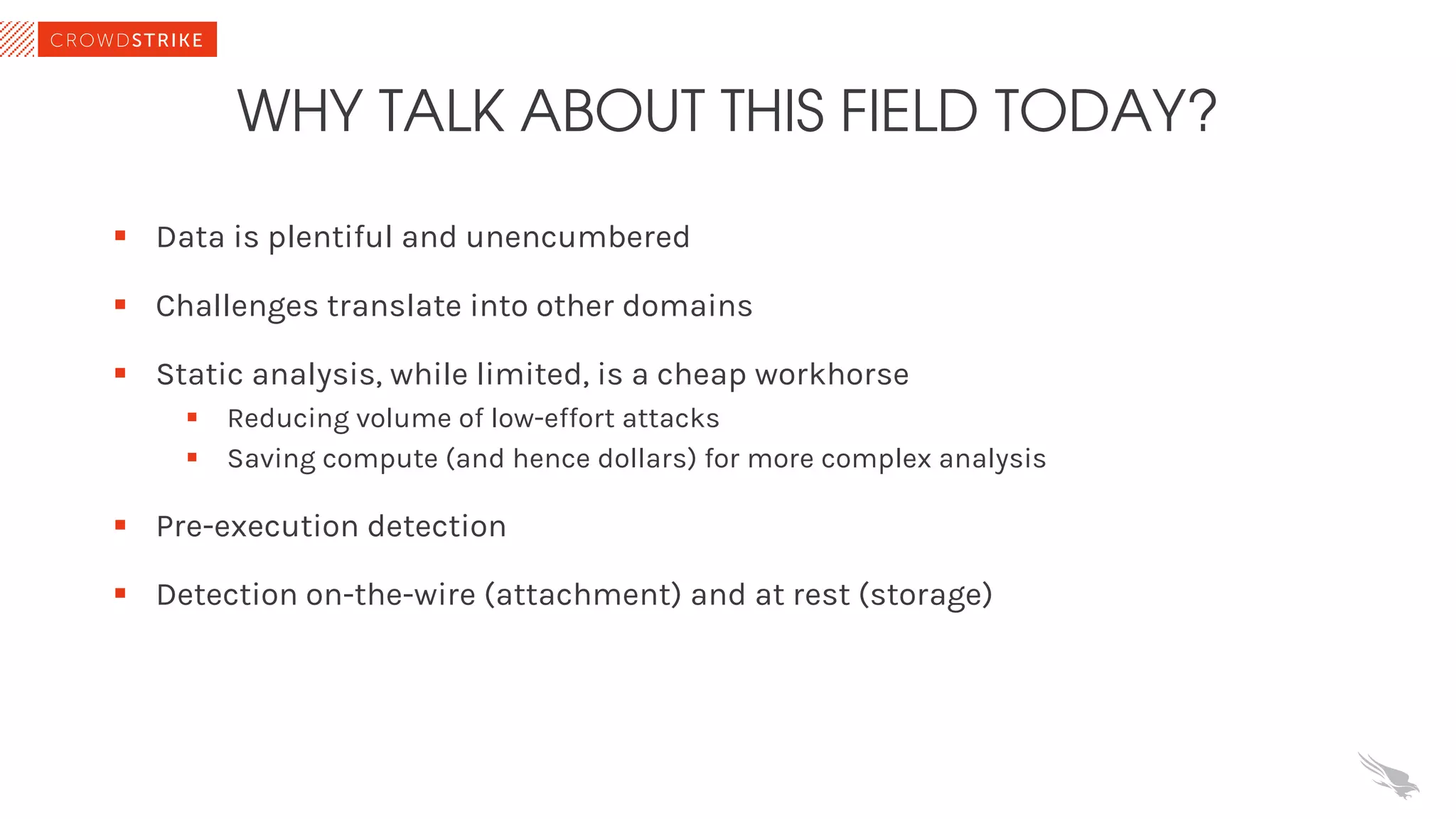

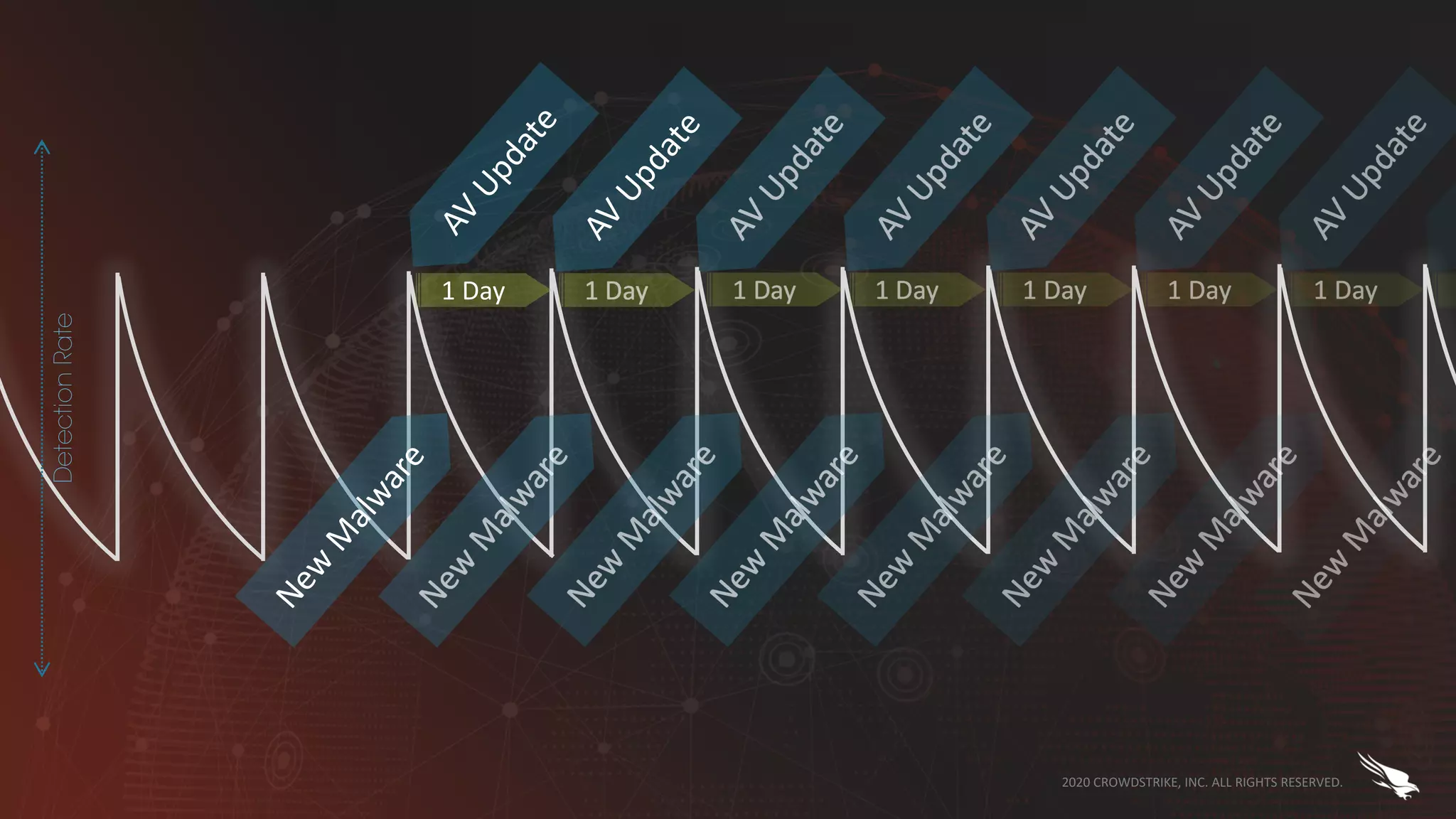

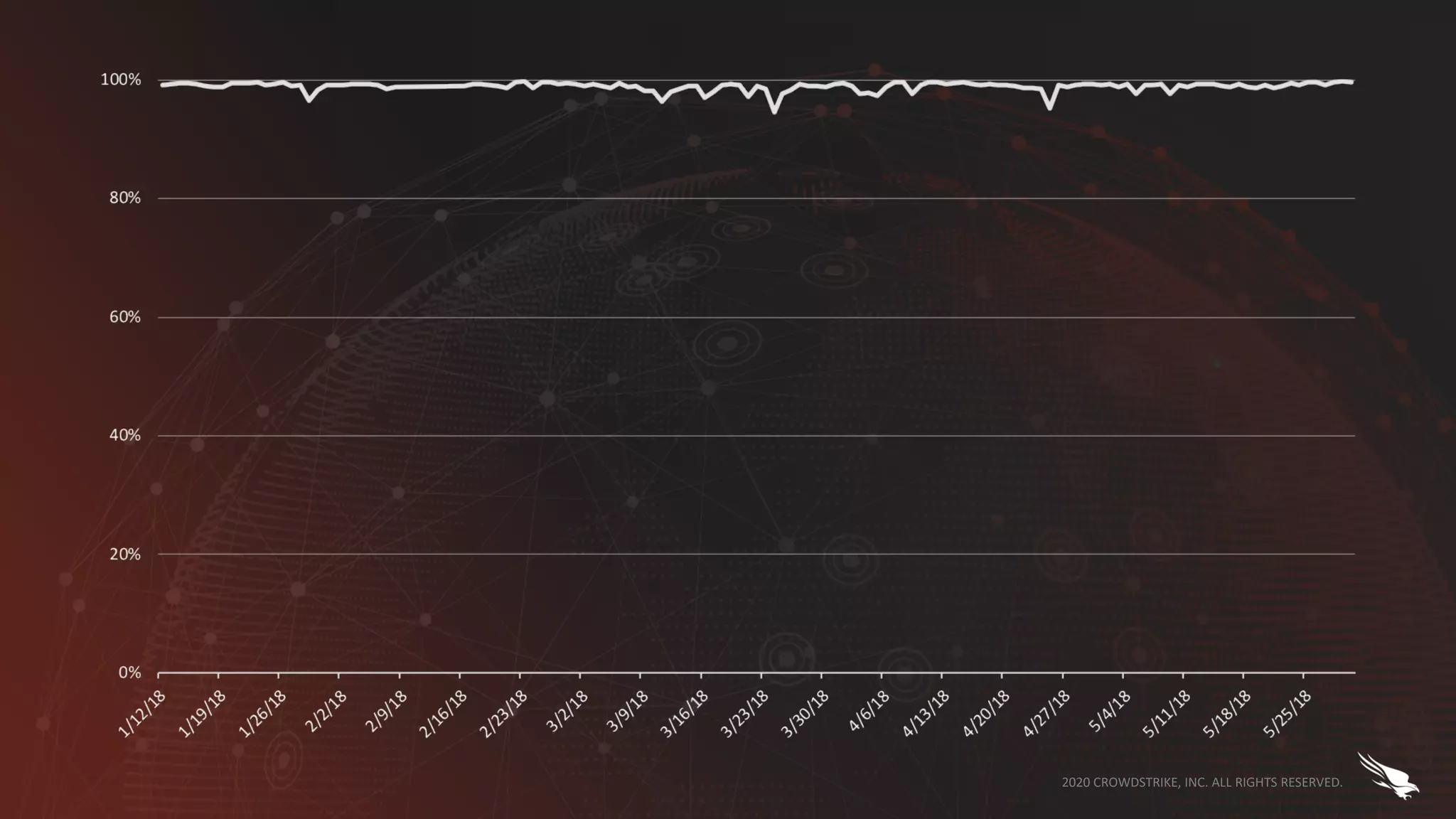

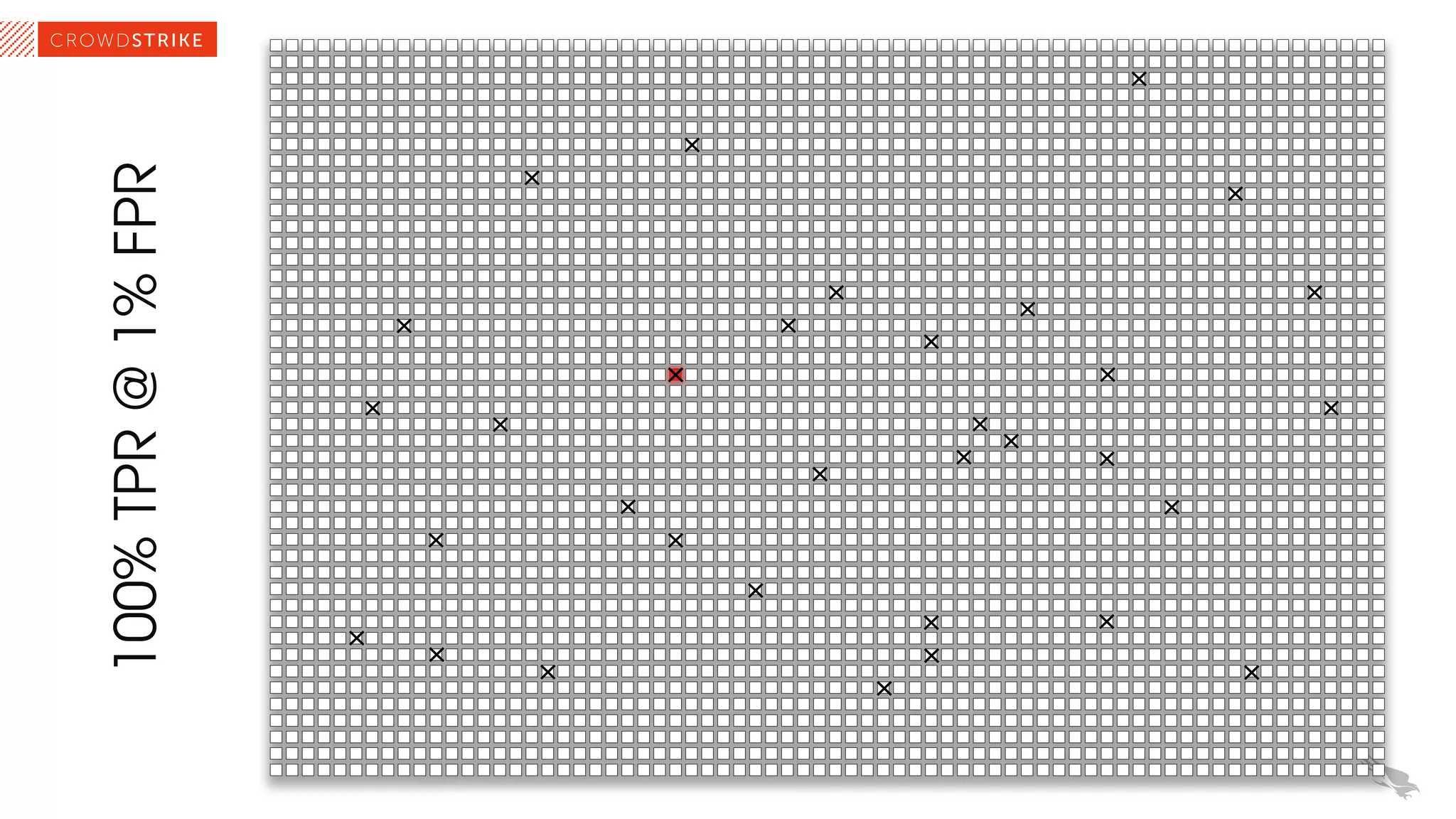

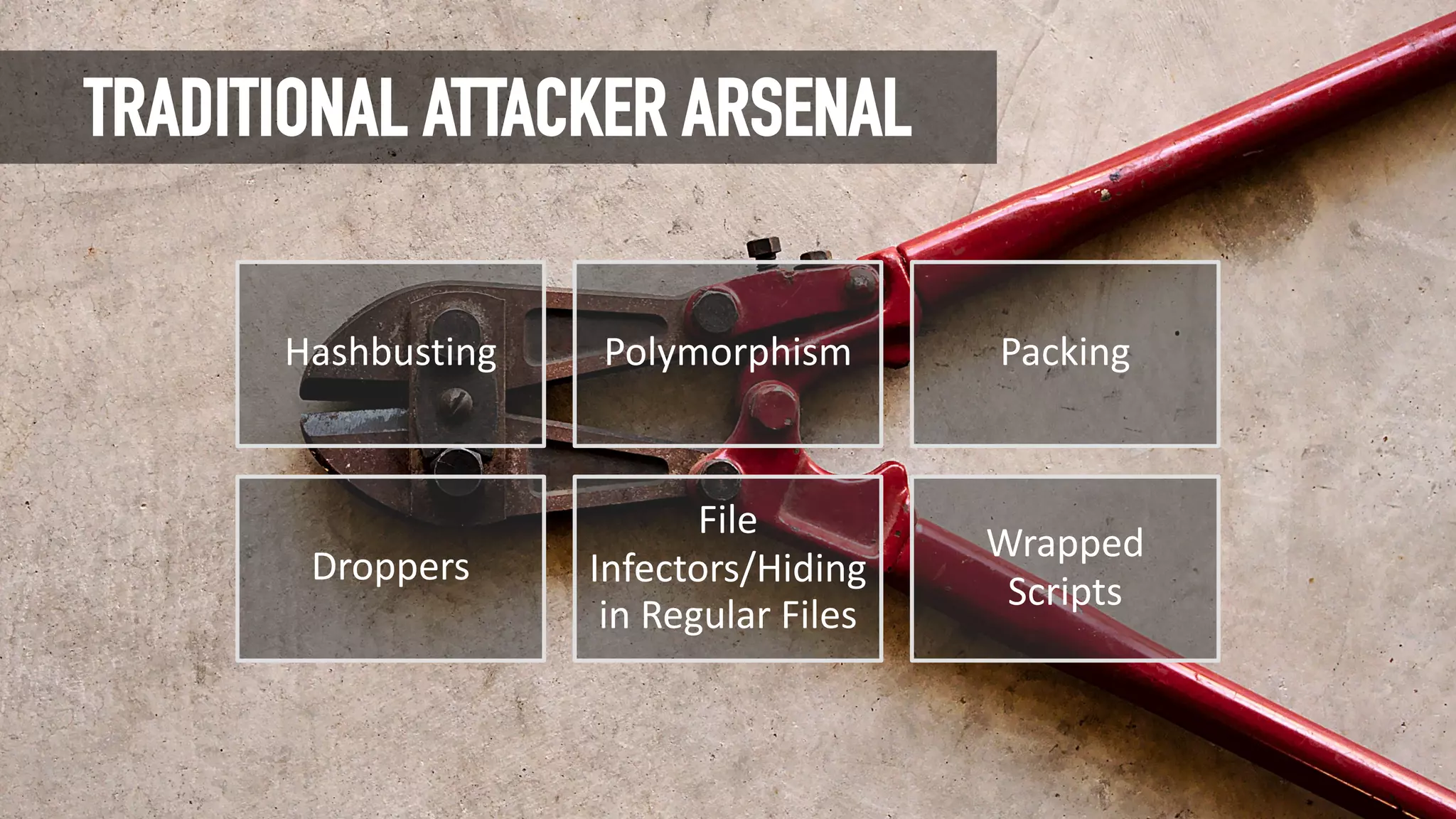

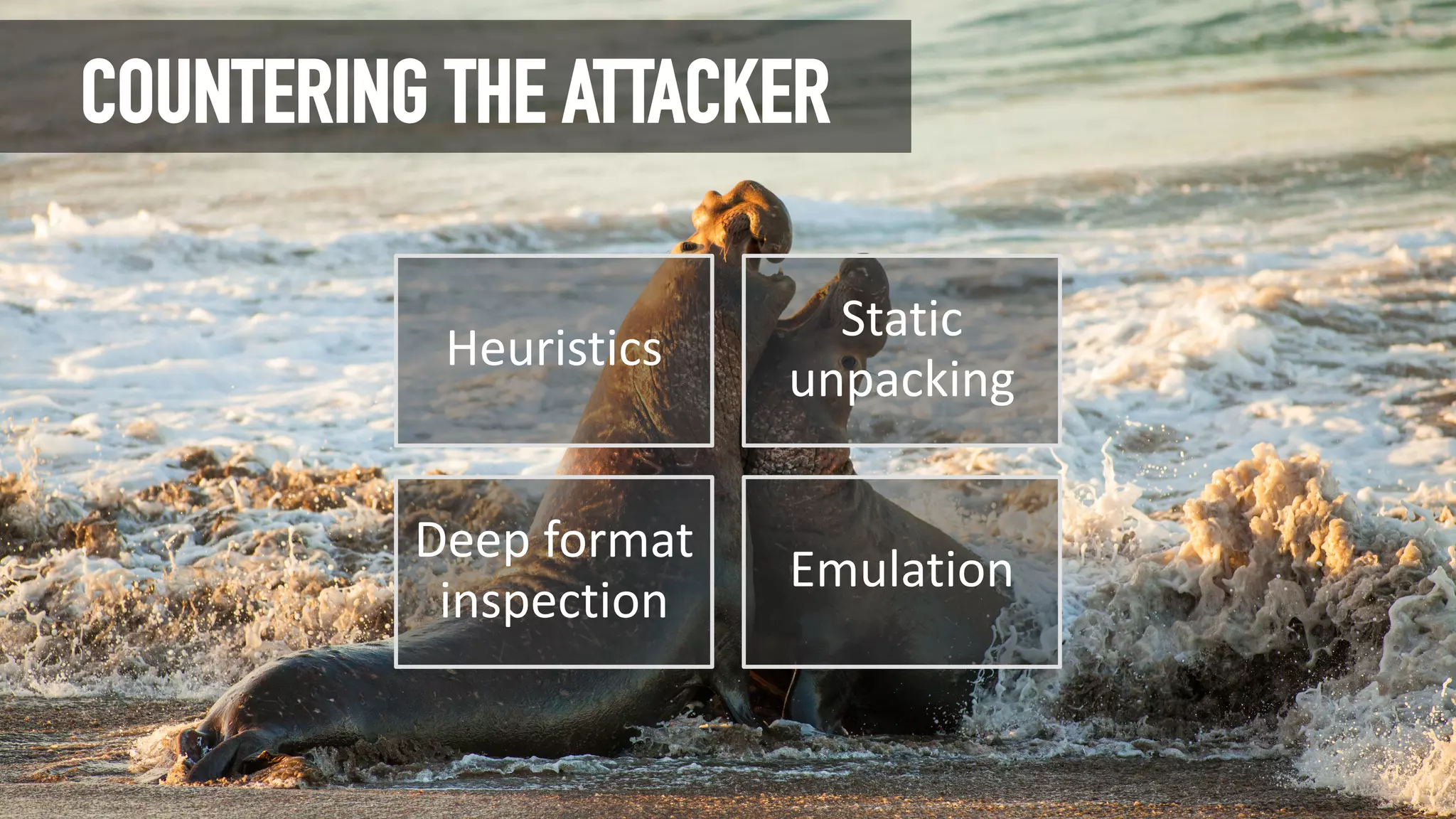

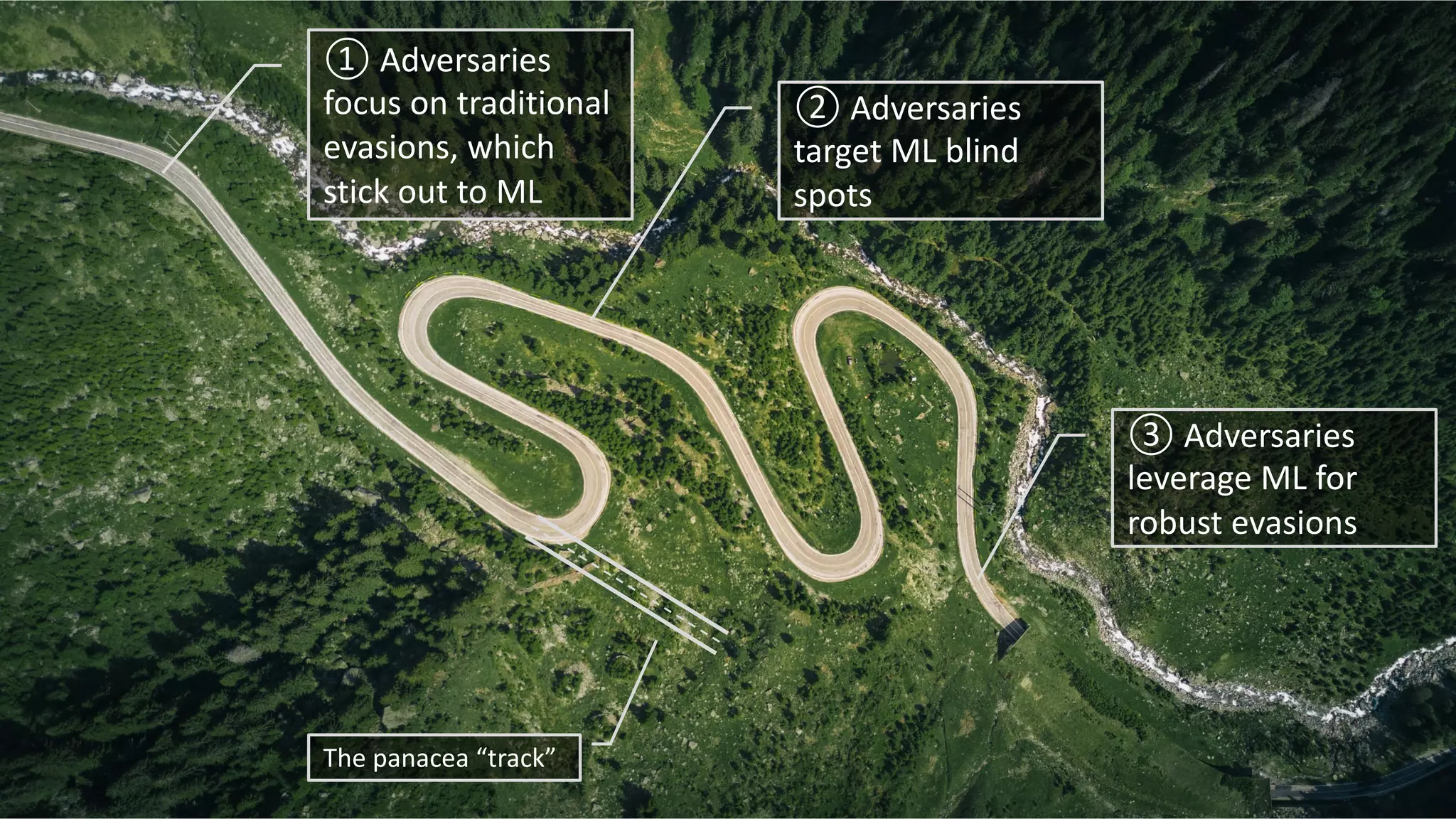

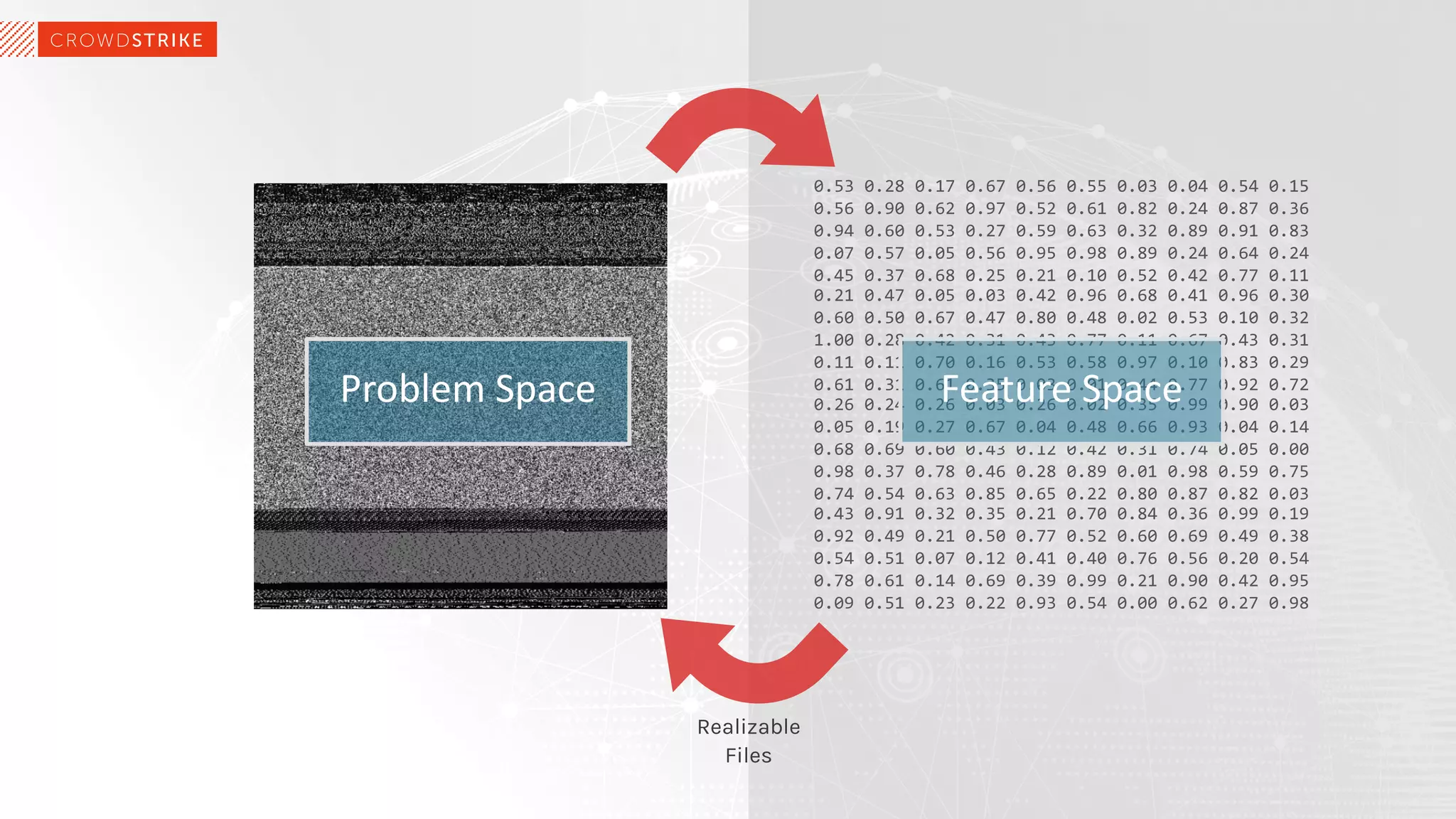

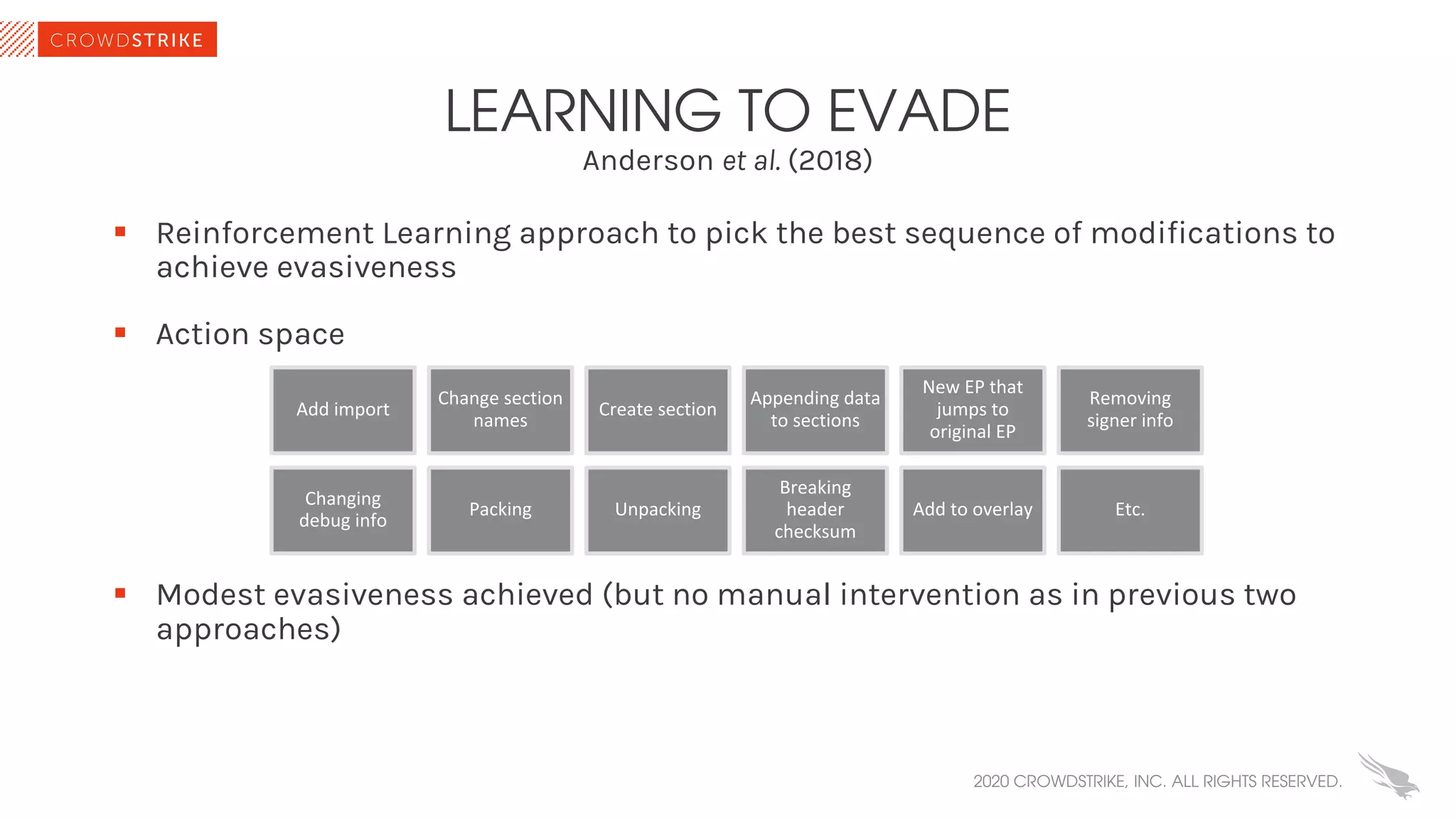

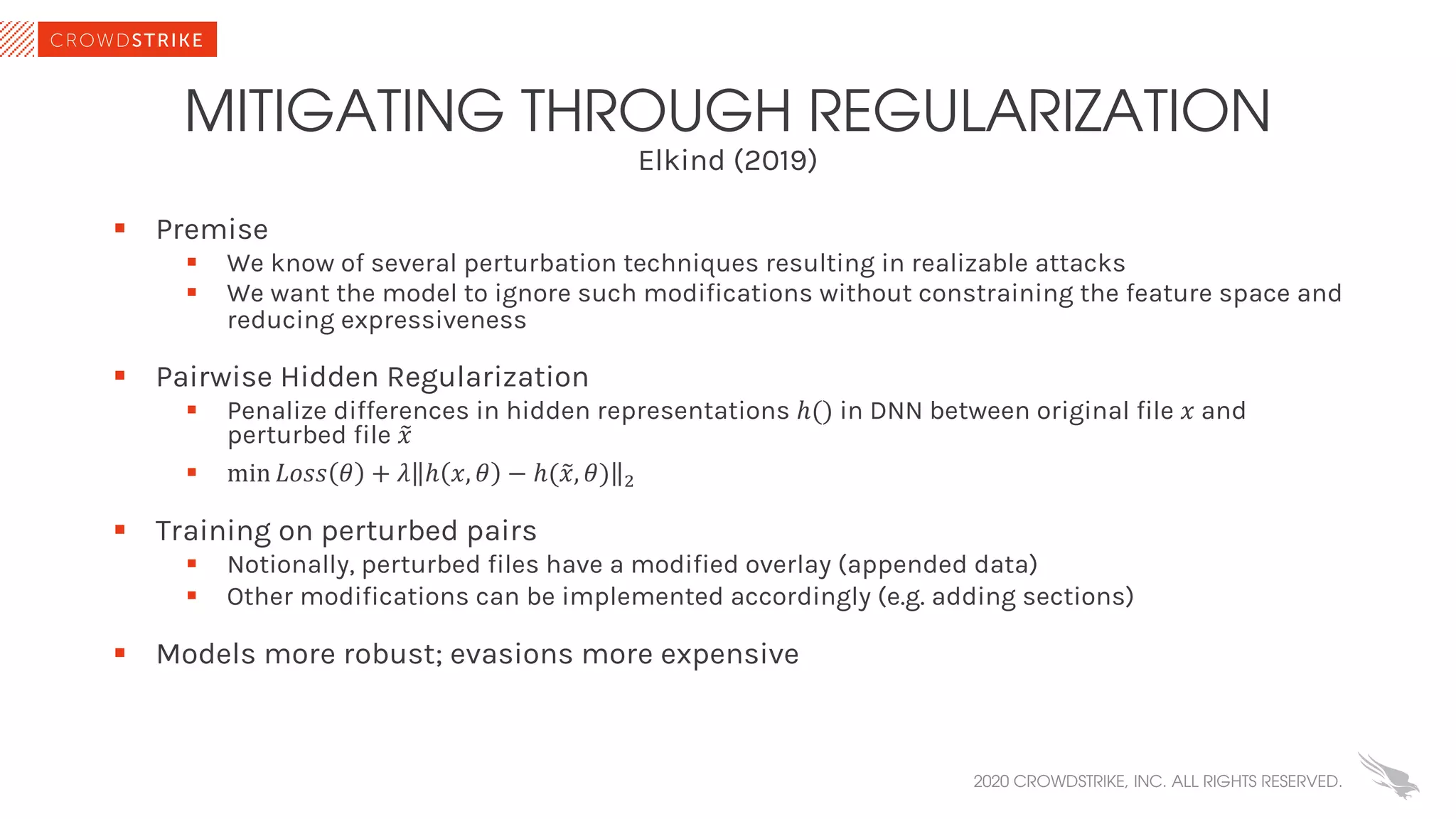

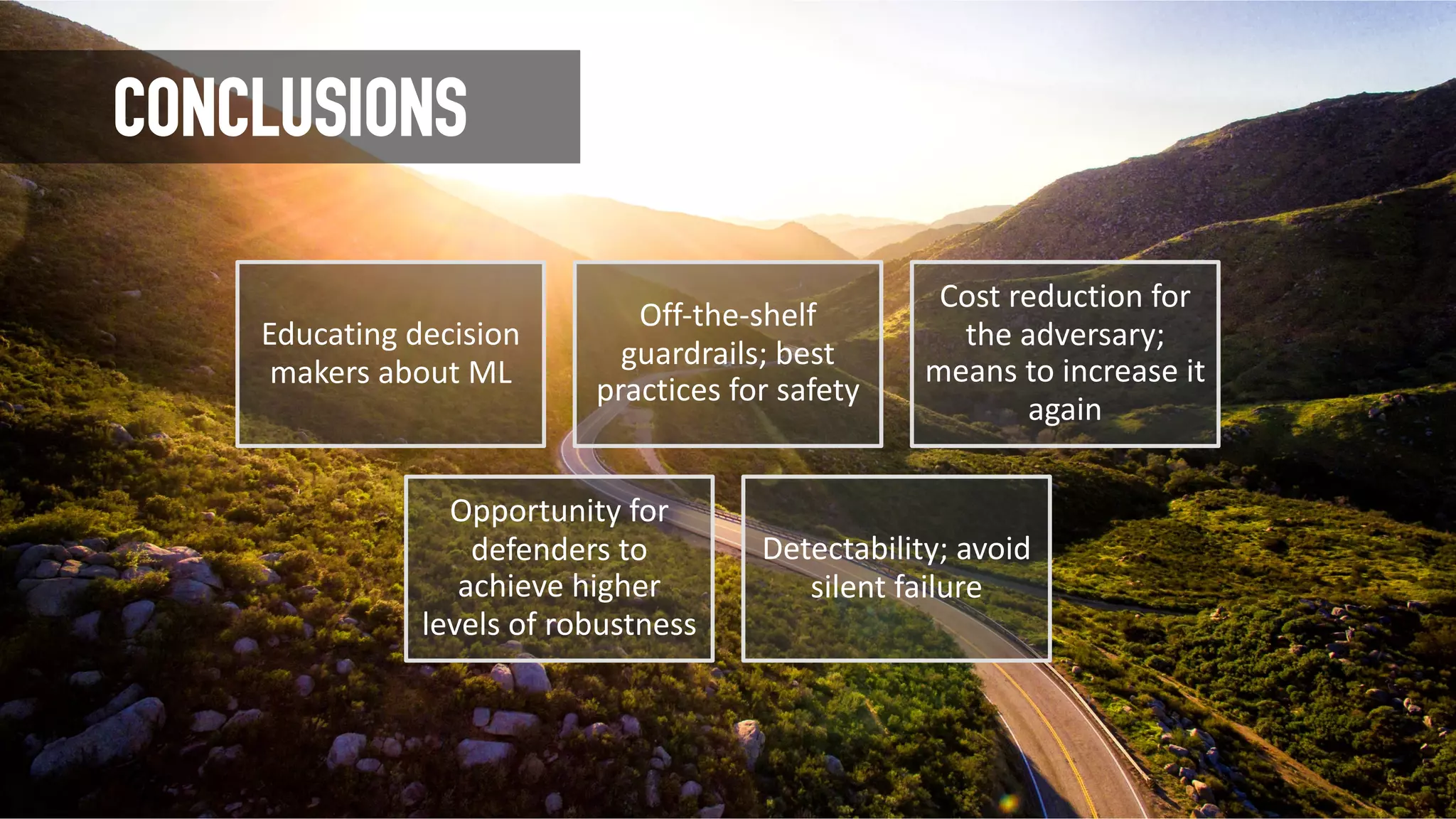

The document discusses the role of machine learning (ML) in cybersecurity, tracing its evolution since 2013 and the challenges that accompany it, such as data governance and adversarial attacks. It emphasizes the need for robust ML systems in security, noting that only 14% of organizations currently secure these systems, and details the methods adversaries use to evade detection. It concludes that educating decision-makers about ML safety practices is important for strengthening defenses against evolving cybersecurity threats.