Talk given by Ben Busjaeger, Software Engineering Architect at Salesforce, at FSE 2016 (ACM SIGSOFT) in November 2016

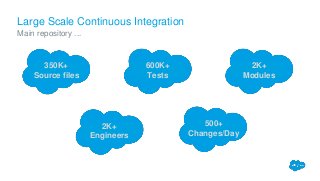

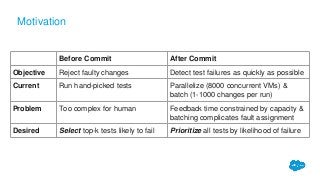

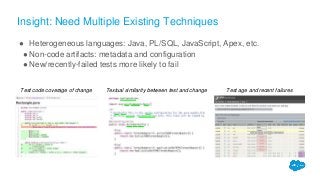

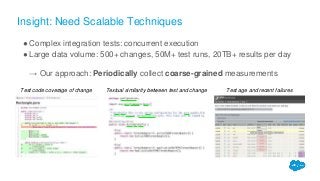

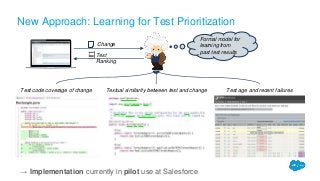

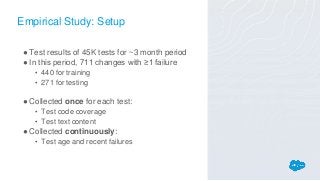

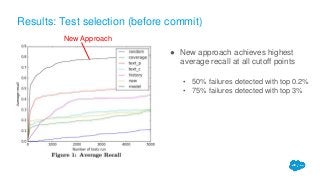

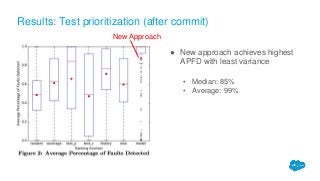

Modern cloud-software providers, such as Salesforce.com, increasingly adopt large-scale continuous integration environments. In such environments, high developer productivity depends strongly on testing efficiently and effectively. Specifically, to shorten feedback cycles, test prioritization is widely used as an optimization mechanism: it ranks the tests to run by their likelihood of revealing failures. To apply test prioritization in industrial environments, we present a novel approach, tailored for practical applicability, that integrates multiple existing techniques via a systematic machine-learning framework for learning to rank. Our initial empirical evaluation on a large real-world dataset from Salesforce shows that our approach significantly outperforms existing individual techniques.
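To make the idea of combining several prioritization signals into one learned ranking more concrete, here is a minimal sketch in Python. It is not the method from the talk: it uses a simple pointwise model (logistic regression fitted by gradient descent) as a stand-in for a learning-to-rank framework, and the three features (`coverage_overlap`, `text_similarity`, `recent_failure_rate`), the test names, and all numbers are hypothetical values chosen purely for illustration.

```python
import math


def predict(weights, bias, features):
    """Estimated probability that a test fails, given its feature vector."""
    z = bias + sum(w * v for w, v in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def train_pointwise_ranker(rows, labels, epochs=500, lr=0.5):
    """Fit logistic regression with batch gradient descent.

    rows   -- one feature vector per (change, test) pair from past CI runs
    labels -- 1 if the test failed on that change, 0 otherwise
    """
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for features, label in zip(rows, labels):
            error = predict(weights, bias, features) - label
            for j in range(n_features):
                grad_w[j] += error * features[j]
            grad_b += error
        weights = [w - lr * g / len(rows) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(rows)
    return weights, bias


def prioritize(candidates, weights, bias):
    """Order test names by predicted failure probability, highest first."""
    return sorted(
        candidates,
        key=lambda name: predict(weights, bias, candidates[name]),
        reverse=True,
    )


# Hypothetical history. Each row holds the scores that three separate
# heuristics assigned to a test for a past change:
# [coverage_overlap, text_similarity, recent_failure_rate]
history = [
    [0.9, 0.8, 0.7],
    [0.8, 0.6, 0.9],
    [0.7, 0.9, 0.5],
    [0.2, 0.1, 0.0],
    [0.1, 0.3, 0.1],
    [0.3, 0.2, 0.2],
    [0.4, 0.1, 0.1],
]
outcomes = [1, 1, 1, 0, 0, 0, 0]  # 1 = the test failed on that change

weights, bias = train_pointwise_ranker(history, outcomes)

# Hypothetical tests to rank for a new change, scored by the same heuristics.
candidates = {
    "test_report": [0.1, 0.2, 0.0],
    "test_login": [0.9, 0.7, 0.8],
    "test_billing": [0.5, 0.4, 0.3],
}
ranking = prioritize(candidates, weights, bias)
print(ranking)
```

The design point is that each existing heuristic contributes only a feature, while the trained weights decide how much each one counts toward the final order. A production system would more likely use a dedicated ranking model and ranking-oriented evaluation metrics than this toy classifier.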