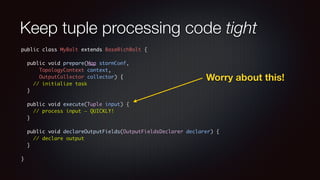

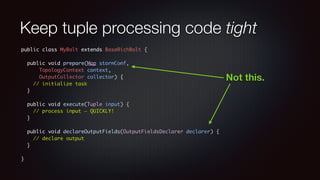

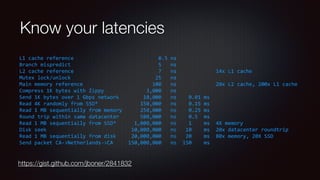

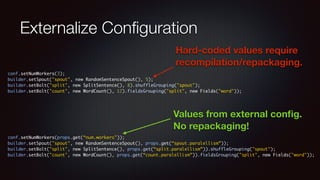

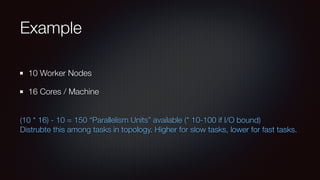

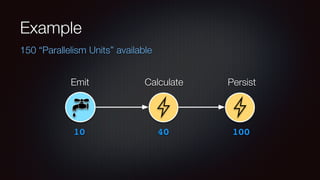

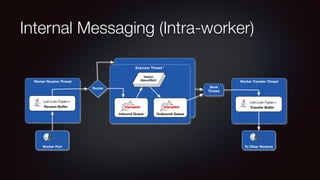

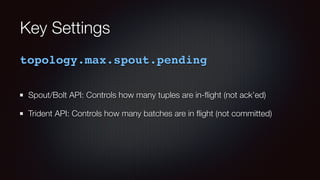

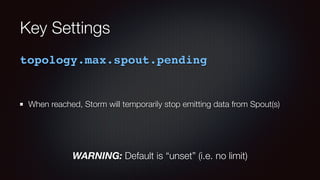

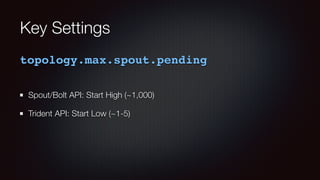

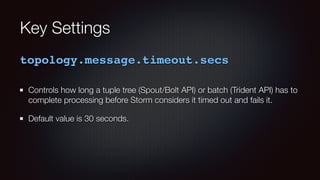

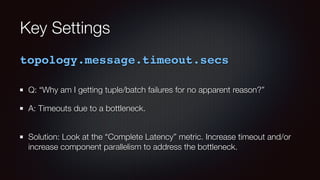

The document discusses strategies for scaling Apache Storm, emphasizing the importance of optimizing code and managing resources effectively. It highlights the significance of understanding latencies and network performance as well as the need for parallelism in handling data streams. Key recommendations include externalizing configurations, performing rigorous performance testing, and maintaining collaboration between development and operations teams.