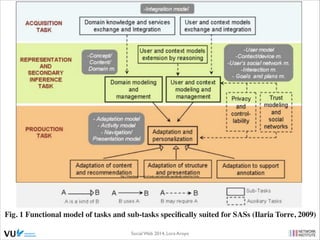

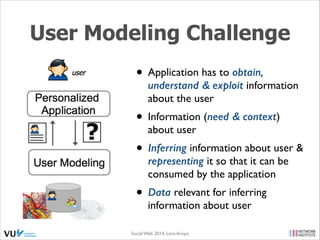

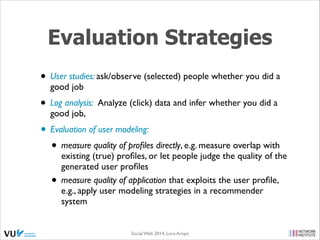

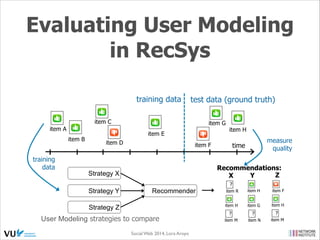

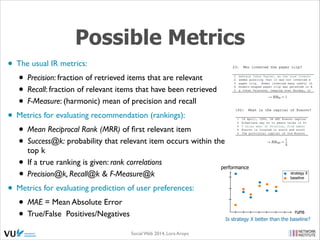

The document discusses user modeling and personalization techniques for social web applications, emphasizing the importance of inferring user information from various data sources. It covers different user modeling approaches, including overlay models, user stereotypes, and interactive user modeling to improve recommendation systems. Additionally, it highlights evaluation strategies and the challenges of balancing relevance and novelty in adaptive systems.

![Example Evaluation

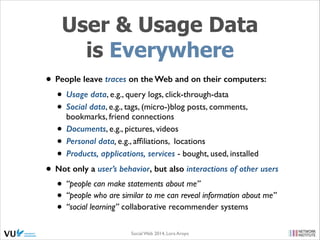

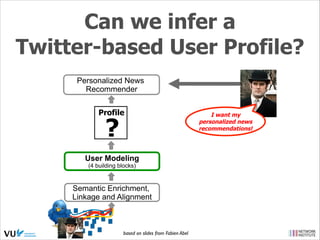

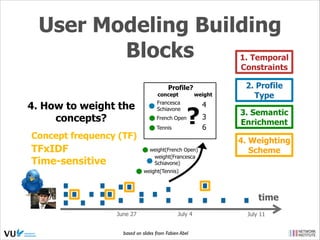

• [Rae et al.] is a typical example of how to investigate and evaluate a proposal for improving (tag) recommendations (using social networks)

• Task: test how well the different strategies (different tag contexts) can be used for tag prediction/recommendation

• Steps:

1. Gather a dataset of tag data; use part of it as input and hold out the remaining tag data to test the recommendations

2. Use the input data to calculate the predictions for each of the different strategies

3. Measure the performance using standard (IR) metrics: Precision of the top 5 recommended tags (P@5), Mean Reciprocal Rank (MRR), Mean Average Precision (MAP)

4. Test the results for statistical significance using a t-test, relative to the baseline (e.g. existing approach, competitive approach)

[Rae et al. Improving Tag Recommendations Using Social Networks, RIAO’10]]

Social Web 2014, Lora Aroyo!](https://image.slidesharecdn.com/lecture5socialweb2014-140303023156-phpapp02/85/Lecture-5-Personalization-on-the-Social-Web-2014-31-320.jpg)
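The metrics in step 3 and the significance test in step 4 can be sketched as follows. This is a minimal illustration, not the code used by Rae et al.: the function names, the per-user data layout, and the choice of a paired t statistic (without a p-value lookup) are assumptions made for the example.

```python
from math import sqrt
from statistics import mean, stdev


def precision_at_k(ranked, relevant, k=5):
    """Fraction of the top-k recommended tags that appear in the held-out tags."""
    return sum(1 for tag in ranked[:k] if tag in relevant) / k


def reciprocal_rank(ranked, relevant):
    """1 / rank of the first correct tag, or 0.0 if none is correct."""
    for rank, tag in enumerate(ranked, start=1):
        if tag in relevant:
            return 1.0 / rank
    return 0.0


def average_precision(ranked, relevant):
    """Mean of the precision values at each rank where a correct tag occurs."""
    hits, total = 0, 0.0
    for rank, tag in enumerate(ranked, start=1):
        if tag in relevant:
            hits += 1
            total += hits / rank
    return total / len(relevant) if relevant else 0.0


def evaluate(runs, k=5):
    """runs: list of (ranked_tags, held_out_tags) pairs, one per user (assumed layout)."""
    return {
        "P@%d" % k: mean(precision_at_k(r, rel, k) for r, rel in runs),
        "MRR": mean(reciprocal_rank(r, rel) for r, rel in runs),
        "MAP": mean(average_precision(r, rel) for r, rel in runs),
    }


def paired_t_statistic(strategy_scores, baseline_scores):
    """Paired t statistic over per-user scores.

    Undefined (division by zero) when all differences are identical.
    """
    diffs = [s - b for s, b in zip(strategy_scores, baseline_scores)]
    return mean(diffs) / (stdev(diffs) / sqrt(len(diffs)))
```

The resulting t statistic would then be compared against the critical value for `len(diffs) - 1` degrees of freedom to decide whether a strategy differs significantly from the baseline.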

![Example Evaluation

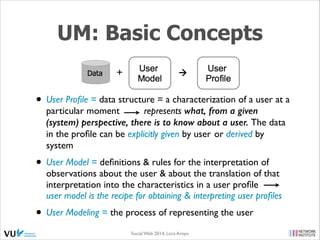

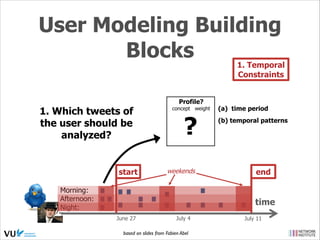

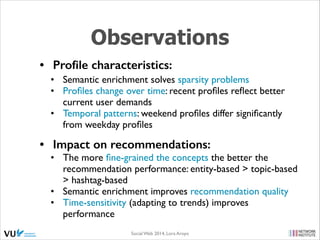

• [Guy et al.] is another example of a similar evaluation approach

• The strategies differ in the way people & tags are used: in tag-based systems there are complex relationships between users, tags and items, and the strategies aim to find the aspects of these relationships that are relevant for modeling and recommendation

• The baseline is the ‘most popular’ tags: the tags predicted by a particular personalization strategy are compared against the most popular tags, to investigate whether the personalization is worth the effort and is able to outperform the easily available baseline.

[Guy et al. Social Media Recommendation based on People and Tags, SIGIR’10]]

](https://image.slidesharecdn.com/lecture5socialweb2014-140303023156-phpapp02/85/Lecture-5-Personalization-on-the-Social-Web-2014-32-320.jpg)
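A ‘most popular’ baseline of the kind described above is cheap to compute, which is why it is a natural yardstick. The sketch below assumes tag assignments are available as hypothetical `(user, item, tag)` triples; it is an illustration, not the setup used by Guy et al.

```python
from collections import Counter


def most_popular_tags(assignments, k=5):
    """Return the k globally most frequent tags, ignoring who used them.

    assignments: iterable of (user, item, tag) triples (assumed layout).
    """
    counts = Counter(tag for _user, _item, tag in assignments)
    return [tag for tag, _count in counts.most_common(k)]


# Hypothetical toy data, for illustration only.
assignments = [
    ("u1", "i1", "python"),
    ("u2", "i1", "python"),
    ("u3", "i2", "web"),
    ("u1", "i2", "web"),
    ("u2", "i3", "python"),
    ("u3", "i3", "ml"),
]
baseline = most_popular_tags(assignments, k=2)
```

Every user receives the same list from this baseline, so a personalization strategy only justifies its cost if its per-user recommendations score higher on the held-out tags.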

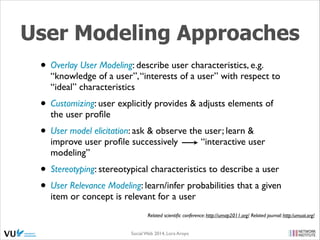

![Social Networks & Interest Similarity

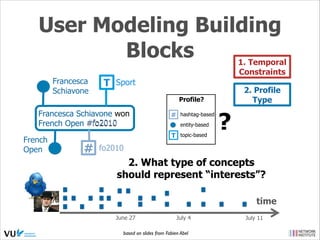

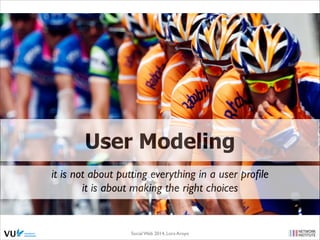

• collaborative filtering: builds ‘neighborhoods’ of people with similar interests & recommends items based on what the neighborhood likes

• limitations: next to ‘cold start’ and ‘sparsity’, the lack of control (over one’s neighborhood) is also a problem, i.e. users cannot add ‘trusted’ people, nor exclude ‘strange’ ones

• therefore, there is interest in ‘social recommenders’, where the presence of social connections defines the similarity in interests (e.g. social tagging in CiteULike):

• does a social connection indicate user interest similarity?

• how much does users’ interest similarity depend on the strength of their connection?

• is it feasible to use a social network as a basis for personalized recommendation?

[Lin & Brusilovsky, Social Networks and Interest Similarity: The Case of CiteULike, HT’10]

](https://image.slidesharecdn.com/lecture5socialweb2014-140303023156-phpapp02/85/Lecture-5-Personalization-on-the-Social-Web-2014-44-320.jpg)
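One way to probe the first question (does a social connection indicate interest similarity?) is to compare the average similarity of connected user pairs with that of unconnected pairs. The sketch below uses Jaccard overlap of bookmarked items as the similarity measure; that measure, the function names, and the data layout are assumptions for illustration and not necessarily what Lin & Brusilovsky used.

```python
from itertools import combinations


def jaccard(a, b):
    """Size of the intersection divided by size of the union of two sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def mean_similarity_by_connection(items_by_user, connections):
    """Return (mean similarity of connected pairs, mean similarity of unconnected pairs).

    items_by_user: dict mapping user -> set of bookmarked items (assumed layout).
    connections: set of frozenset({user_a, user_b}) for each social link.
    """
    connected, unconnected = [], []
    for u, v in combinations(sorted(items_by_user), 2):
        sim = jaccard(items_by_user[u], items_by_user[v])
        if frozenset((u, v)) in connections:
            connected.append(sim)
        else:
            unconnected.append(sim)

    def avg(values):
        return sum(values) / len(values) if values else 0.0

    return avg(connected), avg(unconnected)


# Hypothetical toy data, for illustration only.
items_by_user = {
    "ann": {"p1", "p2", "p3"},
    "bob": {"p2", "p3", "p4"},
    "cat": {"p9"},
}
connections = {frozenset(("ann", "bob"))}
connected_avg, unconnected_avg = mean_similarity_by_connection(items_by_user, connections)
```

If connected pairs are consistently more similar than unconnected ones on real data, that supports using the social network as a neighborhood for recommendation.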

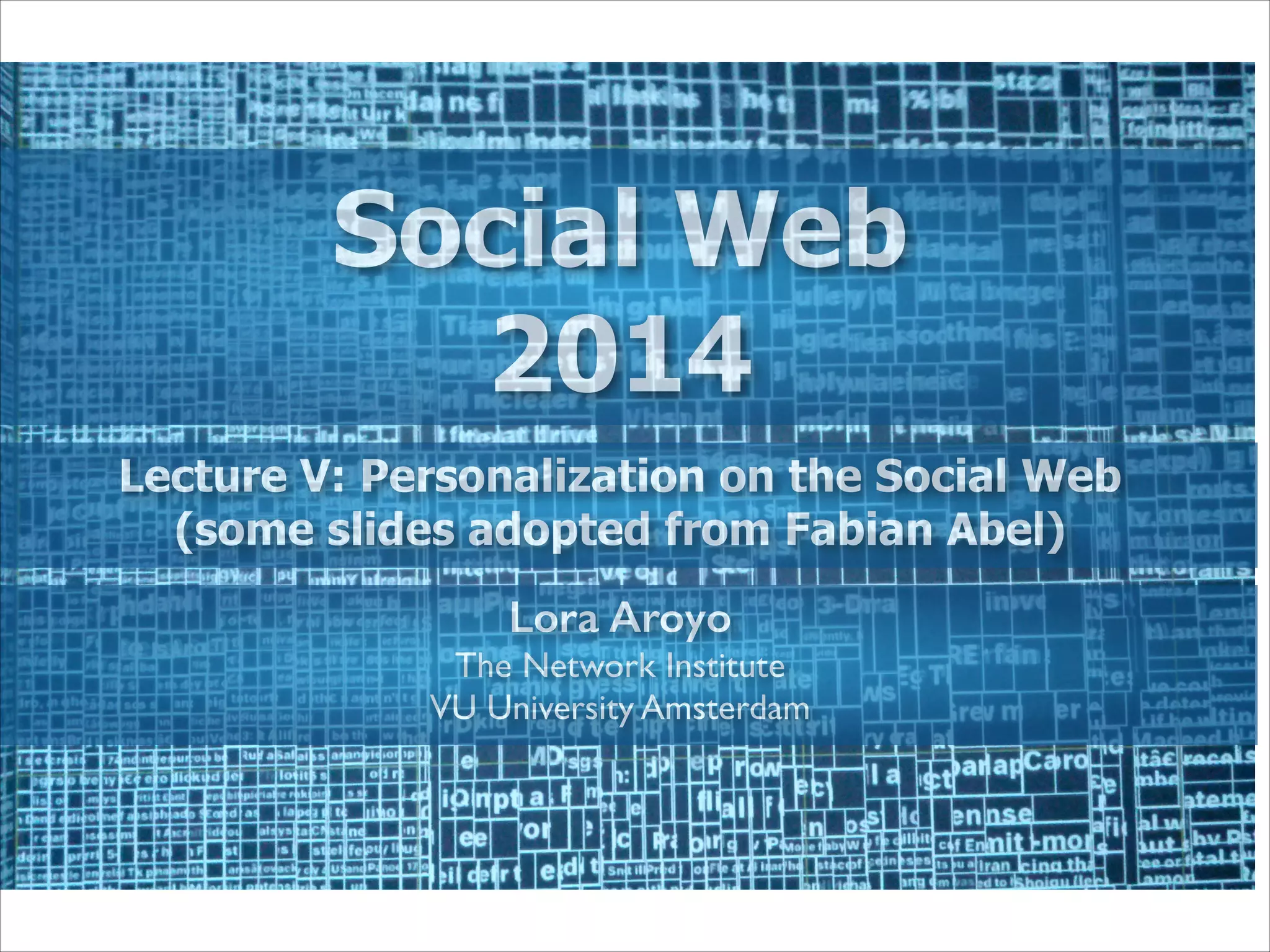

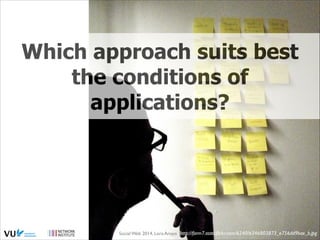

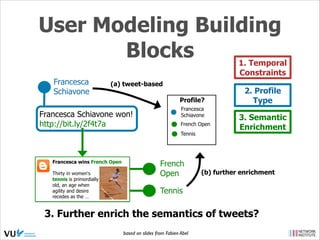

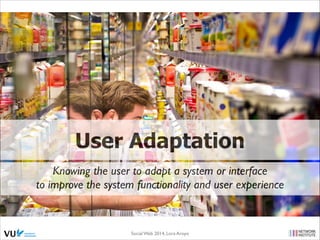

![Government stops renovation of tower bridge, Oct 13th 2011

Tower Bridge is a combined bascule and suspension bridge in London, England, over the River Thames.

Category: politics, england

Related Twiper news:

@bob: Why do they stop to… [more]

@mary: London stops reno… [more]

Tower Bridge today Under construction

Content features (vector a, in feature order):

db:Politics: 0.2

db:Sports: 0

db:Education: 0

db:London: 0.2

db:Tower_Bridge: 0.4

db:Government: 0.1

db:UK: 0.1

Weighting strategy:

- occurrence frequency

- normalize vectors (1-norm ⇒ sum of vector equals 1)

based on slides from Fabien Abel](https://image.slidesharecdn.com/lecture5socialweb2014-140303023156-phpapp02/85/Lecture-5-Personalization-on-the-Social-Web-2014-47-320.jpg)
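The weighting strategy on this slide (occurrence frequency followed by 1-norm normalization) can be sketched as below. The feature order follows the slide; the list of concept mentions is hypothetical, chosen only so that the output reproduces the slide's vector, and the function name is an assumption.

```python
from collections import Counter

# Feature order as listed on the slide.
FEATURES = [
    "db:Politics",
    "db:Sports",
    "db:Education",
    "db:London",
    "db:Tower_Bridge",
    "db:Government",
    "db:UK",
]


def content_vector(concept_mentions, features=FEATURES):
    """Count how often each feature concept occurs, then divide by the total
    so that the entries sum to 1 (1-norm normalization)."""
    counts = Counter(concept_mentions)
    total = sum(counts[f] for f in features)
    if total == 0:
        return [0.0] * len(features)
    return [counts[f] / total for f in features]


# Hypothetical concept mentions extracted from the news item and related tweets.
mentions = (
    ["db:Tower_Bridge"] * 4
    + ["db:Politics"] * 2
    + ["db:London"] * 2
    + ["db:Government", "db:UK"]
)
a = content_vector(mentions)
```

Normalizing by the 1-norm makes vectors comparable across items with different numbers of concept mentions, since each entry becomes a share of the total.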