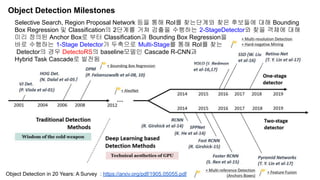

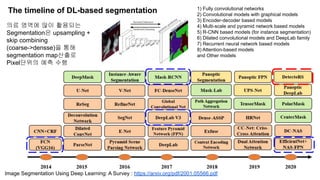

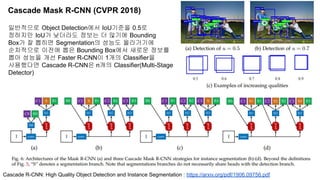

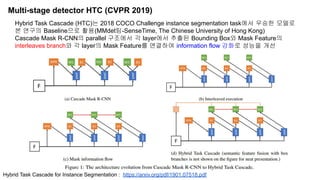

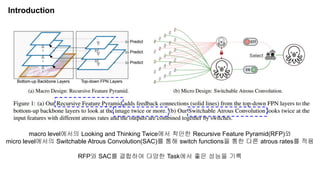

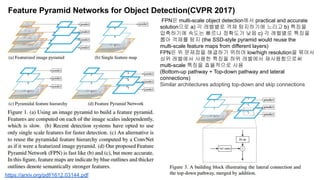

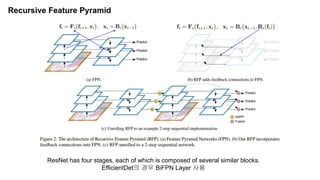

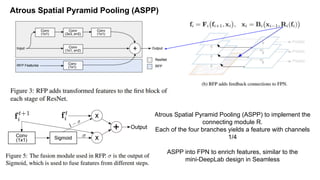

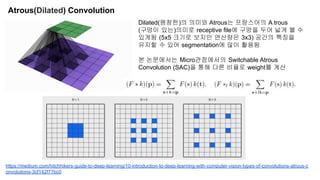

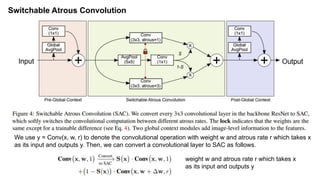

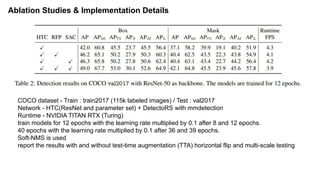

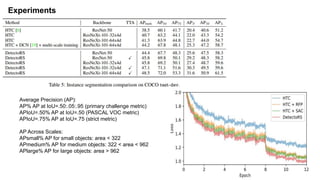

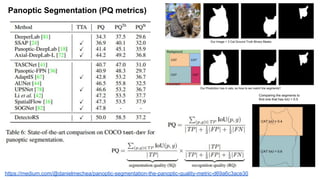

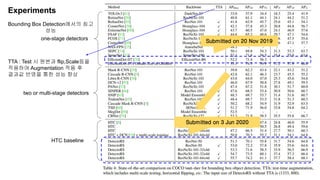

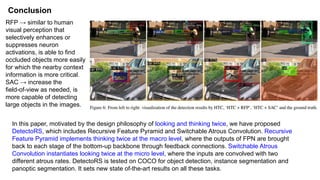

The document discusses advances in object detection based on the Recursive Feature Pyramid and Switchable Atrous Convolution, which improve detector performance through feedback connections and multi-scale processing. It traces the evolution of detectors, in particular Cascade R-CNN and Hybrid Task Cascade, and reports state-of-the-art results on COCO for object detection and segmentation tasks. It emphasizes techniques that mimic human visual perception to improve detection accuracy for occluded and large objects.
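To make the idea of switchable atrous convolution more concrete, here is a minimal 1D sketch in NumPy. It assumes the commonly described form in which the output blends a rate-1 convolution and a rate-`r` atrous convolution through a switch value in [0, 1]. The function names, the 1D simplification, and passing the switch in as an input (rather than learning it from the features) are all assumptions made for illustration, not the implementation shown in the slides.

```python
import numpy as np

def atrous_conv1d(x, w, rate):
    """Zero-padded, same-length 1D atrous (dilated) convolution.

    Hypothetical helper: expects an odd-length kernel `w` and samples
    the input every `rate` positions around each output location.
    """
    k = len(w)
    half = (k // 2) * rate
    xp = np.pad(x, half)  # zero padding on both sides
    out = np.zeros(len(x), dtype=float)
    for i in range(len(x)):
        for j in range(k):
            out[i] += w[j] * xp[i + j * rate]
    return out

def switchable_atrous_conv1d(x, w, delta_w, switch, rate=3):
    """Blend a rate-1 branch and a rate-`rate` branch.

    Assumed form: s * conv(x, w, 1) + (1 - s) * conv(x, w + delta_w, rate),
    where `switch` is a scalar or per-position array in [0, 1].
    """
    s = np.clip(switch, 0.0, 1.0)
    small = atrous_conv1d(x, w, 1)
    large = atrous_conv1d(x, w + delta_w, rate)
    return s * small + (1.0 - s) * large

x = np.ones(7)
w = np.array([1.0, 1.0, 1.0])
delta_w = np.zeros(3)
narrow = switchable_atrous_conv1d(x, w, delta_w, switch=1.0)  # rate-1 branch only
wide = switchable_atrous_conv1d(x, w, delta_w, switch=0.0)    # rate-3 branch only
```

With the switch at 1 only the small-receptive-field branch contributes, and with the switch at 0 only the dilated branch does; intermediate values mix the two, which is what lets a detector adapt its receptive field to object scale.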