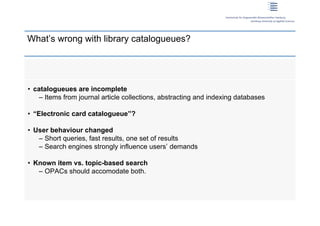

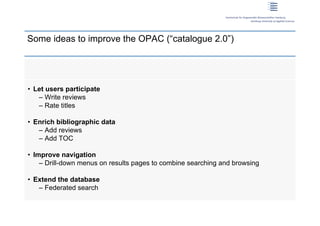

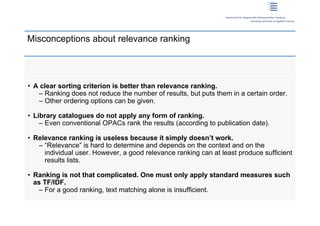

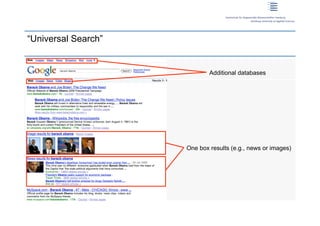

Dirk Lewandowski gave a presentation on ranking library materials in an online public access catalog (OPAC). He stressed the importance of relevance ranking for improving search results. Key ranking factors include text matching, popularity, freshness, and locality. Result lists should mix item types from different collections to satisfy user needs. Relevance ranking is complex and requires weighing multiple factors and data sources. Search remains the core function of an OPAC, although other features can enhance the user experience.
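
To make the idea of combining several ranking factors concrete, here is a minimal Python sketch of a weighted score over the four factors named above. It is an illustration only, not the method from the presentation: the `CatalogItem` class, the `score` and `rank` functions, the 0-to-1 normalization of each factor, and the weight values are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class CatalogItem:
    """Hypothetical catalog record with per-factor scores normalized to [0, 1]."""
    title: str
    text_match: float   # how well the record matches the query text
    popularity: float   # e.g. derived from loans or clicks (assumed signal)
    freshness: float    # newer items score higher
    locality: float     # e.g. 1.0 if available at the user's branch


# Illustrative weights only; a real catalog would have to tune these.
WEIGHTS = {
    "text_match": 0.5,
    "popularity": 0.2,
    "freshness": 0.2,
    "locality": 0.1,
}


def score(item: CatalogItem, weights: dict = WEIGHTS) -> float:
    """Weighted sum of the item's factor scores."""
    return sum(weight * getattr(item, name) for name, weight in weights.items())


def rank(items: list, weights: dict = WEIGHTS) -> list:
    """Return items sorted by descending combined score."""
    return sorted(items, key=lambda item: score(item, weights), reverse=True)


if __name__ == "__main__":
    results = rank([
        CatalogItem("Old exact match", 0.9, 0.1, 0.2, 0.0),
        CatalogItem("Popular local title", 0.6, 0.9, 0.8, 1.0),
    ])
    for item in results:
        print(f"{score(item):.2f}  {item.title}")
```

A linear combination is only one simple way to merge factors. It does show why ranking is hard to get right: with these assumed weights, a popular, recent, locally available item can outrank a closer text match.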