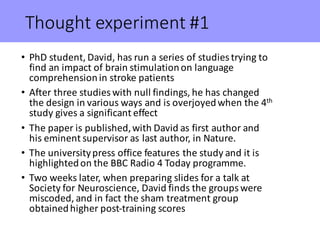

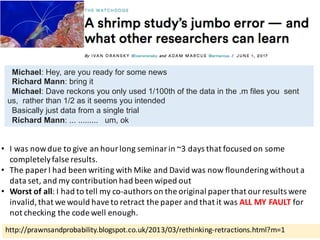

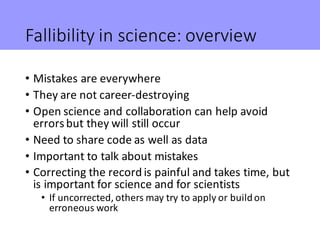

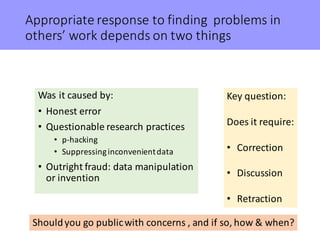

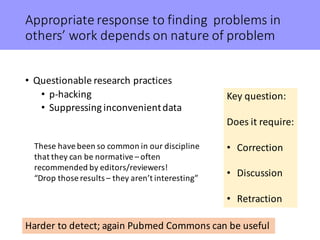

The document discusses how to handle mistakes in science responsibly, drawing on several case studies of scientists who retracted papers because of errors. Its key points include:

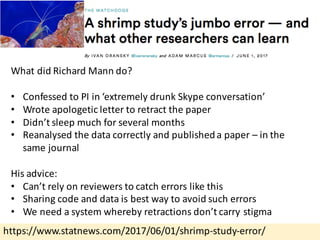

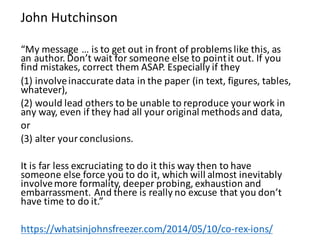

- Mistakes are common in science, but many can be avoided by openly sharing data and code

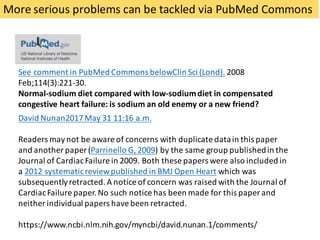

- When mistakes are found, the record should be corrected promptly so that others do not build on erroneous work

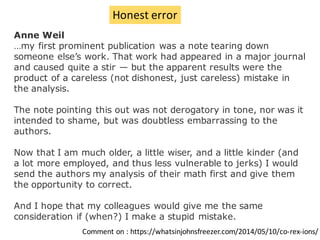

- Retracting a paper because of an honest error should not harm a scientist's career; integrity is valued

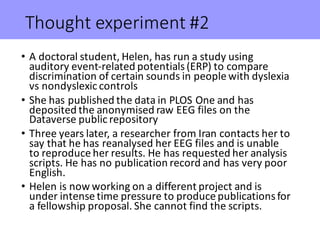

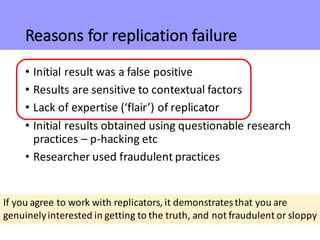

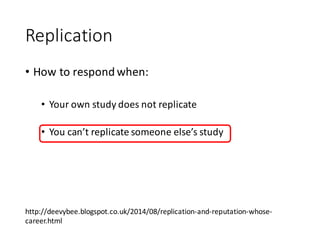

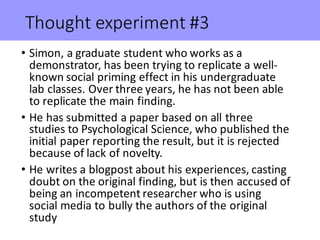

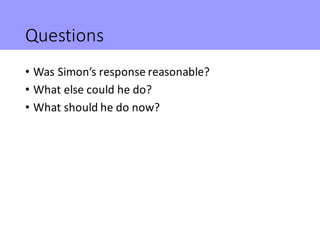

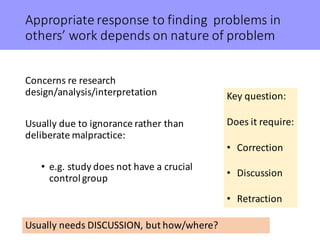

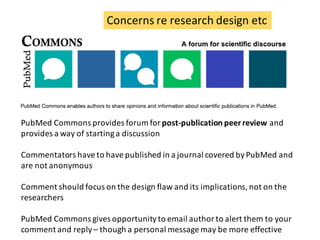

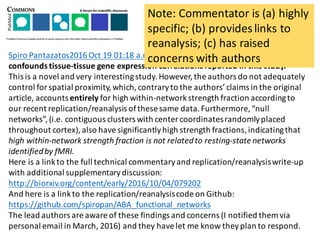

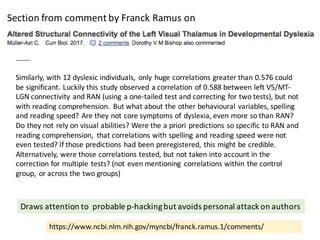

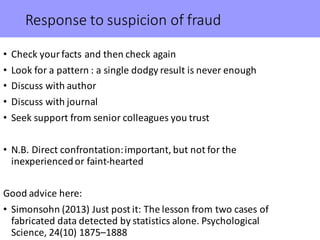

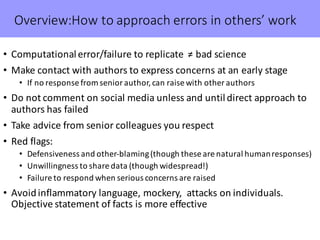

- When others cannot replicate results, discussion and collaboration are preferable to public accusations