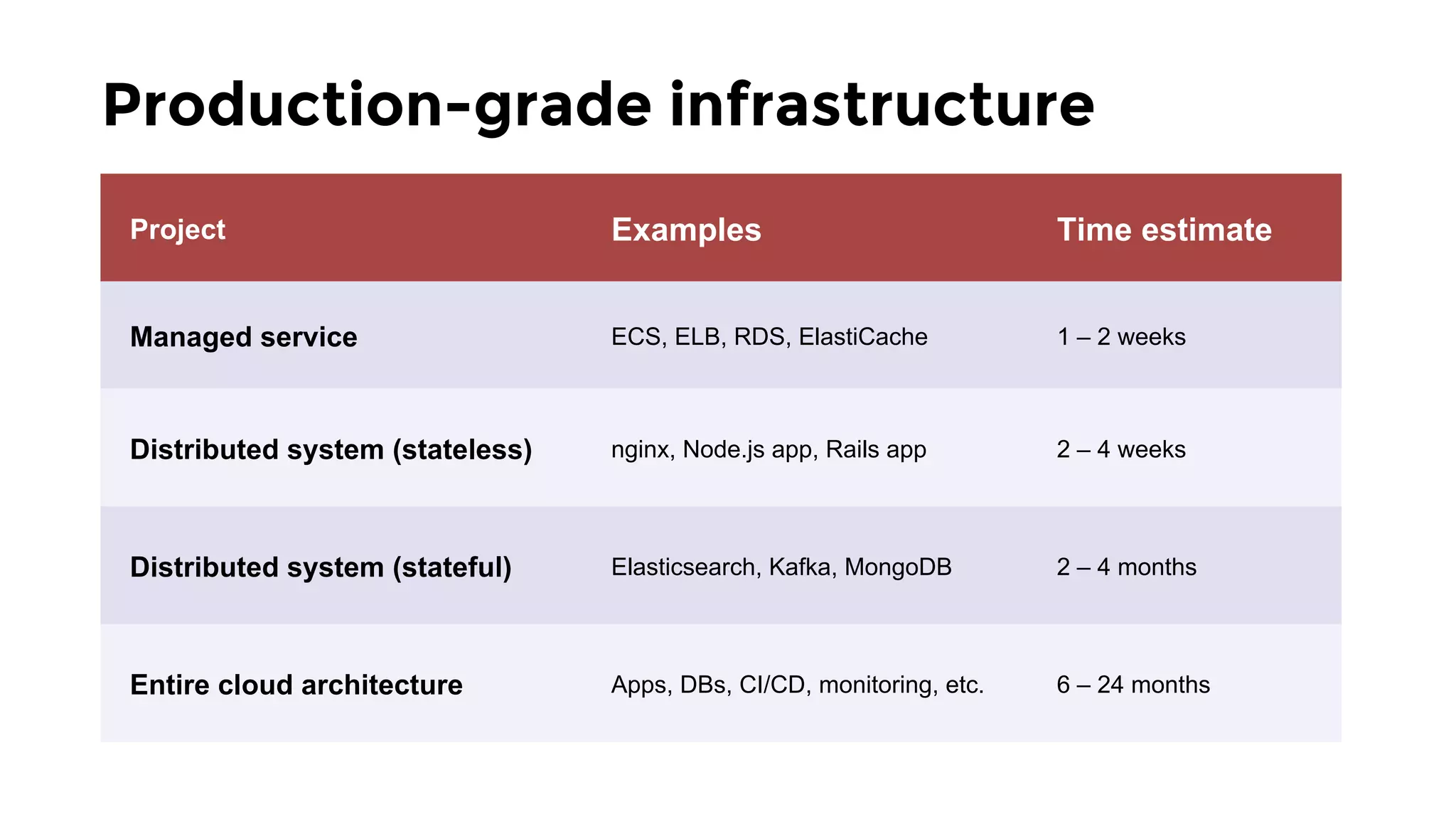

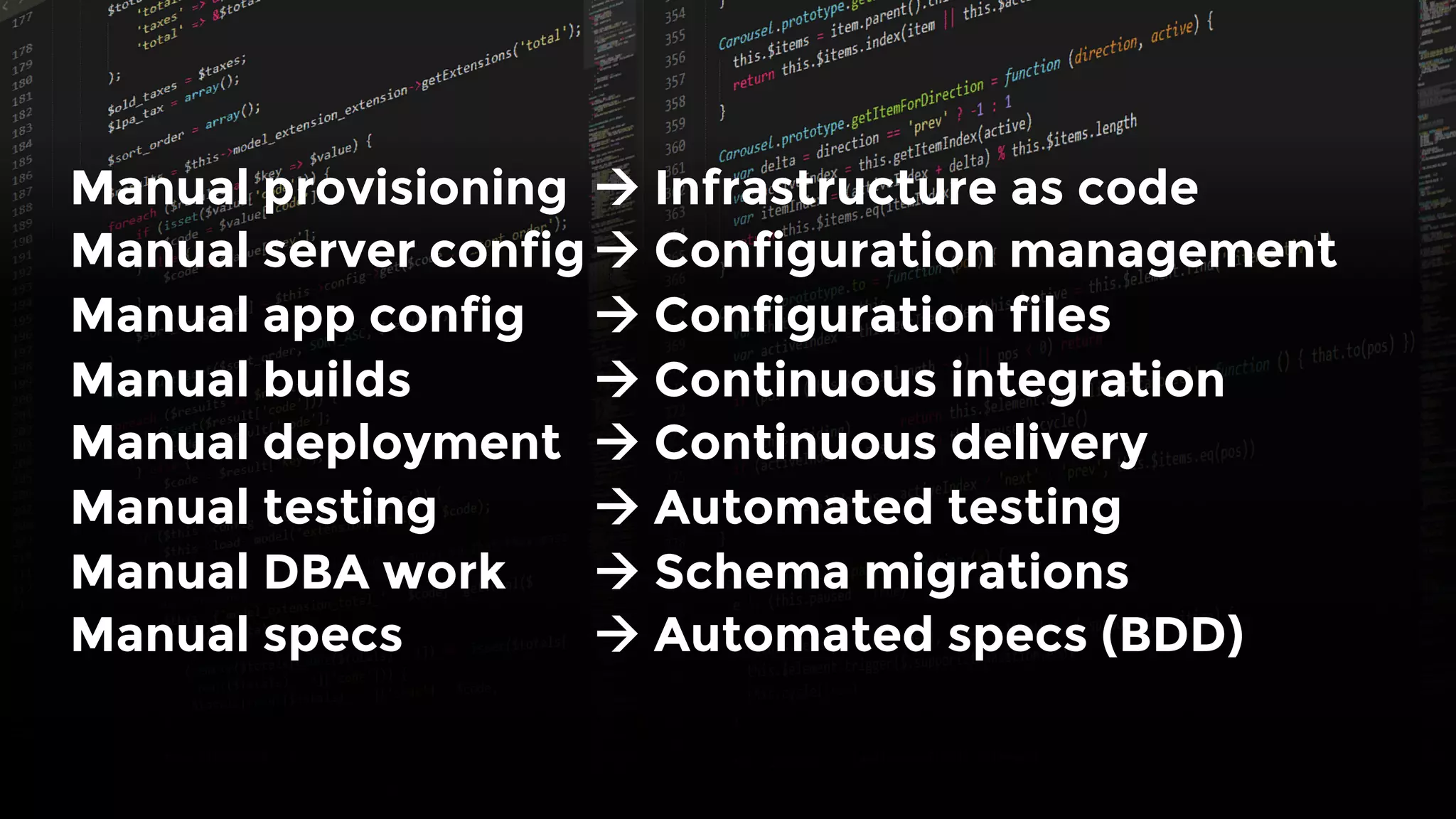

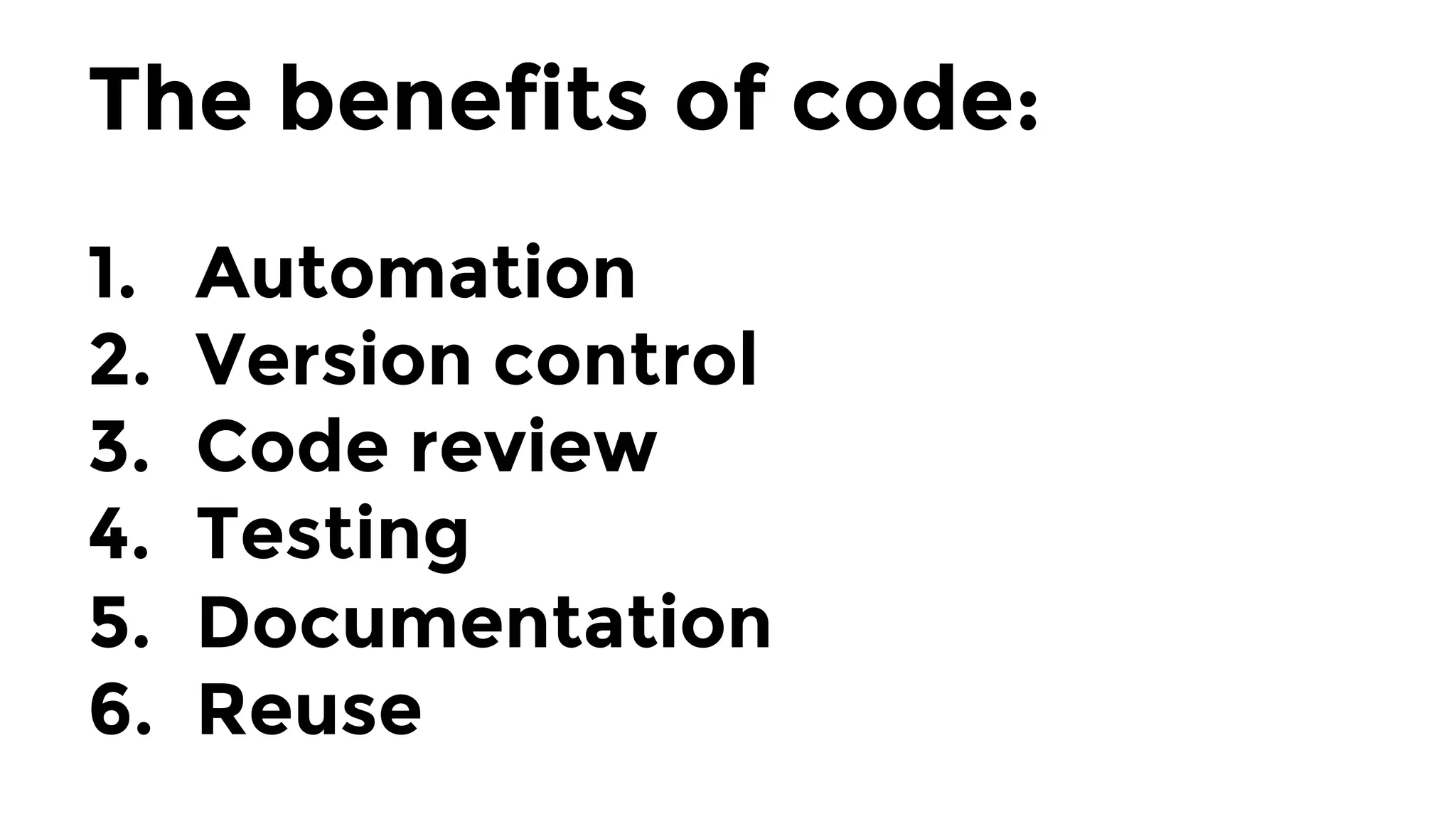

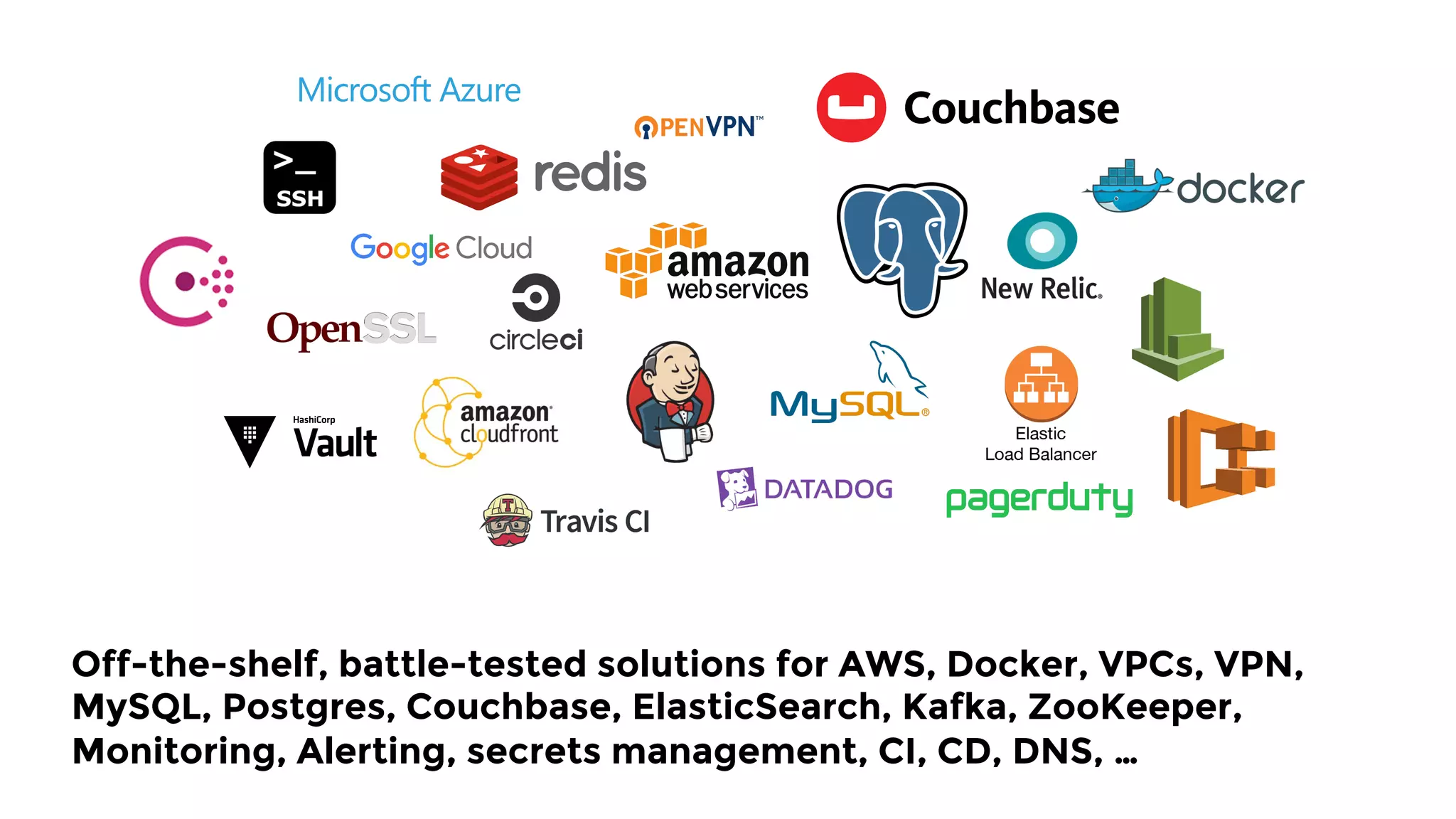

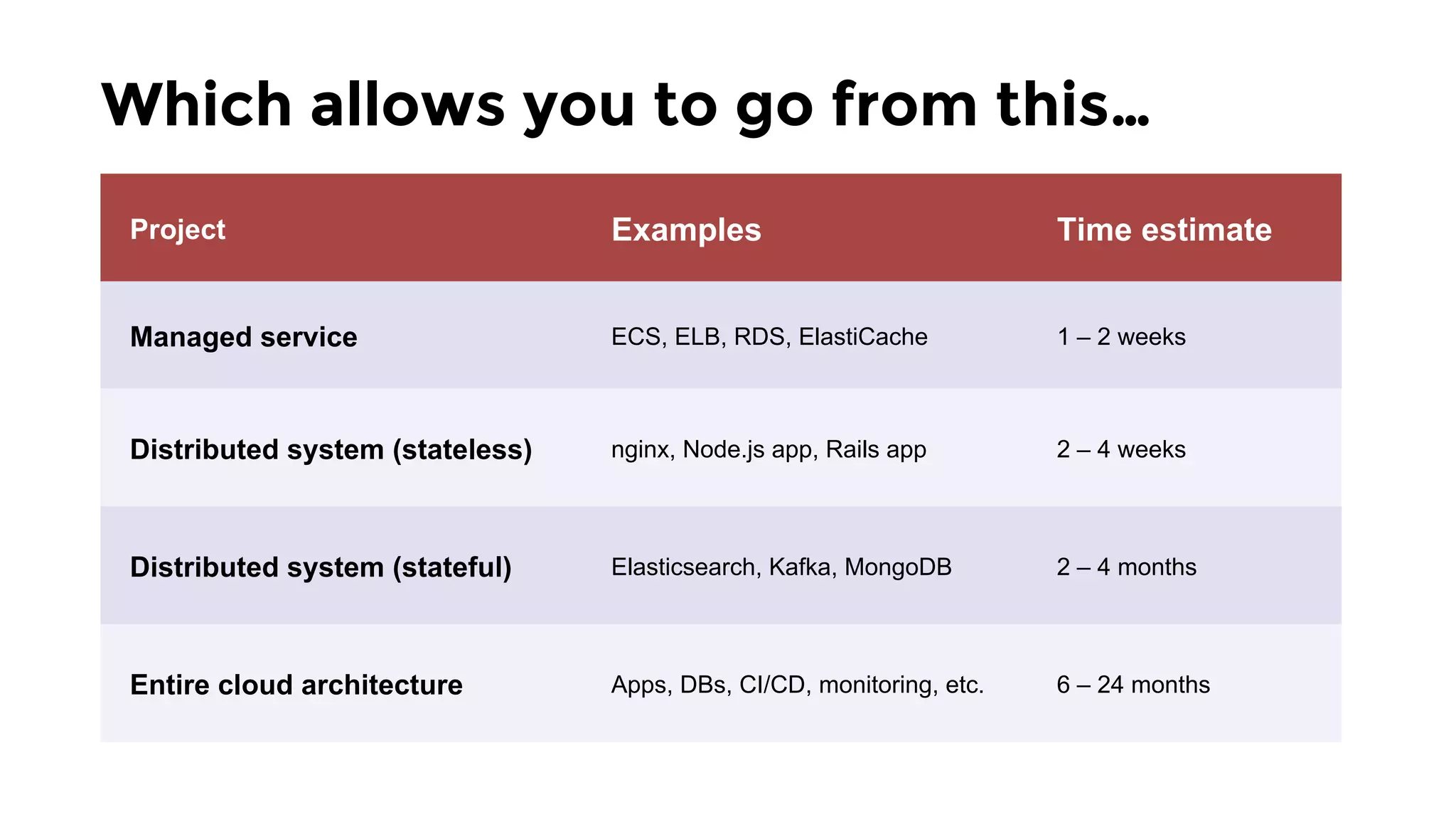

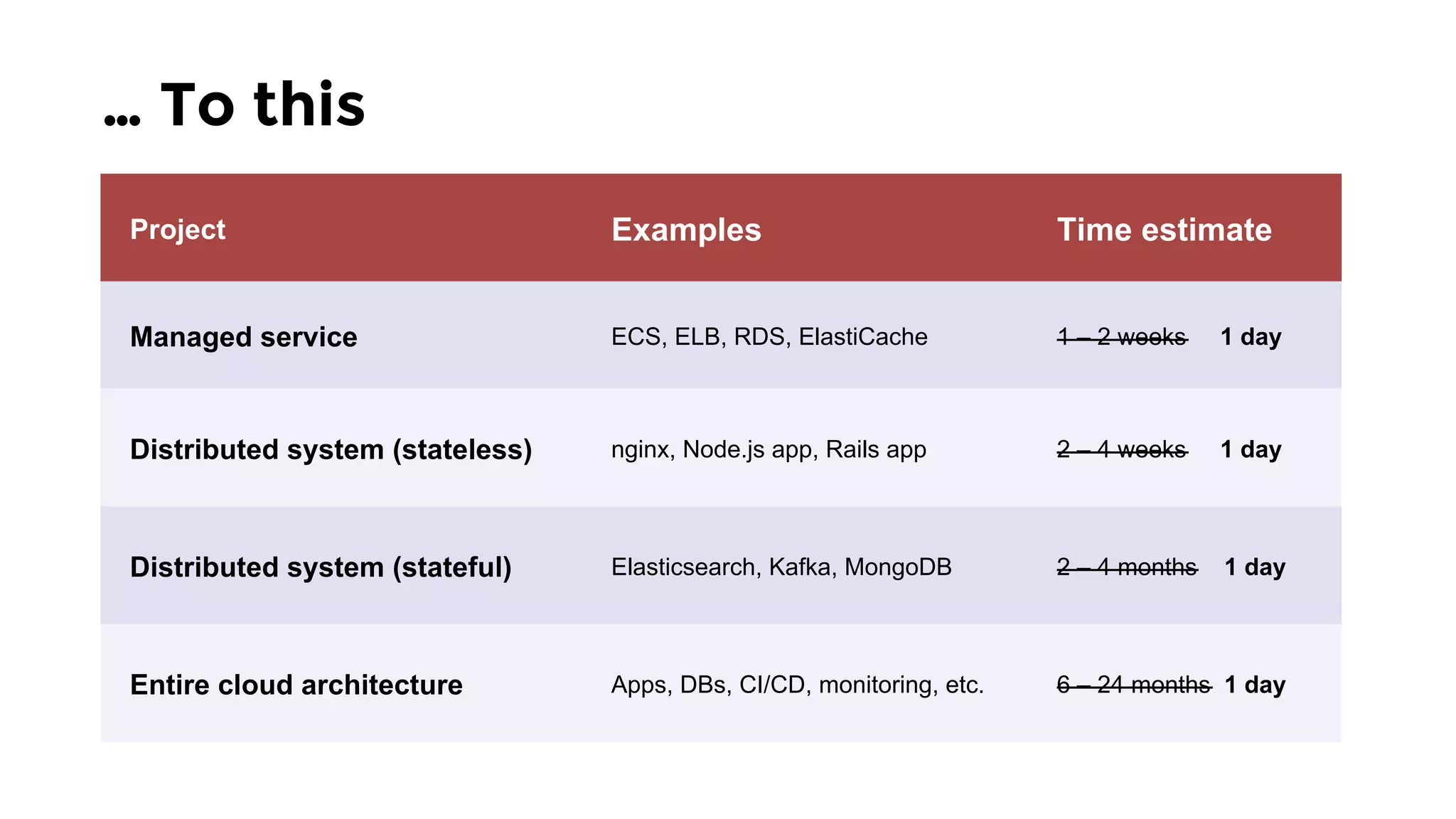

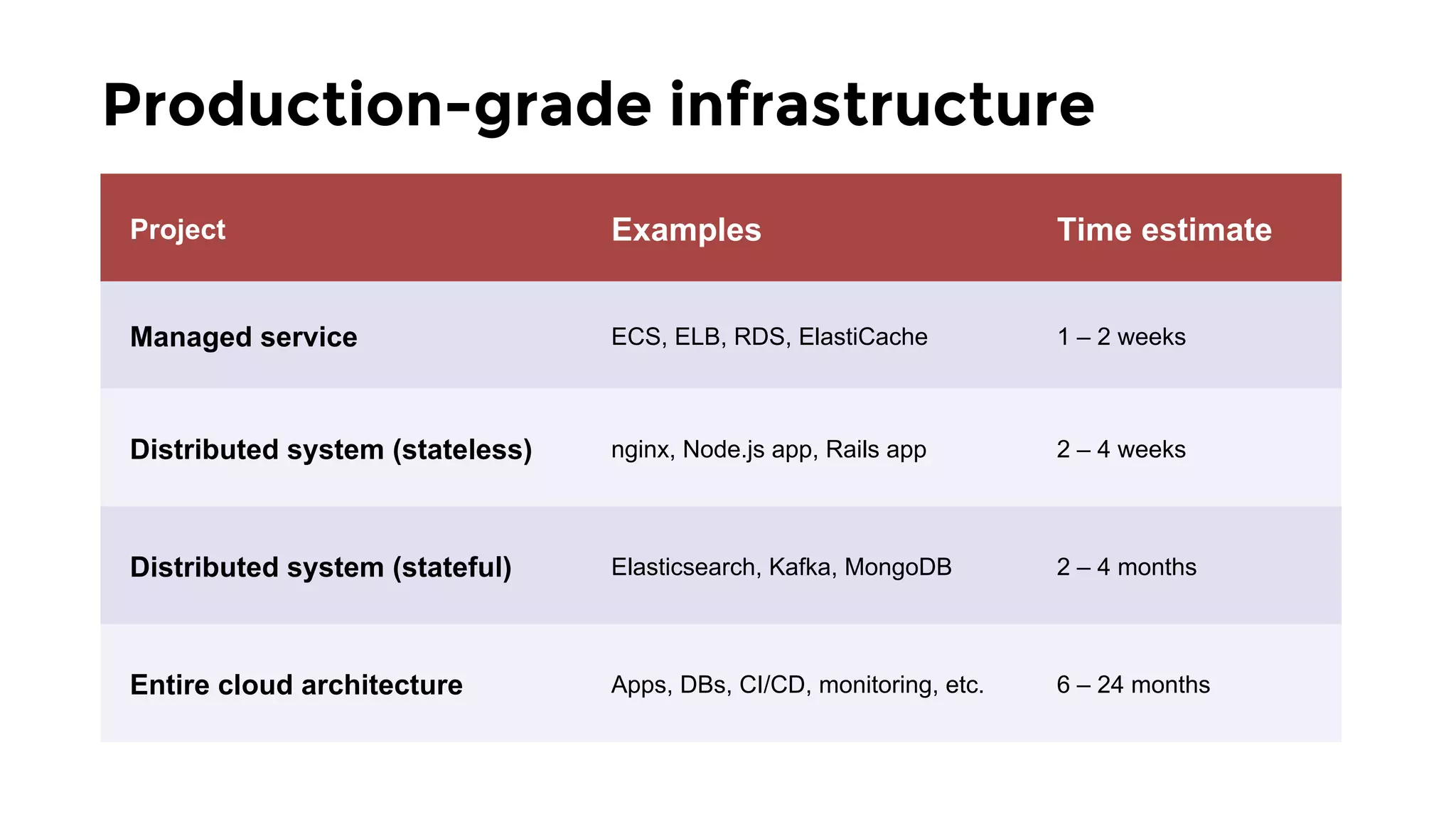

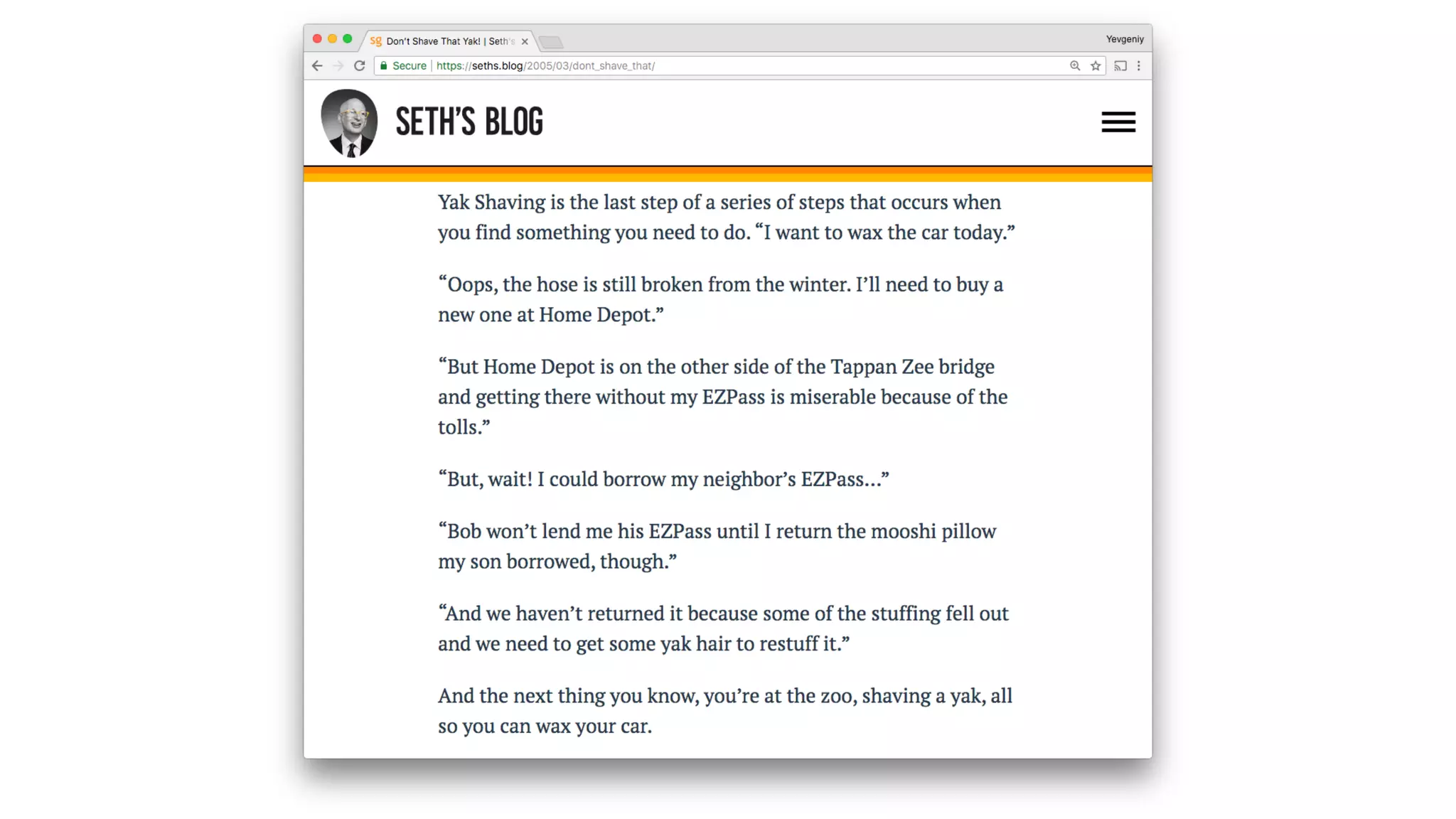

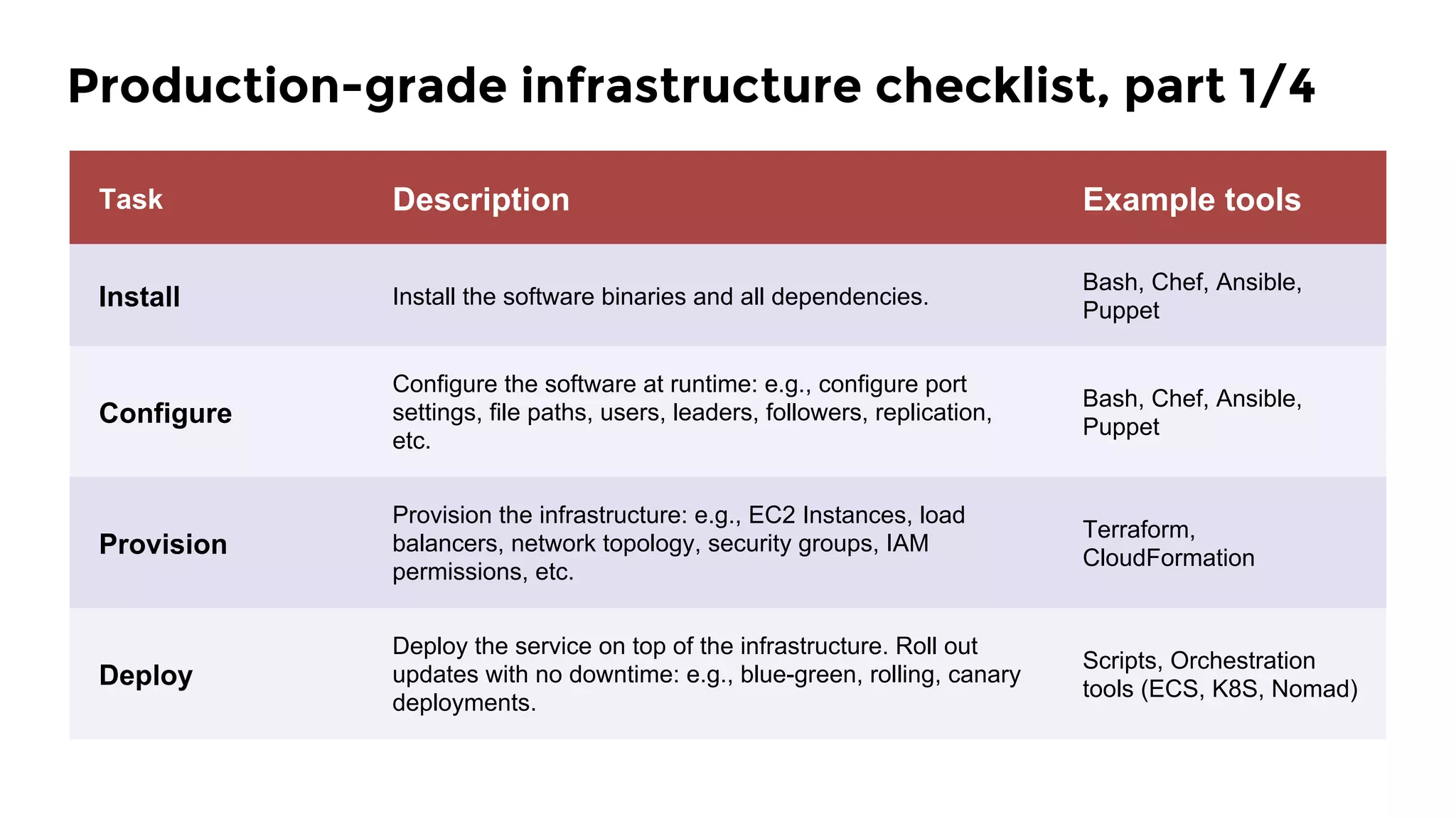

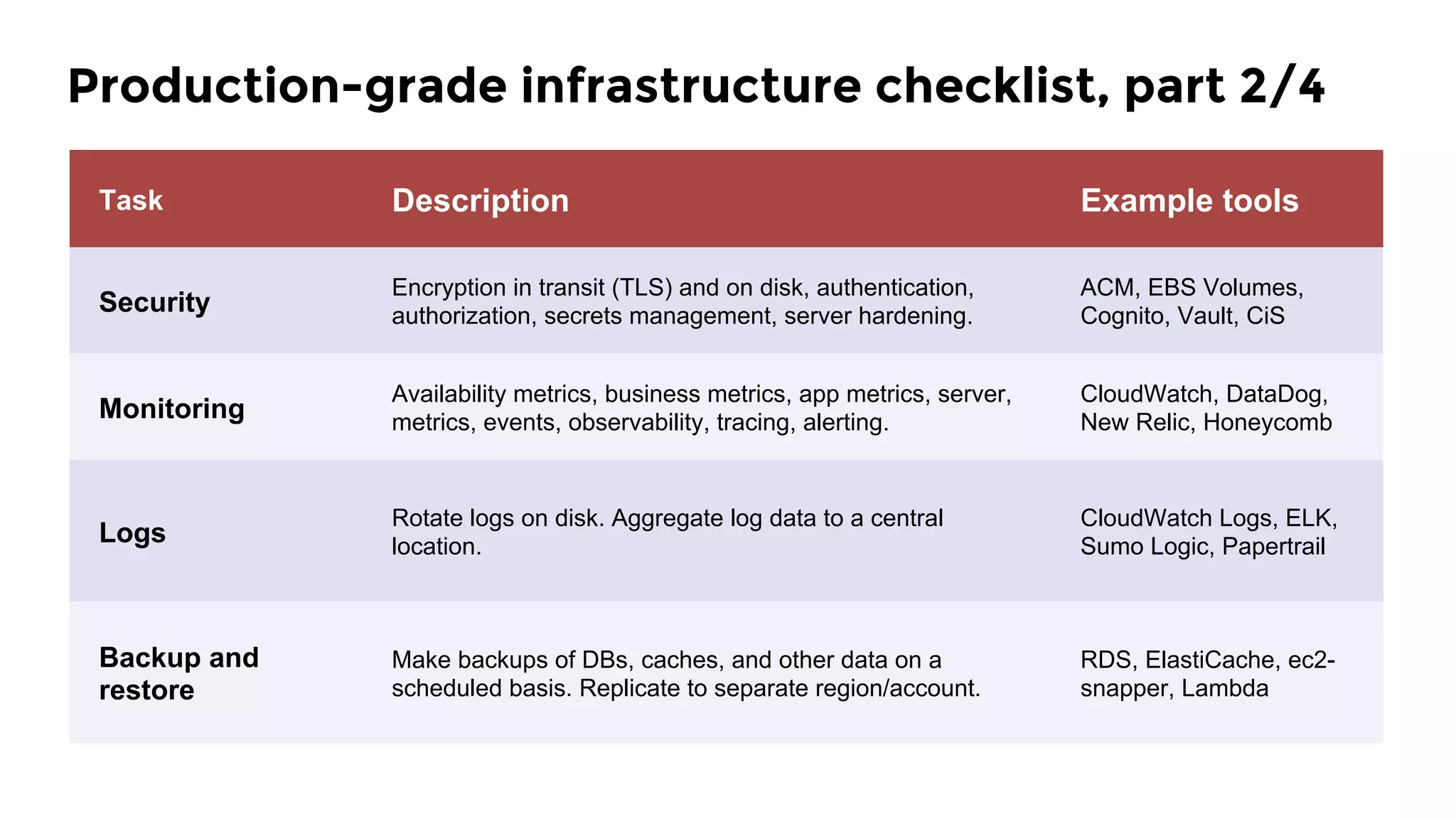

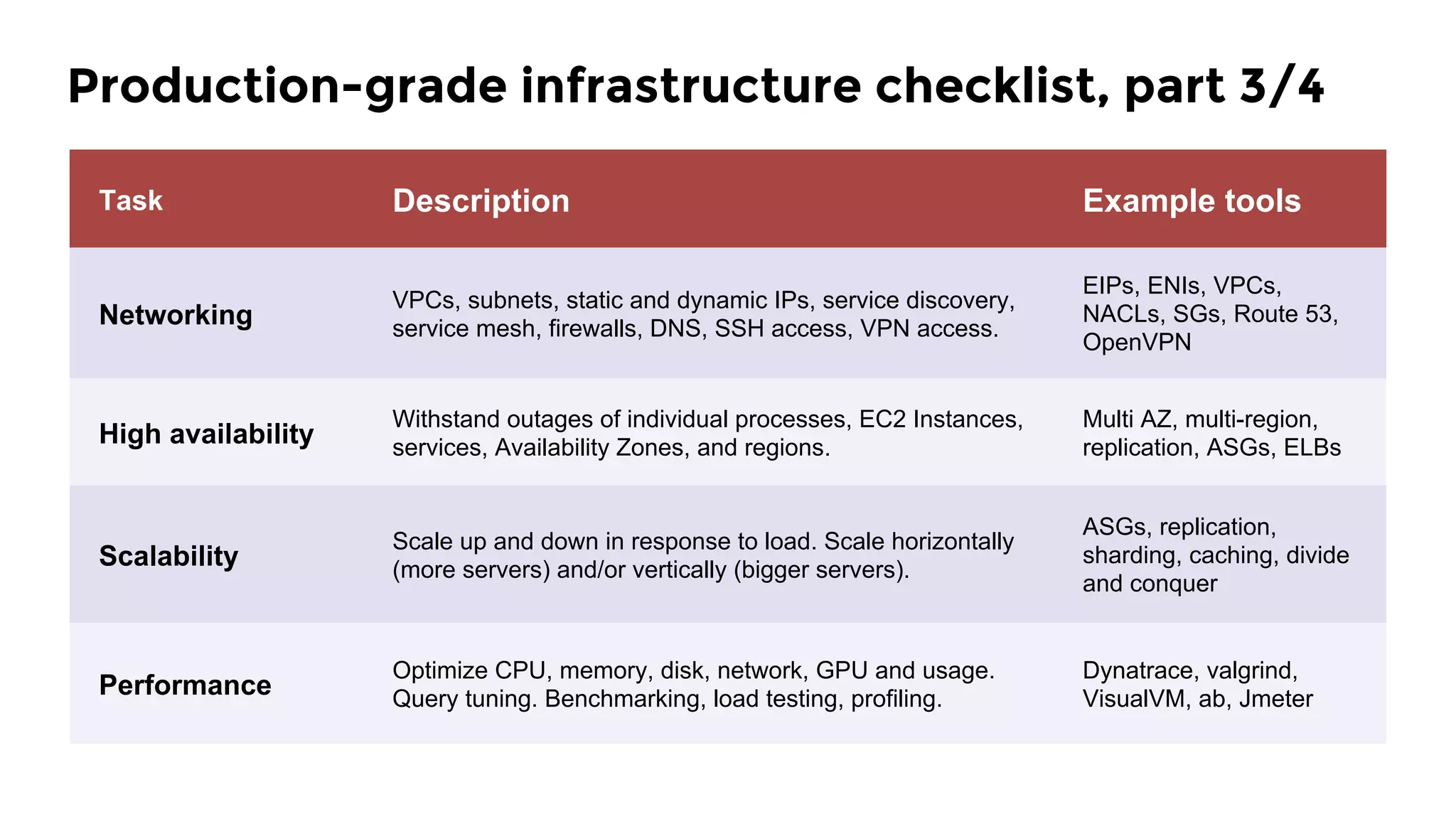

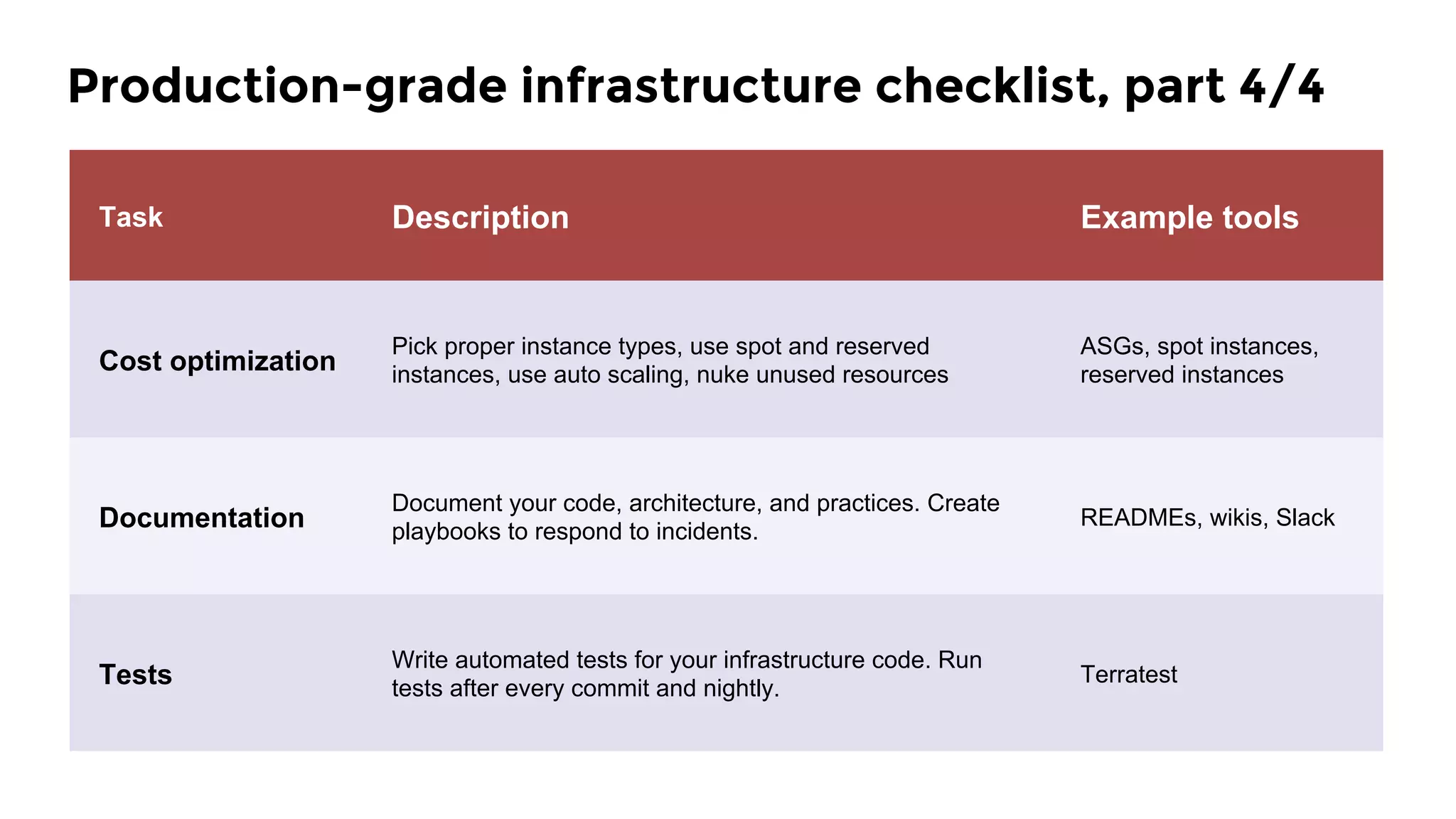

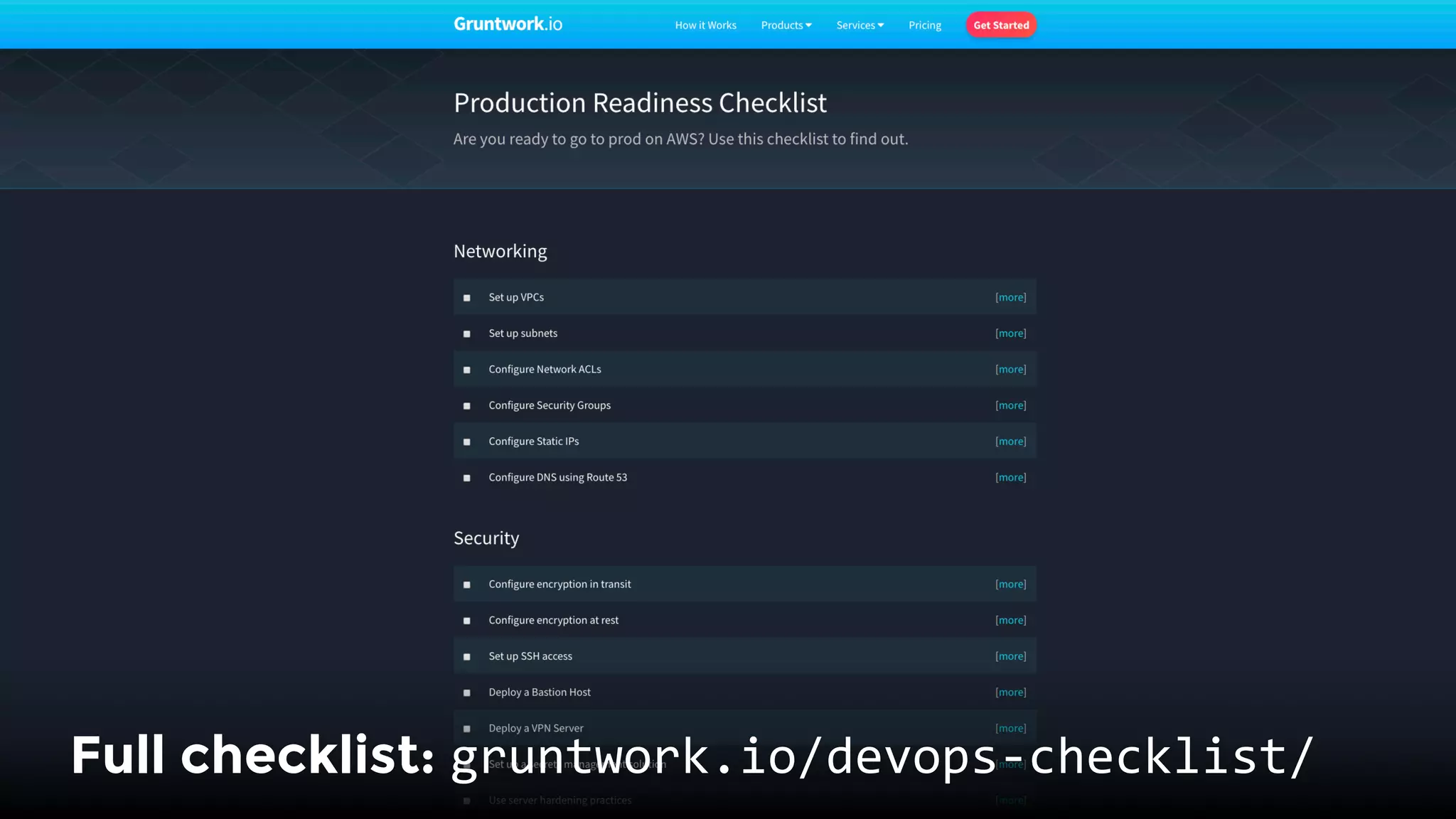

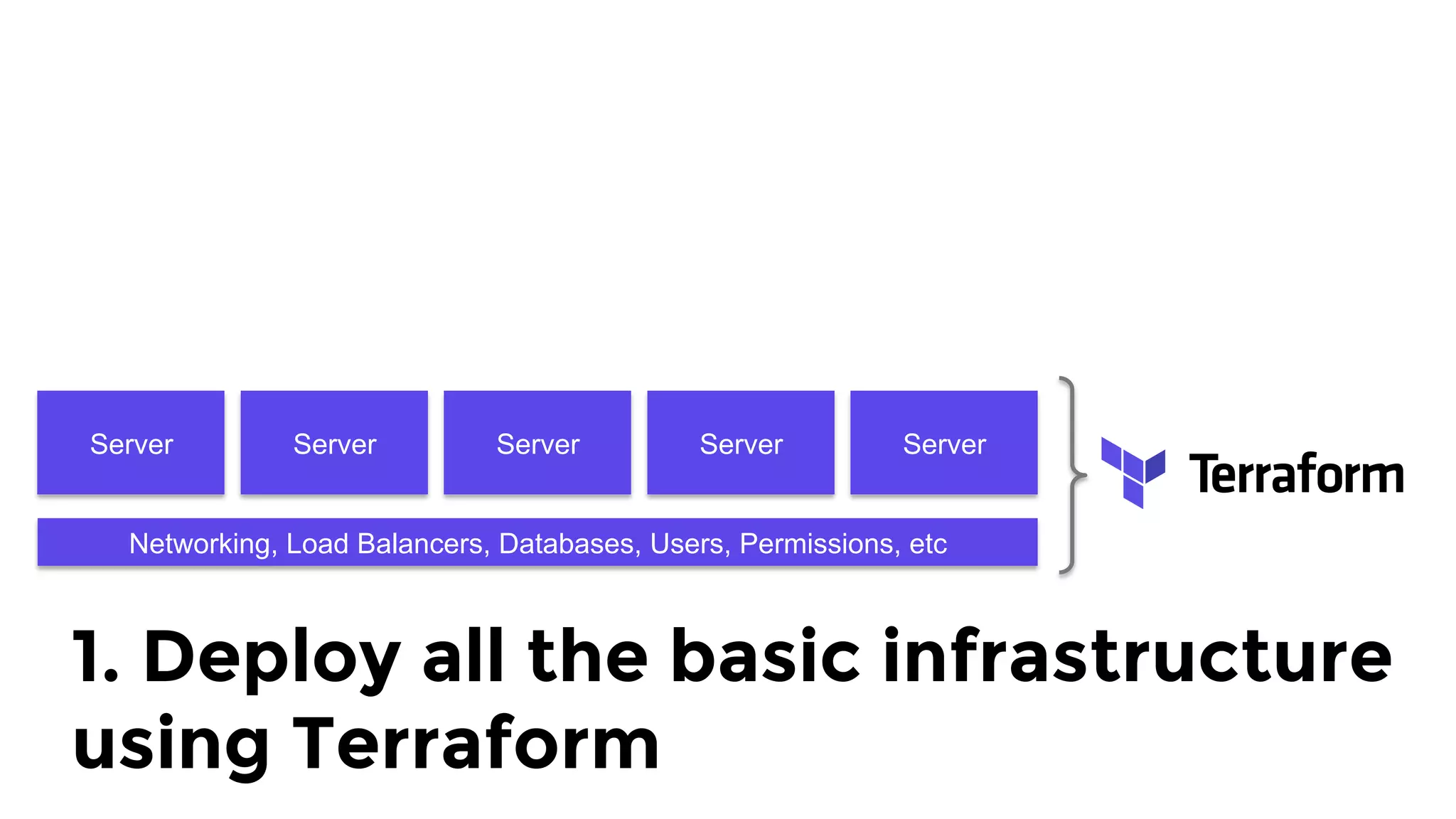

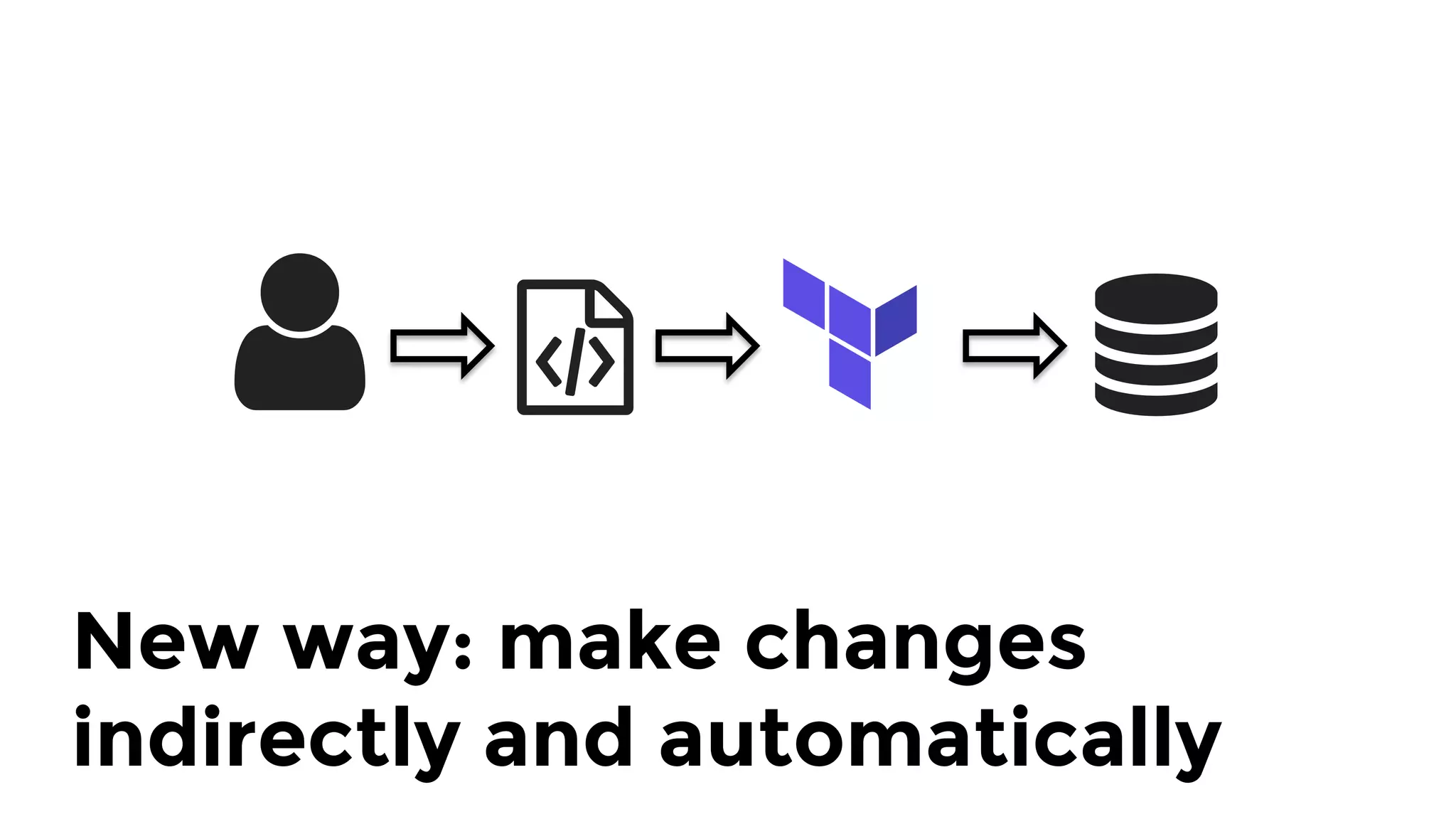

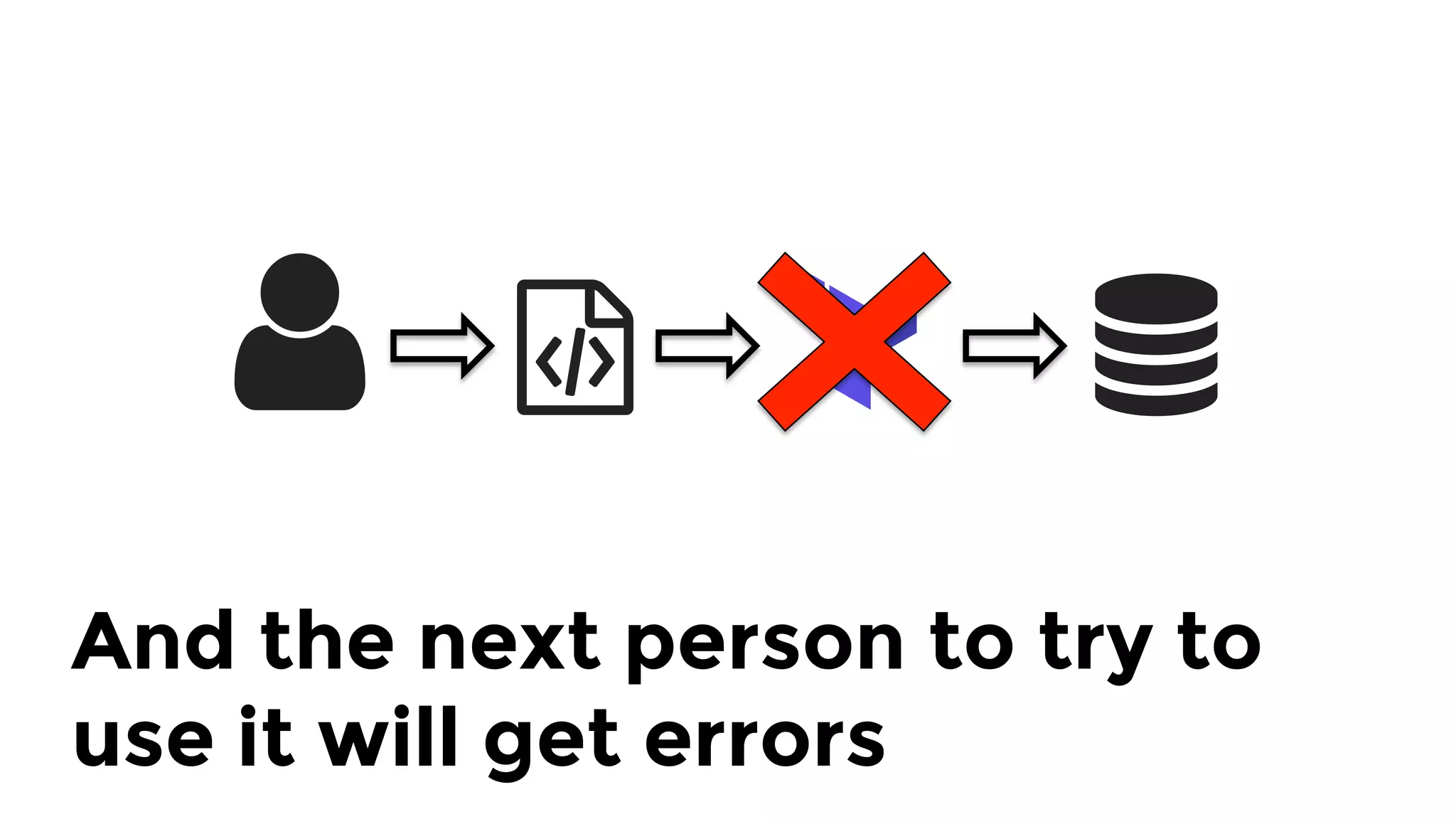

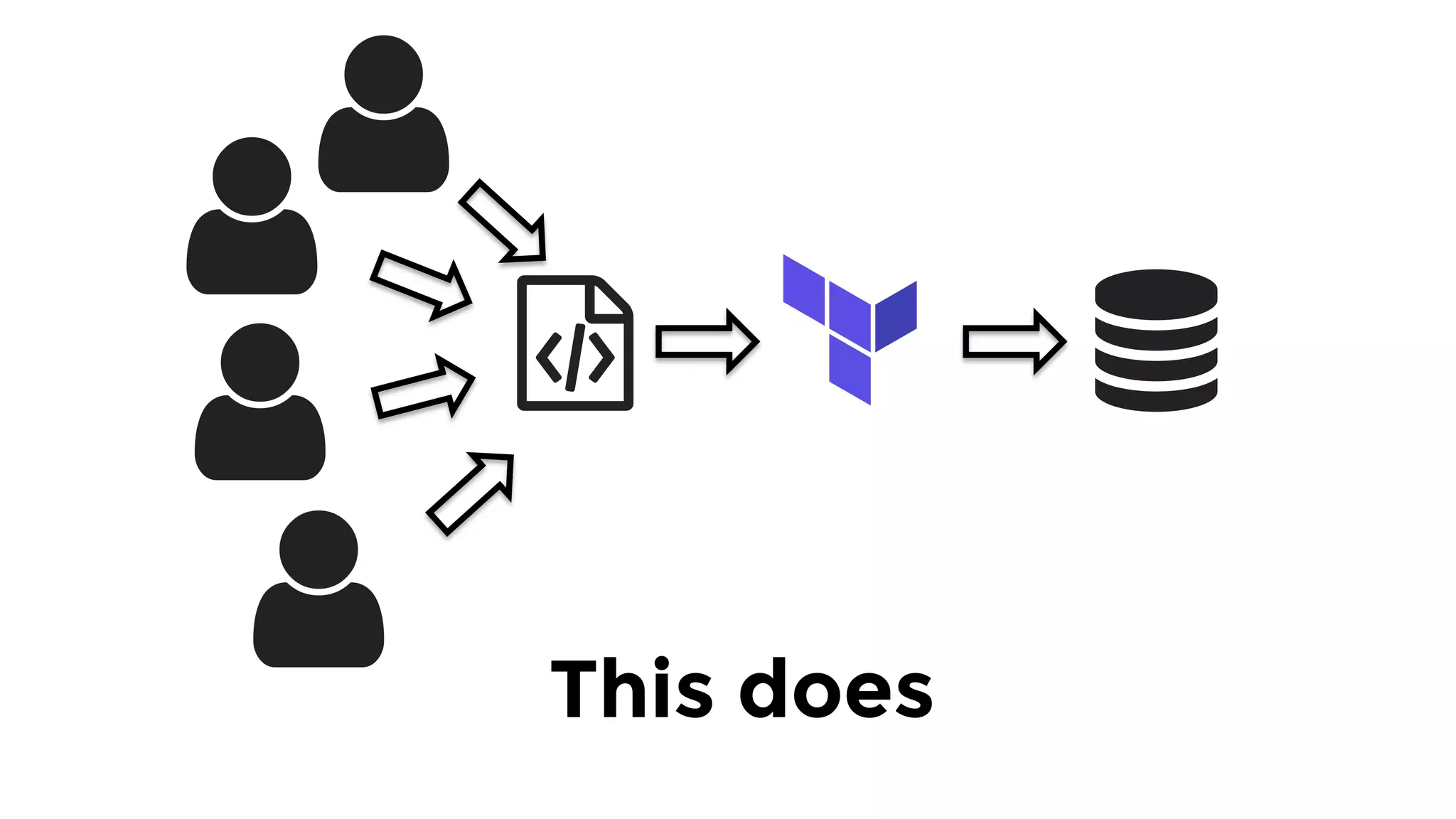

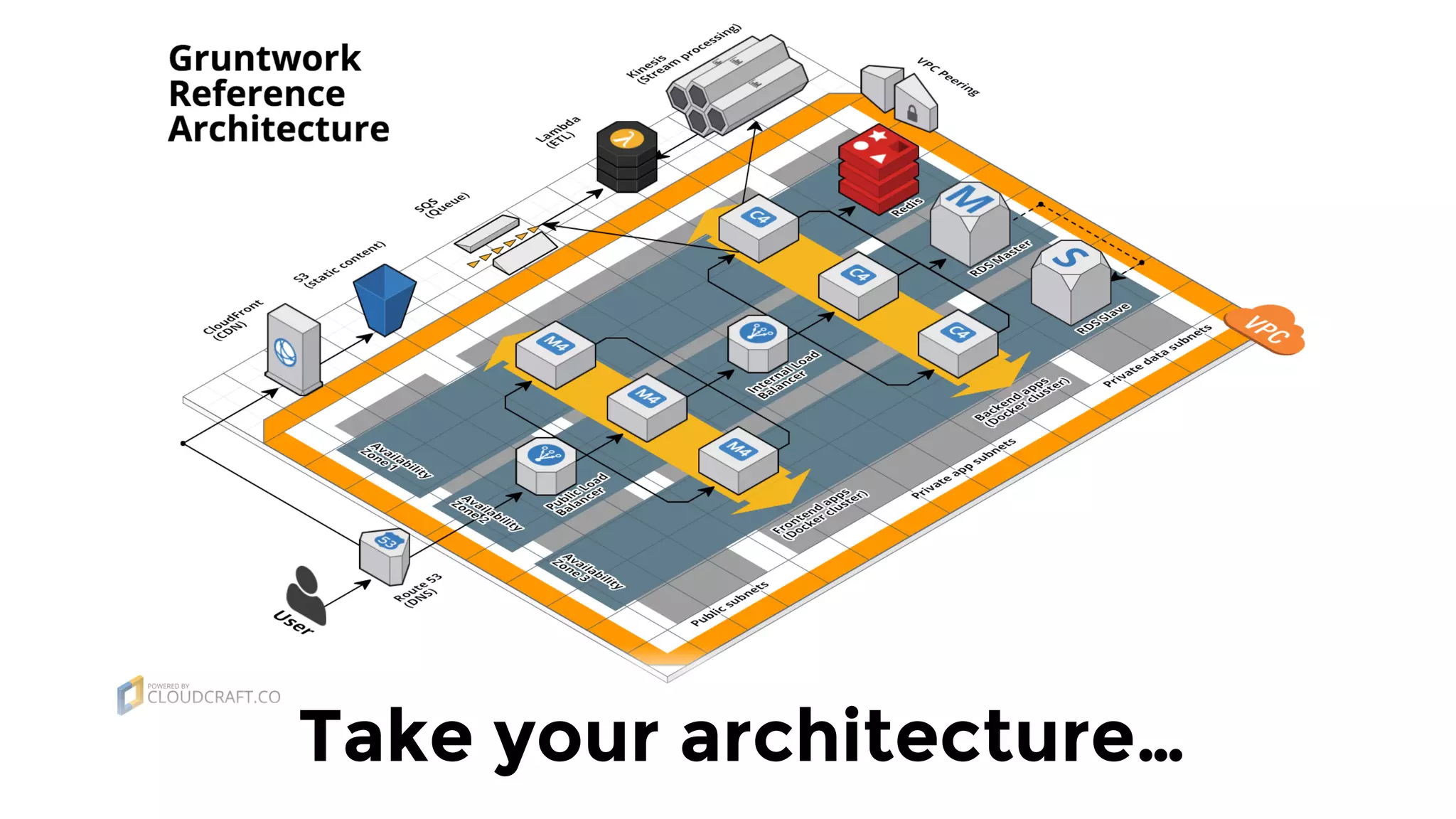

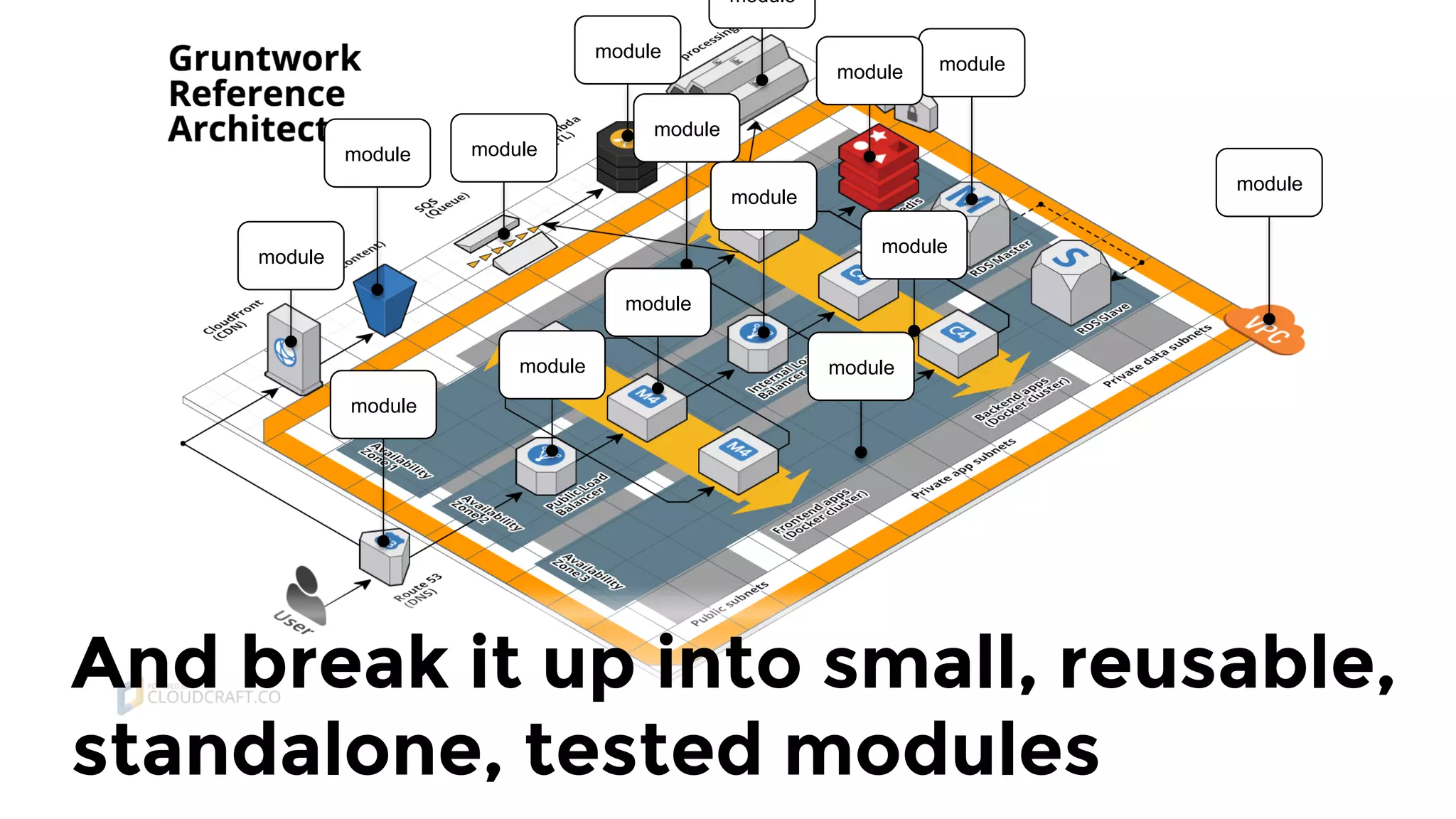

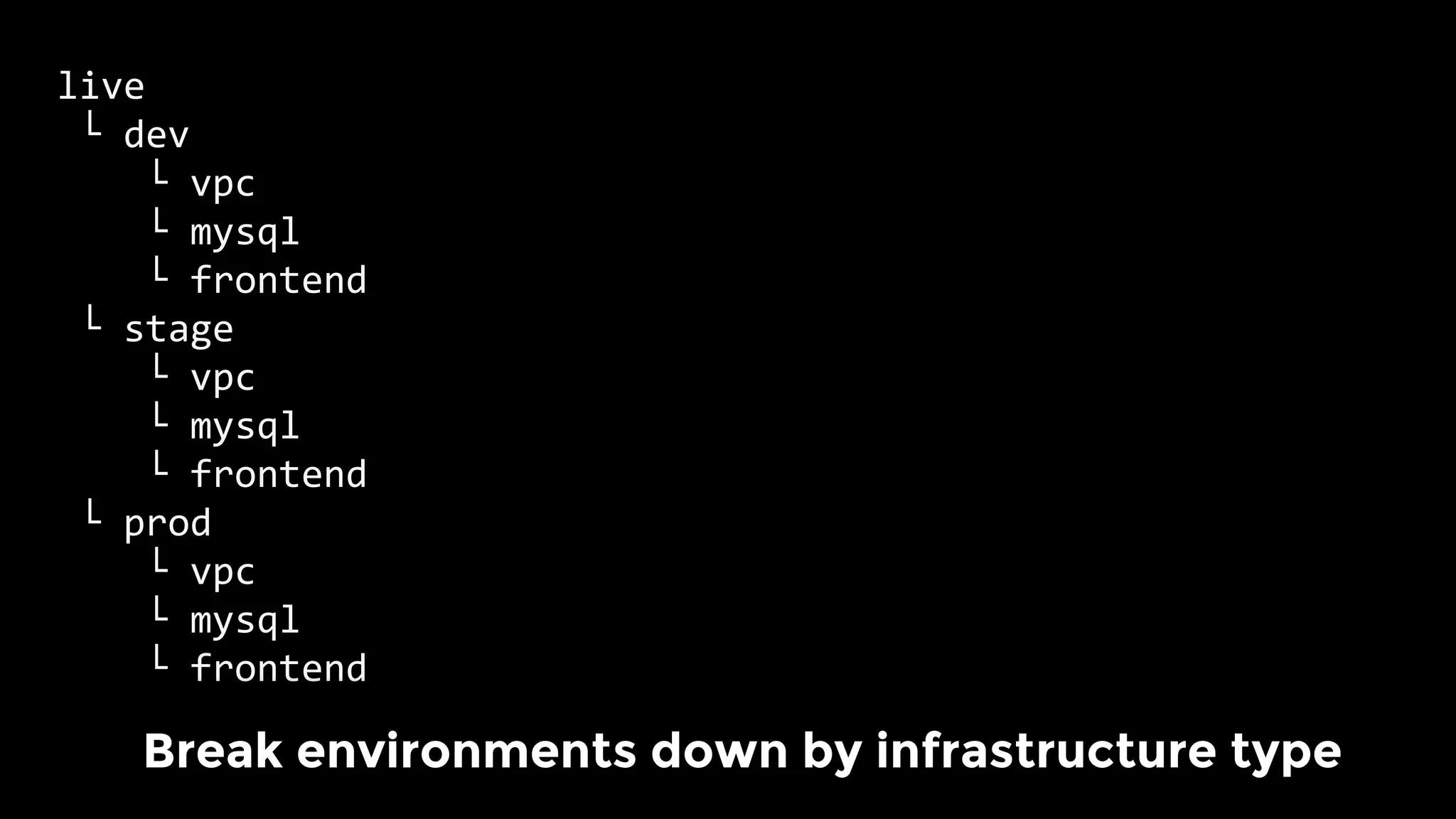

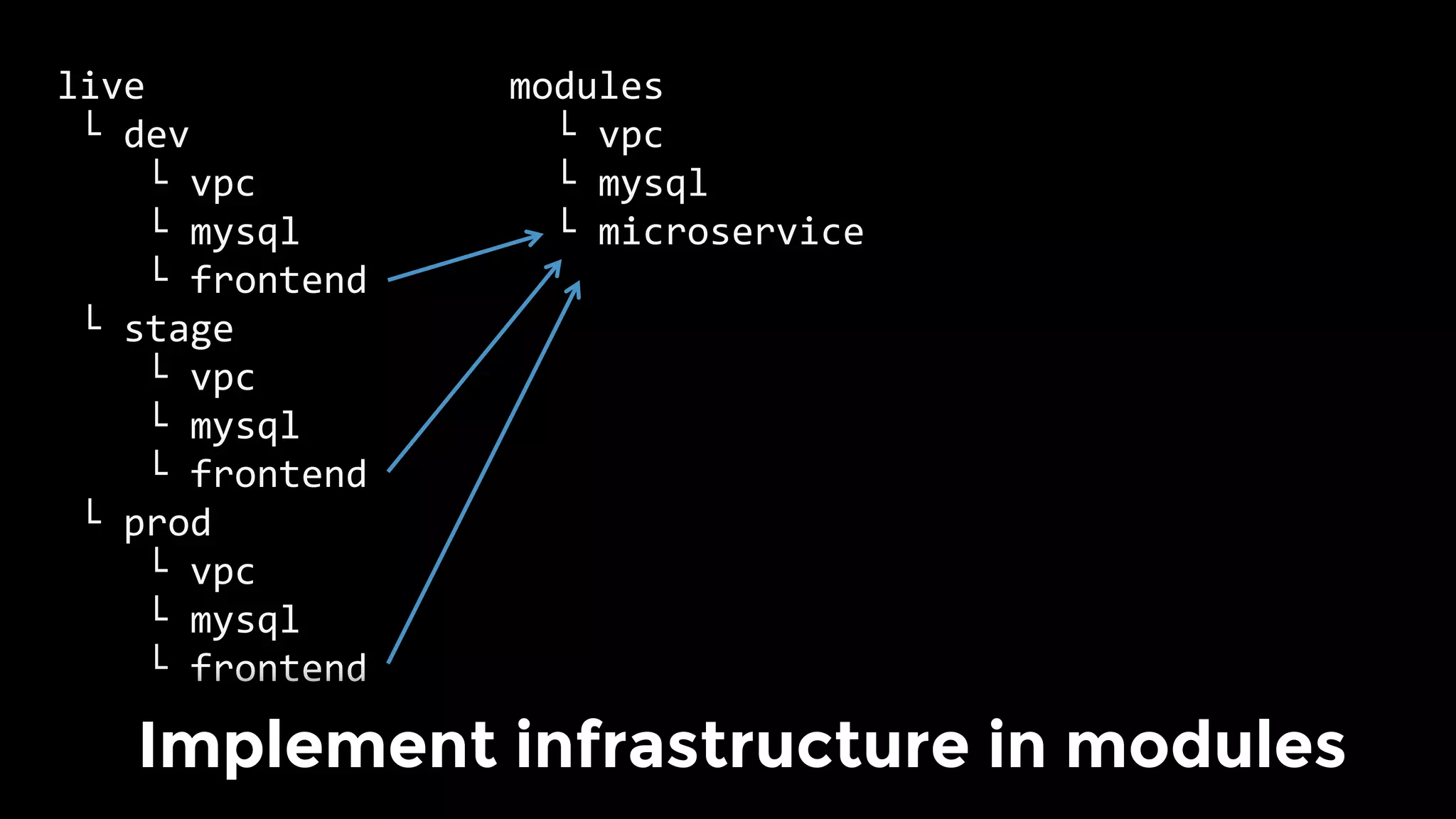

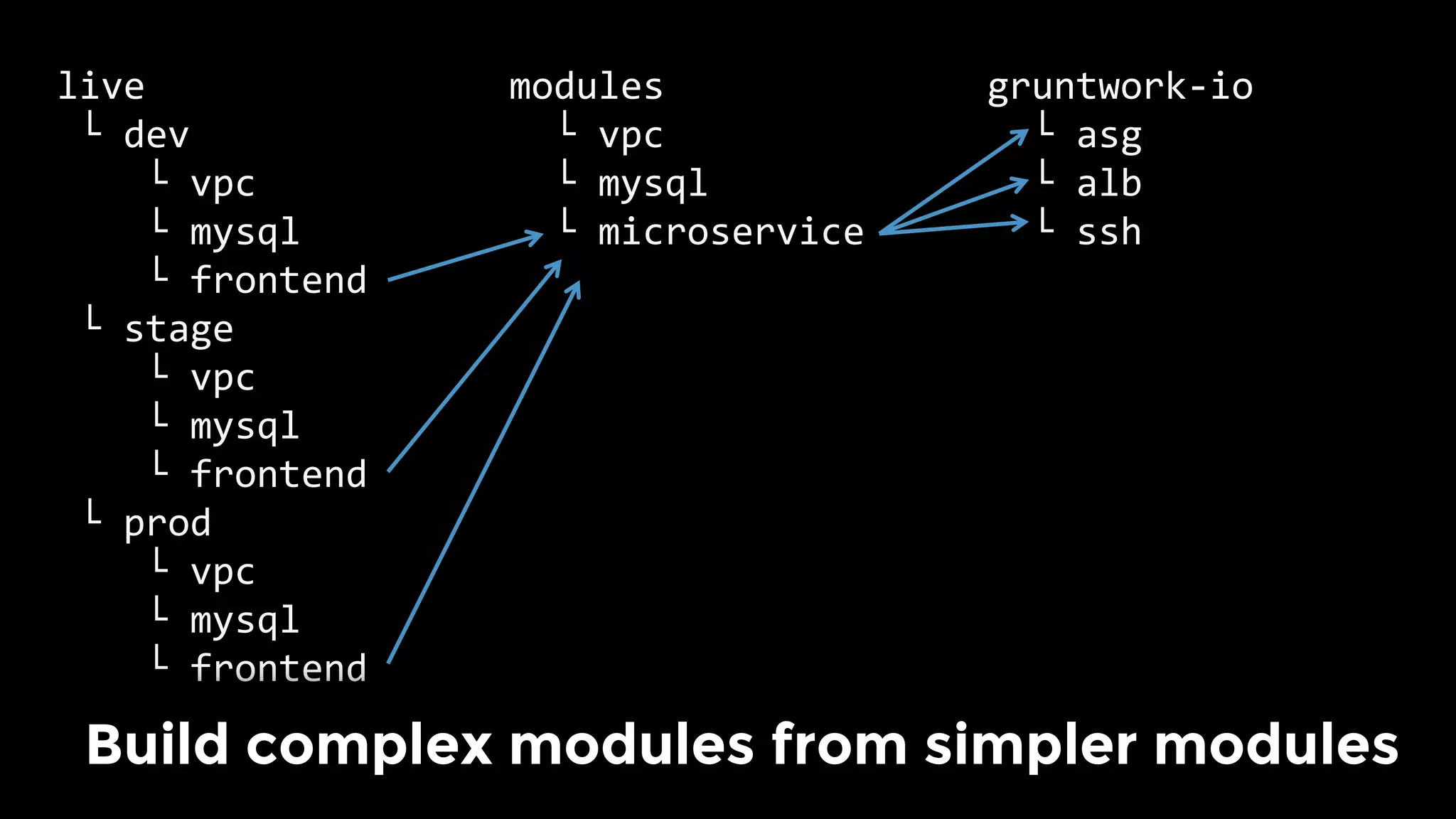

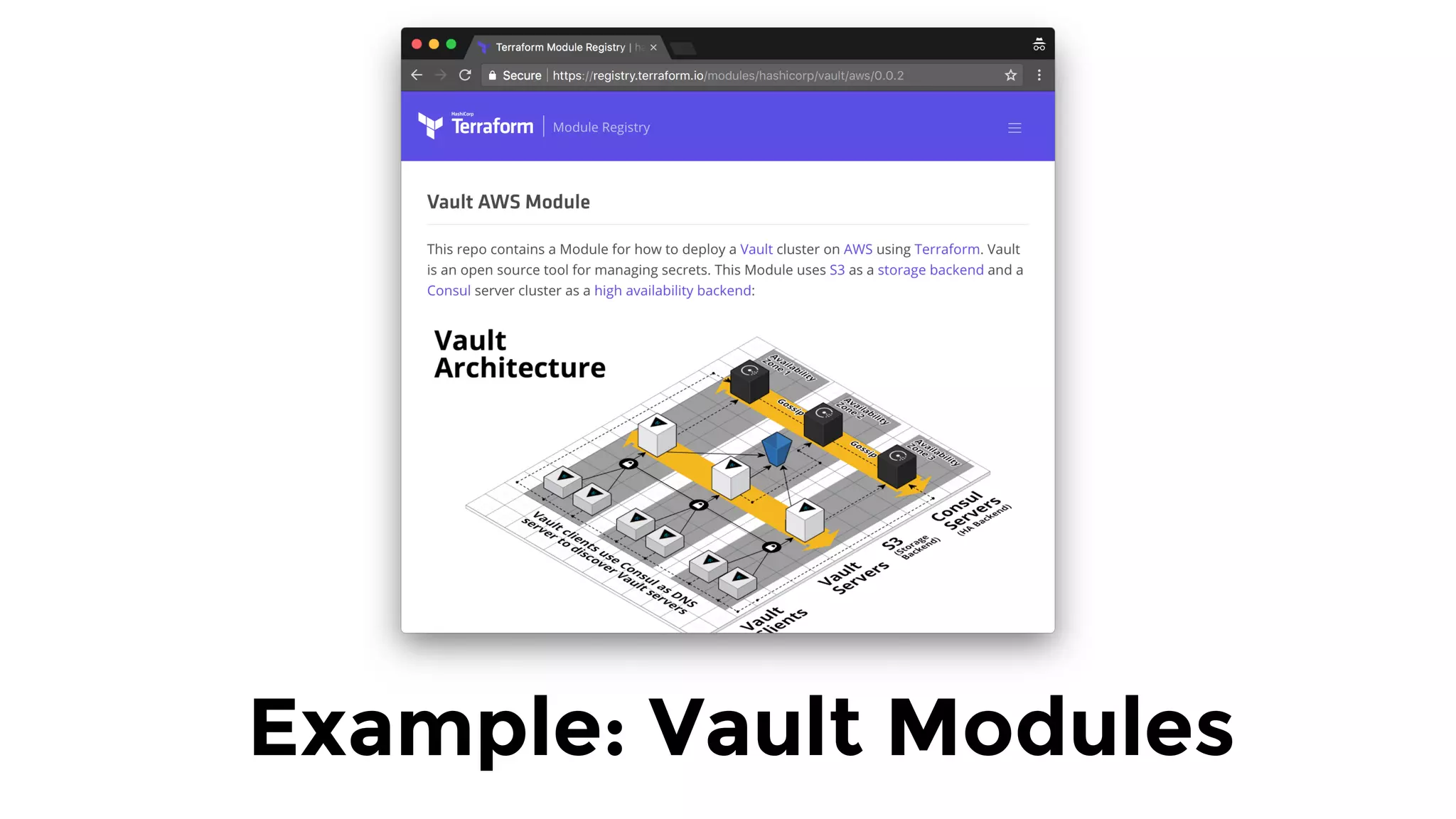

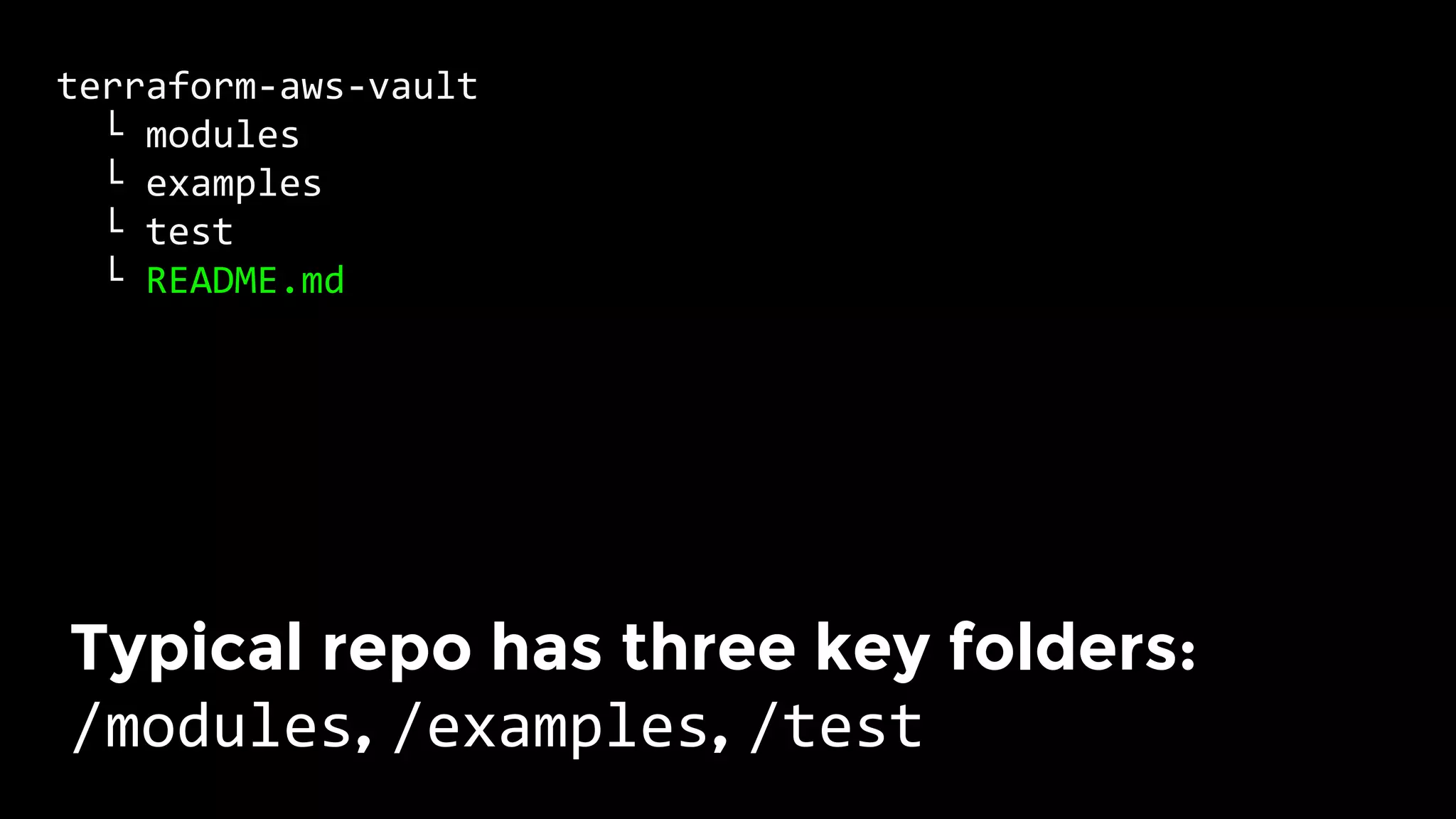

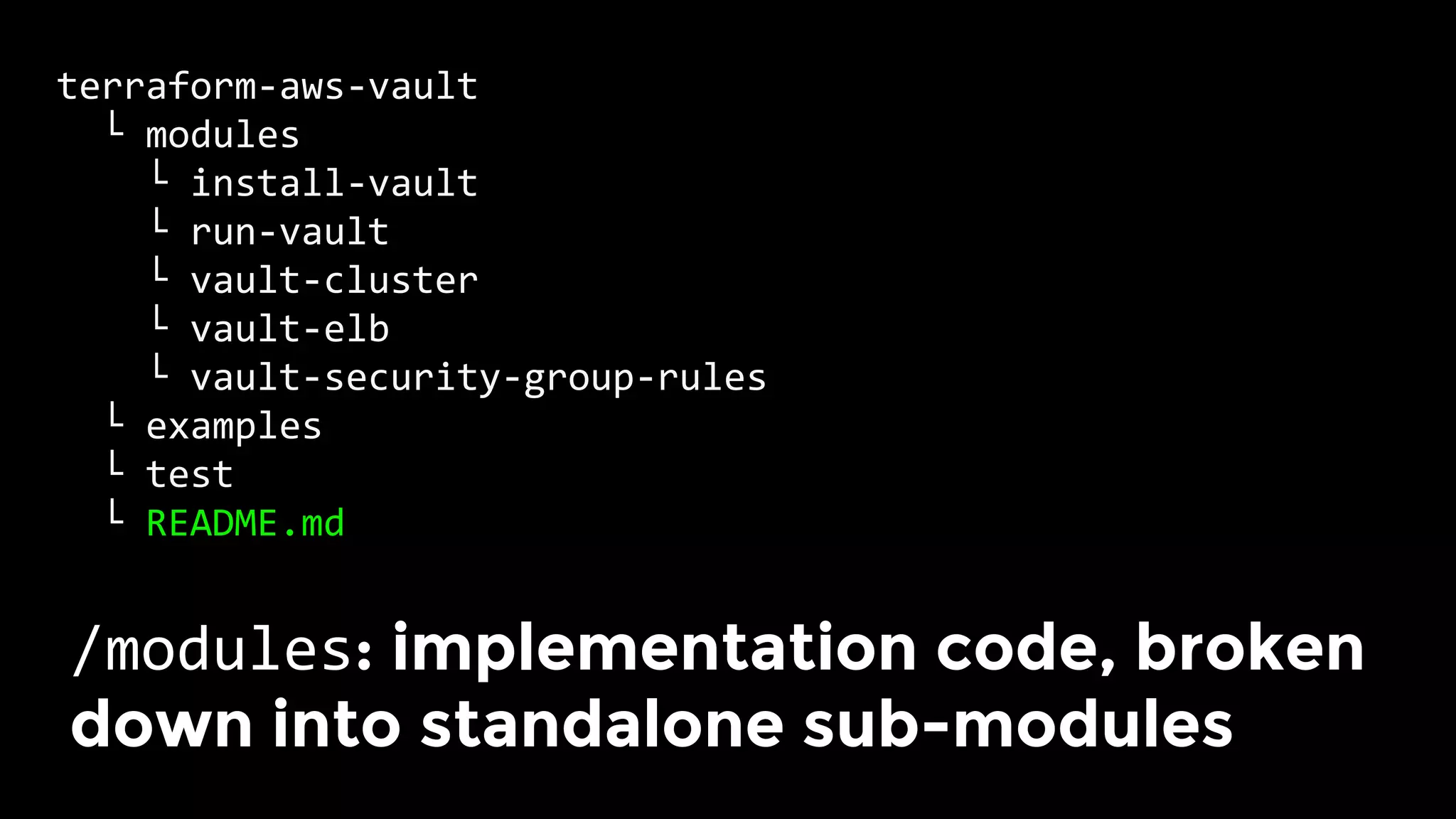

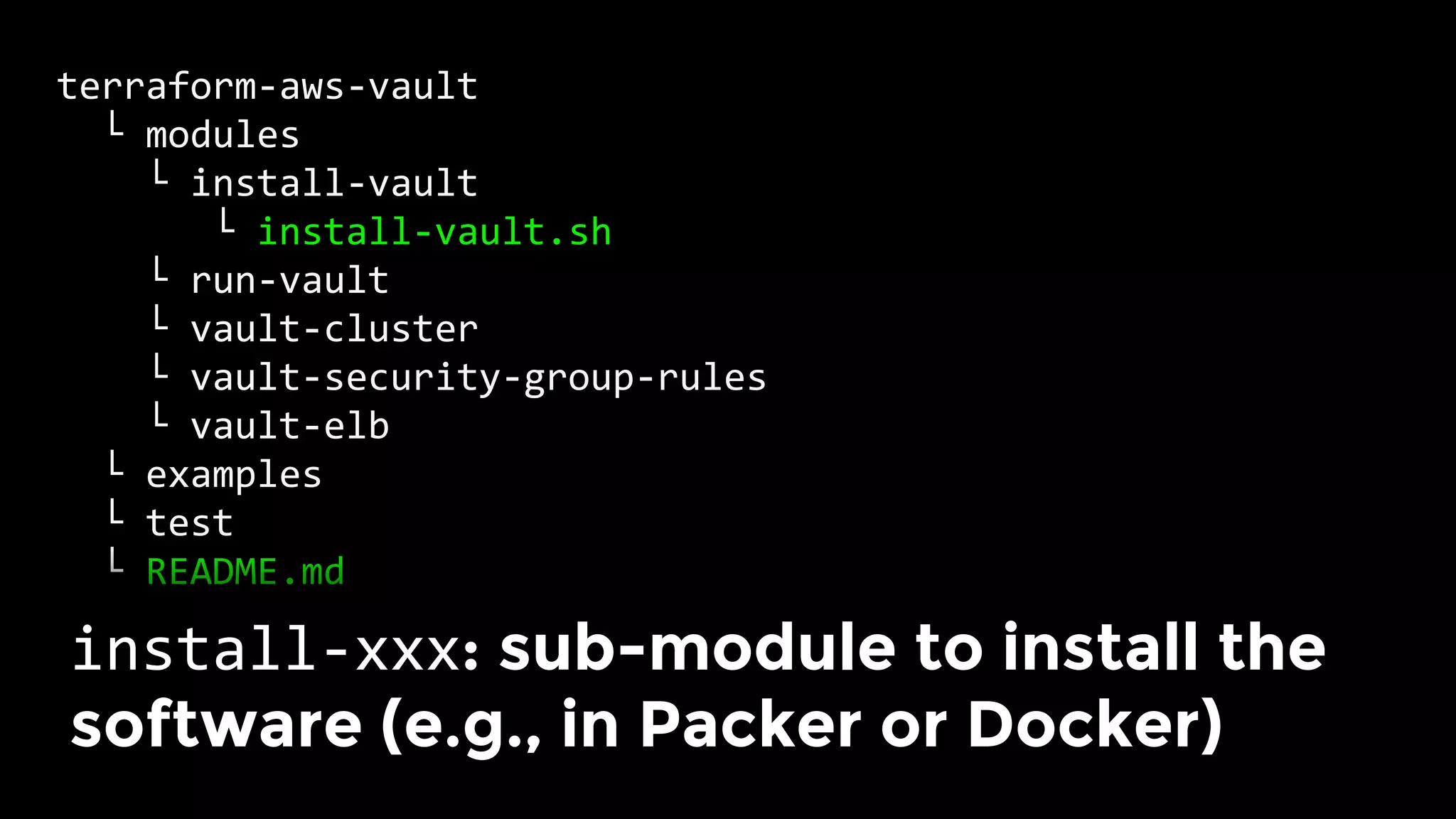

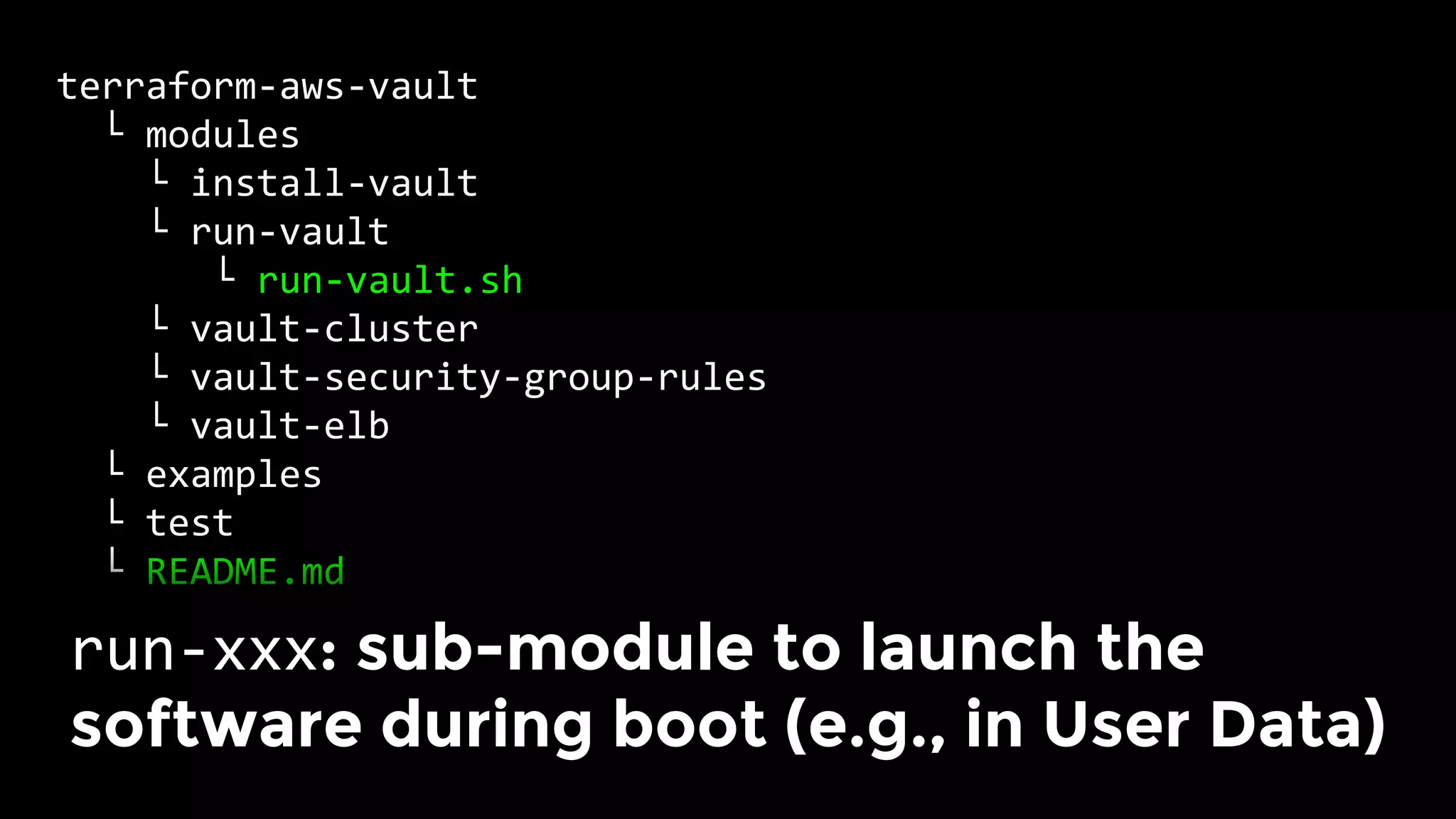

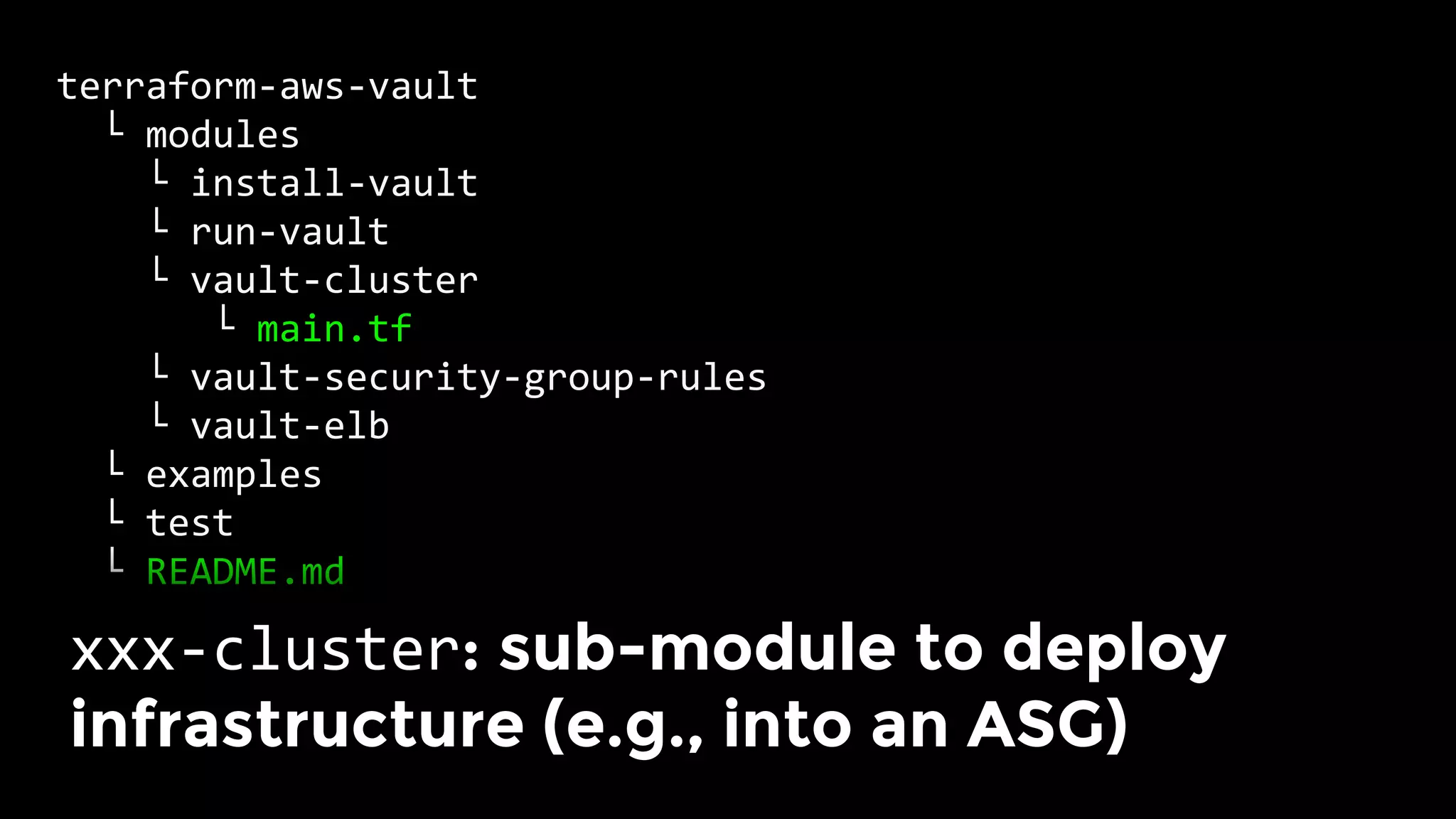

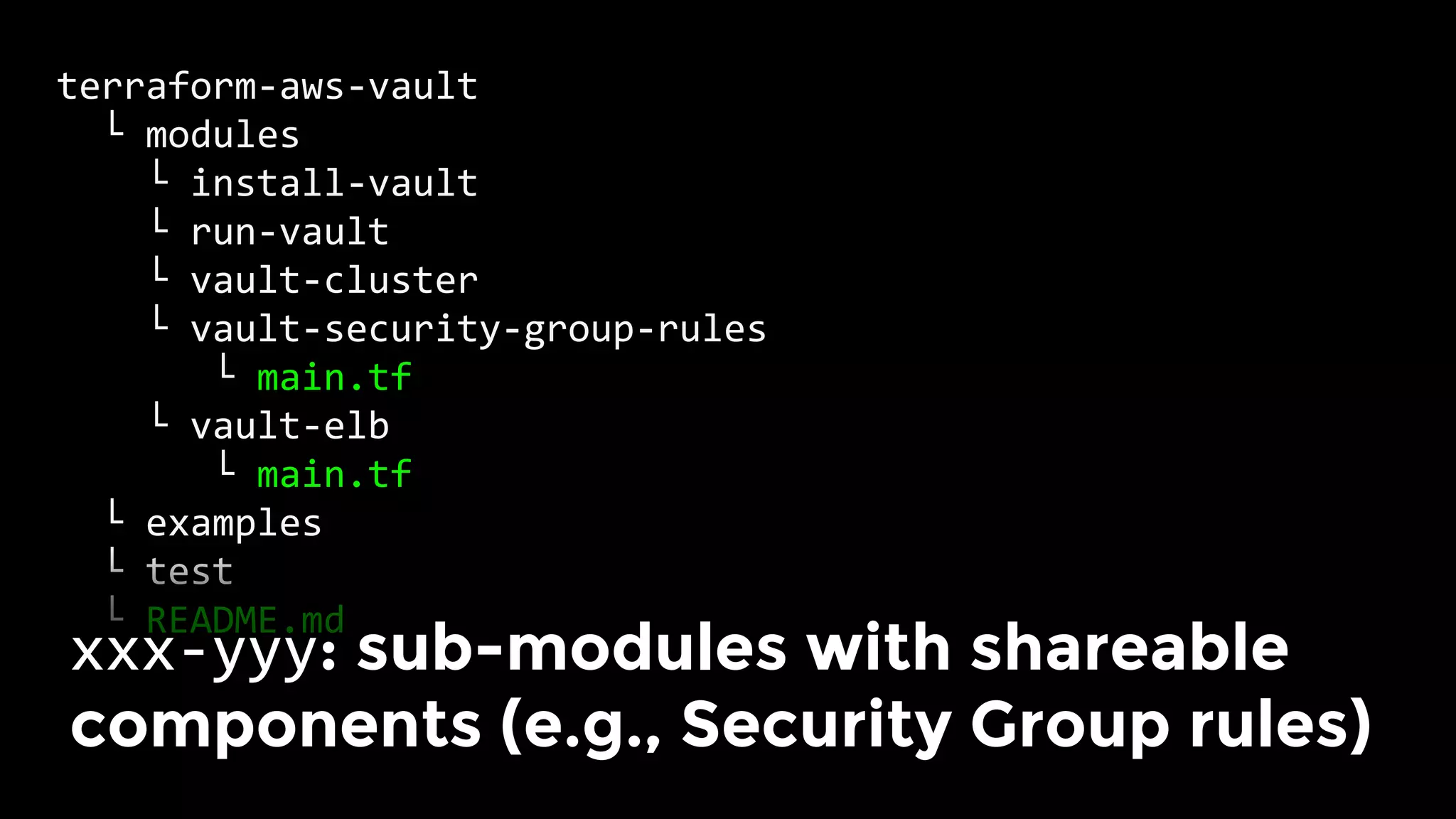

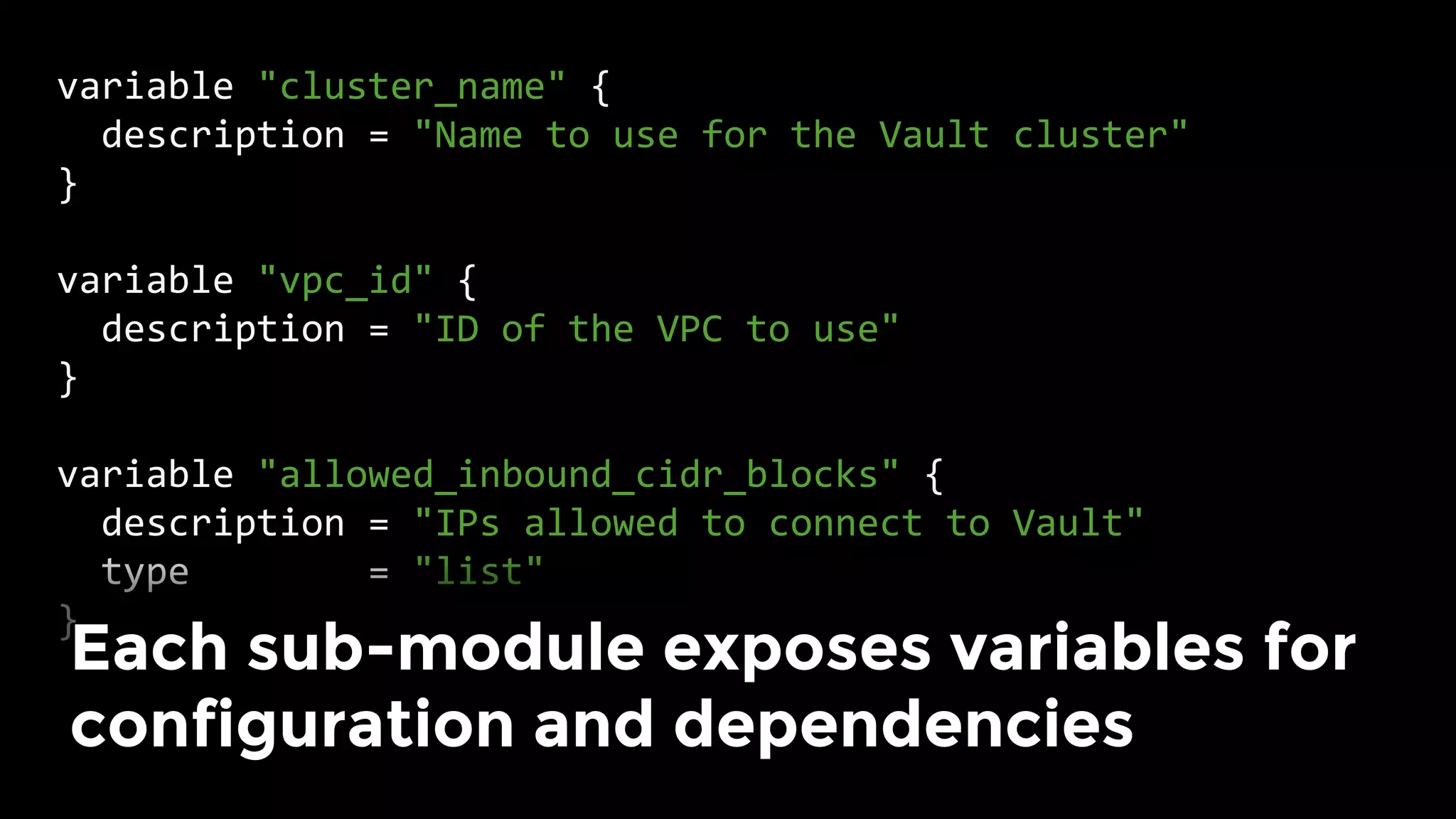

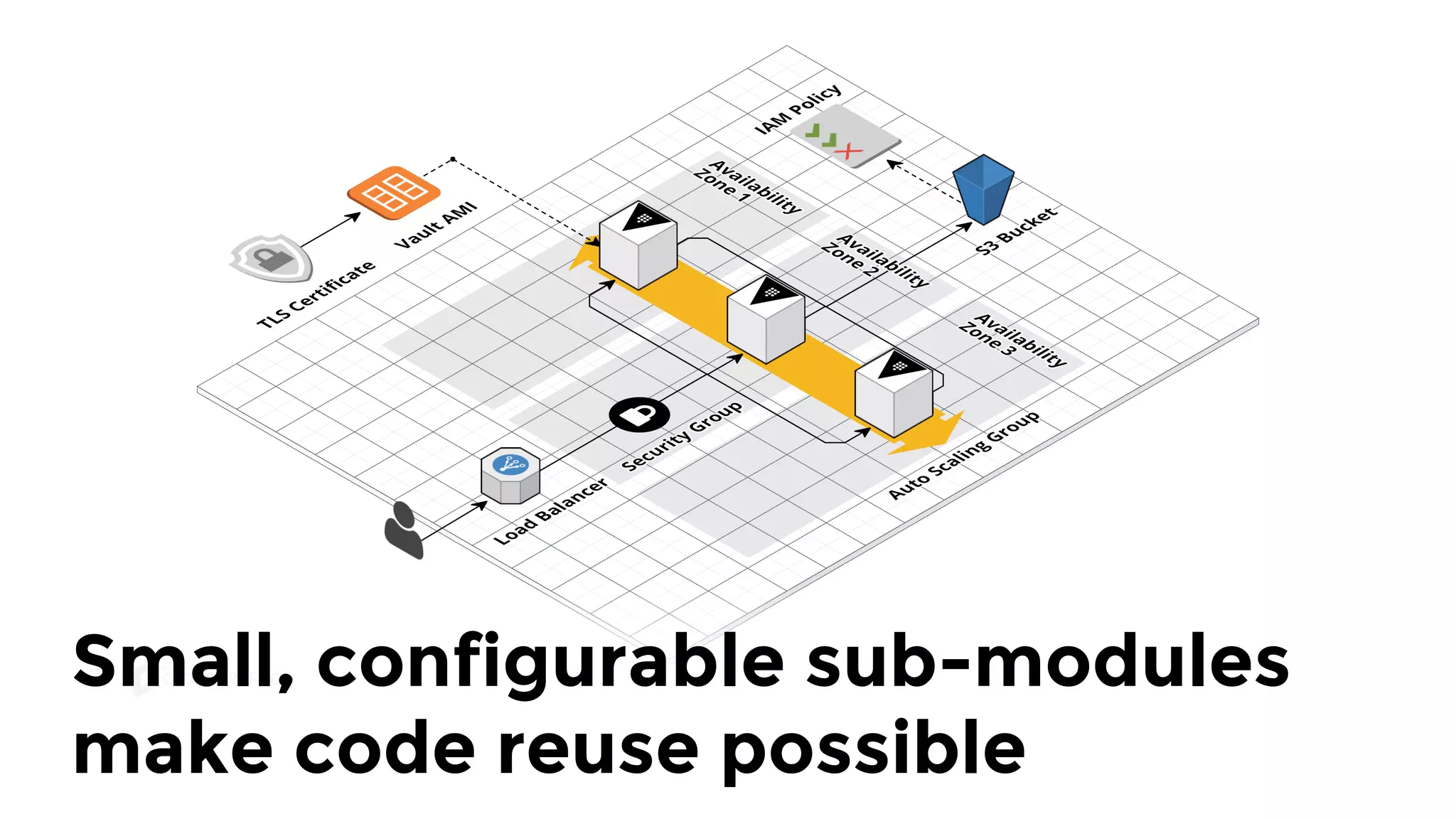

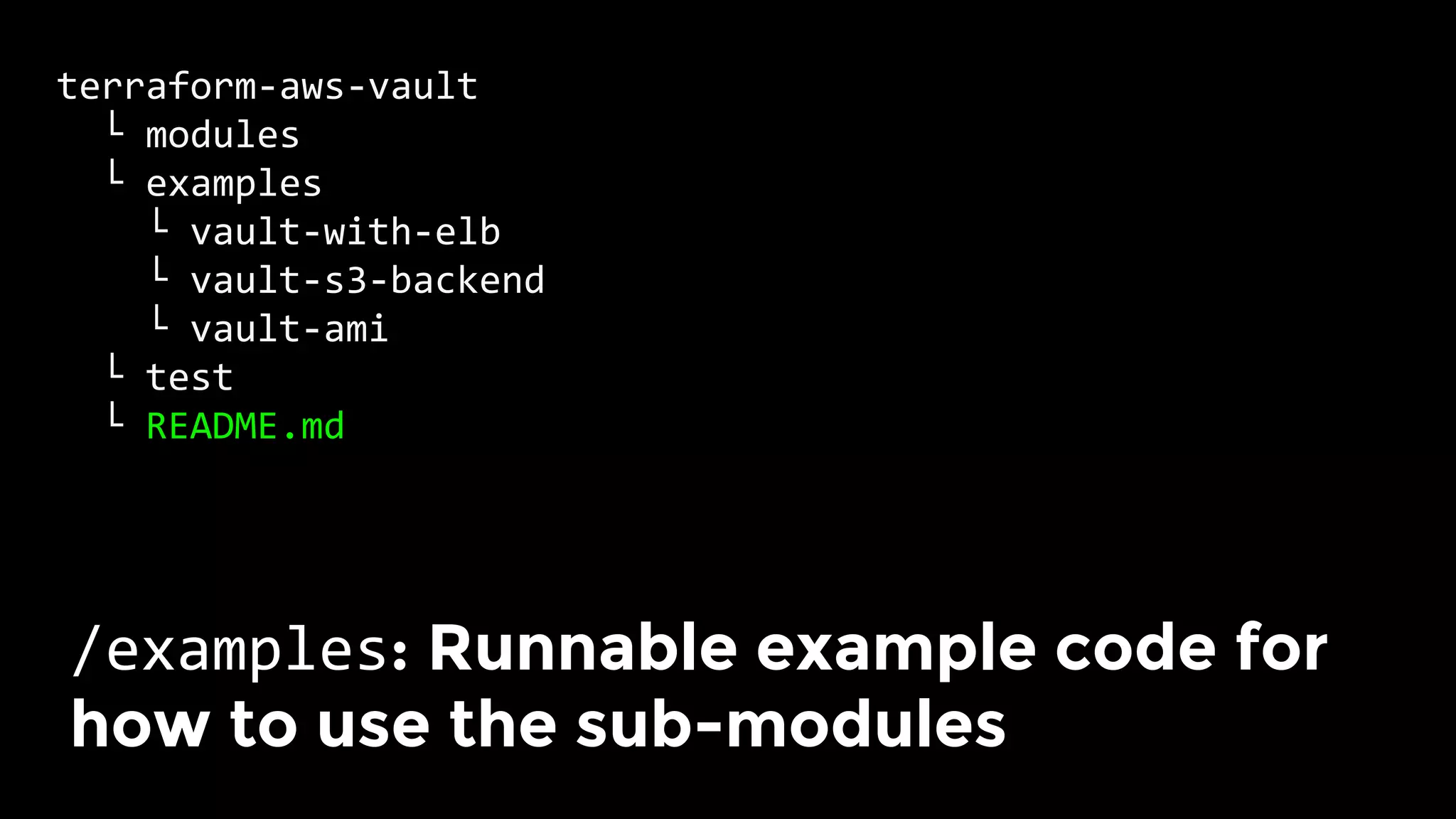

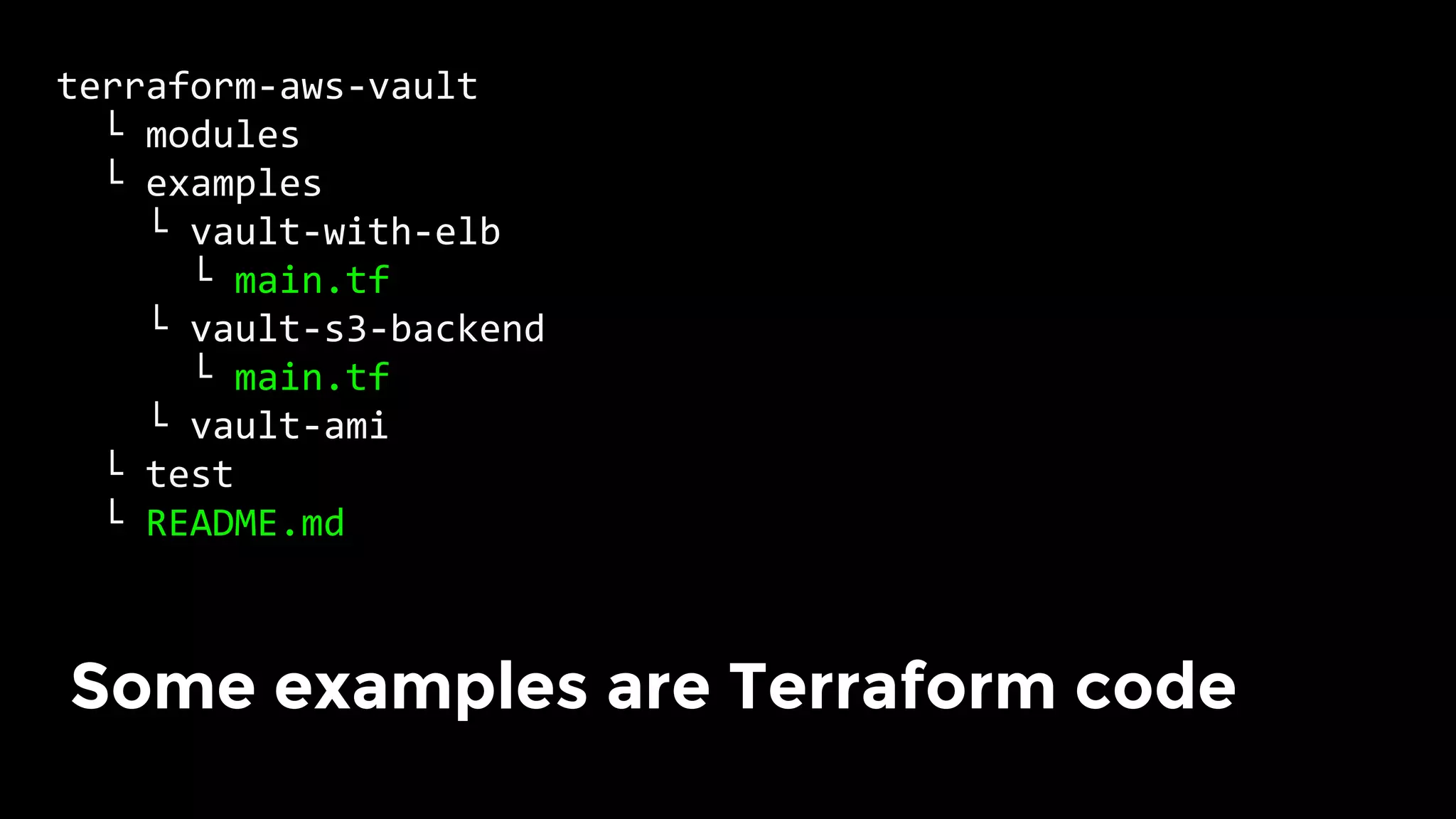

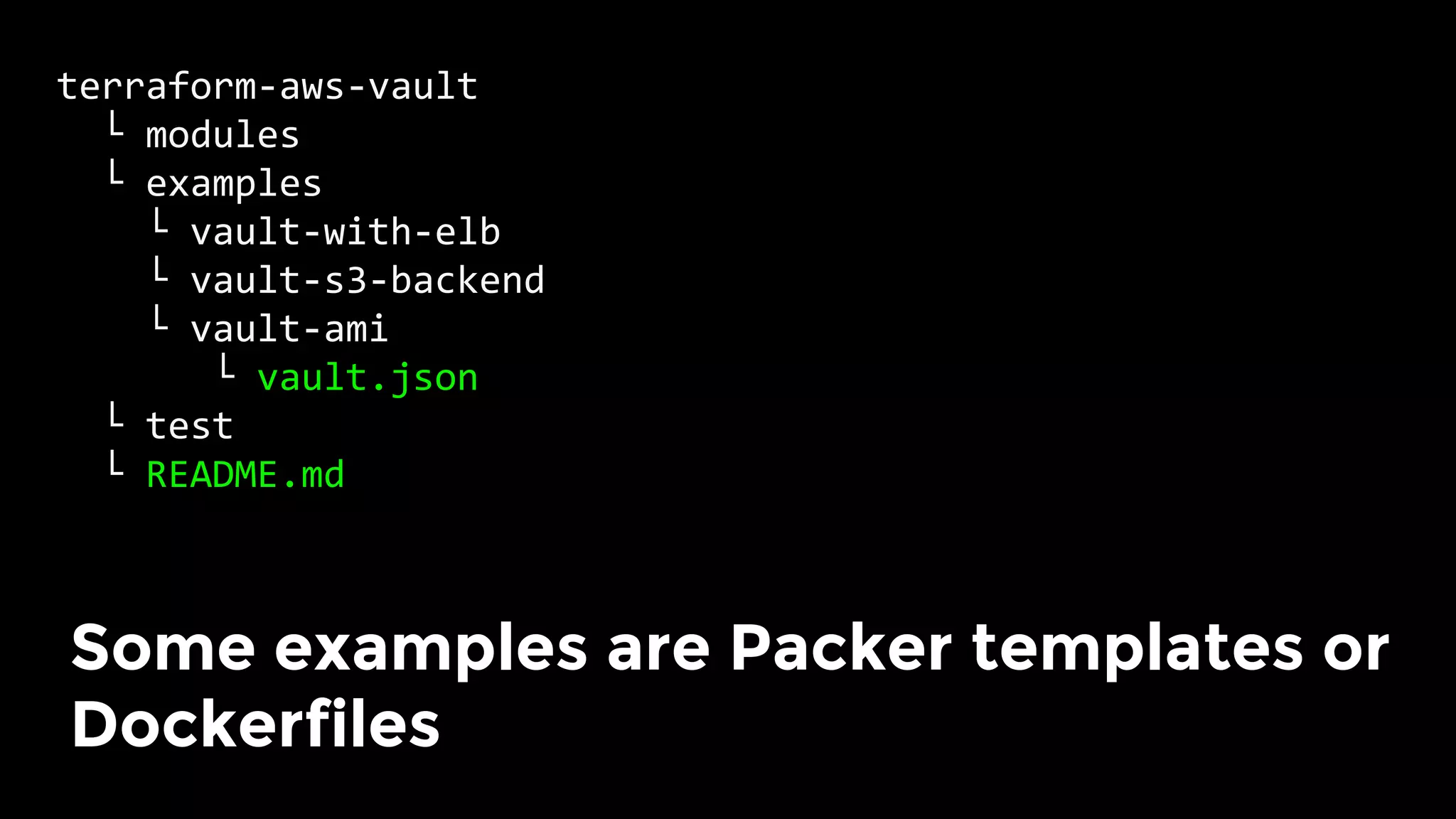

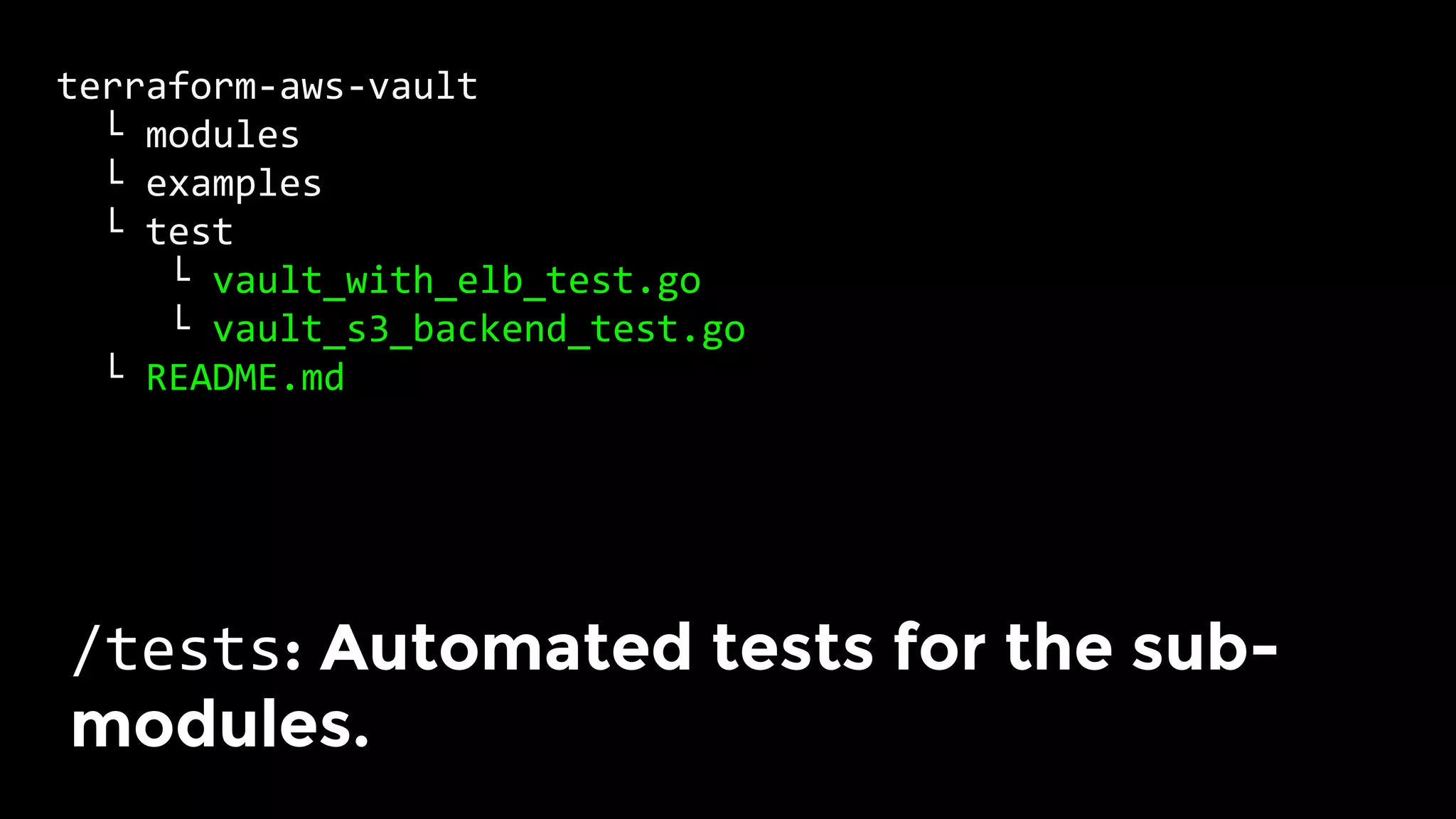

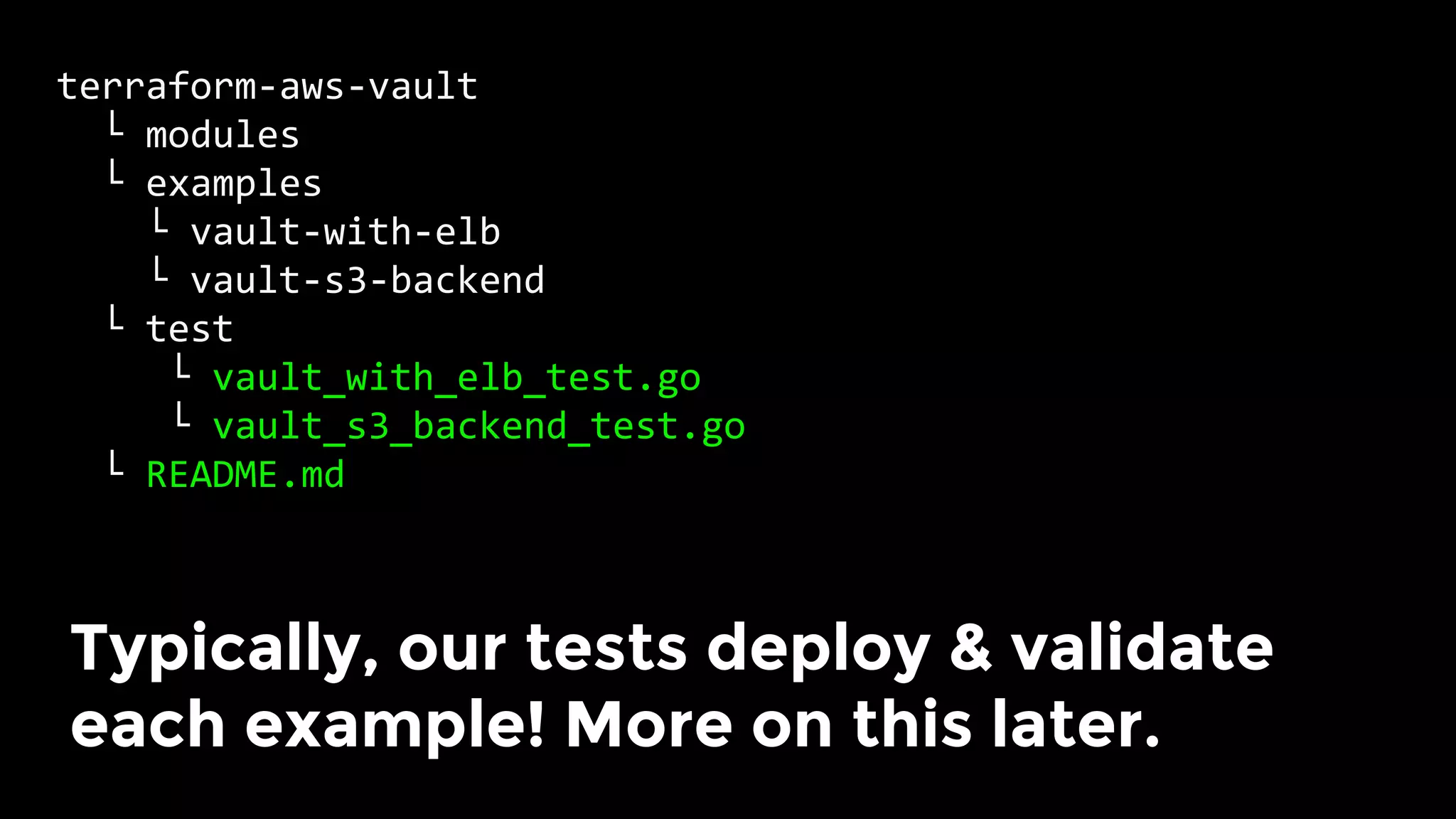

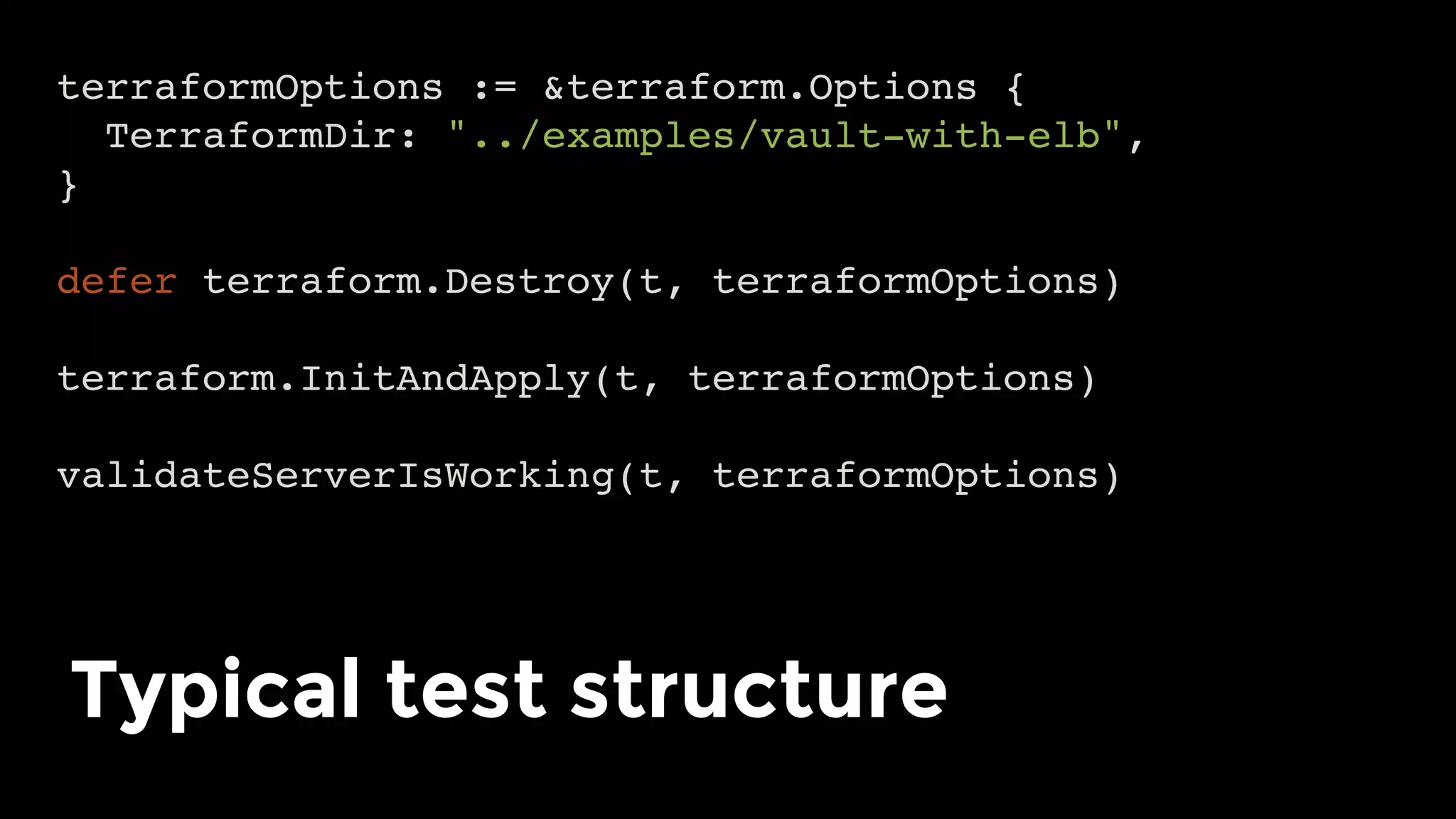

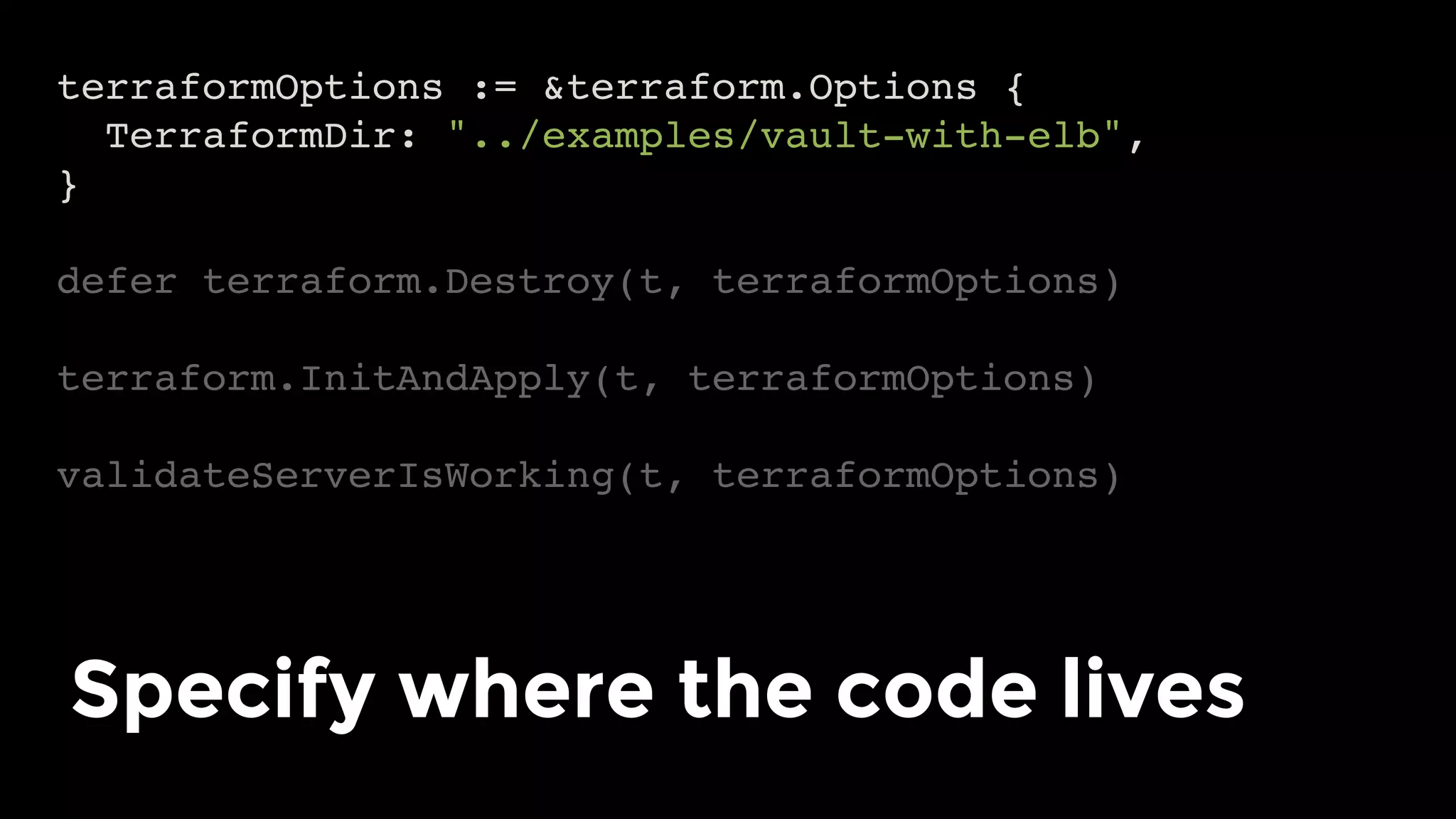

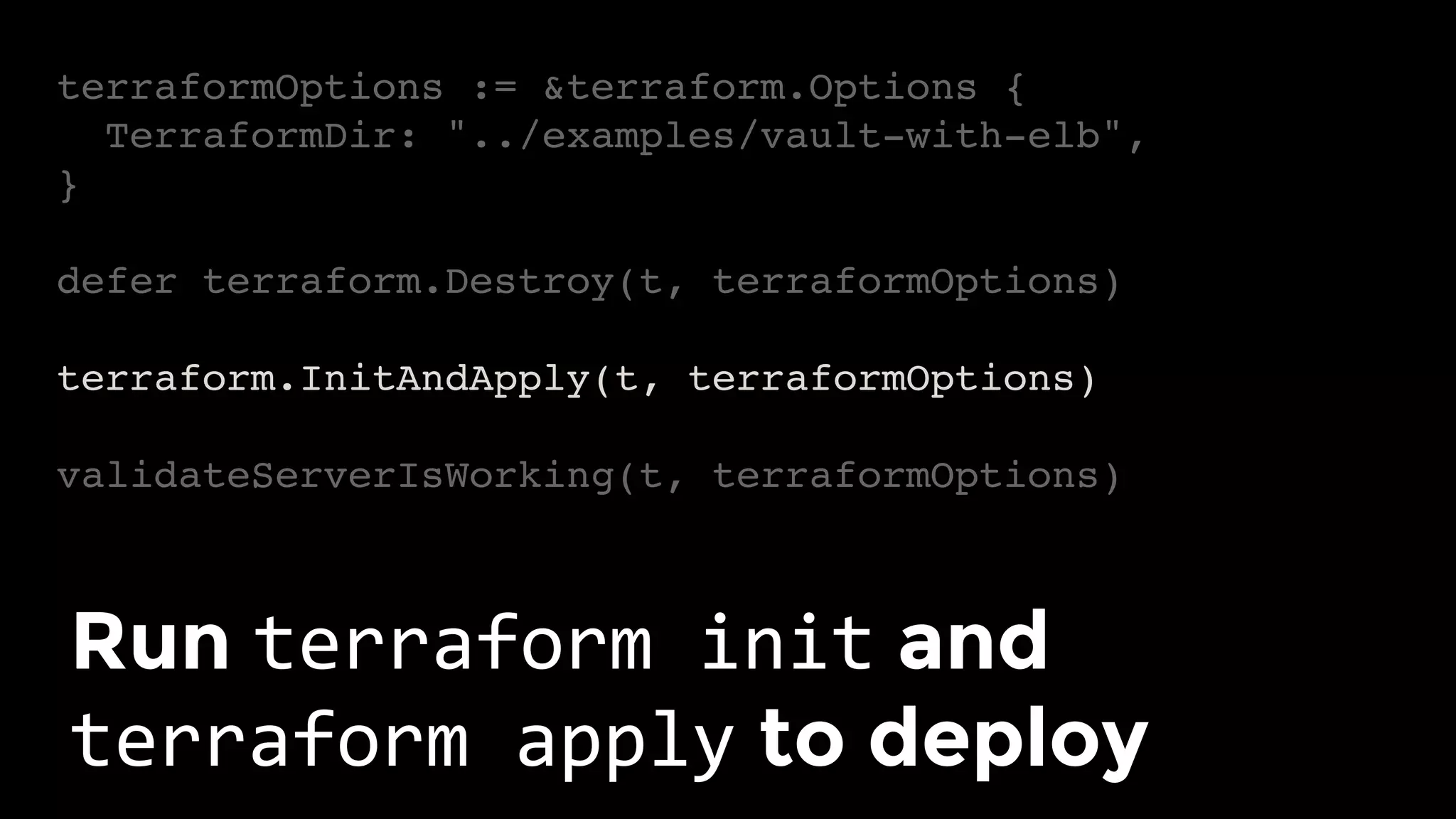

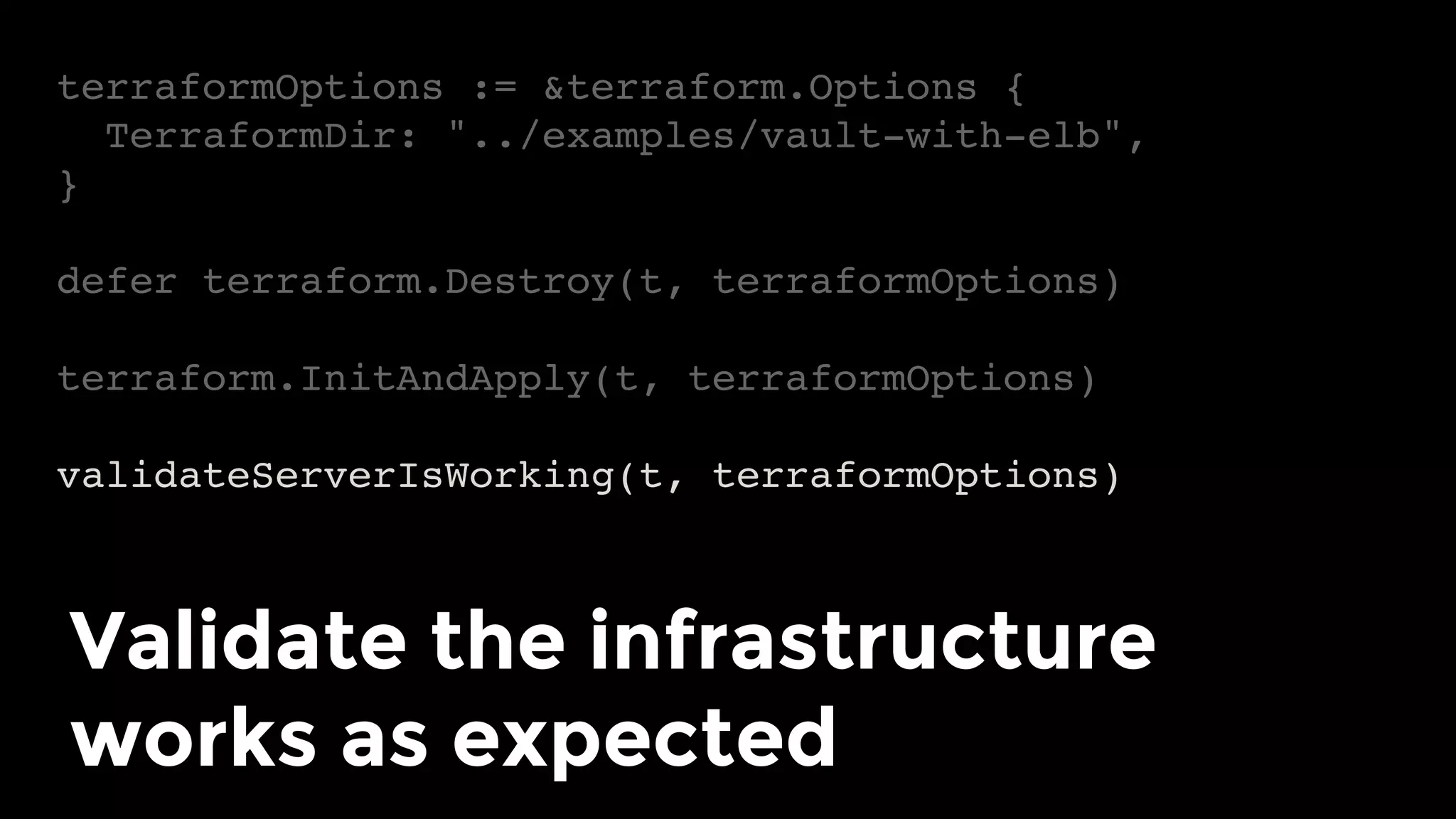

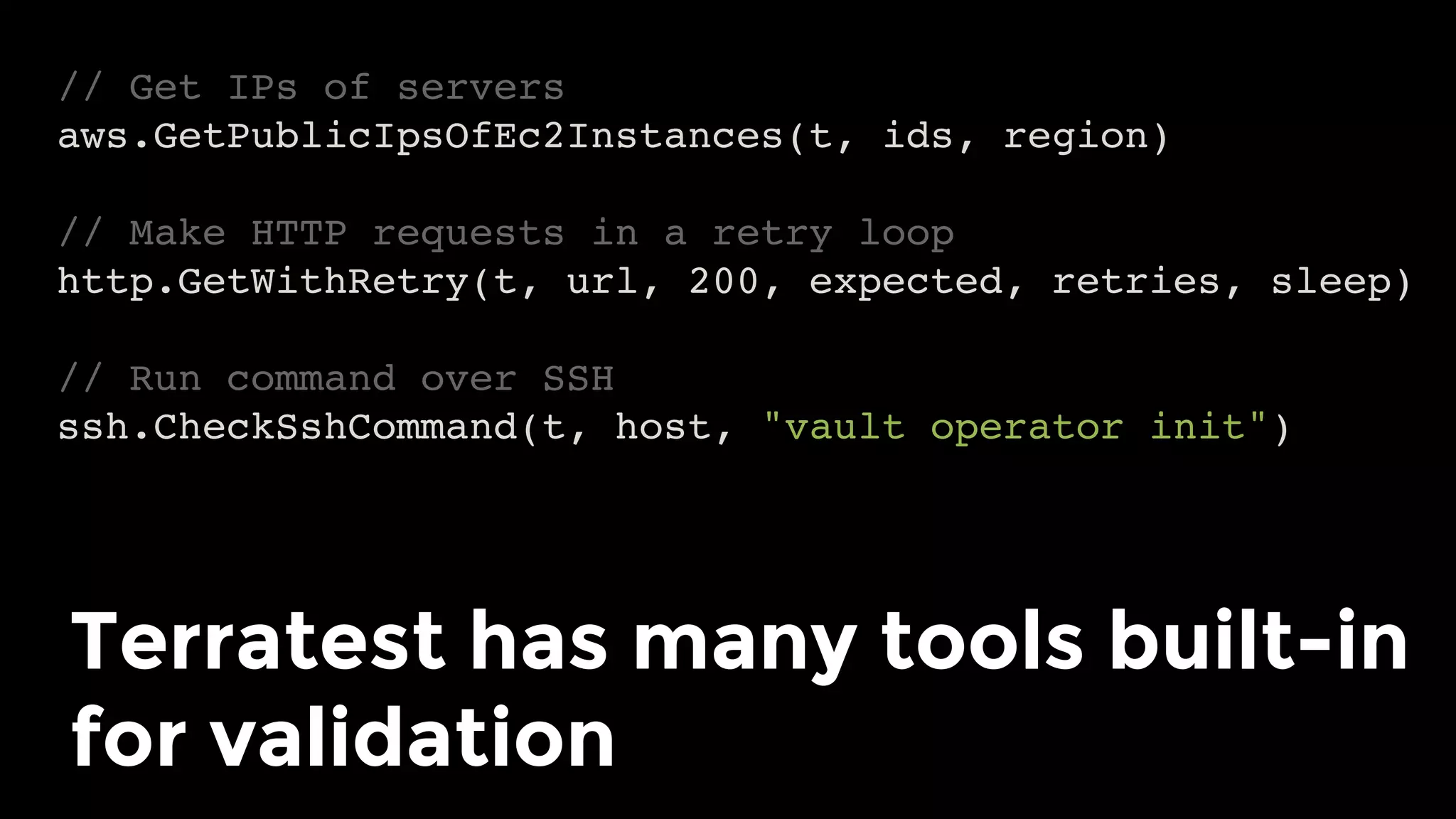

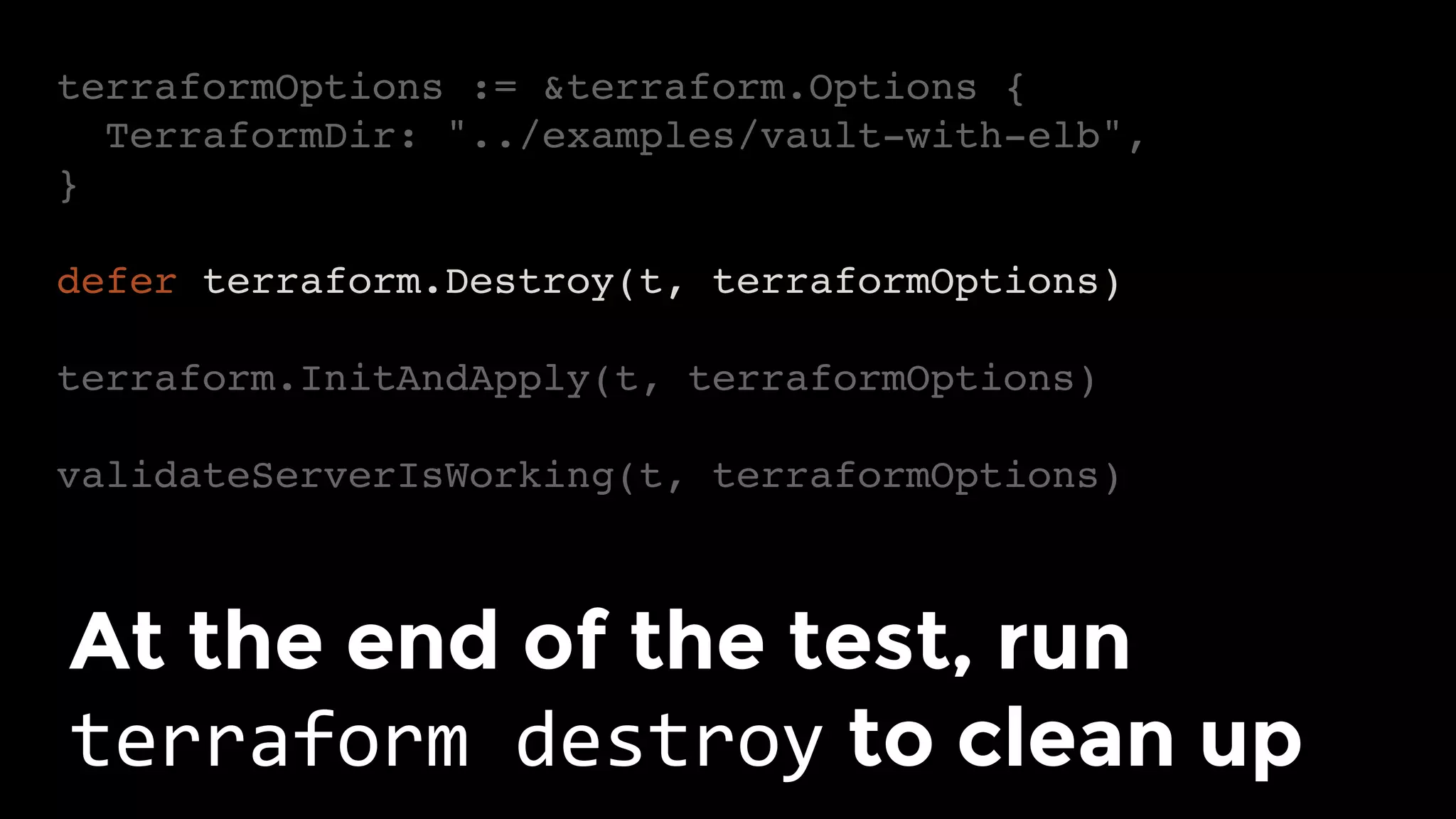

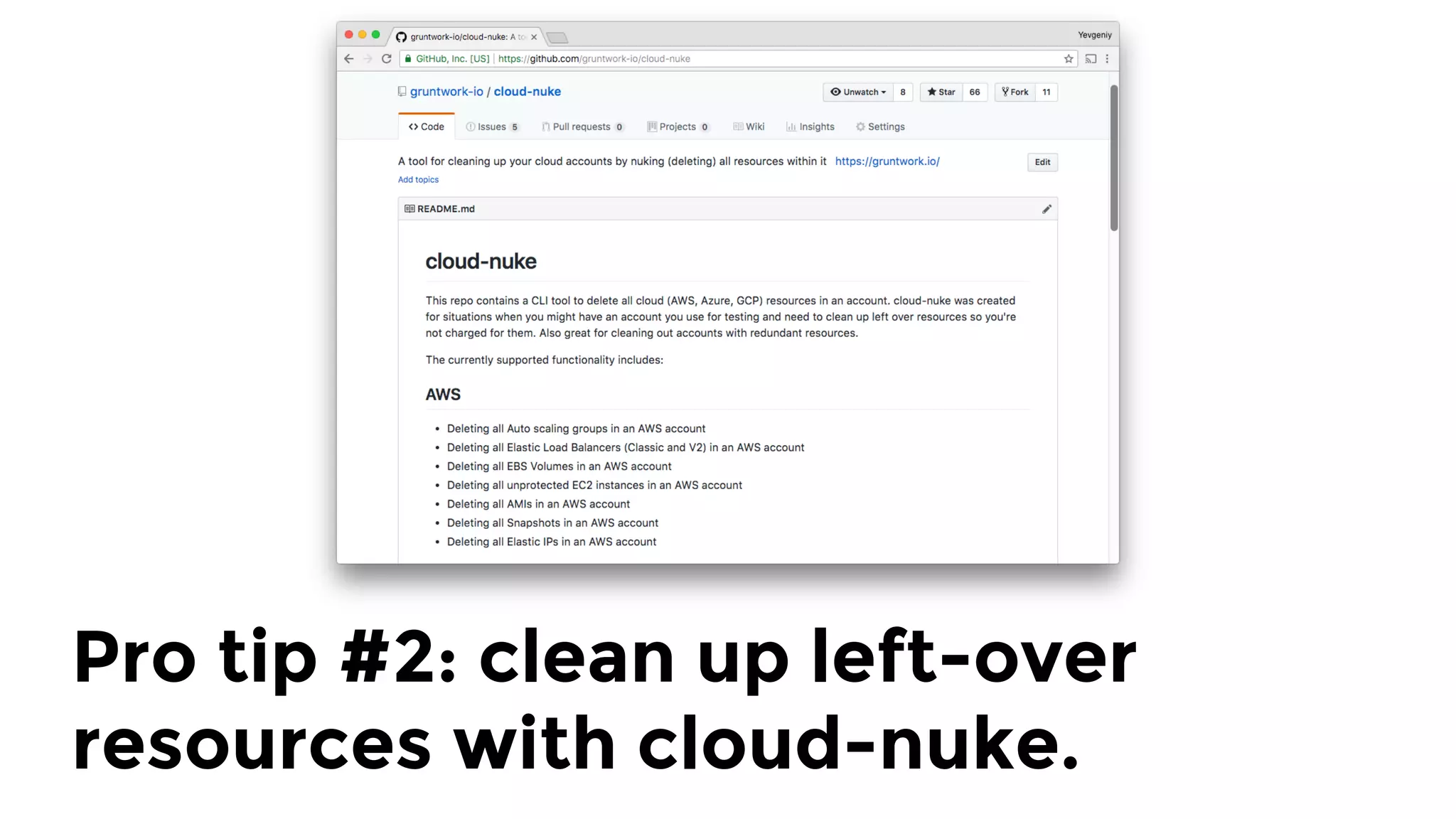

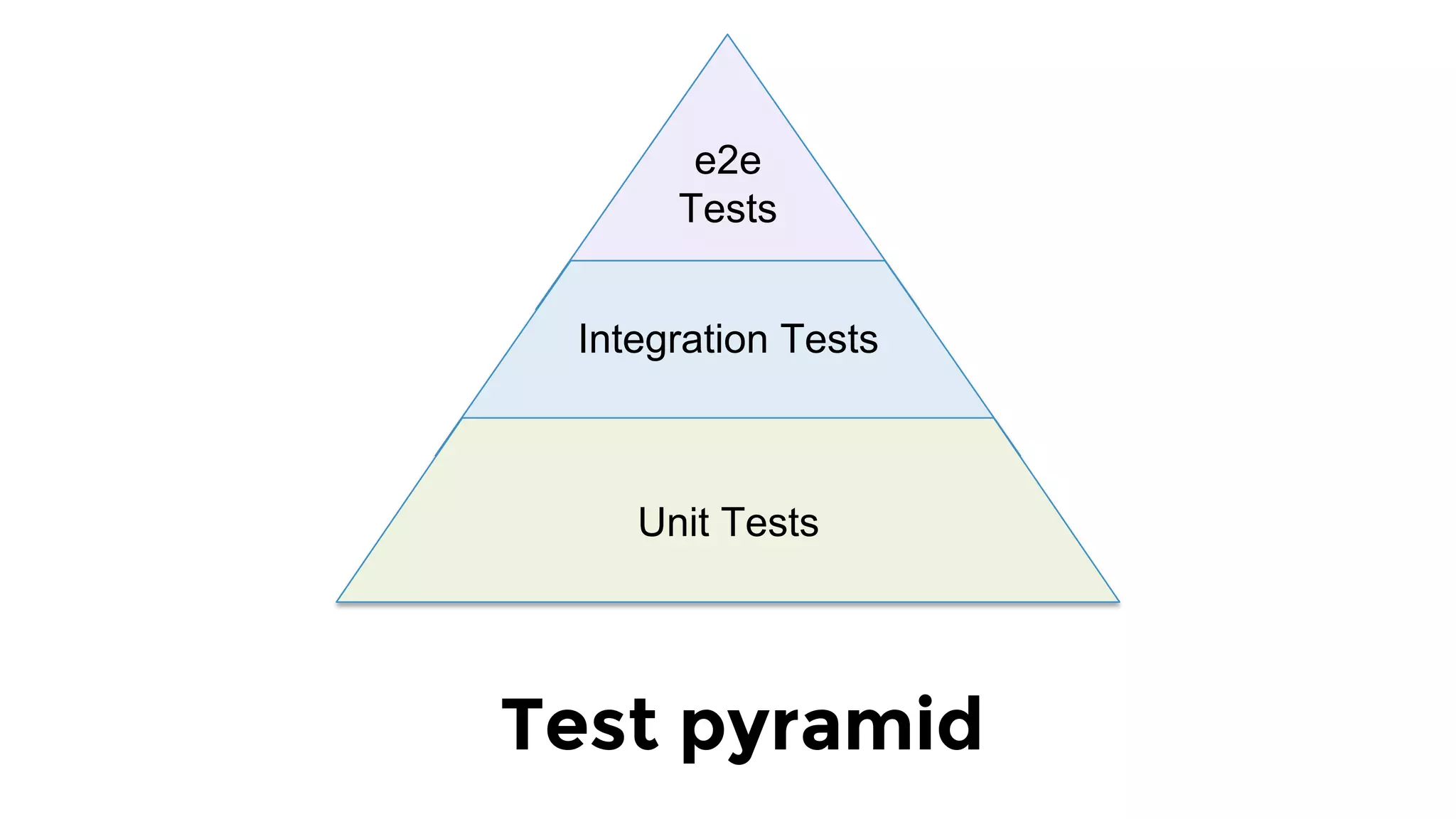

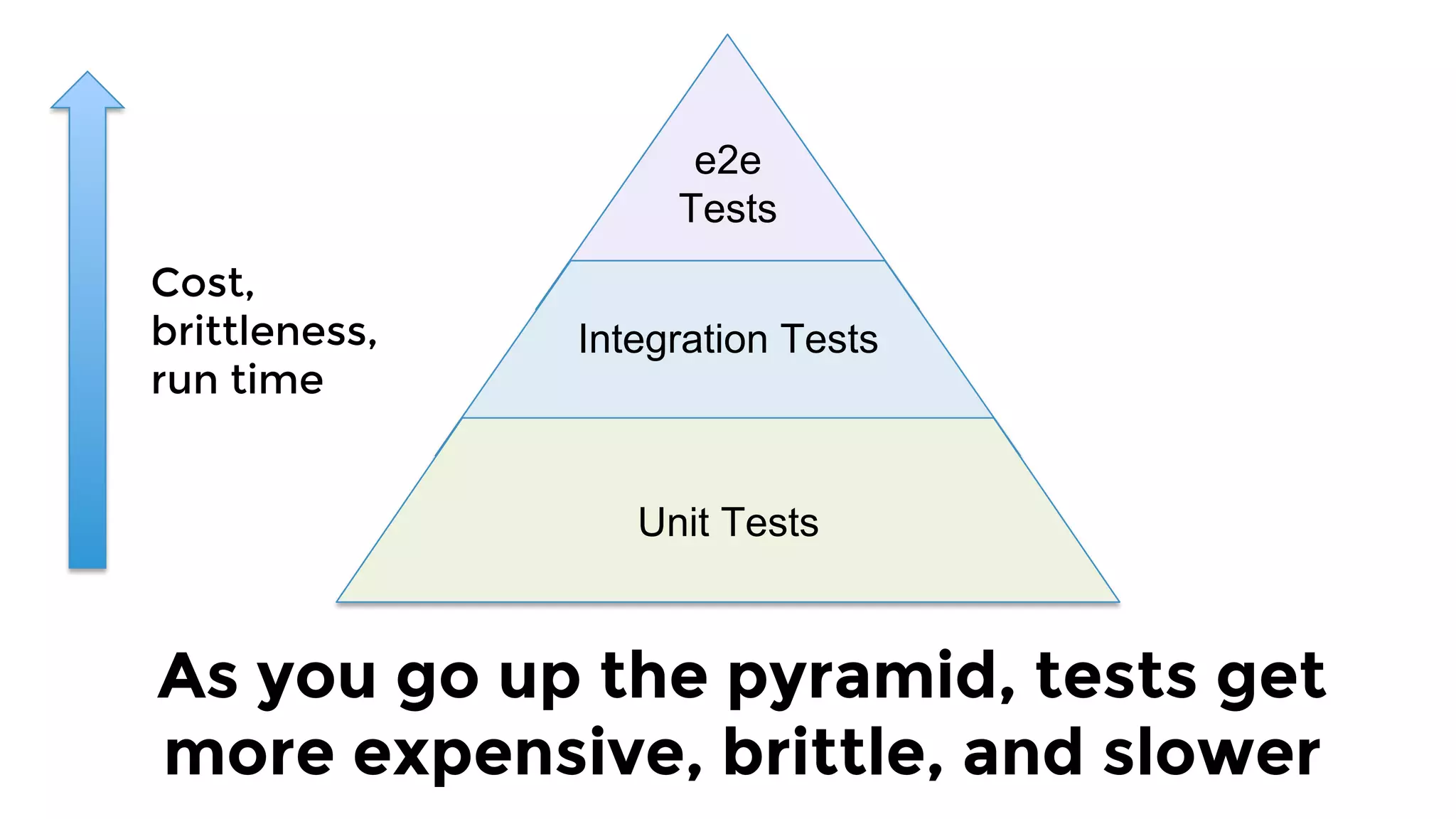

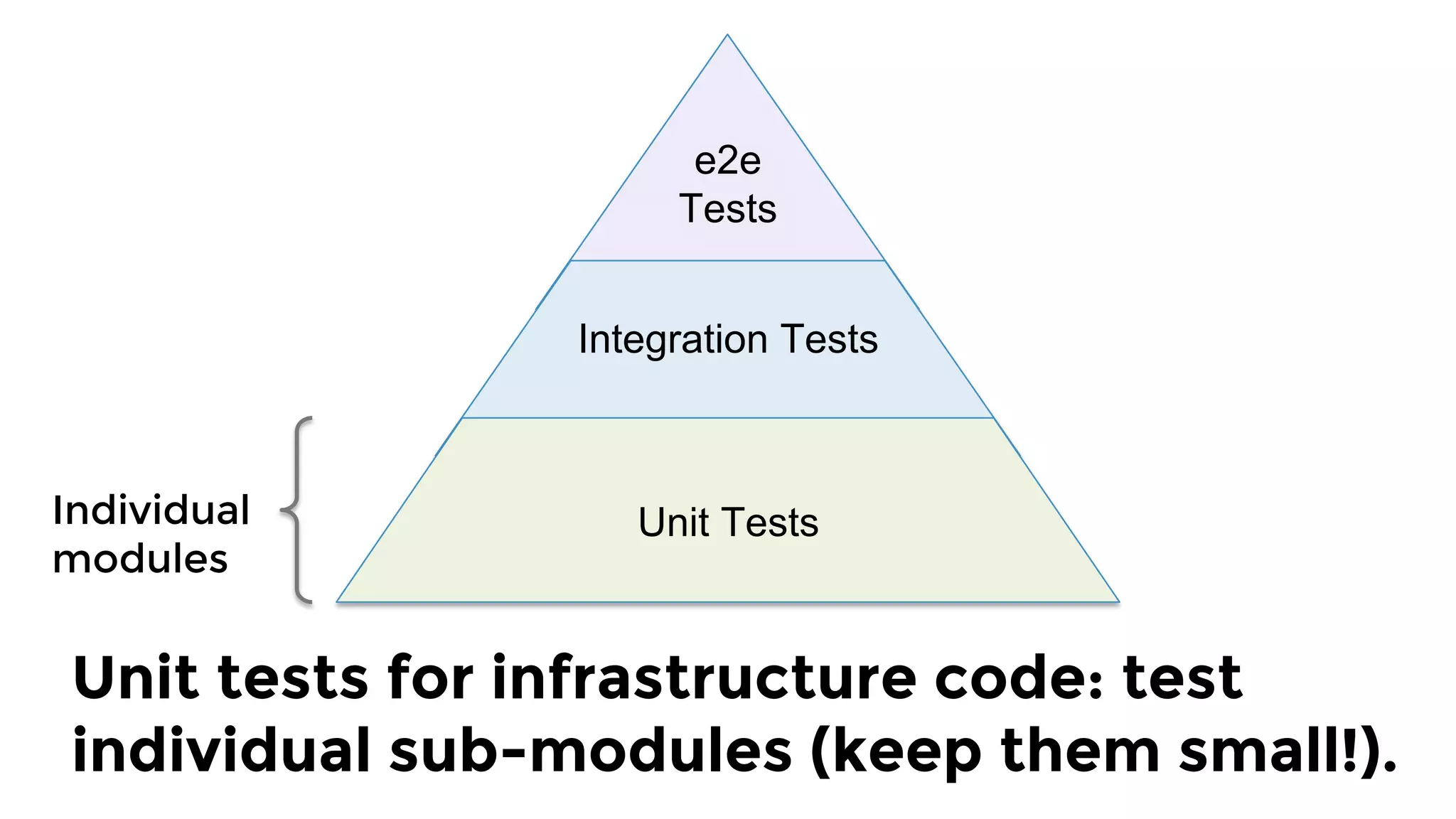

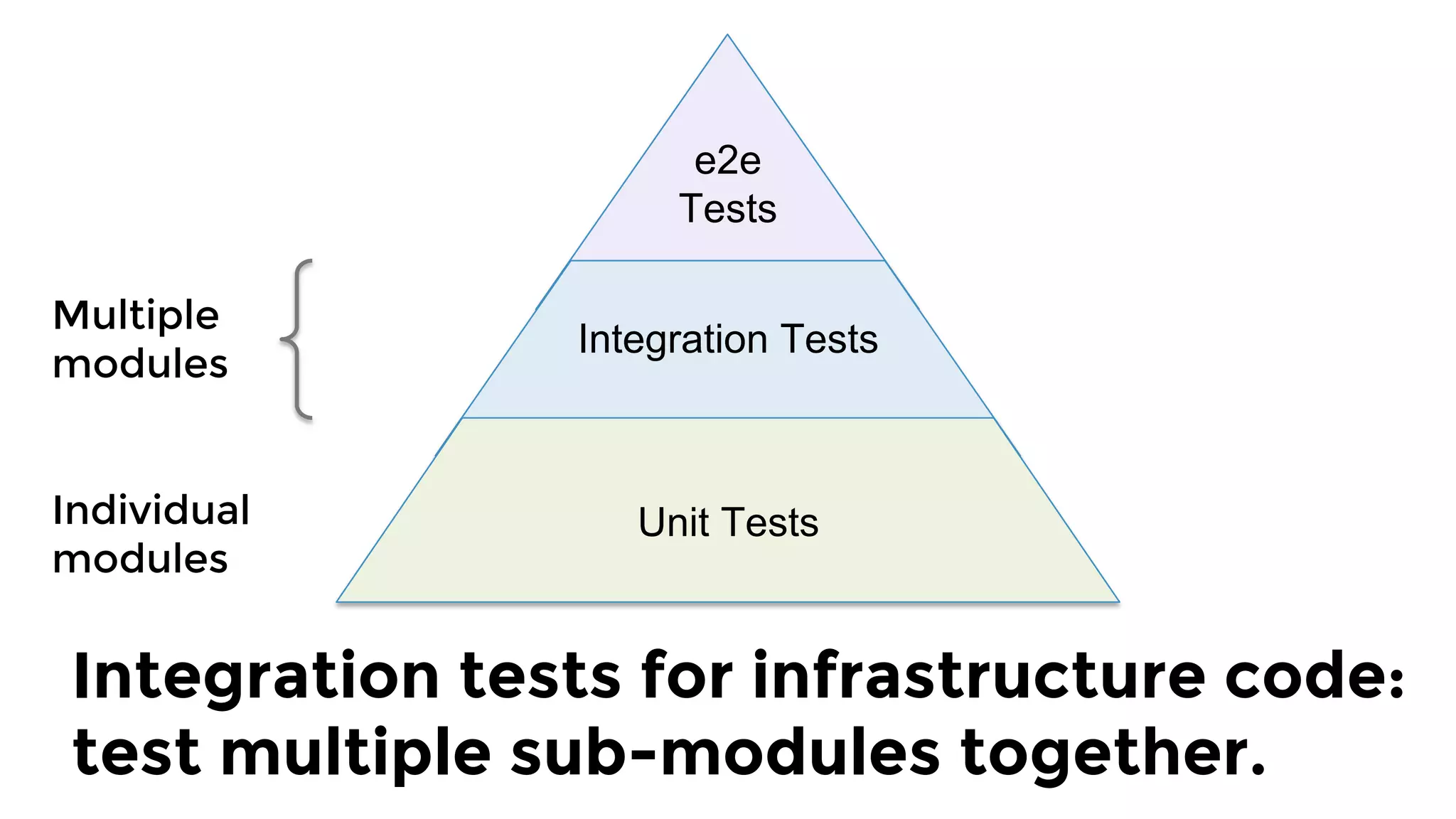

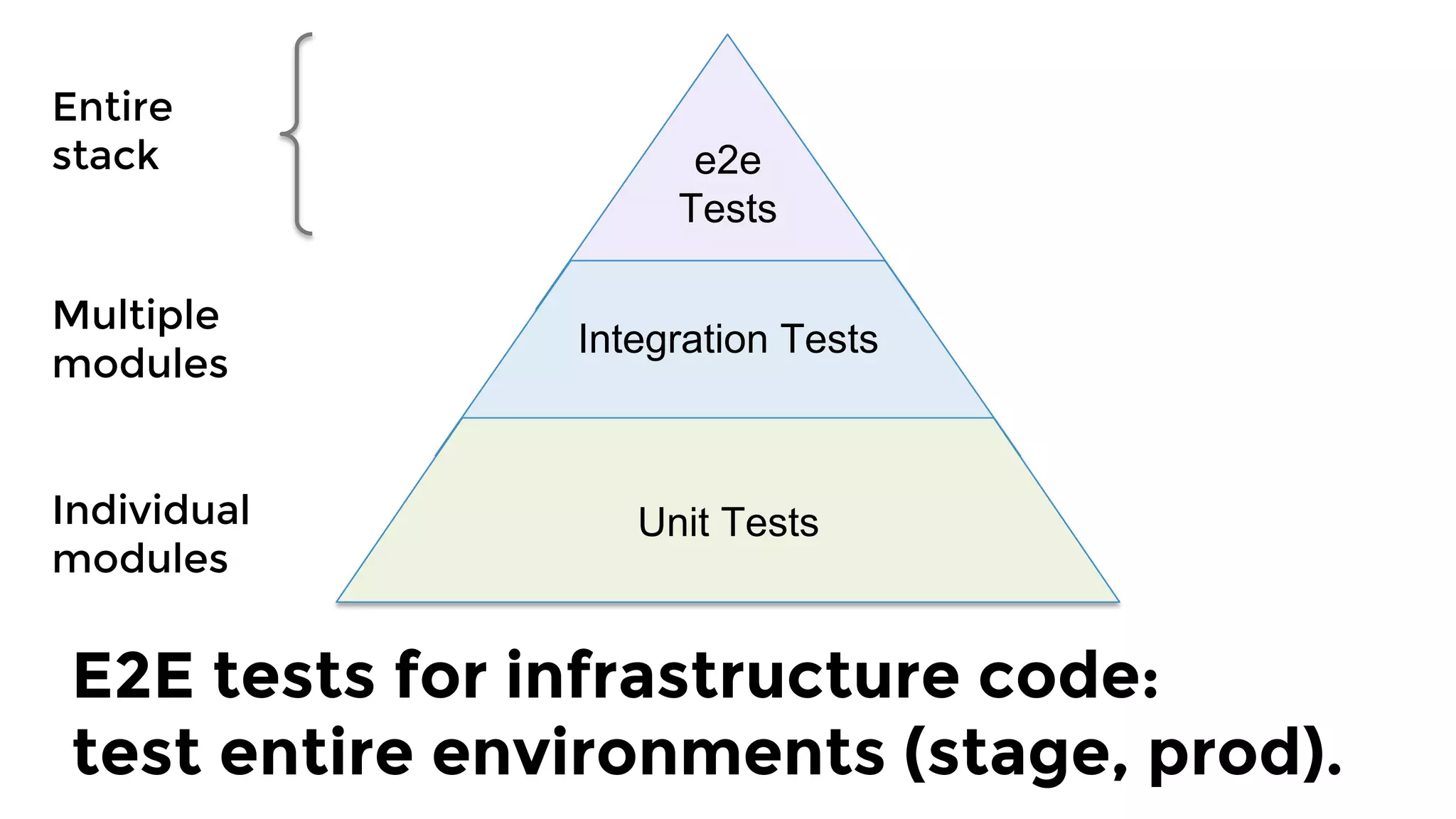

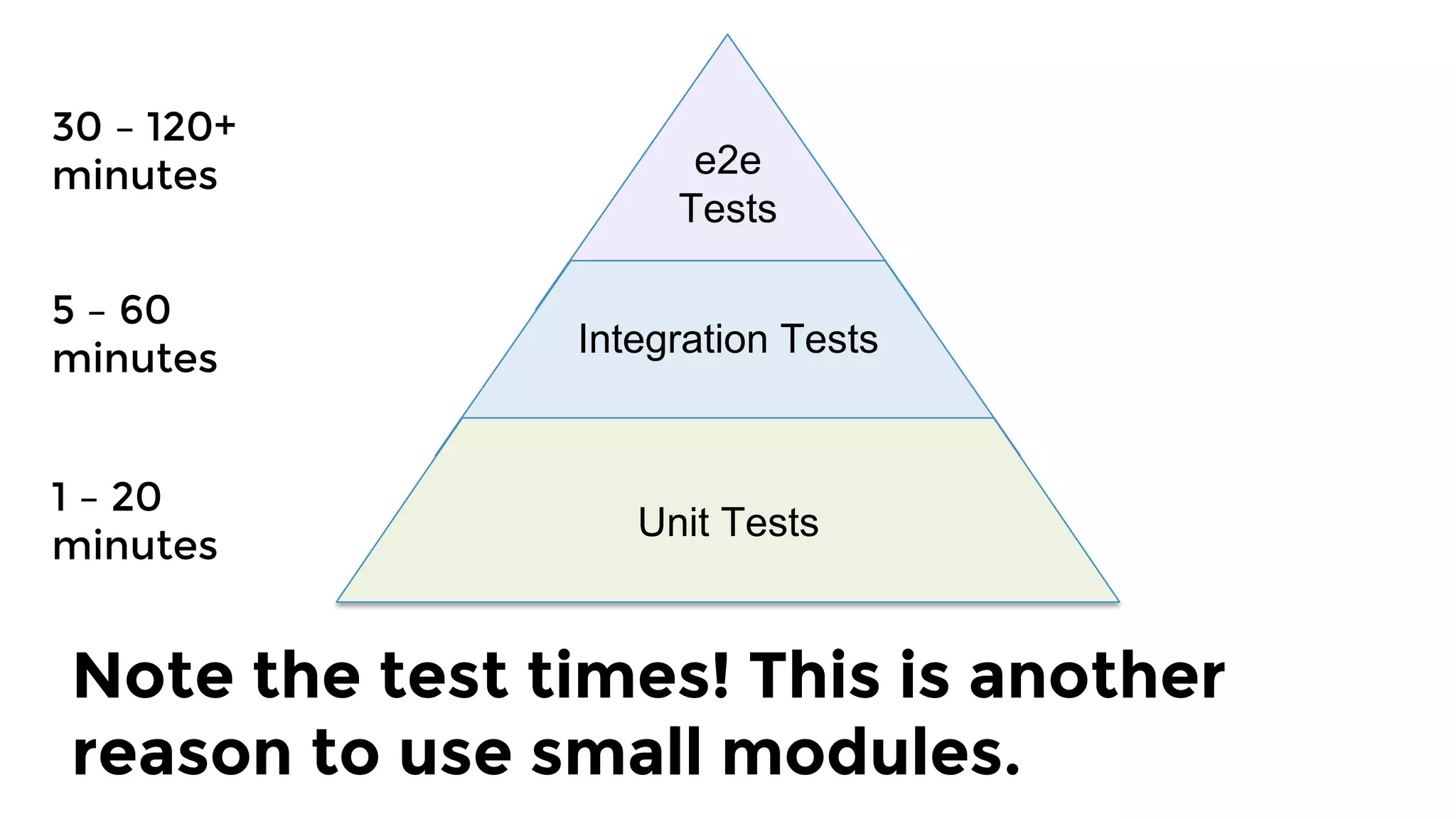

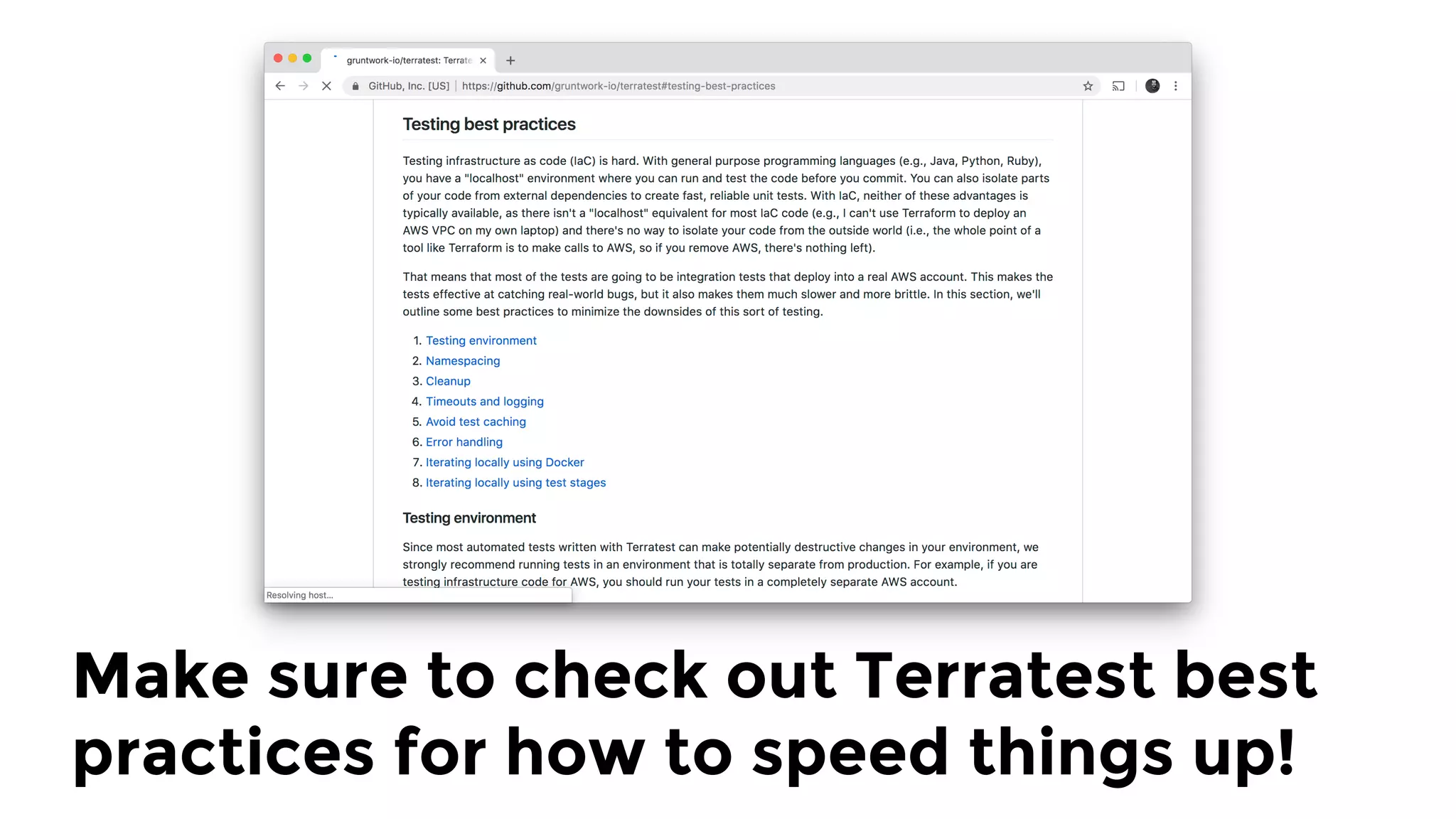

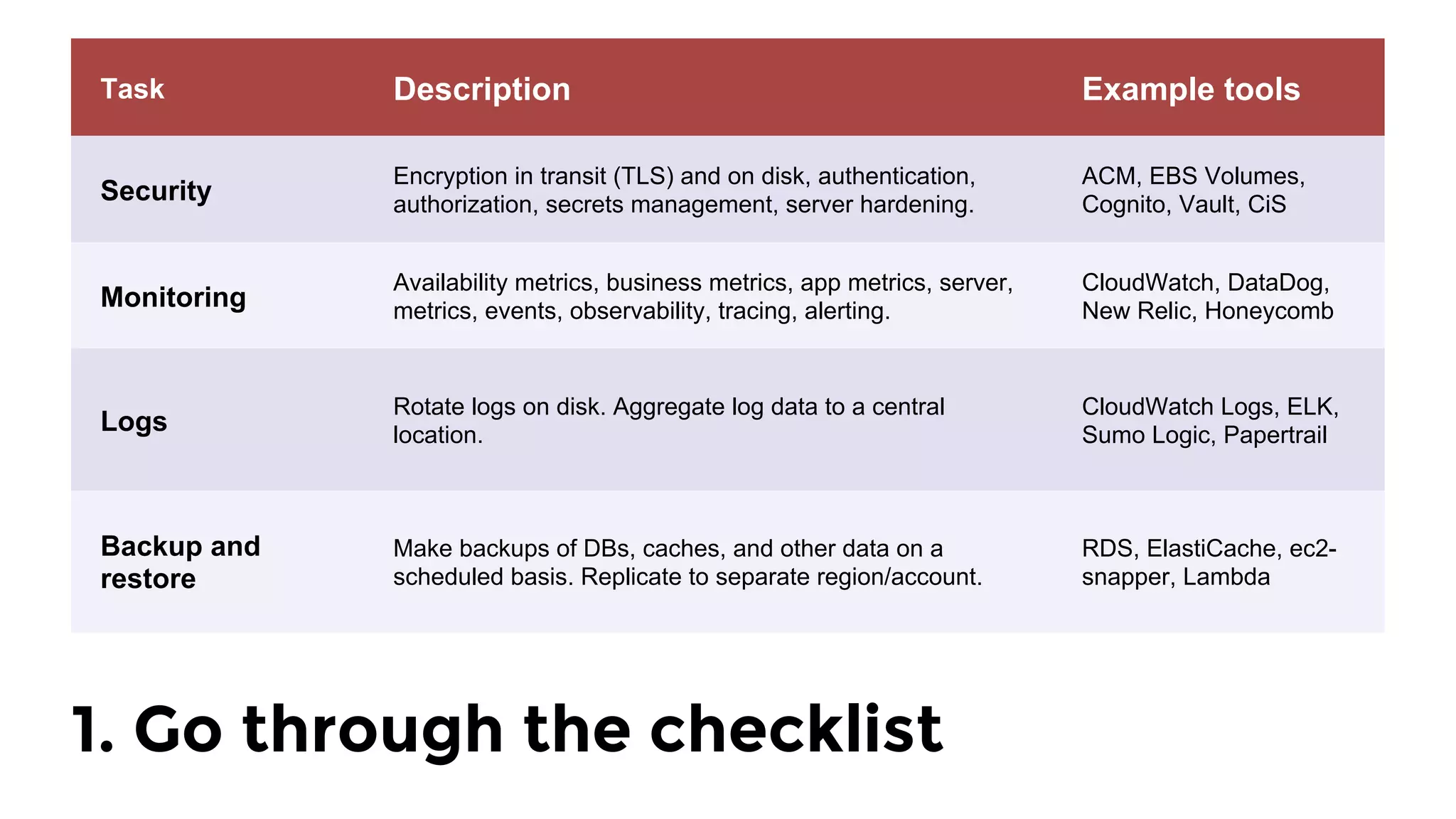

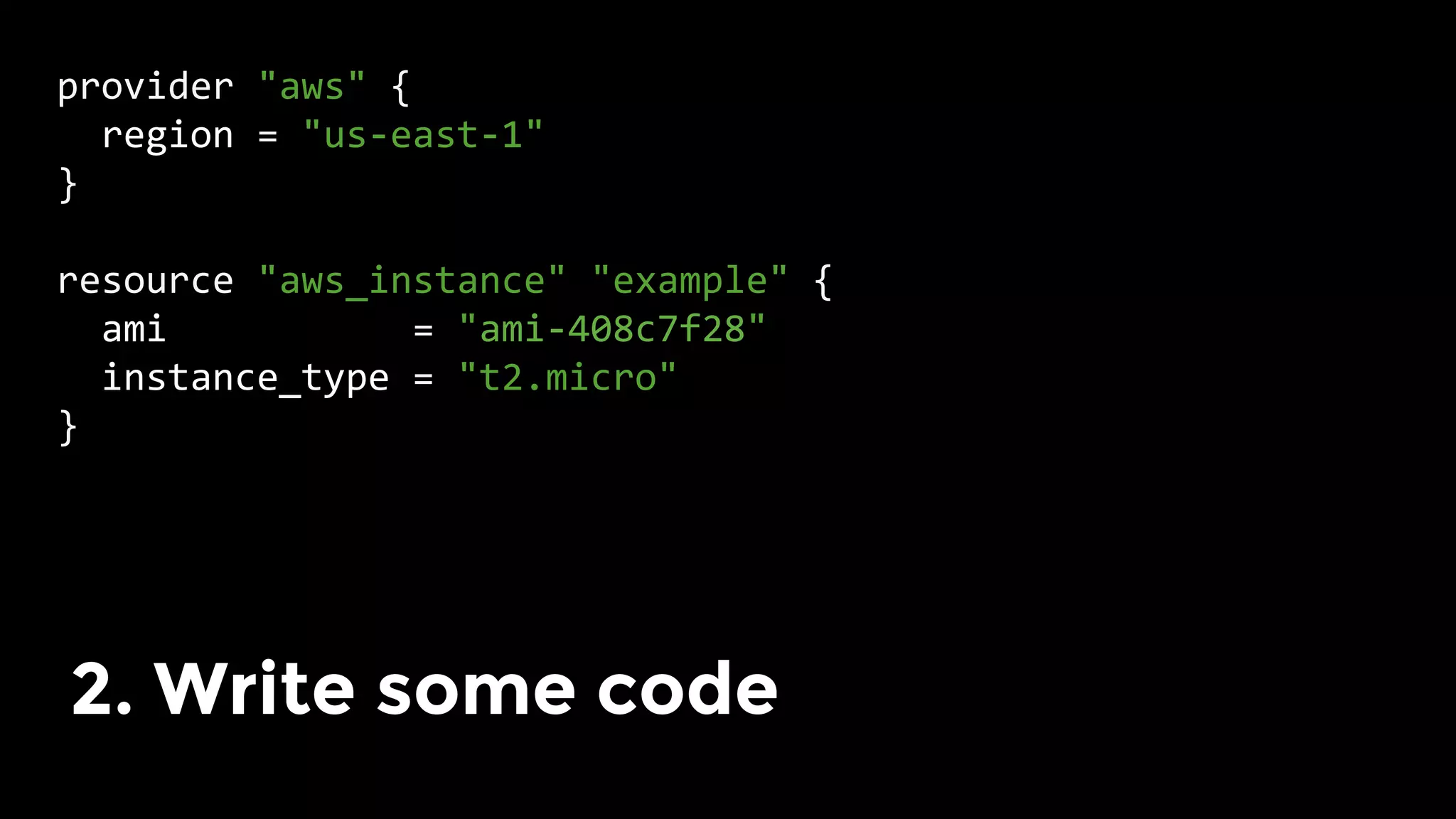

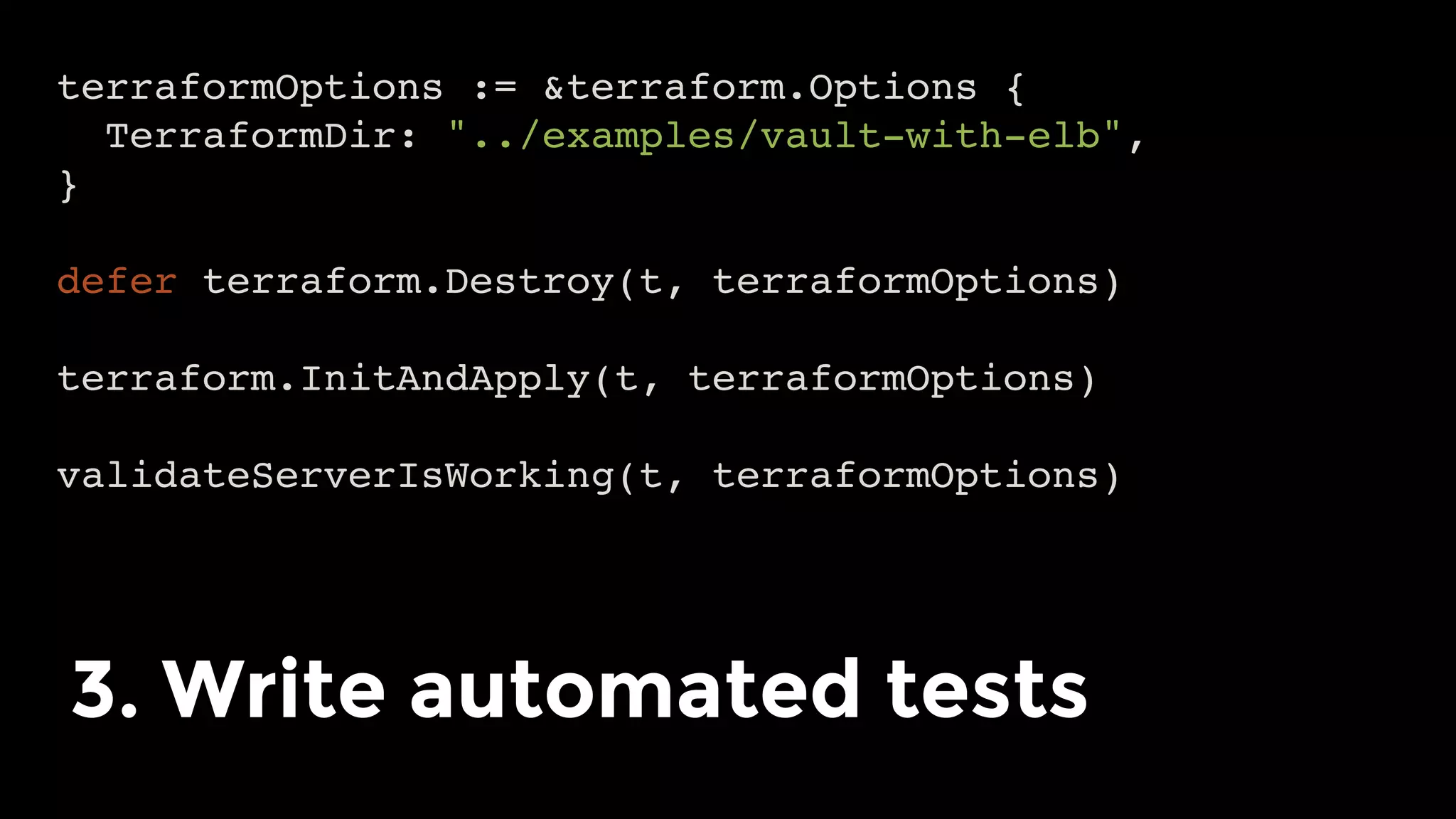

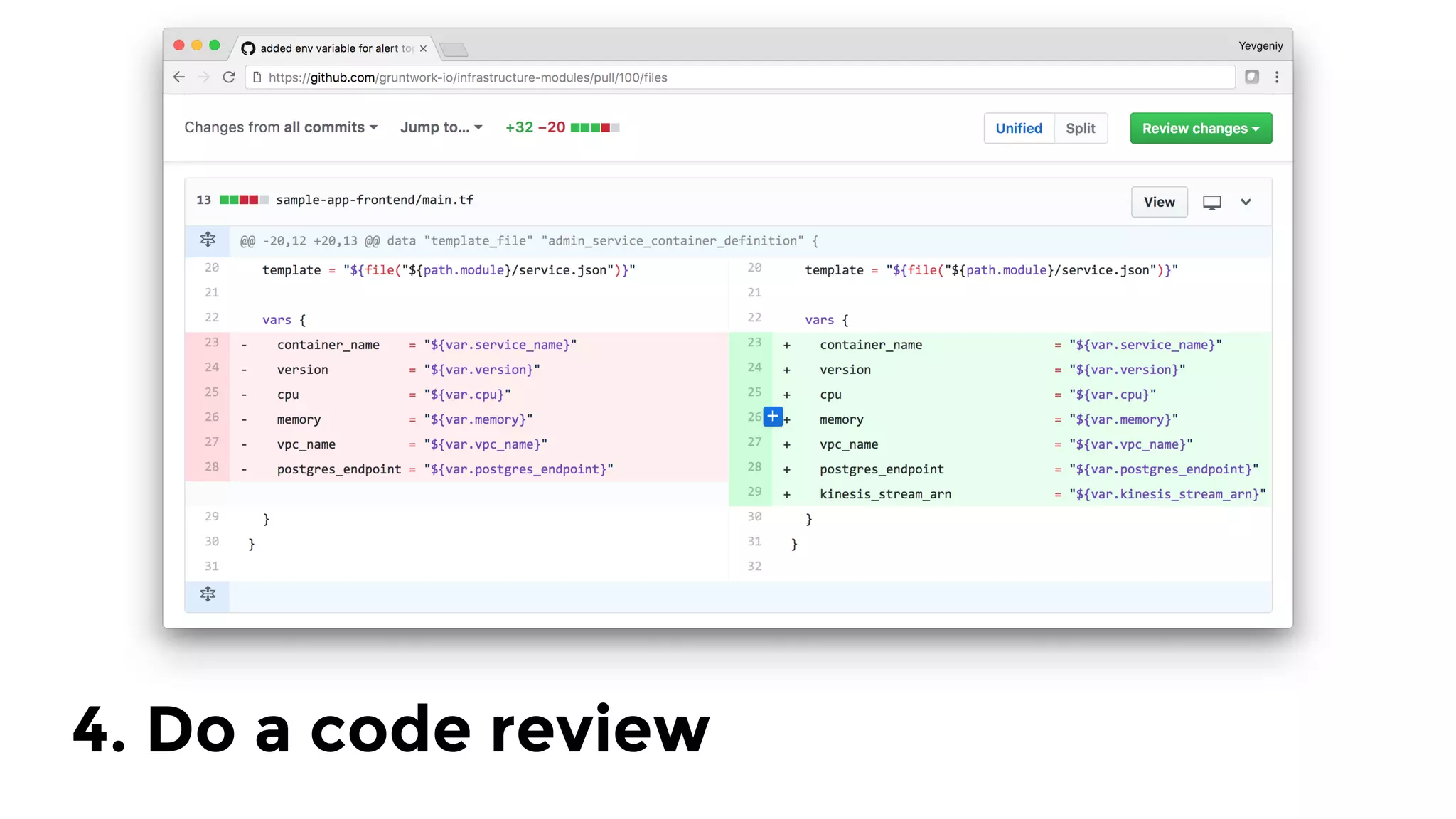

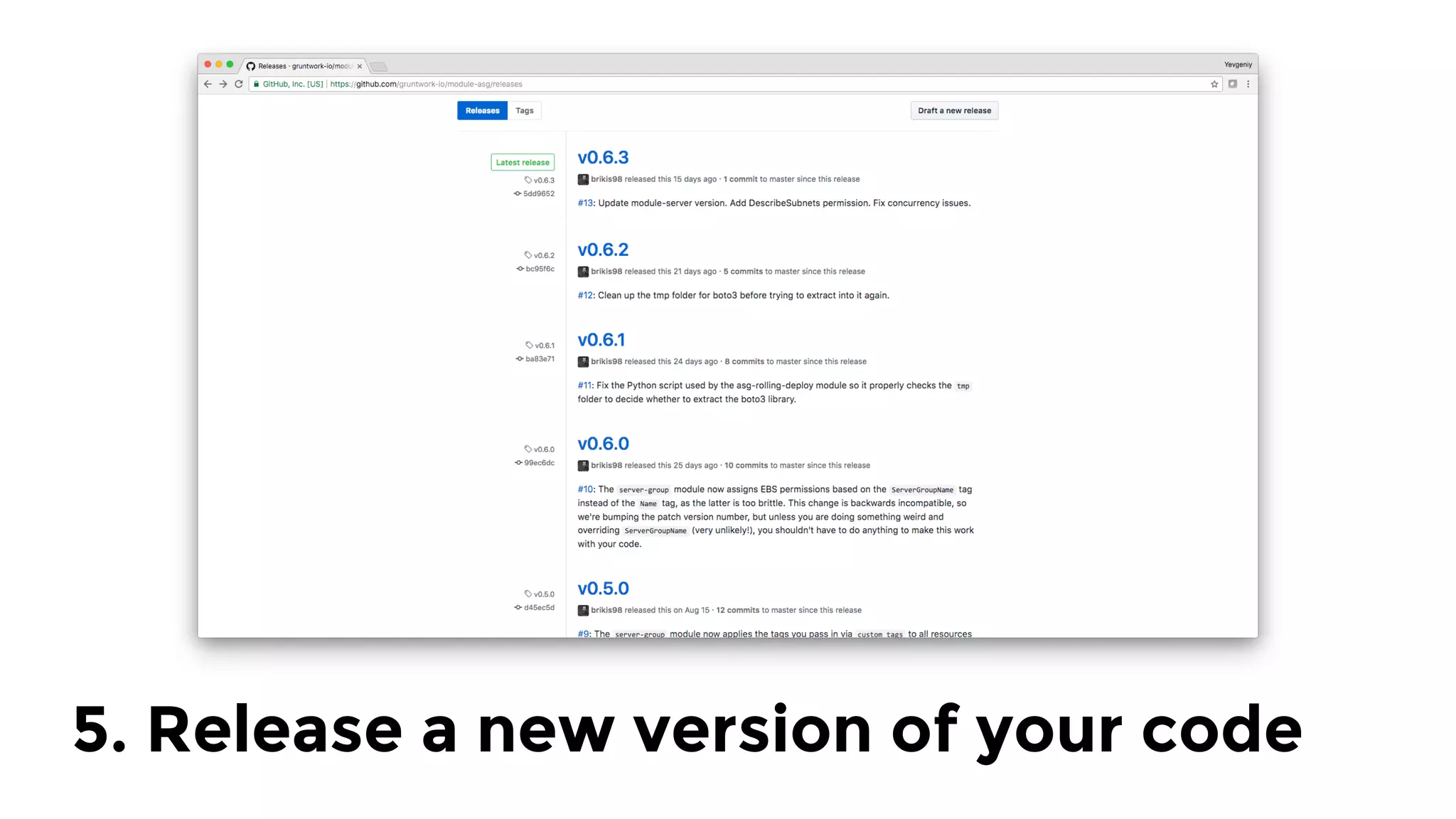

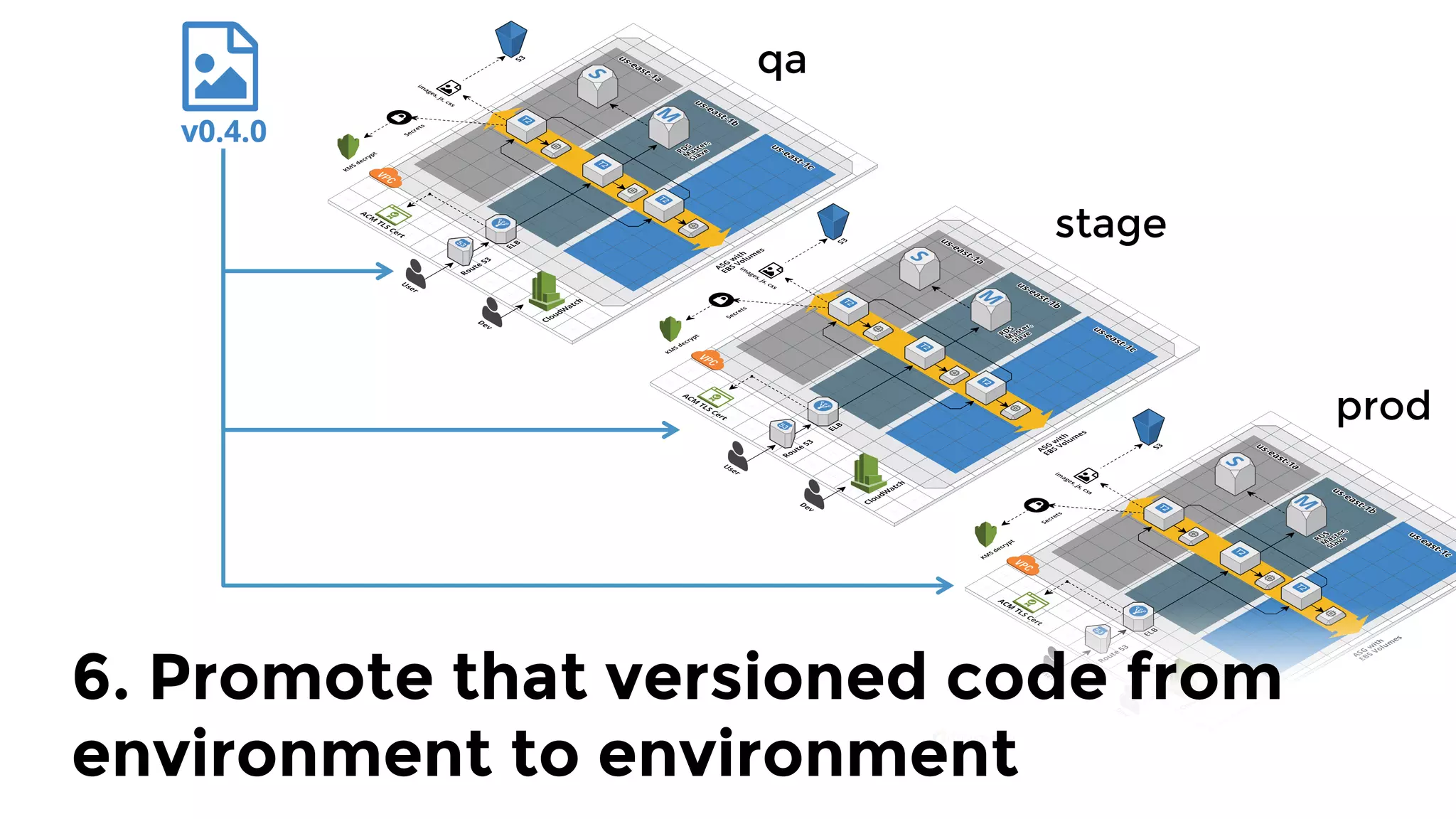

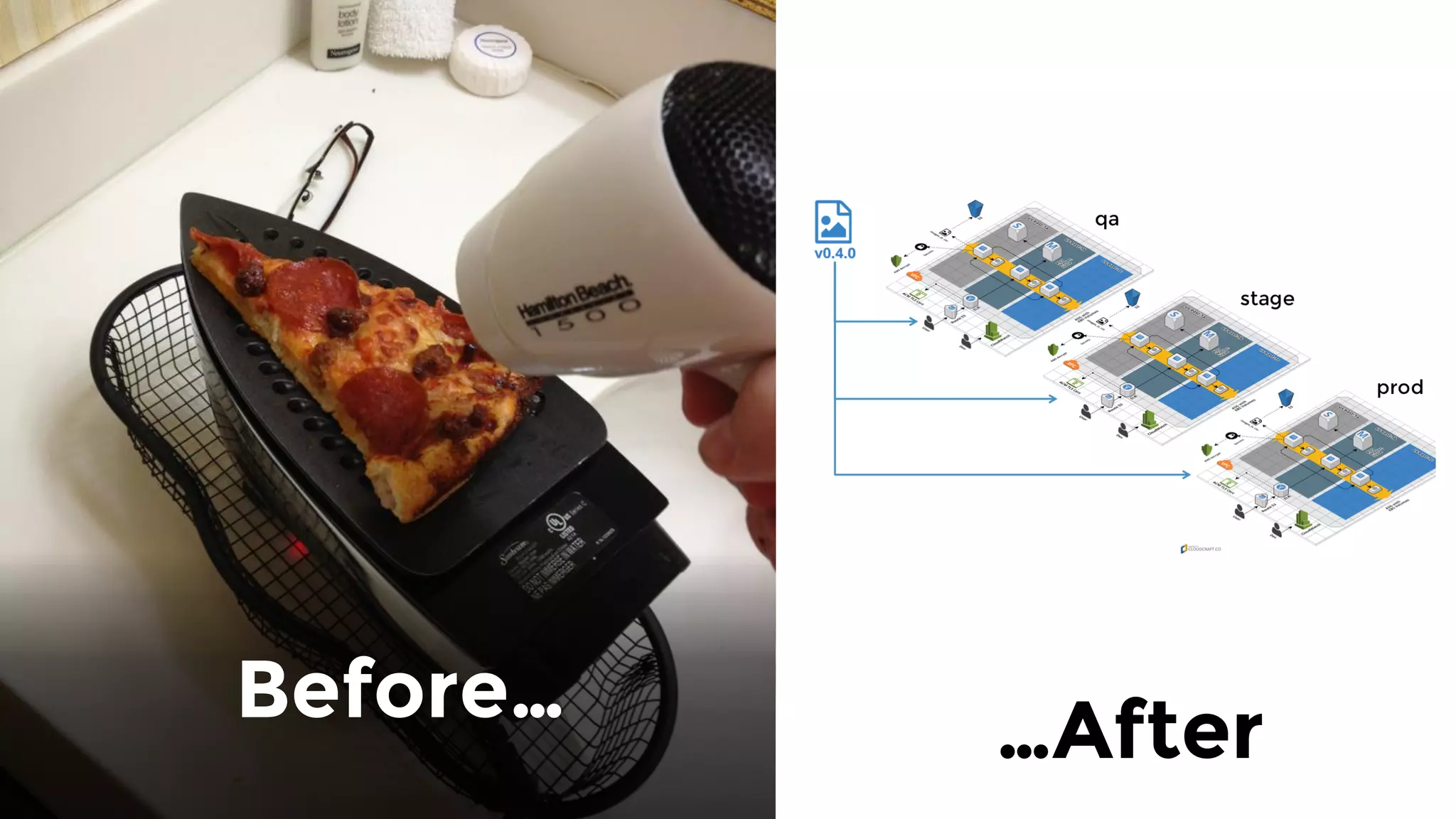

The document discusses the challenges and lessons learned from writing extensive infrastructure code, highlighting the complexity of building production-grade systems and the long timelines that infrastructure projects require. The author emphasizes infrastructure as code, automation, and modular design as ways to streamline processes, shorten project durations, and improve code quality. The author also advocates thorough testing of infrastructure code to ensure reliability and maintainability.