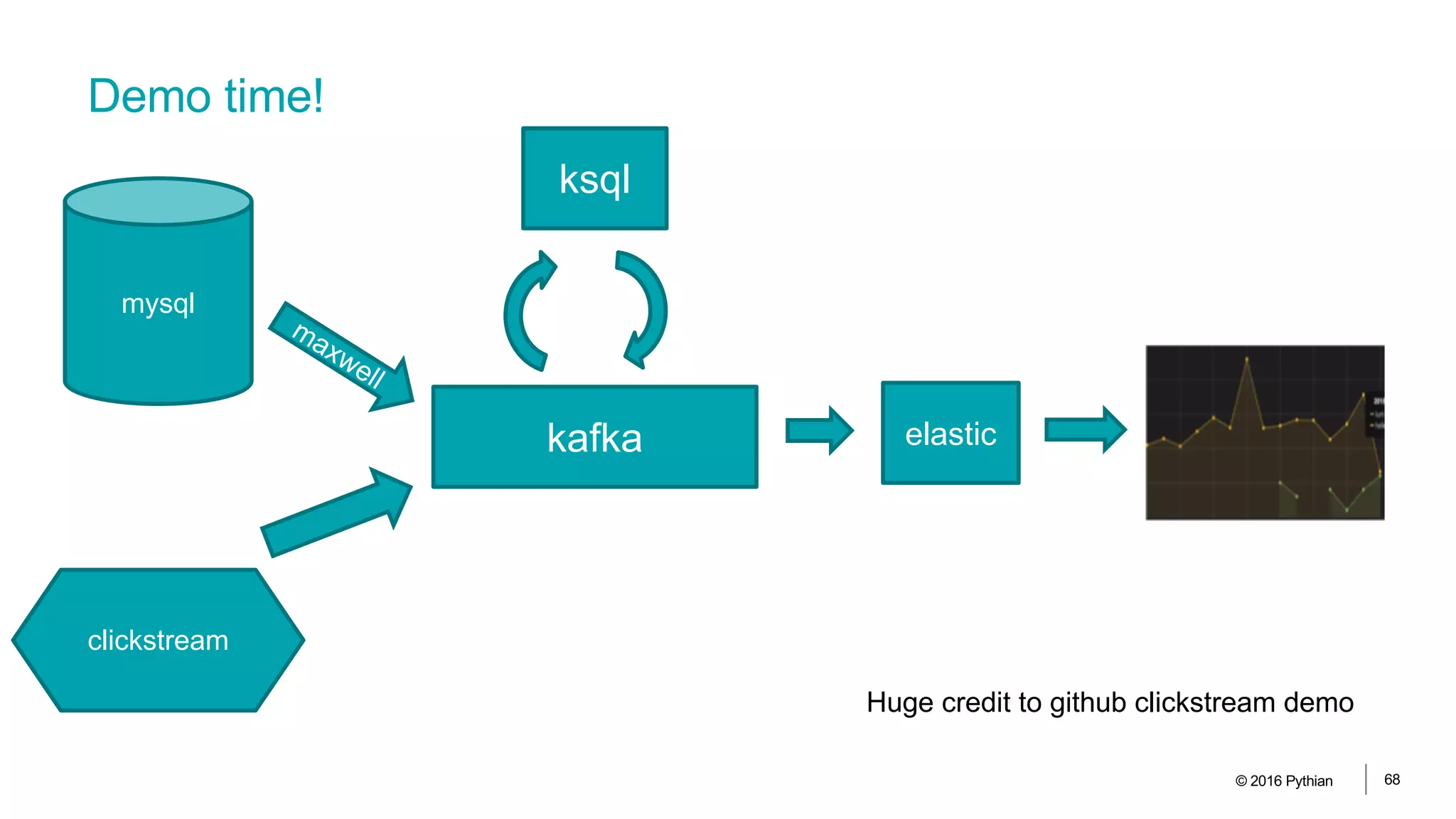

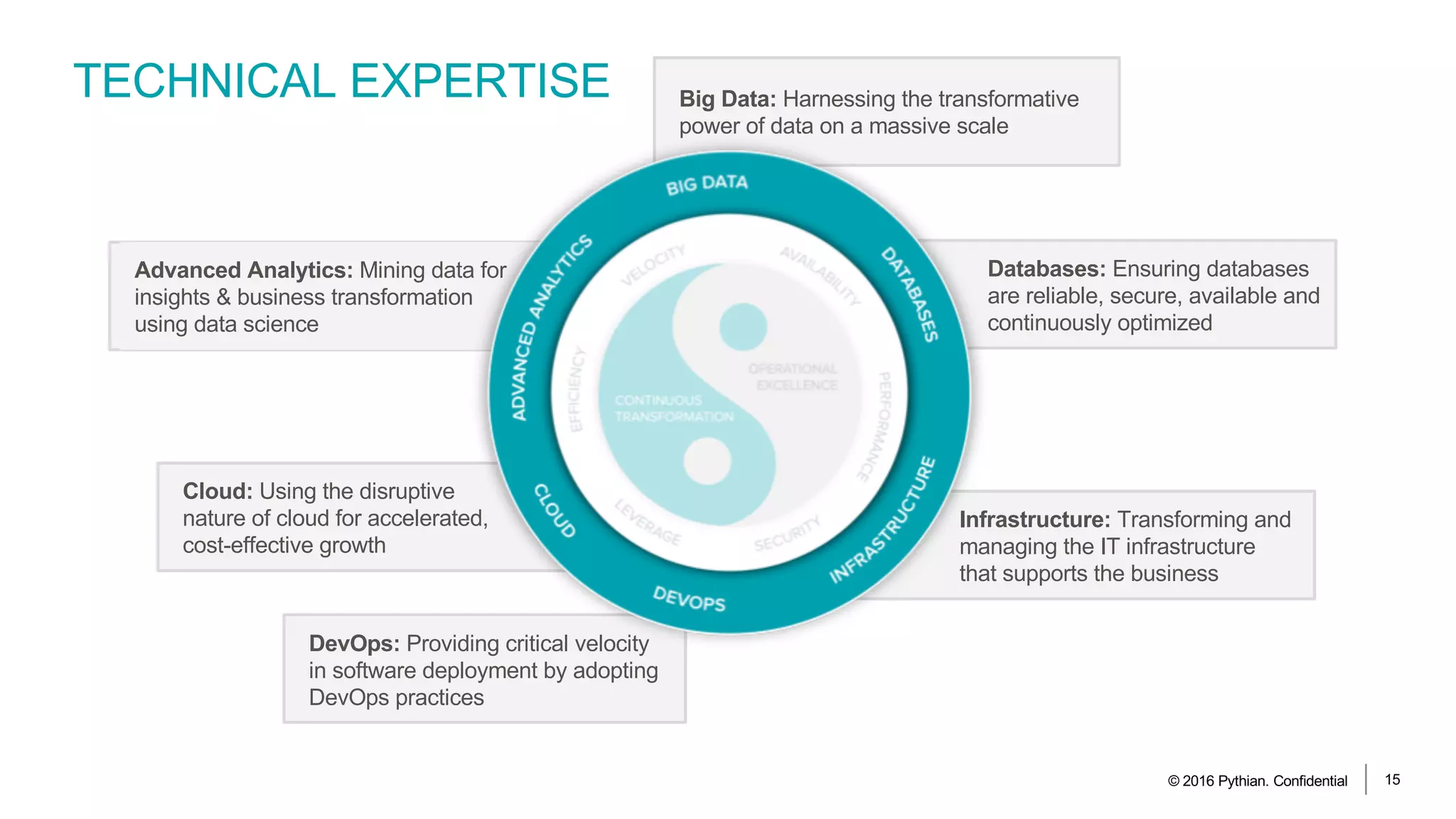

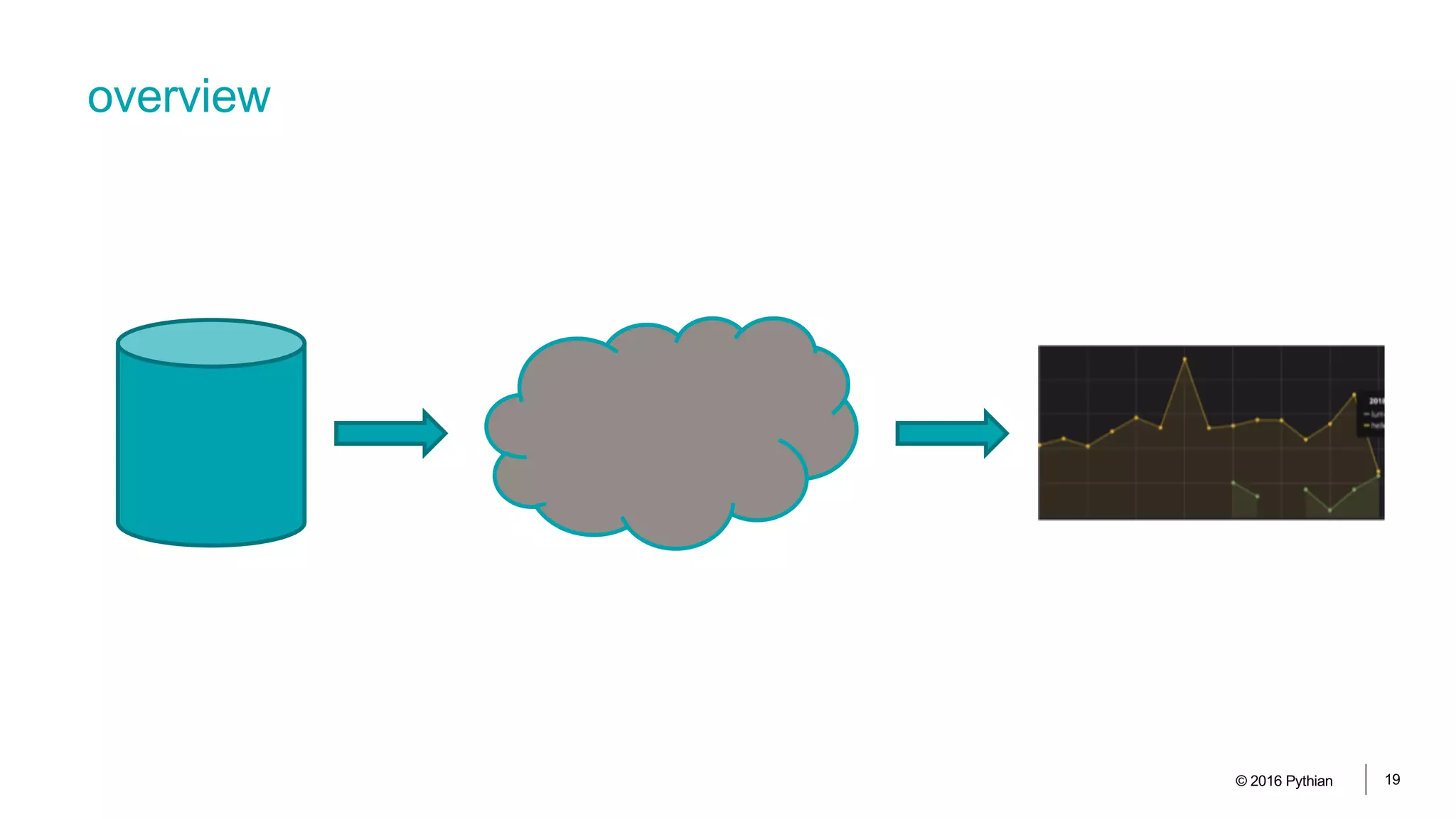

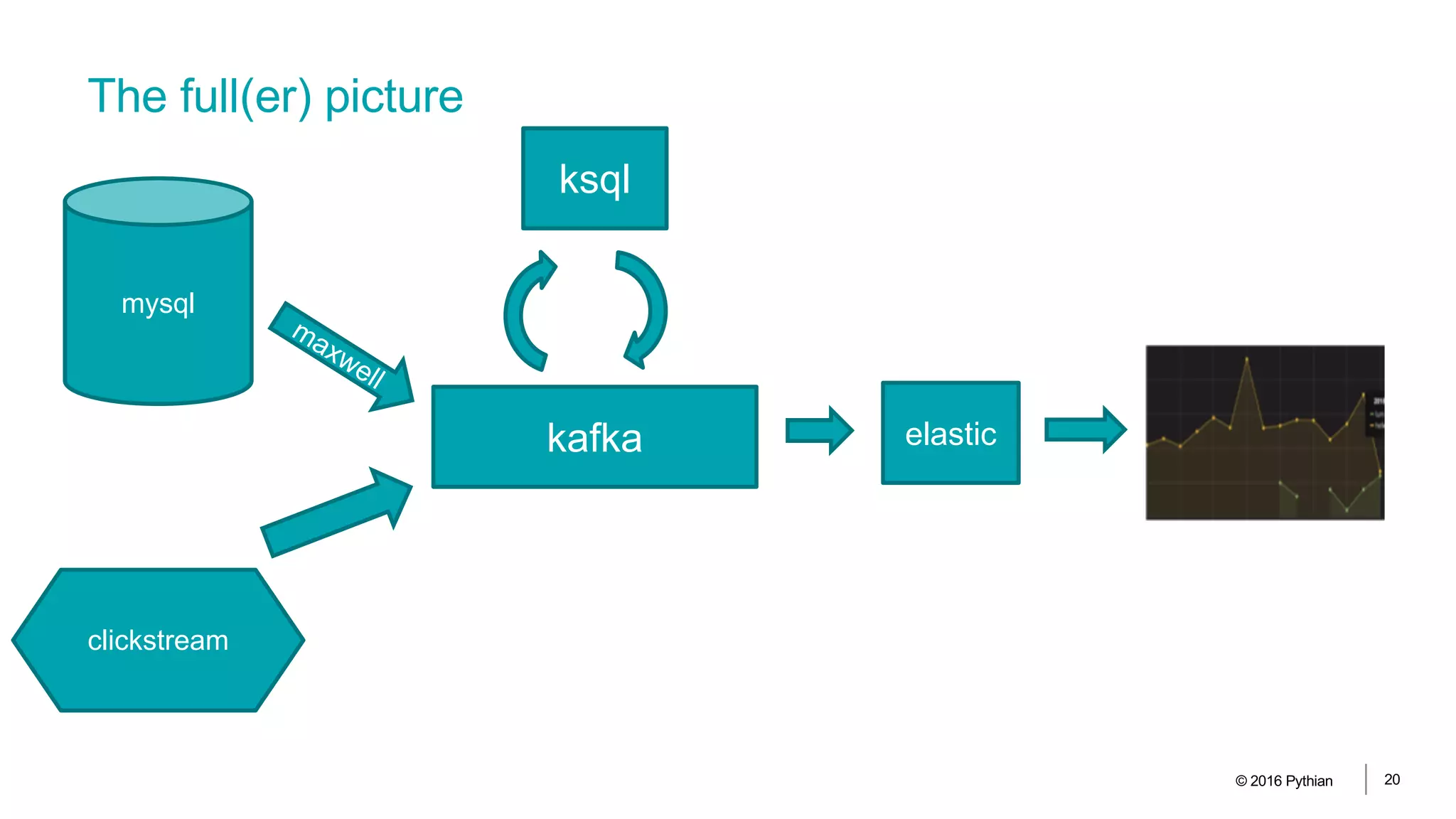

This presentation covers streaming ETL from relational databases to dashboards using Kafka and KSQL. It emphasizes real-time data processing and the integration of multiple data sources for analytics and business insight. The agenda includes practical demonstrations of setting up data flows, transforming streams, and running analytics on streaming data.

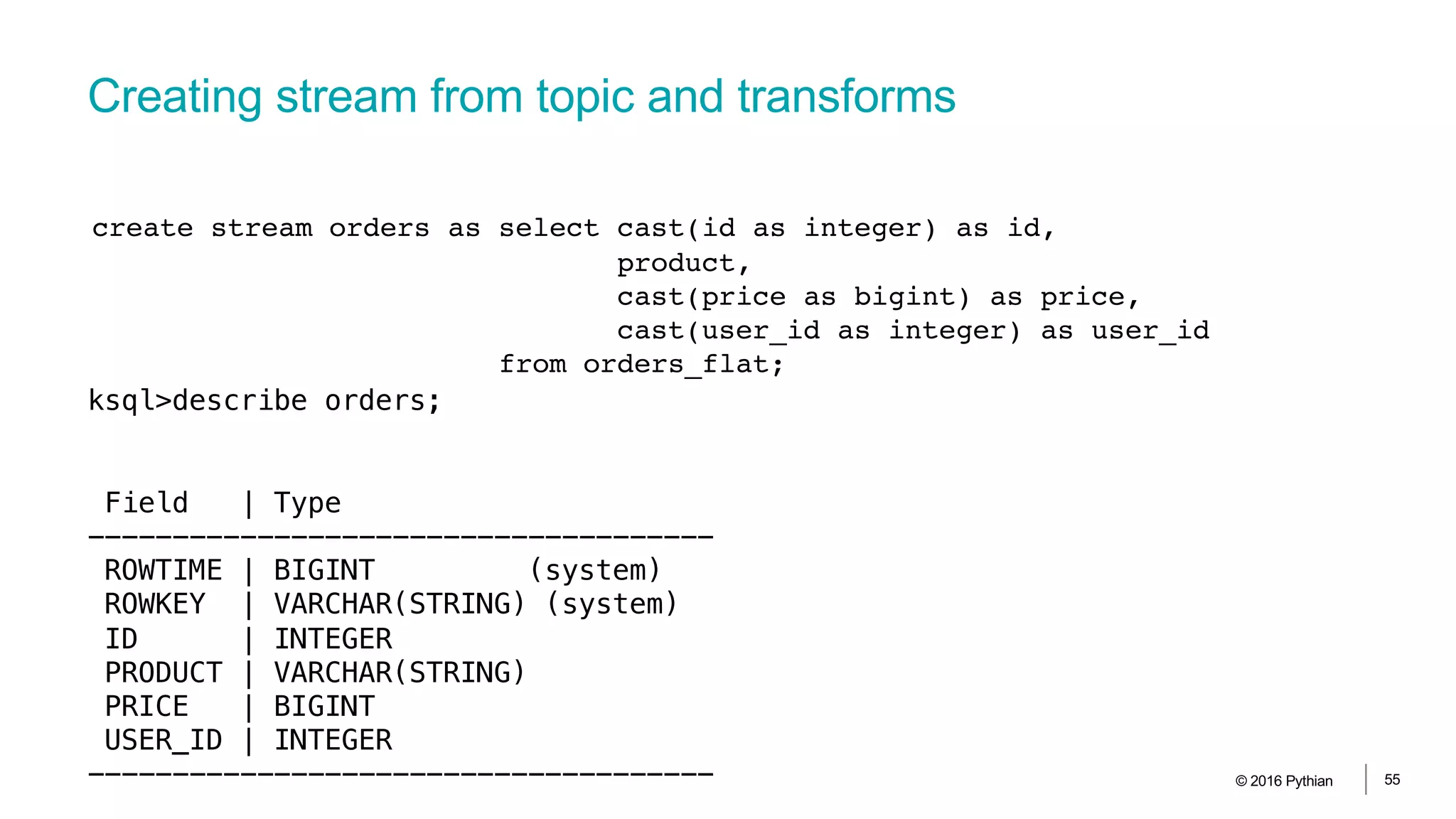

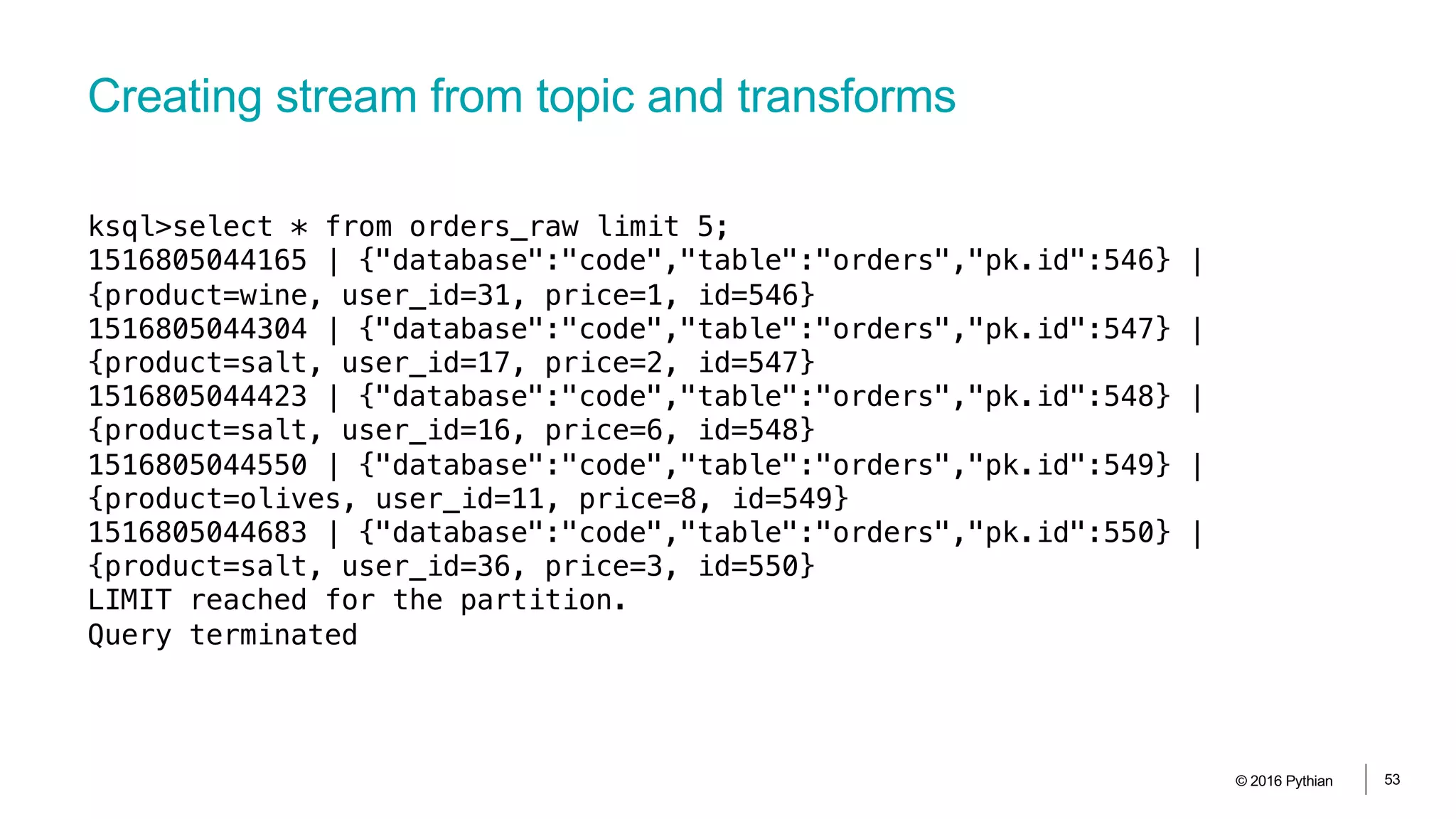

![Creating stream from topic and transforms

© 2016 Pythian 52

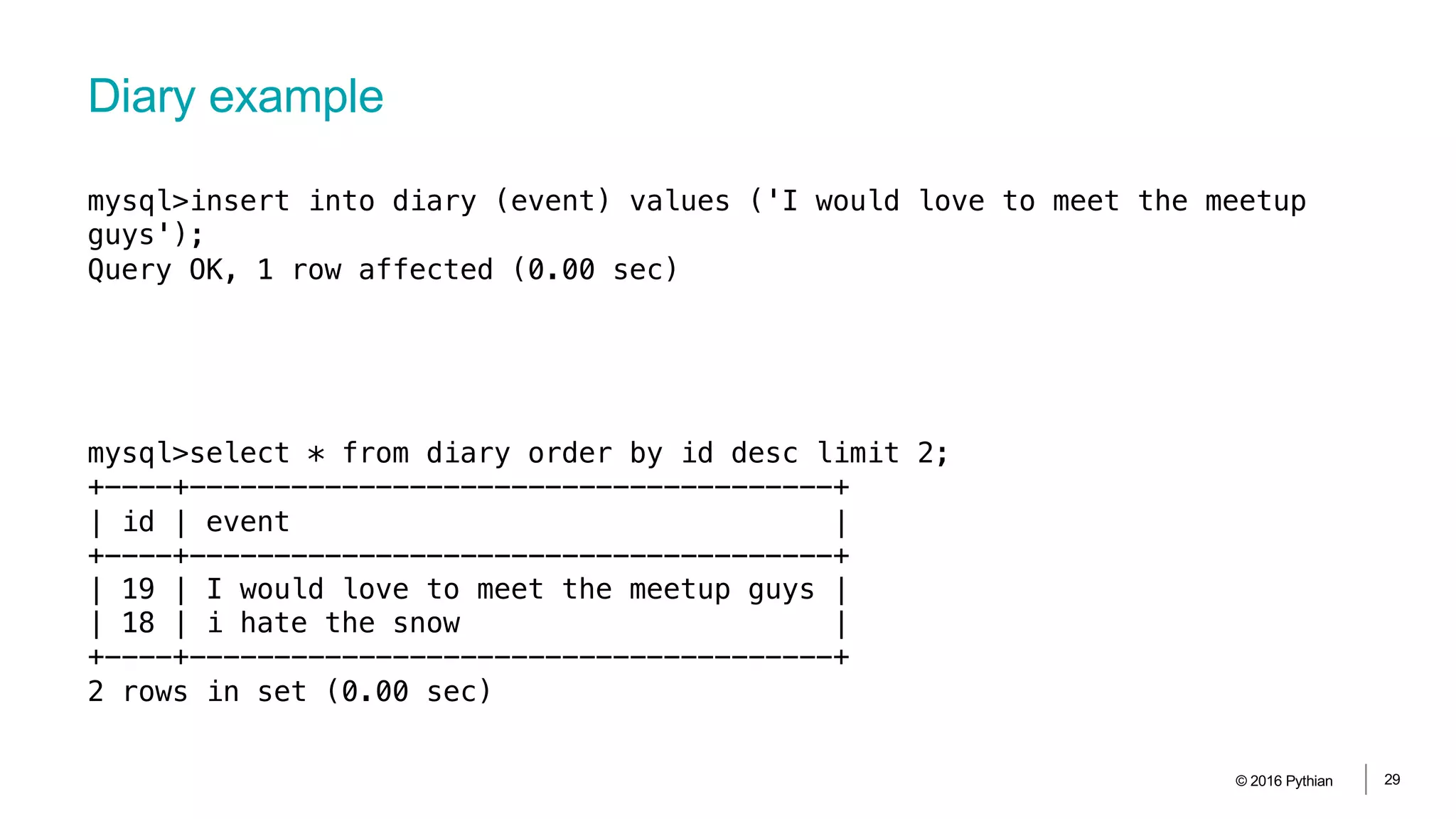

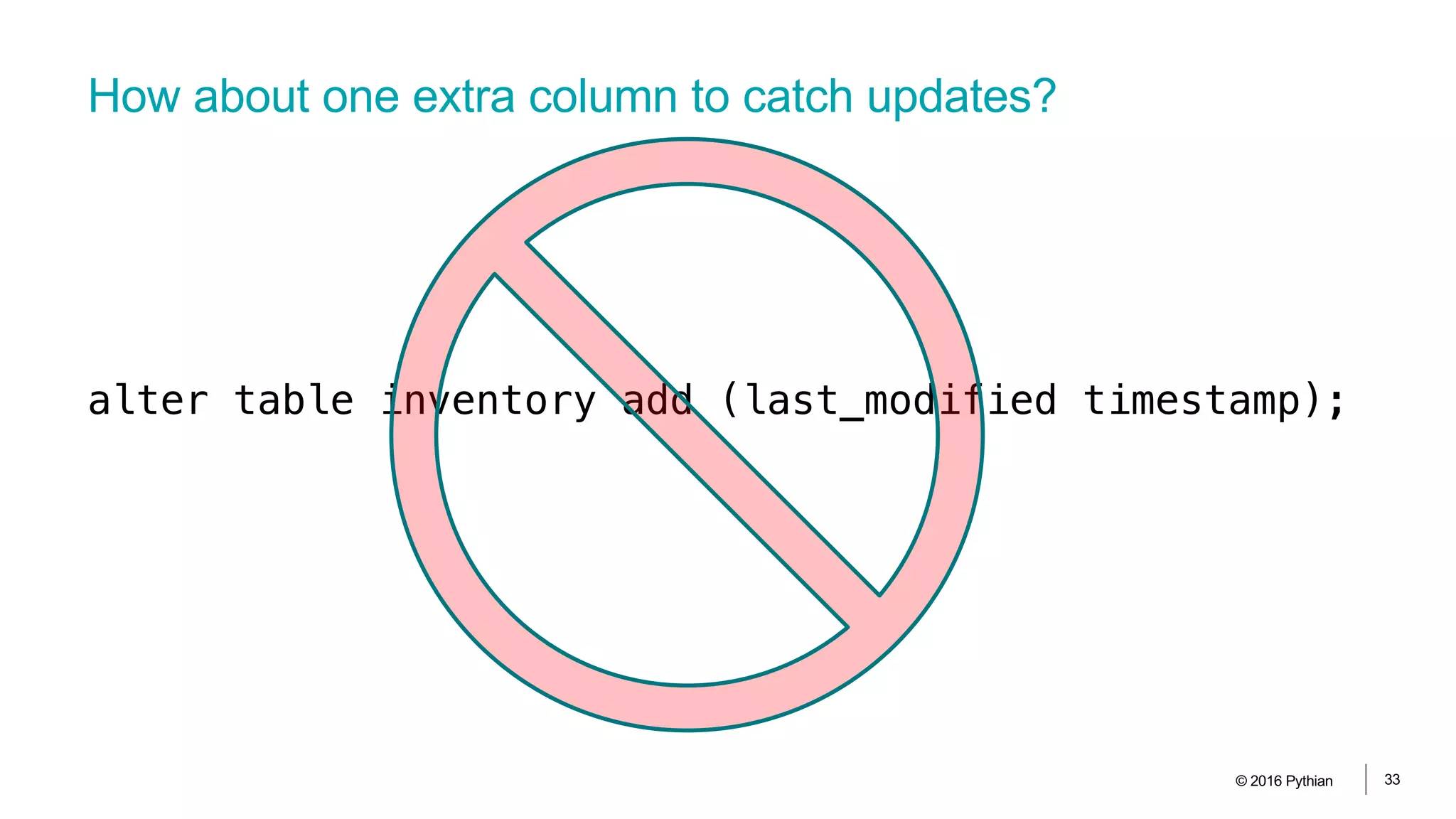

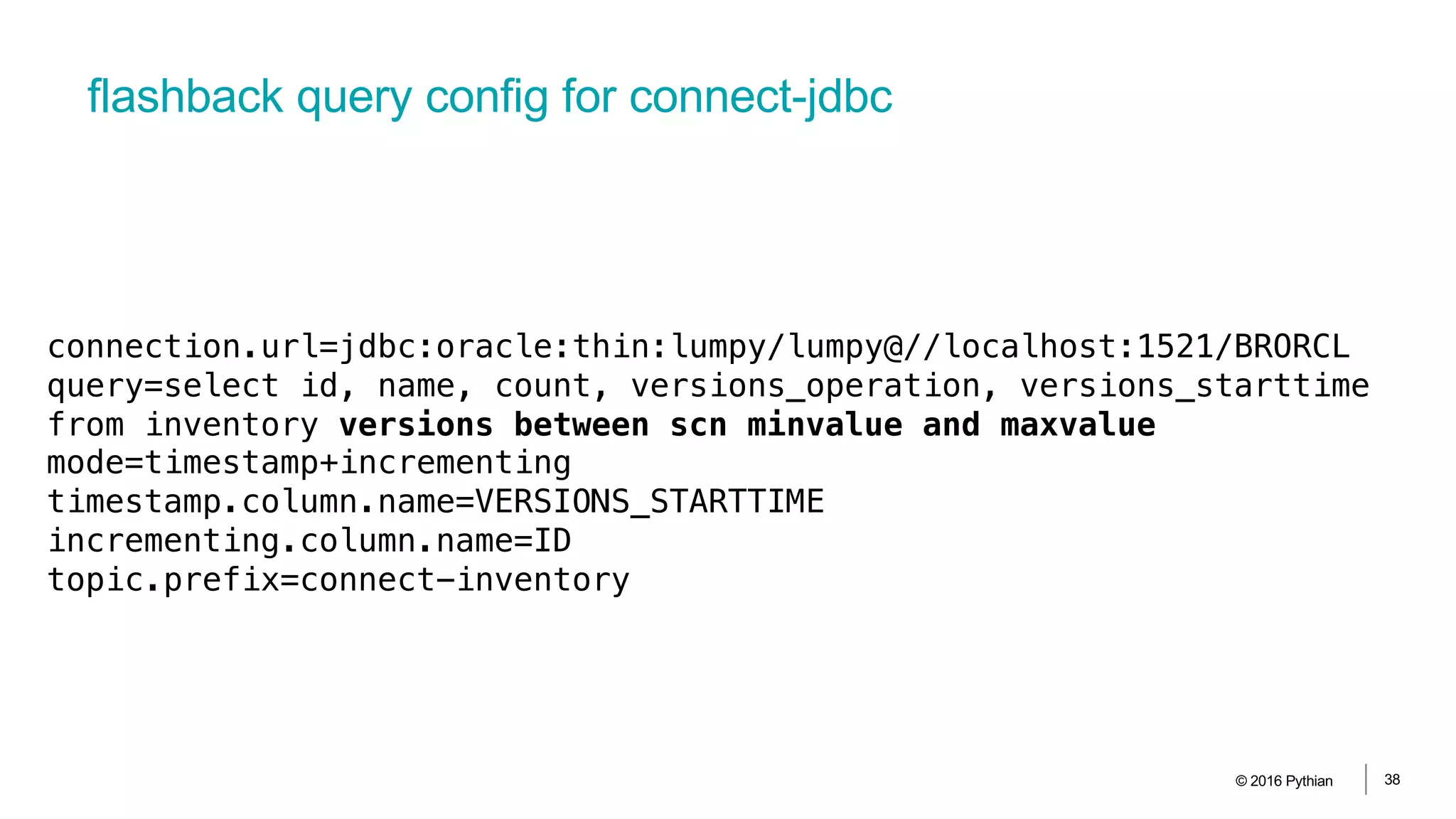

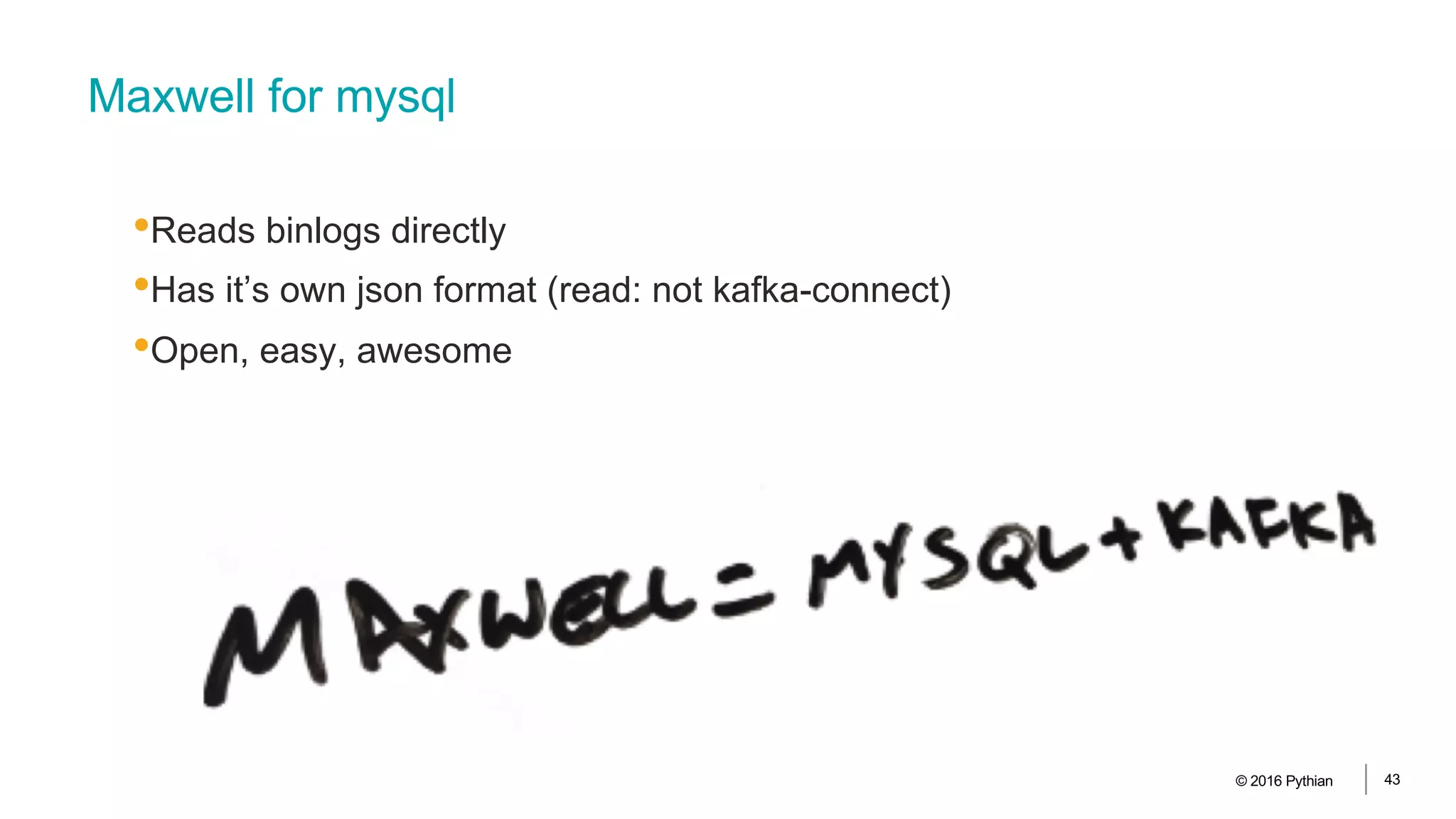

create stream orders_raw (data map(varchar, varchar))

with (kafka_topic = 'maxwell_code_orders', value_format = 'JSON’);

ksql>describe orders_raw;

Field | Type

------------------------------------------------

ROWTIME | BIGINT (system)

ROWKEY | VARCHAR(STRING) (system)

DATA | MAP[VARCHAR(STRING),VARCHAR(STRING)]

------------------------------------------------](https://image.slidesharecdn.com/streamingetl-180125134310/75/Streaming-ETL-from-RDBMS-to-Dashboard-with-KSQL-43-2048.jpg)
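As a rough illustration of what the `orders_raw` definition does, the Python sketch below models one row: the JSON message is parsed, only the declared `data` column is kept, and its values are read as strings. The sample event is invented. Its top-level fields follow Maxwell's usual change-event layout, and the `code`/`orders` names are only a guess from the topic name. The function `to_orders_raw` is a hypothetical helper, not part of KSQL or Maxwell.

```python
import json

# Hypothetical Maxwell change event; all values are invented for illustration.
SAMPLE_EVENT = json.dumps({
    "database": "code",
    "table": "orders",
    "type": "insert",
    "ts": 1516887790,
    "data": {"id": 1, "product": "widget", "price": 9.99, "user_id": 42},
})


def to_orders_raw(message: str) -> dict:
    """Model an orders_raw row: keep only `data`, with every value as a string.

    Assumption: this mirrors declaring the column as map(varchar, varchar);
    fields not declared in the stream (type, ts, ...) are simply not exposed.
    """
    event = json.loads(message)
    data = event.get("data") or {}
    return {"DATA": {str(key): str(value) for key, value in data.items()}}


row = to_orders_raw(SAMPLE_EVENT)
print(row)
```

Because the whole payload arrives as one string-to-string map, individual columns still have to be pulled out of `DATA` by key before they are convenient to query.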

![Creating stream from topic and transforms

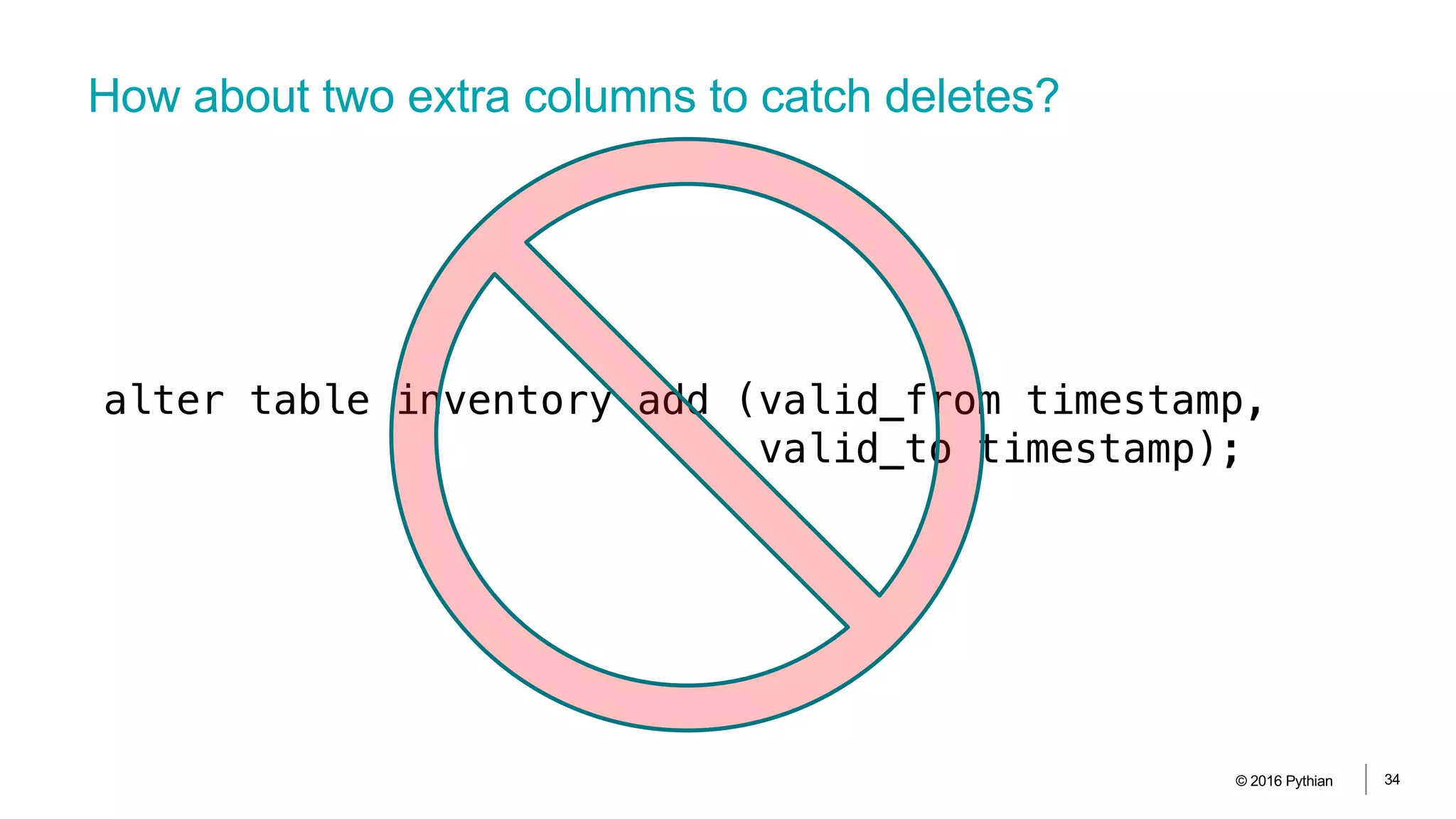

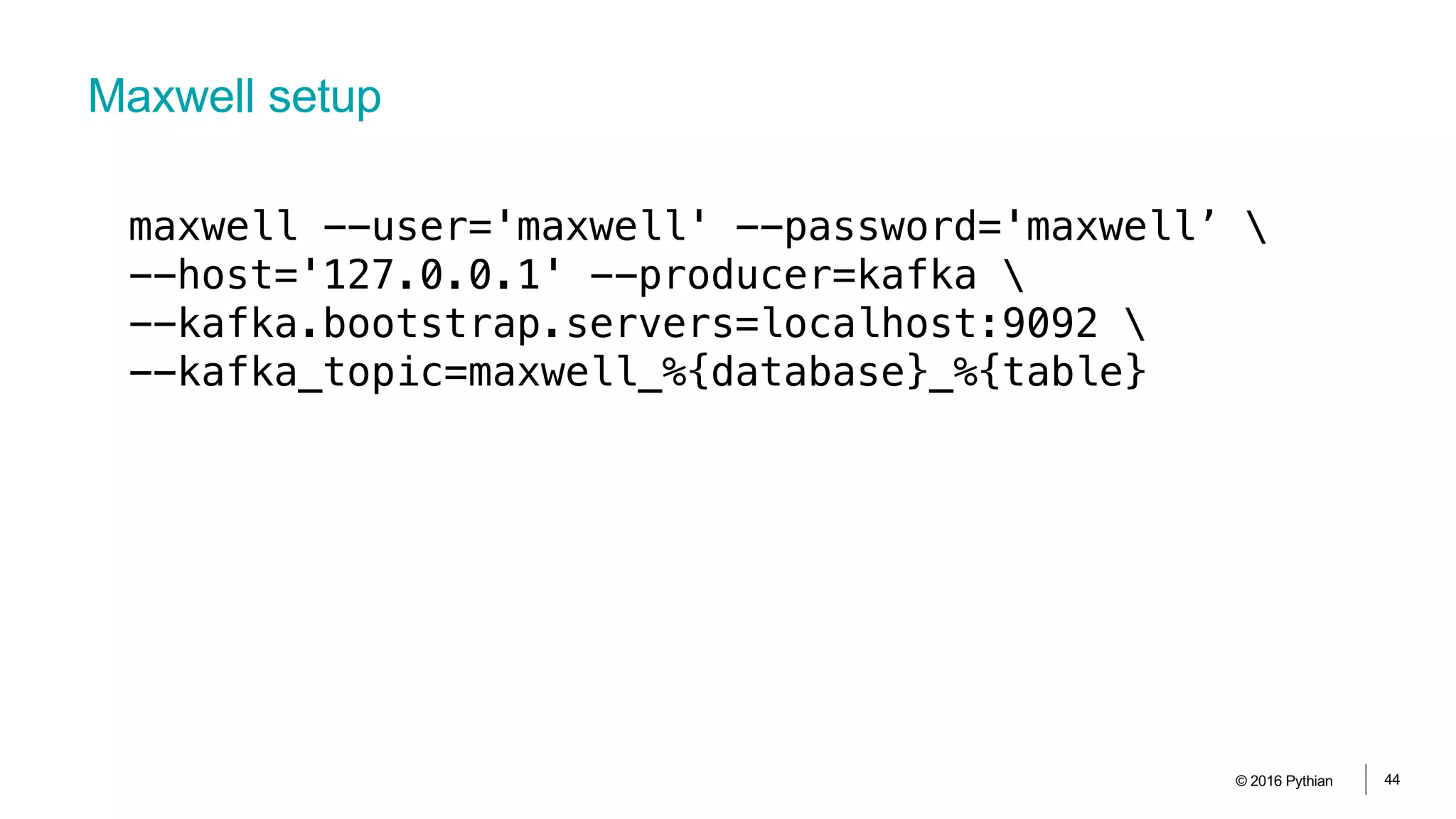

© 2016 Pythian 54

create stream orders_flat as select data['id'] as id,

data['product'] as product,

data['price'] as price,

data['user_id'] as user_id

from orders_raw;

ksql>describe orders_flat;

Field | Type

-------------------------------------

ROWTIME | BIGINT (system)

ROWKEY | VARCHAR(STRING) (system)

ID | VARCHAR(STRING)

PRODUCT | VARCHAR(STRING)

PRICE | VARCHAR(STRING)

USER_ID | VARCHAR(STRING)

-------------------------------------](https://image.slidesharecdn.com/streamingetl-180125134310/75/Streaming-ETL-from-RDBMS-to-Dashboard-with-KSQL-45-2048.jpg)
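The flattening step can be pictured with a small Python sketch, given here as an illustration rather than as how KSQL is implemented. It takes a row shaped like `orders_raw` and looks up the four keys selected by `orders_flat`. The input row and the helper name `to_orders_flat` are hypothetical. Returning `None` for a missing key is an assumption standing in for a SQL NULL.

```python
def to_orders_flat(raw_row: dict) -> dict:
    """Model the orders_flat projection: one named column per map key."""
    data = raw_row["DATA"]
    return {
        "ID": data.get("id"),            # None stands in for NULL if the key is absent
        "PRODUCT": data.get("product"),
        "PRICE": data.get("price"),
        "USER_ID": data.get("user_id"),
    }


# Invented input row, shaped like the DATA map of orders_raw.
raw = {"DATA": {"id": "1", "product": "widget", "price": "9.99", "user_id": "42"}}

flat = to_orders_flat(raw)
print(flat)

# Every column is still a string, as the describe output shows for PRICE,
# so a numeric conversion is needed before summing or averaging prices.
price_as_number = float(flat["PRICE"])
print(price_as_number)
```

The describe output lists `PRICE` as `VARCHAR(STRING)`, which is why the last step converts it explicitly. Any numeric aggregation downstream would need a comparable conversion.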