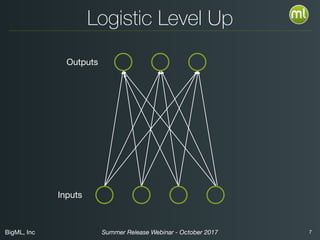

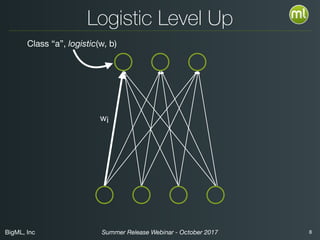

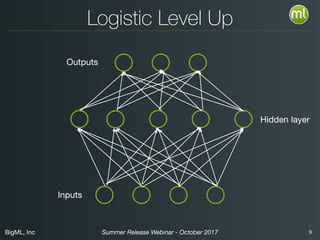

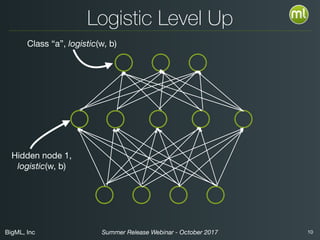

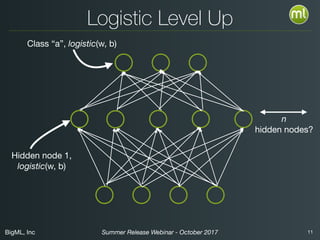

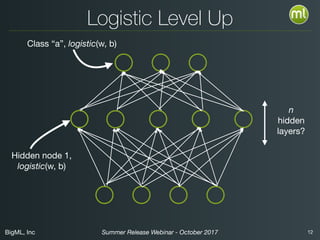

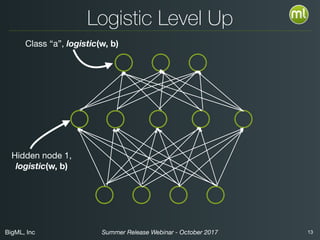

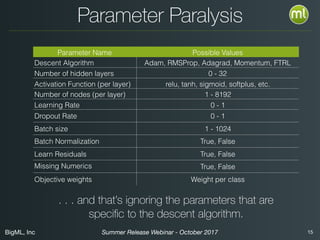

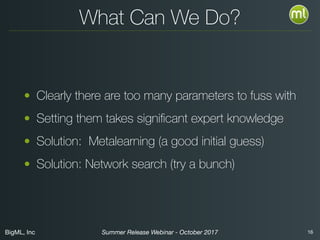

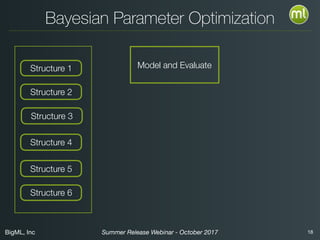

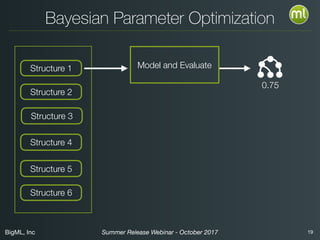

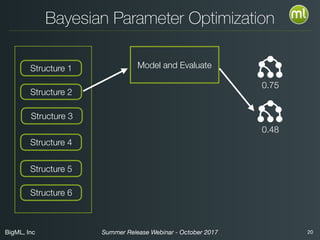

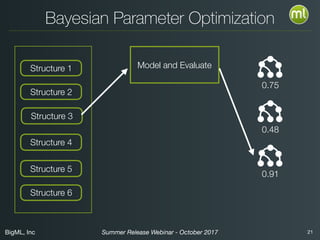

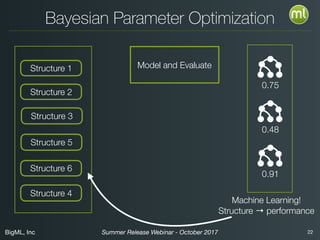

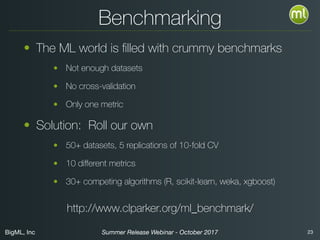

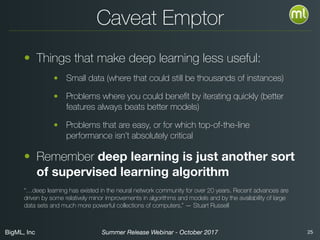

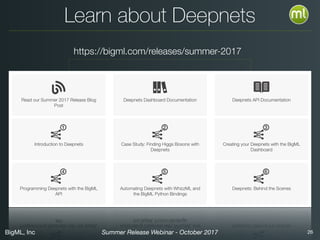

This webinar covers BigML's Summer 2017 release, which introduces deep neural networks designed to make advanced machine learning accessible even to novices. It addresses common misconceptions about deep learning, outlines the complexity of choosing parameter settings, and argues that solutions such as metalearning and network search are needed to simplify that process. It also presents benchmarks and warns of situations where deep learning may not be the best choice.