NTCIR15WWW3overview

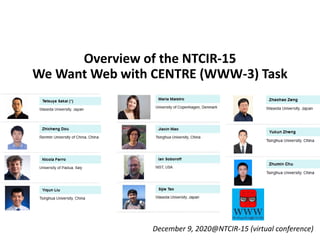

- 1. Overview of the NTCIR-15 We Want Web with CENTRE (WWW-3) Task December 9, 2020@NTCIR-15 (virtual conference)

- 2. Web Search is not a solved problem! • Are we making progress? (Example: does deep learning-based reranking really outperform a properly-tuned BM25 for any query?) • Can we replicate/reproduce the findings? (same method, same/different data, different research groups)

- 3. TALK OUTLINE • Chinese subtask • English subtask • CENTRE • Summary • NTCIR-16 WWW-4

- 4. Chinese subtask definition • Input: 80 WWW-2 topics and 80 new WWW-3 topics (participants had access to the original qrels for WWW-2) • Output: TREC-style run file • Target corpus: SogouT-16 • All runs were pooled and relevance assessments were conducted for the 80 new WWW-3 topics • Runs are also scored based on the 80 WWW-3 topics
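A TREC-style run file conventionally holds one whitespace-separated line per retrieved document: topic ID, the literal `Q0`, document ID, rank, score, and run tag. The sketch below parses that six-column layout. The function name `parse_trec_run` and the topic IDs, document IDs, and run tag in the example are made up for illustration and are not taken from the task data.

```python
from collections import defaultdict


def parse_trec_run(lines):
    """Parse TREC-style run lines into {topic_id: [(doc_id, rank, score), ...]}.

    Assumes the conventional six columns:
    topic_id Q0 doc_id rank score run_tag
    """
    run = defaultdict(list)
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        topic_id, _q0, doc_id, rank, score, _tag = line.split()
        run[topic_id].append((doc_id, int(rank), float(score)))
    # Order each topic's documents by the rank column.
    for topic_id in run:
        run[topic_id].sort(key=lambda entry: entry[1])
    return dict(run)


# Hypothetical example lines (IDs and tag are illustrative only).
example = [
    "0001 Q0 doc-b 2 11.5 DEMO-RUN",
    "0001 Q0 doc-a 1 12.3 DEMO-RUN",
    "0002 Q0 doc-c 1 9.8 DEMO-RUN",
]
parsed = parse_trec_run(example)
```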

- 5. Topics • The 80 queries were sampled from one day of Sogou’s query logs in August 2018; they comprise 54 torso queries, 13 tail queries and 13 hot queries.

- 6. Runs and qrels • 11 runs from 3 teams (including the organisers’ baseline) were submitted and pooled

- 7. Official results (nDCG and Q)

- 8. Official results (nERR and iRBU)

- 9. Randomised Tukey HSD test results (nDCG and Q) [Figure: which runs significantly outperform which]
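For readers unfamiliar with the test, here is a minimal sketch of a randomised Tukey HSD procedure as it is commonly described: each topic's scores are shuffled across systems, and the largest difference between permuted system means is compared with each observed pairwise difference. This is an illustration only, not the organisers' tool or settings. The function name, trial count, seed, and toy scores are all assumptions.

```python
import random
from itertools import combinations


def randomised_tukey_hsd(scores, trials=1000, seed=0):
    """Return {(system_a, system_b): p_value} for every pair of systems.

    scores: {system_name: [per-topic score, ...]}, all lists the same length
    and aligned by topic.
    """
    rng = random.Random(seed)
    systems = sorted(scores)
    n_topics = len(scores[systems[0]])
    means = {s: sum(scores[s]) / n_topics for s in systems}
    pairs = list(combinations(systems, 2))
    observed = {pair: abs(means[pair[0]] - means[pair[1]]) for pair in pairs}
    counts = {pair: 0 for pair in pairs}
    # One row per topic, one column per system.
    rows = [[scores[s][t] for s in systems] for t in range(n_topics)]
    for _ in range(trials):
        totals = [0.0] * len(systems)
        for row in rows:
            shuffled = row[:]
            rng.shuffle(shuffled)  # permute this topic's scores across systems
            for i, value in enumerate(shuffled):
                totals[i] += value
        # Largest difference between any two permuted system means.
        max_diff = (max(totals) - min(totals)) / n_topics
        for pair in pairs:
            if max_diff >= observed[pair]:
                counts[pair] += 1
    return {pair: counts[pair] / trials for pair in pairs}


# Toy per-topic scores (illustrative, not task results).
toy_scores = {
    "A": [0.80, 0.90, 0.85, 0.95, 0.90, 0.80, 0.85, 0.90],
    "A2": [0.80, 0.90, 0.85, 0.95, 0.90, 0.80, 0.85, 0.90],
    "C": [0.10, 0.20, 0.15, 0.25, 0.20, 0.10, 0.15, 0.20],
}
p_values = randomised_tukey_hsd(toy_scores, trials=1000, seed=0)
```

Because the comparison uses the maximum difference over all systems, the p-values account for the multiple pairwise comparisons within the run set.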

- 10. Randomised Tukey HSD test results (nERR and iRBU) [Figure: which runs significantly outperform which]

- 11. TALK OUTLINE • Chinese subtask • English subtask • CENTRE • Summary • NTCIR-16 WWW-4

- 12. English subtask definition • Input: 80 WWW-2 topics and 80 new WWW-3 topics (participants had access to the original qrels for WWW-2) • Output: TREC-style run file • Target corpus: ClueWeb12-B13 • All runs were pooled and relevance assessments were conducted for all 160 topics • Runs are scored based on the 80 WWW-3 topics

- 13. The original plan with a REV run (a revived system from NTCIR-14) • Replicability: compare a repli run with a REV run on the WWW-2 topics • Reproducibility: compare a repro run's effectiveness on the WWW-3 topics with a REV run's effectiveness on the WWW-2 topics • Progress: compare new runs and a REV run (SOTA from NTCIR-14) on the WWW-3 topics • Unfortunately, we could not obtain a reliable REV run that represents the SOTA from NTCIR-14 on the NTCIR-15 WWW-3 topics.

- 14. Runs and qrels • 37 runs from 9 teams (including the organisers’ baseline) were submitted and pooled

- 15. Official top 10 runs (nDCG and Q)

- 16. Official top 10 runs (nERR and iRBU)

- 17. Randomised Tukey HSD test results (nDCG and Q), top runs only [Figure: which runs significantly outperform which]

- 18. Randomised Tukey HSD test results (nERR and iRBU), top runs only [Figure: which runs significantly outperform which]

- 19. TALK OUTLINE • Chinese subtask • English subtask • CENTRE • Summary • NTCIR-16 WWW-4

- 20. Replicability and Reproducibility Terminology “An experimental result is not fully established unless it can be independently reproduced.” OLD ACM Terminology (Version 1.0): • Replicability: Different team, same experimental setup • Reproducibility: Different team, different experimental setup With the new ACM terminology (Version 1.1) replicability and reproducibility are swapped! Version 1.0: https://www.acm.org/publications/policies/artifact-review-badging Version 1.1: https://www.acm.org/publications/policies/artifact-review-and-badging-current

- 21. Replicability Measures • Ranking: Kendall’s τ and RBO • Absolute per-topic effectiveness: RMSE_abs • Statistical approach: p-value of paired t-test • Effect over a baseline: RMSE_Δ, Effect Ratio (ER_repli) and Delta Relative Improvement (ΔRI_repli) • Reproducibility measures: unpaired

- 22. Replicability & Reproducibility Runs • Target Runs submitted at WWW-2: • Advanced: THUIR-E-CO-MAN-Base2 (LambdaMART) • Baseline: THUIR-E-CO-PU-Base4 (BM25) • Replicability and Reproducibility runs submitted at WWW-3: • Advanced: KASYS-E-CO-REP-2 and SLWWW-E-CO-REP-4 • Baseline: KASYS-E-CO-REP-3 • Replicability: WWW-2 qrels and topics; • Reproducibility: WWW-2 qrels and topics compared against WWW-3 qrels and topics.

- 23. Replicability recap [Diagram: grid of WWW-2 runs and WWW-3 runs against WWW-2 topics and WWW-3 topics; each cell shows an A-run (advanced), a B-run (baseline), and the Effect between them]

- 24. Replicability Results: Ranking of Documents • Kendall’s τ and RBO: computed between the original ranking of documents and the replicated ranking; • The closer to 1 the better the replicated run; • Scores close to 0 mean that the original and replicated runs are not correlated; • It is extremely hard to obtain the same list of documents!
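The two ranking measures can be sketched as follows for a single topic. Two simplifications are assumptions of this sketch, not necessarily what the task used: Kendall's τ is computed only over documents present in both rankings, and RBO uses the extrapolated form for duplicate-free rankings truncated to a common depth, with persistence `p = 0.9` chosen arbitrarily. The function names are hypothetical.

```python
from itertools import combinations


def kendall_tau_common(original, replicated):
    """Kendall's tau-a restricted to documents that occur in both rankings."""
    pos_rep = {doc: i for i, doc in enumerate(replicated)}
    common = [doc for doc in original if doc in pos_rep]  # original order
    if len(common) < 2:
        return 0.0  # no pair to compare; treated as uncorrelated
    concordant = discordant = 0
    for a, b in combinations(common, 2):
        # a precedes b in the original ranking by construction.
        if pos_rep[a] < pos_rep[b]:
            concordant += 1
        else:
            discordant += 1
    return (concordant - discordant) / (concordant + discordant)


def rbo_extrapolated(original, replicated, p=0.9):
    """Extrapolated rank-biased overlap at the common depth of both rankings."""
    k = min(len(original), len(replicated))
    if k == 0:
        return 0.0
    seen_a, seen_b = set(), set()
    overlap = 0      # size of the intersection of the two depth-d prefixes
    weighted = 0.0   # running sum of (overlap / d) * p**d
    for d in range(1, k + 1):
        a, b = original[d - 1], replicated[d - 1]
        if a == b:
            overlap += 1
        else:
            if a in seen_b:
                overlap += 1
            if b in seen_a:
                overlap += 1
        seen_a.add(a)
        seen_b.add(b)
        weighted += (overlap / d) * p ** d
    return (overlap / k) * p ** k + ((1 - p) / p) * weighted


# Toy rankings (document IDs are illustrative only).
orig_ranking = ["d1", "d2", "d3", "d4"]
same_ranking = list(orig_ranking)
reversed_ranking = list(reversed(orig_ranking))
disjoint_ranking = ["x1", "x2", "x3", "x4"]
```

Under these assumptions an identical ranking scores 1 on both measures, a reversed one scores τ = -1, and a disjoint one scores 0 on both.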

- 25. Replicability Results: RMSE and p-values • RMSE: the closer to 0 the better; • p-value: a small p-value means that the runs are significantly different (without specifying which one is better); • Observed: large RMSEs and very small p-values
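As a rough illustration of these two checks, the sketch below computes the RMSE between per-topic scores of an original and a replicated run, and the paired t statistic over the same topics. The scores are toy values and the function names are hypothetical. Turning the statistic into a p-value needs a t-distribution; a library routine such as `scipy.stats.ttest_rel` would supply it.

```python
import math


def rmse(original, replicated):
    """Root mean square error between per-topic scores (aligned by topic)."""
    squared = [(o - r) ** 2 for o, r in zip(original, replicated)]
    return math.sqrt(sum(squared) / len(squared))


def paired_t_statistic(original, replicated):
    """t statistic of a paired t-test on per-topic score differences.

    Assumes at least two topics and non-constant differences
    (otherwise the variance is zero and the statistic is undefined).
    """
    diffs = [o - r for o, r in zip(original, replicated)]
    n = len(diffs)
    mean_diff = sum(diffs) / n
    variance = sum((d - mean_diff) ** 2 for d in diffs) / (n - 1)
    return mean_diff / math.sqrt(variance / n)


# Toy per-topic scores (illustrative, not task results).
orig_scores = [0.5, 0.6, 0.7, 0.8]
repl_scores = [0.4, 0.6, 0.6, 0.8]
error = rmse(orig_scores, repl_scores)
t_stat = paired_t_statistic(orig_scores, repl_scores)
```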

- 26. Replicability Results: Effect over a Baseline • Implications of ER scores: • ER ≤ 0: Failed replication, the A-run failed to outperform the B-run; • 0 < ER < 1: Somewhat successful, the replicated effect is smaller than the original effect; • ER = 1: Perfect replication; • ER > 1: Successful replication, the replicated effect is larger than the original effect. • ΔRI is interpreted similarly, but 0 indicates perfect replication.
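A small sketch of both measures, assuming the definitions in [Breuer+ SIGIR2020] as understood here: ER is the mean per-topic improvement of the replicated A-run over its B-run divided by the same quantity for the original runs, and ΔRI is the original relative improvement minus the replicated one. Function names and scores are illustrative.

```python
def mean(values):
    return sum(values) / len(values)


def effect_ratio(orig_a, orig_b, rep_a, rep_b):
    """ER: replicated mean per-topic improvement over the original one."""
    orig_effect = mean([a - b for a, b in zip(orig_a, orig_b)])
    rep_effect = mean([a - b for a, b in zip(rep_a, rep_b)])
    return rep_effect / orig_effect


def relative_improvement(a_scores, b_scores):
    """RI: improvement of the A-run's mean score relative to the B-run's."""
    return (mean(a_scores) - mean(b_scores)) / mean(b_scores)


def delta_ri(orig_a, orig_b, rep_a, rep_b):
    """Delta RI: original RI minus replicated RI (0 means a perfect match)."""
    return relative_improvement(orig_a, orig_b) - relative_improvement(rep_a, rep_b)


# Toy per-topic scores (illustrative, not task results).
orig_a, orig_b = [0.6, 0.8], [0.4, 0.6]   # original advanced / baseline runs
rep_a, rep_b = [0.5, 0.7], [0.4, 0.6]     # replicated advanced / baseline runs
er = effect_ratio(orig_a, orig_b, rep_a, rep_b)
dri = delta_ri(orig_a, orig_b, rep_a, rep_b)
```

In this toy case ER is about 0.5 and ΔRI about 0.2, so the replicated effect is smaller than the original, which is the "somewhat successful" case on the slide.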

- 27. Reproducibility recap [Diagram: grid of WWW-2 runs and WWW-3 runs against WWW-2 topics and WWW-3 topics; each cell shows an A-run (advanced), a B-run (baseline), and the Effect between them]

- 28. Reproducibility Results: p-values and Effects over a Baseline • Recall that there is no target original run; • Reproducibility is even harder than replicability!

- 29. TALK OUTLINE • Chinese subtask • English subtask • CENTRE • Summary • NTCIR-16 WWW-4

- 30. Summary • Chinese subtask (only 3 teams) Best run: RUCIR-C-CD-NEW-4 • English subtask (9 teams) Best runs: KASYS-E-CO-NEW-{1,4} and mpii-E-CO-NEW-1. KASYS uses a BERT-based method from [Yilmaz+ EMNLP2019]. • CENTRE: We need a community effort since replicability and reproducibility are very tough problems!

- 31. Thank you participants! And many thanks to the NTCIR PC chairs, GCs, and staff!

- 32. TALK OUTLINE • Chinese subtask • English subtask • CENTRE • Summary • NTCIR-16 WWW-4

- 33. WWW will be back (IF our task proposal is accepted) • English subtask only • New English corpus! (Common Crawl?) • New target for replicability, reproducibility, and a baseline for progress: University of Tsukuba’s BERT-based run from WWW-3 • Topics to be released in October 2021 • Run submission deadline in November 2021 • Please follow @ntcirwww on Twitter!

- 34. Selected references • [Breuer+ SIGIR2020] How to Measure the Reproducibility of System-oriented IR Experiments, ACM SIGIR 2020. (More about CENTRE) • [Sakai+ TOIS2020] Retrieval Evaluation Measures that Agree with Users' SERP Preferences: Traditional, Preference-based, and Diversity Measures, ACM TOIS 39(2), to appear, 2020. (Evaluation measures including iRBU) • [Yilmaz+ EMNLP2019] Cross-Domain Modeling of Sentence-Level Evidence for Document Retrieval, EMNLP 2019. (University of Tsukuba’s top run is based on this)