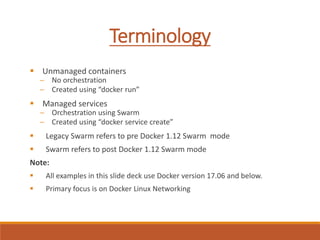

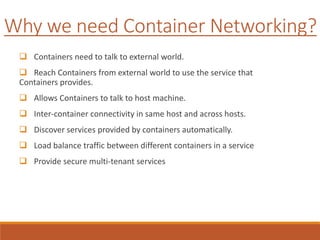

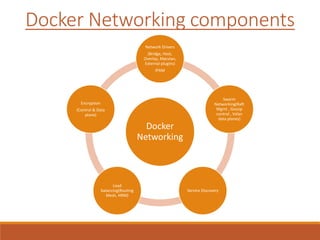

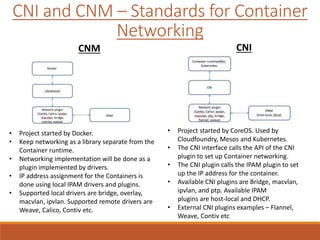

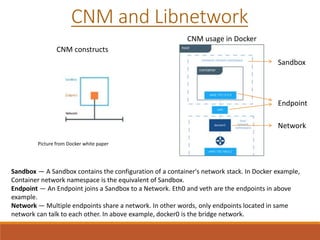

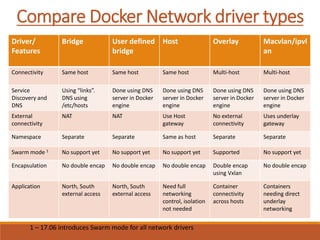

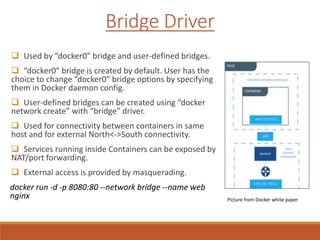

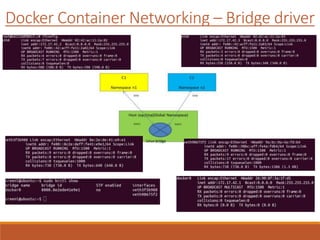

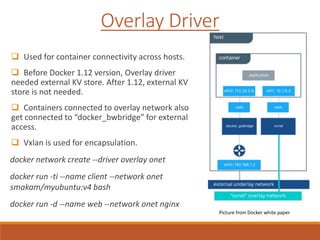

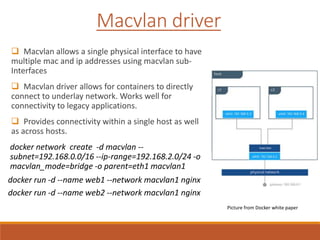

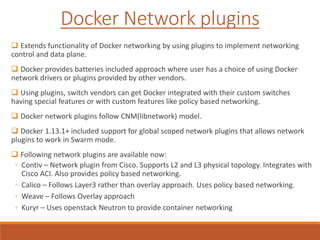

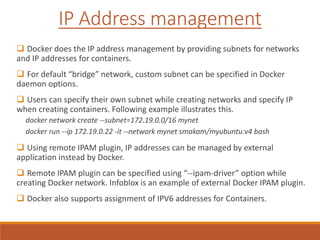

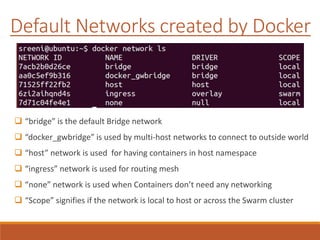

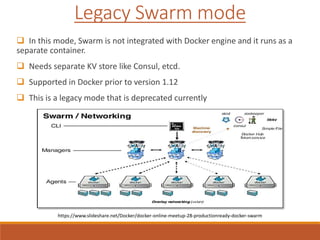

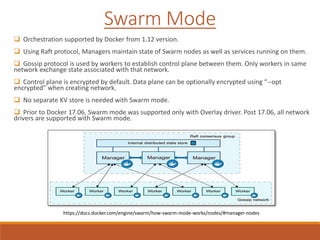

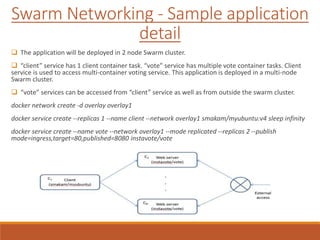

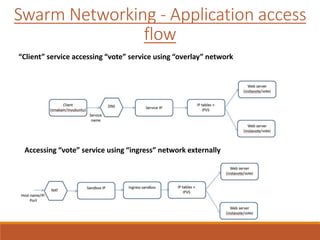

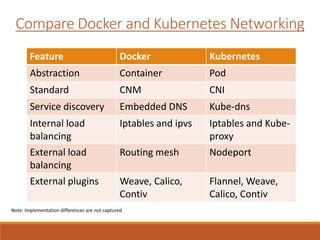

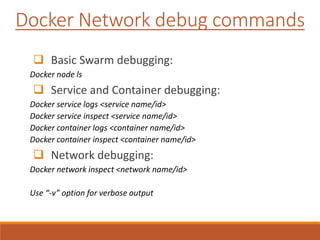

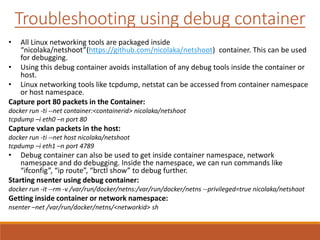

The document gives an overview of Docker networking as of version 17.06. It opens by introducing the presenter and defining key terminology, then explains why container networking is needed and compares container networking with VM networking. It outlines the major components of Docker networking: network drivers, IPAM (IP address management), Swarm networking, service discovery, and load balancing. It explains the CNM (Container Network Model) and CNI (Container Network Interface) standards and IP address management, and gives examples of network drivers such as bridge, overlay, and macvlan. It then covers default networks, Swarm mode, service discovery, and load balancing. It concludes with debugging commands and a reference slide.
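
To make the IP address management idea from the summary concrete, here is a minimal sketch in Python of what an address manager conceptually does: carve per-network subnets out of a larger pool, reserve a gateway, and hand out host addresses. The class `SimpleIPAM`, its method names, and the `10.0.0.0/16` pool are hypothetical and chosen only for illustration; this is not Docker's IPAM driver or its API.

```python
import ipaddress


class SimpleIPAM:
    """Toy address manager (hypothetical, not Docker's implementation)."""

    def __init__(self, pool_cidr, subnet_prefix):
        pool = ipaddress.ip_network(pool_cidr)
        # Lazily yields each subnet of the requested size within the pool.
        self._free_subnets = pool.subnets(new_prefix=subnet_prefix)
        # network name -> (subnet, iterator over addresses not yet handed out)
        self._networks = {}

    def create_network(self, name):
        """Assign the next free subnet to `name` and reserve its gateway."""
        if name in self._networks:
            raise ValueError(f"network {name!r} already exists")
        subnet = next(self._free_subnets)
        hosts = iter(subnet.hosts())
        gateway = next(hosts)  # assumption: first usable address is the gateway
        self._networks[name] = (subnet, hosts)
        return subnet, gateway

    def allocate_address(self, name):
        """Hand out the next unused host address on the named network."""
        _subnet, hosts = self._networks[name]
        return next(hosts)


# Usage: two networks carved from one pool, one container address on the first.
ipam = SimpleIPAM("10.0.0.0/16", 24)
subnet_a, gateway_a = ipam.create_network("net-a")  # 10.0.0.0/24, gateway 10.0.0.1
container_ip = ipam.allocate_address("net-a")       # 10.0.0.2
subnet_b, gateway_b = ipam.create_network("net-b")  # 10.0.1.0/24, gateway 10.0.1.1
```

The sketch never releases addresses and simply raises `StopIteration` when a pool or subnet is exhausted; a real address manager would track released addresses and report exhaustion explicitly.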