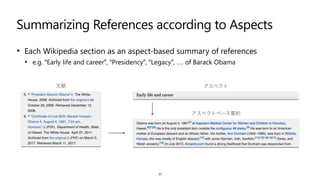

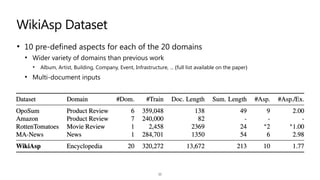

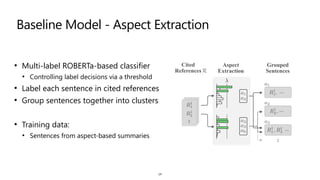

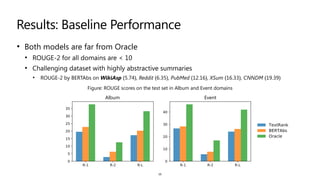

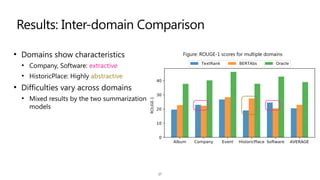

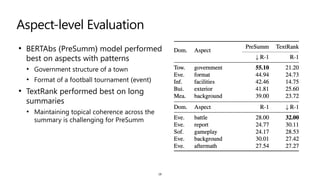

The document introduces WikiAsp, a multi-domain dataset for aspect-based summarization. WikiAsp contains summaries from 20 domains, such as albums, artists, buildings, and companies. For each domain, it defines 10 aspects that segment the information, such as "background" or "aftermath". The dataset was built from Wikipedia articles, using their cited references and their sections. Baseline models achieve low ROUGE scores on the dataset, which indicates that aspect-based summarization remains challenging, particularly because the domains differ in their characteristics. The dataset is intended to support the development of models that generate targeted summaries for different aspects.
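
The summary above refers to ROUGE scores without saying how they are computed. As a rough illustration only, the sketch below computes a simplified ROUGE-1 F1 score (unigram overlap) between a generated summary and a reference. It assumes lowercased whitespace tokenization and no stemming, so its numbers will differ from those of the official ROUGE toolkit; the function name `rouge1_f1` is hypothetical and not taken from the slides.

```python
from collections import Counter


def rouge1_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-1 F1: unigram overlap between two texts.

    Assumption: tokens are lowercased whitespace-separated words.
    """
    cand_counts = Counter(candidate.lower().split())
    ref_counts = Counter(reference.lower().split())

    # Clipped overlap: each word counts at most as often as it
    # appears in both texts.
    overlap = sum((cand_counts & ref_counts).values())
    if overlap == 0:
        return 0.0

    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    score = rouge1_f1(
        "the album was praised",
        "the album was widely praised",
    )
    print(f"ROUGE-1 F1: {score:.3f}")
```

A low score under this measure means the generated summary shares few words with the reference, which is the sense in which the baselines are described as struggling.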

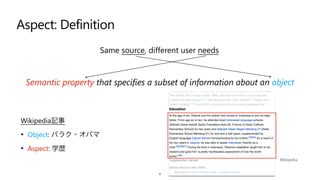

![Aspect: Definition

Semantic property that specifies a subset of information about an object

Scientific paper

• Object: "Attention Is All You Need"

• Aspect: "Multi-Head Attention"

Same source, different user needs

[Vaswani+17]](https://image.slidesharecdn.com/wikiaspcolloqium-211230001345/85/WikiAsp-A-Dataset-for-Multi-domain-Aspect-based-Summarization-5-320.jpg)

![Evolution of Aspects - Same use, Different Terms

• "Aspects" [Snyder&Barzilay+07]

• Aspect Ranking

• Restaurant reviews

• "Keywords" [Gamon+05]

• Aspect-based Sentiment Analysis

• Customer Feedback

• Aspect-based Summarization

• Proposed by [Titov&McDonald08]

• Customer review summarization

• "Feature" [Hu&Liu04]

• Extracting customer reviews

• Focused on a product "feature"

Timeline axis: 1972, 1983, 1992, 2004, 2005, 2007, 2008](https://image.slidesharecdn.com/wikiaspcolloqium-211230001345/85/WikiAsp-A-Dataset-for-Multi-domain-Aspect-based-Summarization-6-320.jpg)

![Evolution of Aspects - Task Developments

Timeline axis: 1972, 1983, 1992, 2004, 2005, 2007, 2008, 2016, 2020

• Aspect-based Summarization

• Focuses on topic-level content control, with limited application domains

• Product reviews [Angelidis&Lapata+18], movie reviews [Wang&Ling16], news domains [Krishna&Srinivasan18]

• Abstractive Question Answering

• Emphasizes the ability to obtain the most relevant "answer" rather than comprehensive text

• Wikipedia [Lewis+20]](https://image.slidesharecdn.com/wikiaspcolloqium-211230001345/85/WikiAsp-A-Dataset-for-Multi-domain-Aspect-based-Summarization-7-320.jpg)

![Wikipedia: Large and Domain-diverse Dataset for Summarization

• Human authors compose Wikipedia articles from references

• Covers various domains from an encyclopedic standpoint

• Previously formulated as a summarization task [Liu+18]

• Input: Cited (web) references

• Output: Lead section of a Wikipedia article

References

First paragraph](https://image.slidesharecdn.com/wikiaspcolloqium-211230001345/85/WikiAsp-A-Dataset-for-Multi-domain-Aspect-based-Summarization-9-320.jpg)

![Data Collection

1. Collect base set of documents

• Collect (references, article) pairs from WikiSum [Liu+18]

2. Determine domains

• 20 DBpedia ontology classes, constructed bottom-up

3. Find salient aspects

• 10 section titles that mostly describe textual content

• e.g. ✘ “results” ✔ “background”

| Aspect | Count | Take? |
| --- | --- | --- |
| Background | 4326 | ✔ |
| Aftermath | 3092 | ✔ |
| Results | 2733 | ✘ |
| ⋮ | ⋮ | ⋮ |
| History | 1735 | ✔ |
| ⋮ | ⋮ | ⋮ |

DBpedia Ontology Hierarchy](https://image.slidesharecdn.com/wikiaspcolloqium-211230001345/85/WikiAsp-A-Dataset-for-Multi-domain-Aspect-based-Summarization-11-320.jpg)
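
Step 3 of the data collection slide, finding salient aspects, can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: section titles are counted across the articles of one domain, titles judged not to describe textual content (the slide's "results" example) are passed in as a manually chosen `excluded` set, and the 10 most frequent remaining titles are kept. The function name `select_aspects` and the toy input are hypothetical.

```python
from collections import Counter
from typing import Iterable, List, Set


def select_aspects(
    articles: Iterable[List[str]],
    excluded: Set[str],
    k: int = 10,
) -> List[str]:
    """Return the k most frequent section titles not in `excluded`.

    Each article is given as the list of its section titles.
    Assumption: the manual "Take?" decision from the slide is
    represented by the `excluded` set of lowercased titles.
    """
    counts = Counter(
        title.strip().lower()
        for sections in articles
        for title in sections
    )
    ranked = [t for t, _ in counts.most_common() if t not in excluded]
    return ranked[:k]


if __name__ == "__main__":
    # Toy section-title lists for a handful of articles in one domain.
    domain_articles = [
        ["Background", "Results", "Aftermath"],
        ["Background", "Results", "Aftermath"],
        ["Background", "Results"],
        ["Background", "History"],
    ]
    print(select_aspects(domain_articles, excluded={"results"}, k=2))
```

Keeping the exclusion list explicit mirrors the slide, where a frequent title such as "results" is rejected even though it ranks above "history" by count.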

![Baseline Model - Summarization

• Standard summarization setting

• Input: Aspect-clustered sentences

• Output: Aspect-based summary

• Models

• TextRank [Barrios+16]

• Unsupervised extractive model

• BERTAbs [Liu+19]

• BERT-based abstractive model

• Trained on each domain separately](https://image.slidesharecdn.com/wikiaspcolloqium-211230001345/85/WikiAsp-A-Dataset-for-Multi-domain-Aspect-based-Summarization-15-320.jpg)
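
The slide names TextRank [Barrios+16] as the unsupervised extractive baseline. To give a feel for the general idea, the sketch below ranks sentences with a PageRank-style iteration over a sentence similarity graph and returns the top-scoring ones in their original order. It is a simplified illustration, not the baseline used in the slides: it assumes whitespace tokenization and the word-overlap similarity of the original TextRank formulation rather than the variant in [Barrios+16], and the function name `textrank` and its parameters are hypothetical.

```python
import math
from typing import List


def textrank(
    sentences: List[str],
    top_n: int = 2,
    damping: float = 0.85,
    iterations: int = 50,
) -> List[str]:
    """Pick the top_n sentences by a simplified TextRank score."""
    tokens = [set(s.lower().split()) for s in sentences]
    n = len(sentences)

    def similarity(a: set, b: set) -> float:
        # Shared words, normalized by the log sentence lengths.
        if len(a) < 2 or len(b) < 2:
            return 0.0  # log(1) = 0 would give a zero normalizer
        return len(a & b) / (math.log(len(a)) + math.log(len(b)))

    # Weighted, undirected similarity graph without self-loops.
    weights = [
        [similarity(tokens[i], tokens[j]) if i != j else 0.0 for j in range(n)]
        for i in range(n)
    ]
    out_sum = [sum(row) for row in weights]

    # Power iteration of the weighted PageRank update.
    scores = [1.0] * n
    for _ in range(iterations):
        scores = [
            (1 - damping)
            + damping
            * sum(
                weights[j][i] / out_sum[j] * scores[j]
                for j in range(n)
                if out_sum[j] > 0
            )
            for i in range(n)
        ]

    best = sorted(range(n), key=lambda i: scores[i], reverse=True)[:top_n]
    return [sentences[i] for i in sorted(best)]


if __name__ == "__main__":
    clustered = [
        "the album received positive reviews from critics",
        "critics praised the album and its production",
        "the weather was cold that winter morning",
        "reviews from critics praised the production of the album",
    ]
    for sentence in textrank(clustered, top_n=2):
        print(sentence)
```

In the setting on the slide, the input would be the sentences clustered under one aspect, and the selected sentences would form that aspect's extractive summary.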

![Domain-specific Challenges

• Pronoun Resolution for Opinion-based Inputs

• Some source documents used for aspects like "Public reception" are subjective

• Handling mixed-person texts is necessary for certain domains

"A magical album that you can listen to and enjoy many times."

"I would always suggest this album to anyone."

[From Wiki references for Discovery (Daft Punk album)]

• Chronological Explanation

• Describing the history of an entity, the timeline of an event, etc. requires chronologically consistent content organization

"On 13 March 1815, ..., the powers at the Congress of Vienna declared him an outlaw. ... As 17 June drew to a close, Wellington's army had arrived at its position at Waterloo."

[From Wiki article for Battle of Waterloo]](https://image.slidesharecdn.com/wikiaspcolloqium-211230001345/85/WikiAsp-A-Dataset-for-Multi-domain-Aspect-based-Summarization-19-320.jpg)