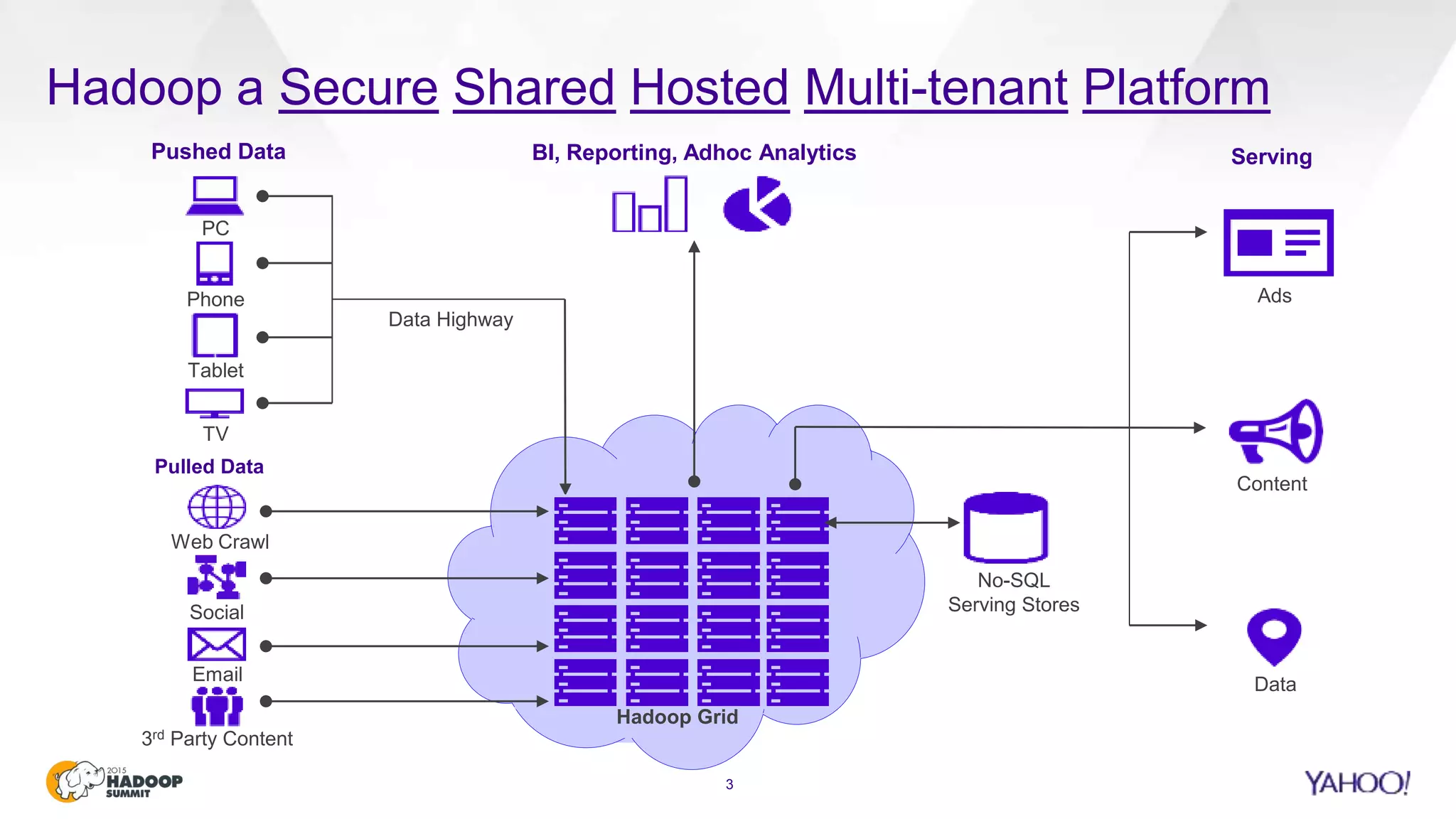

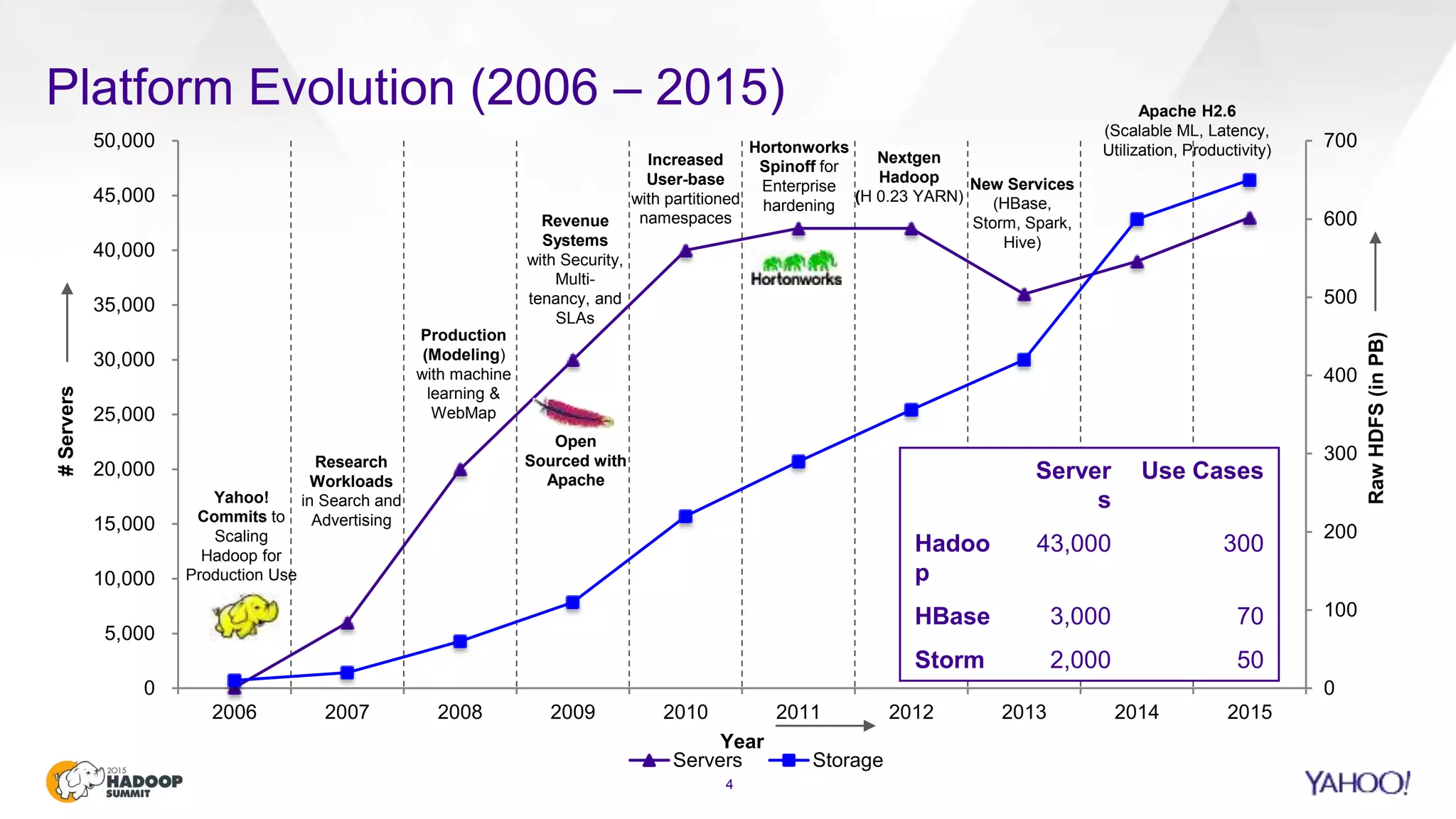

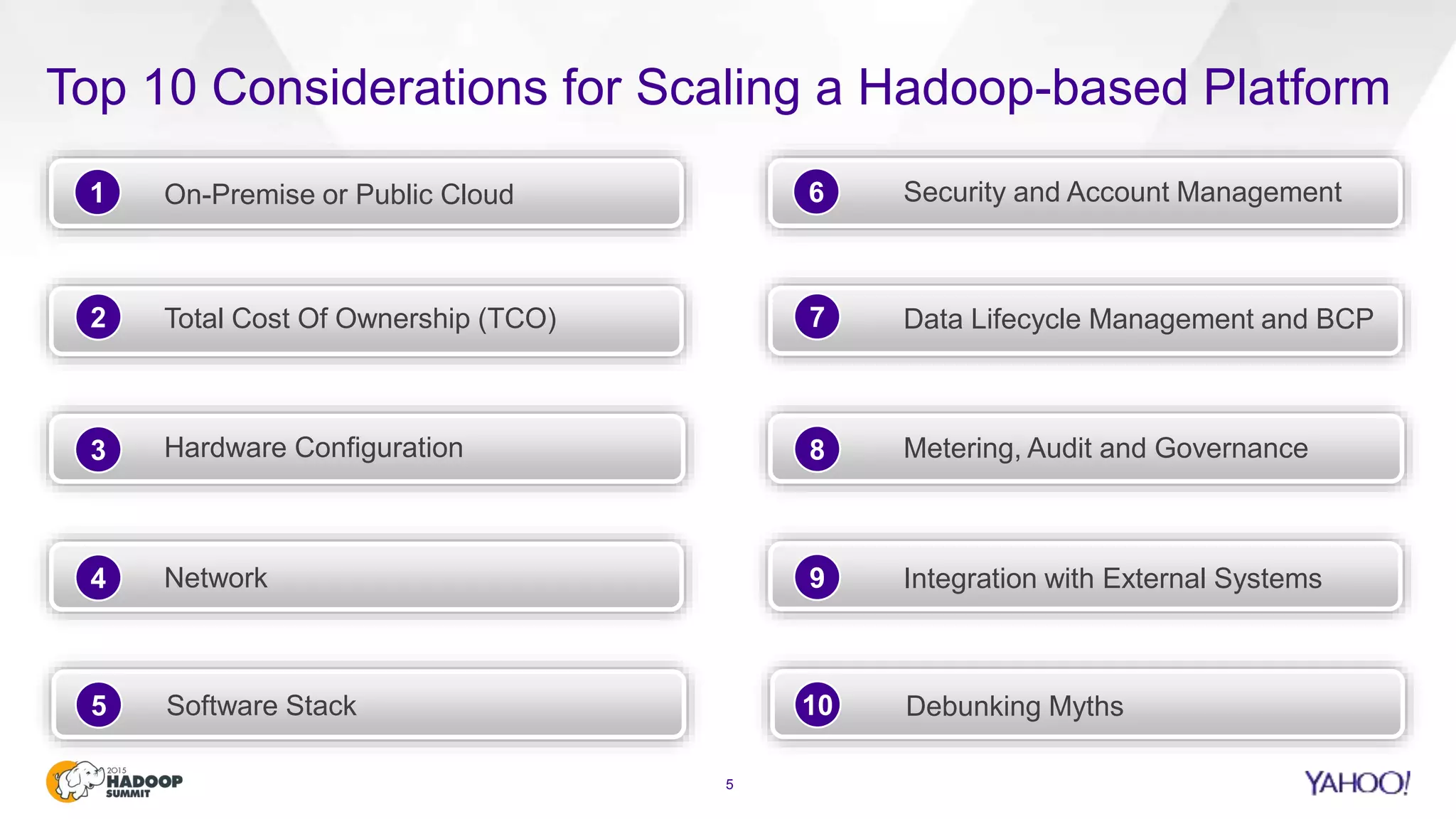

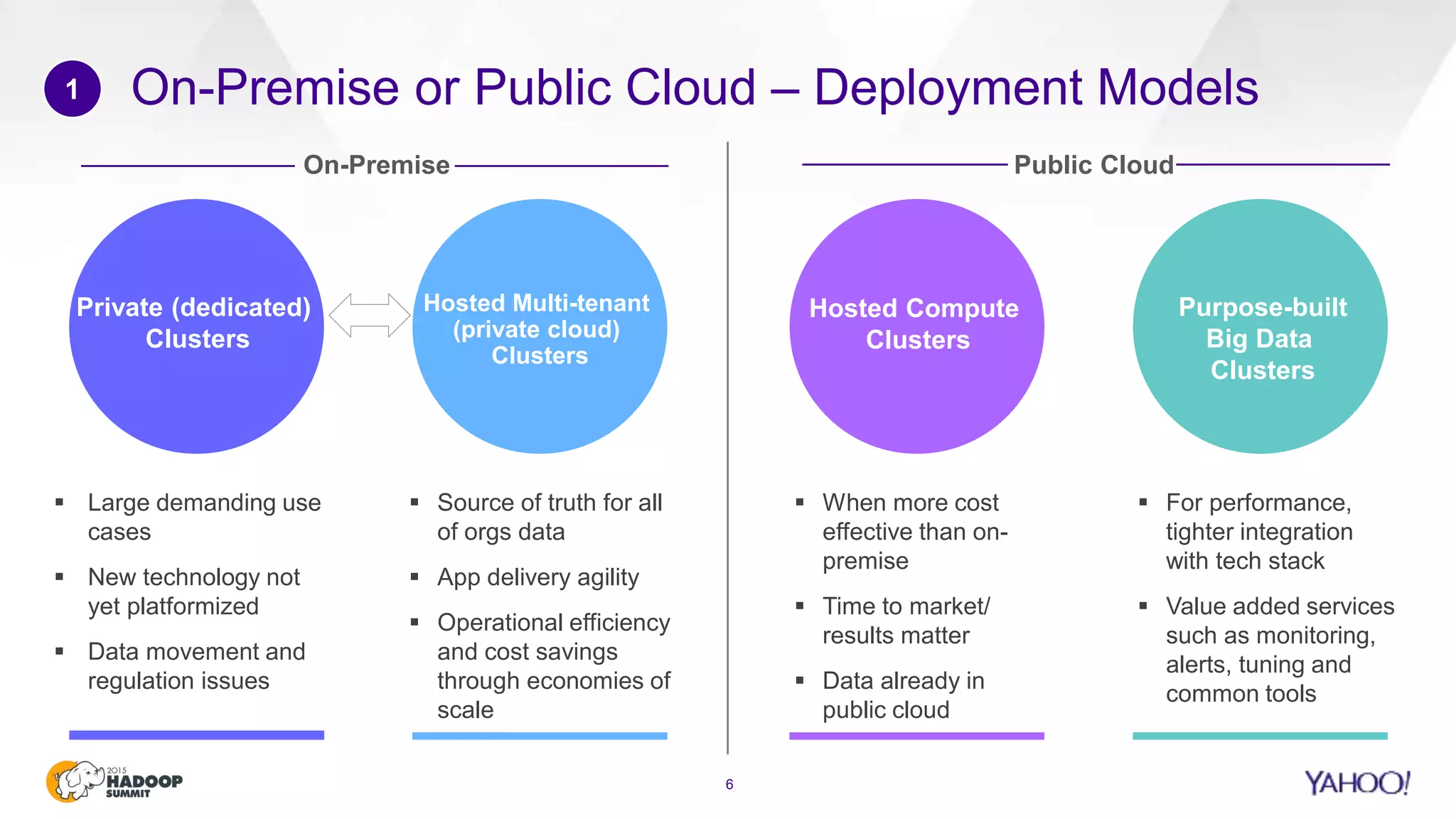

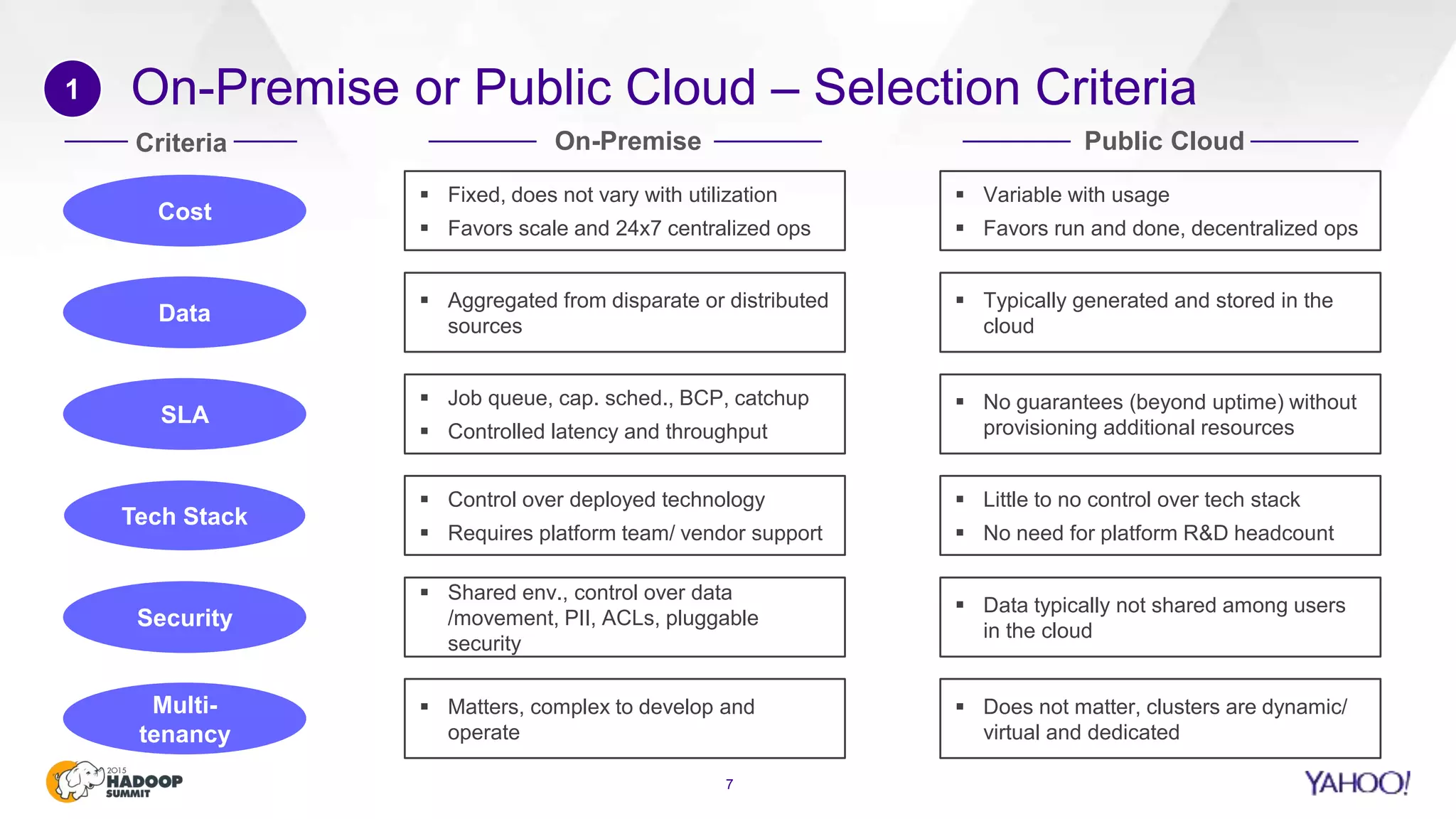

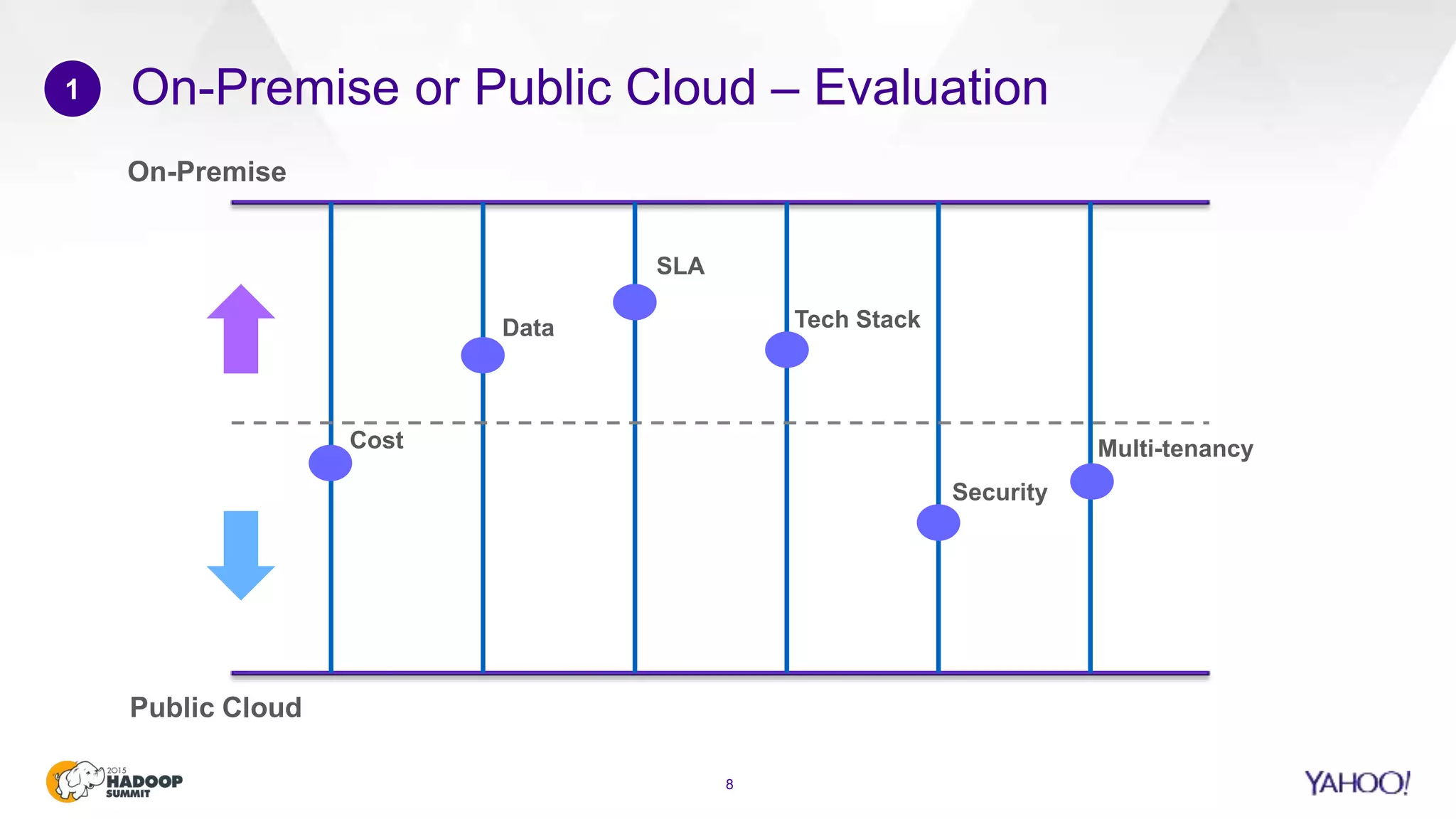

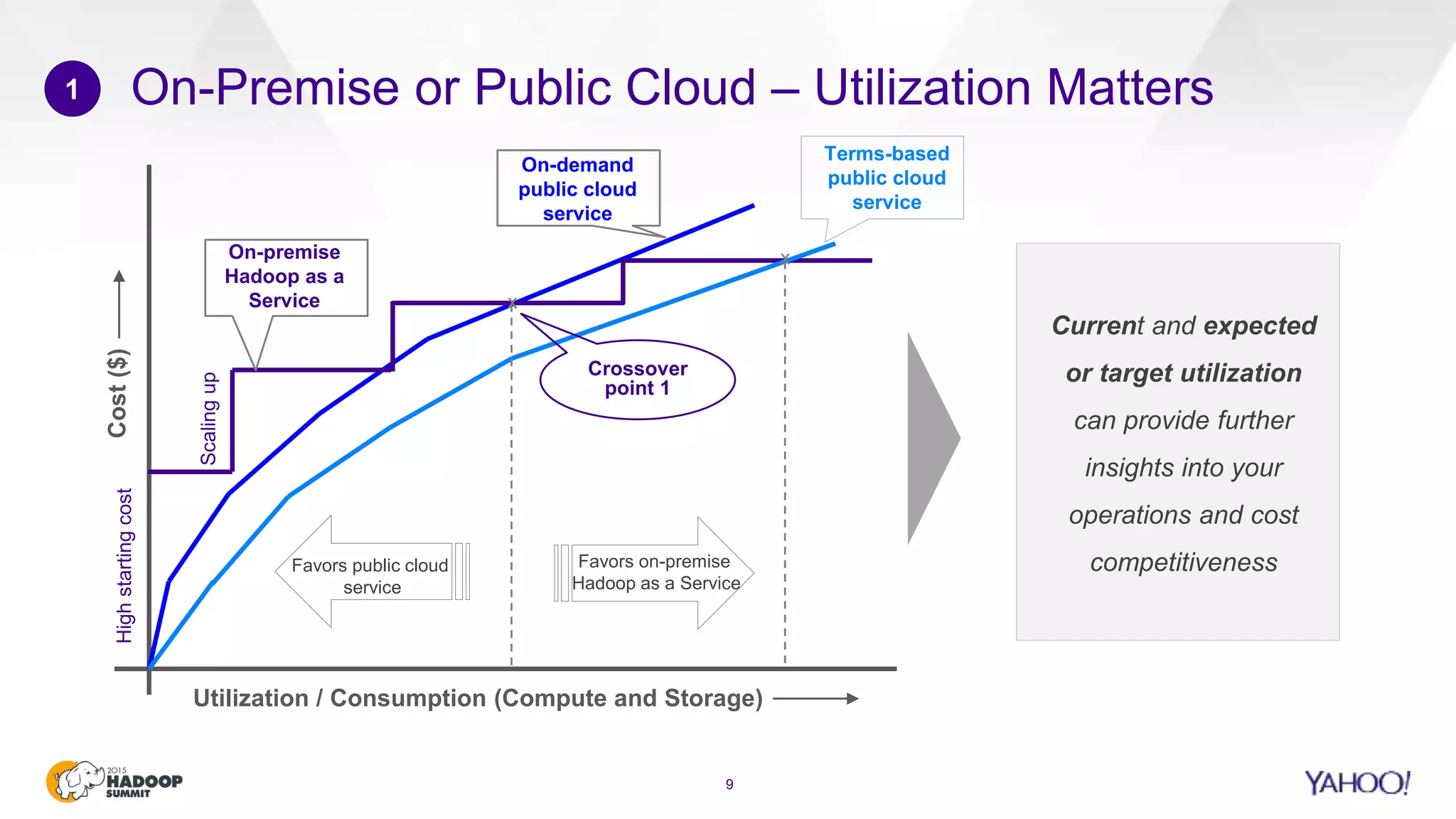

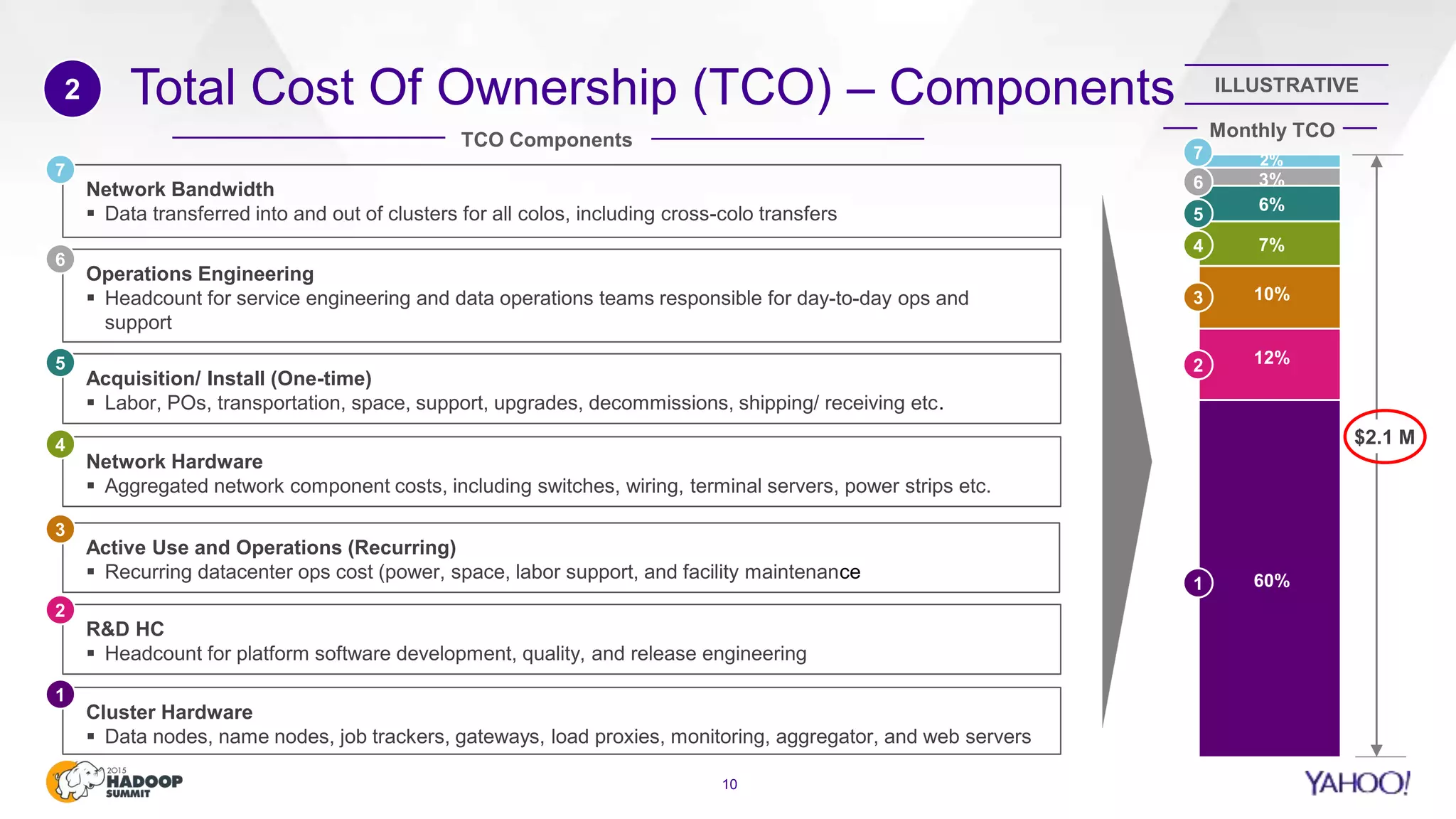

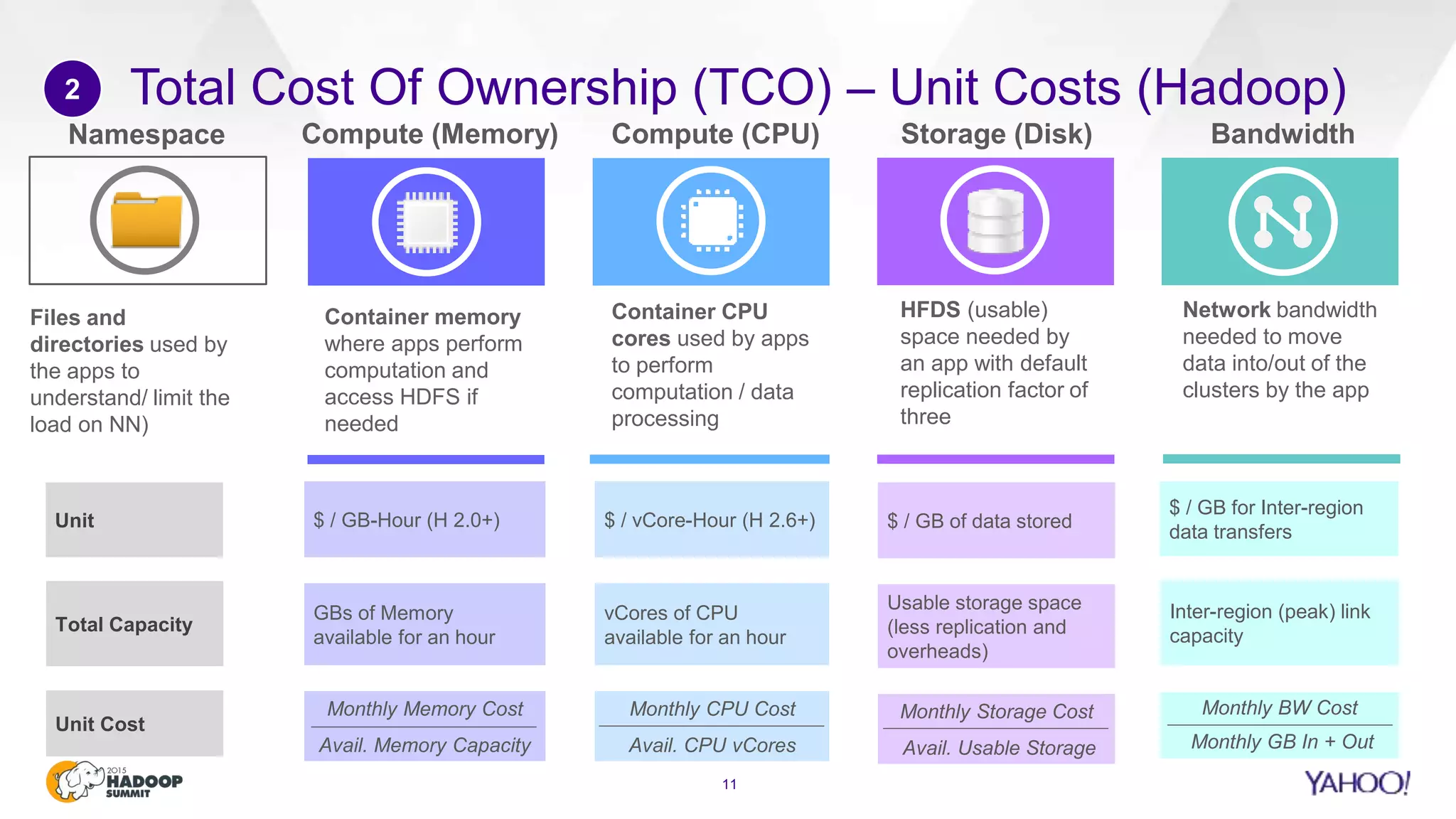

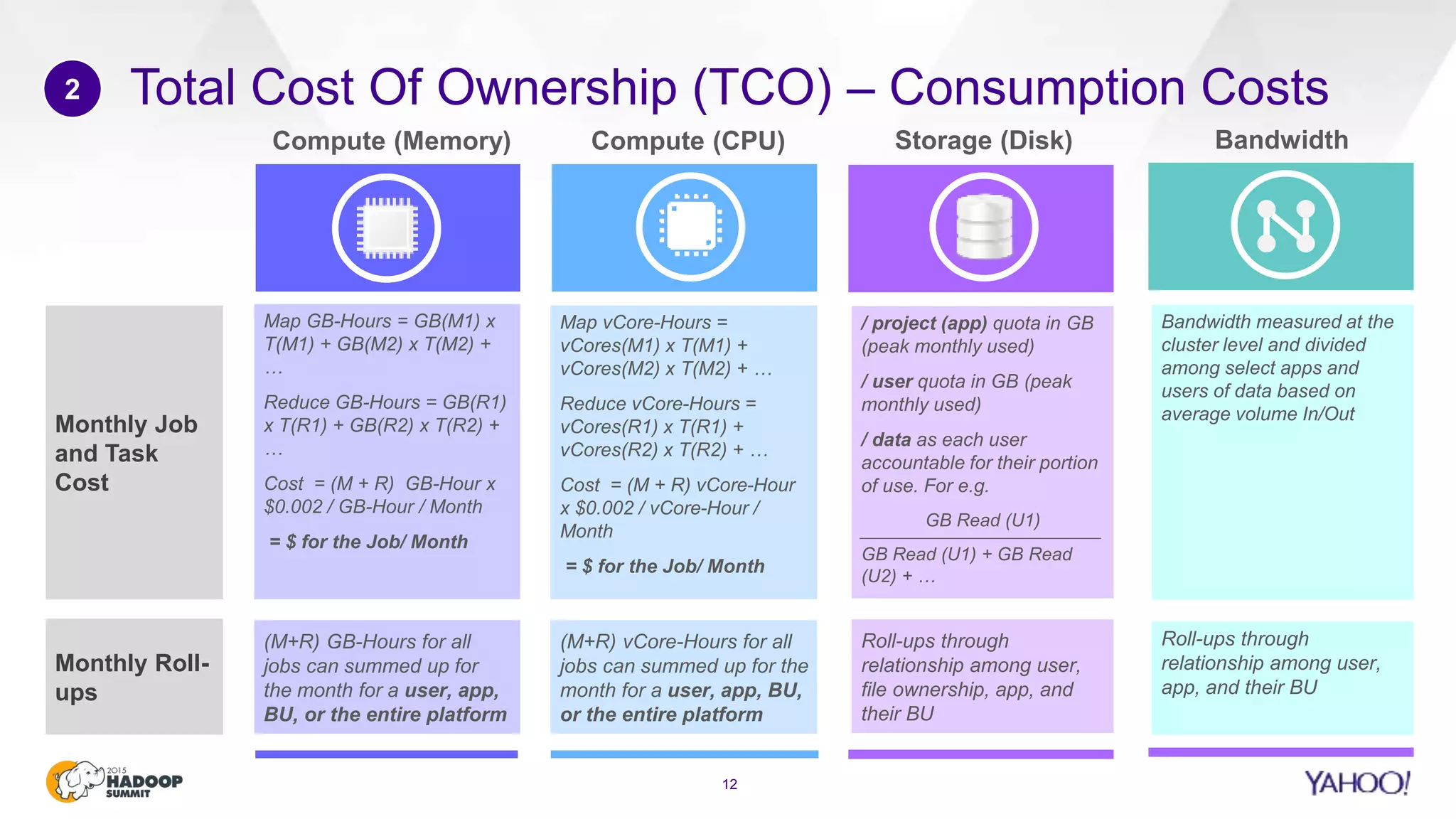

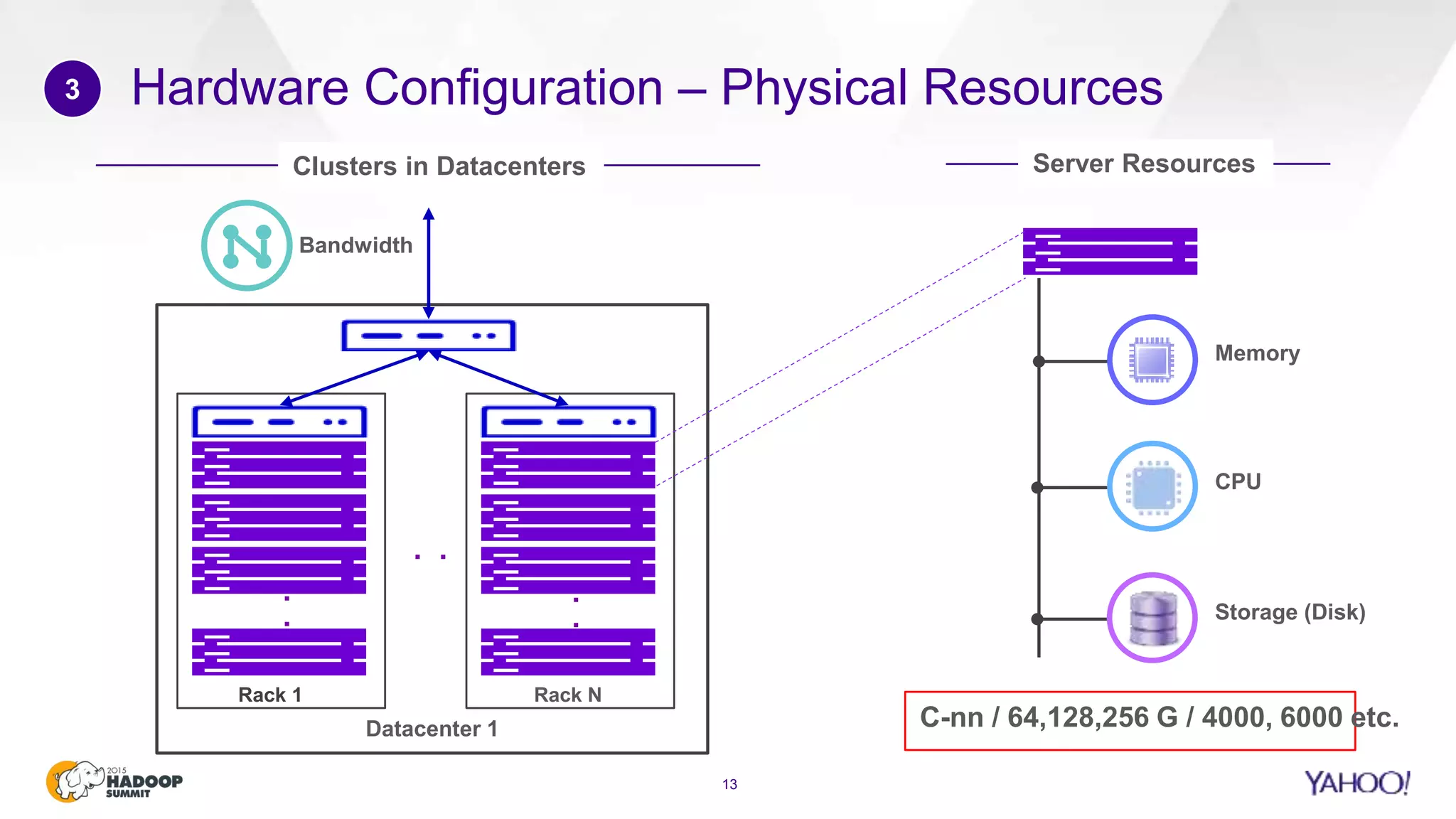

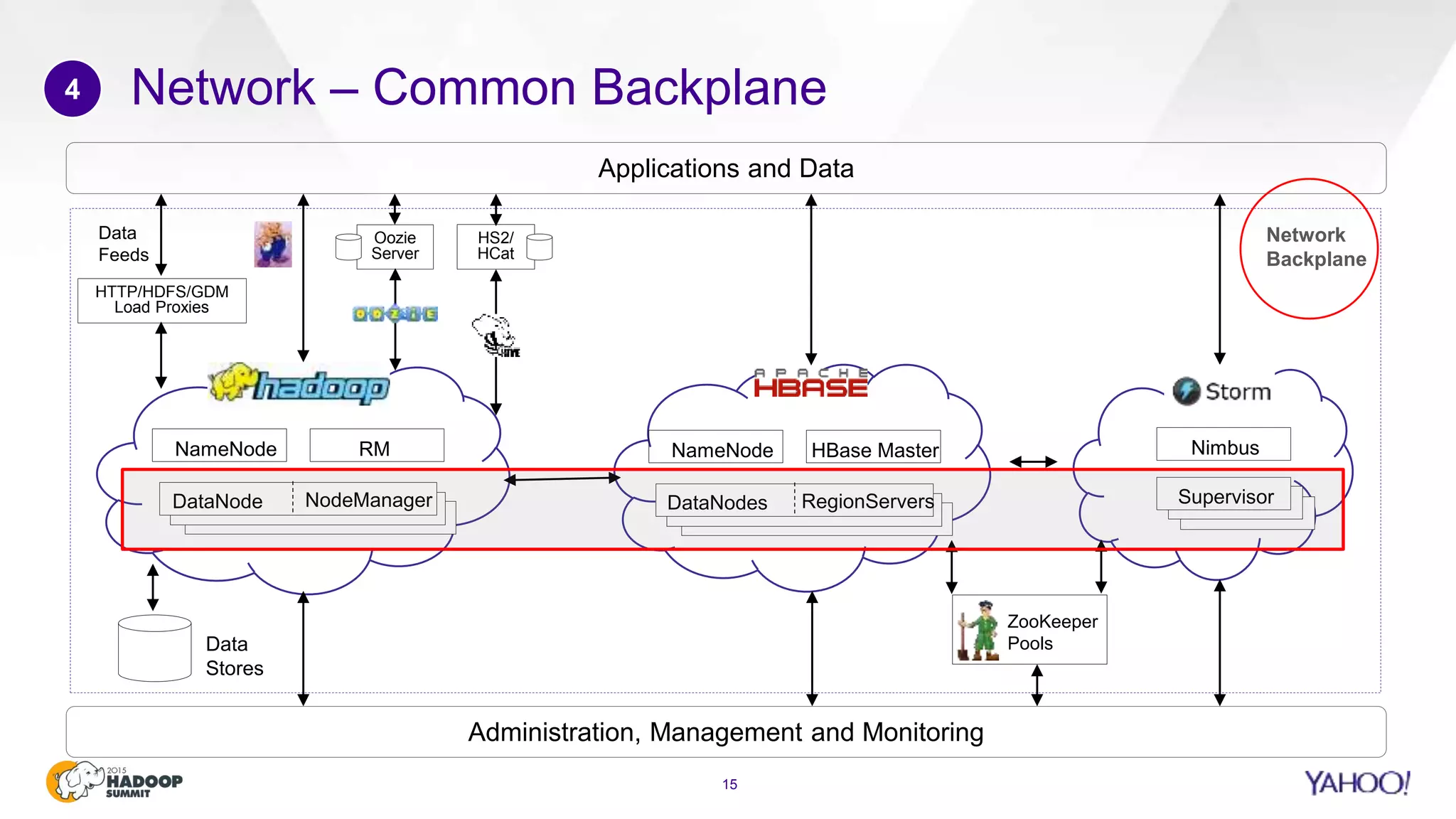

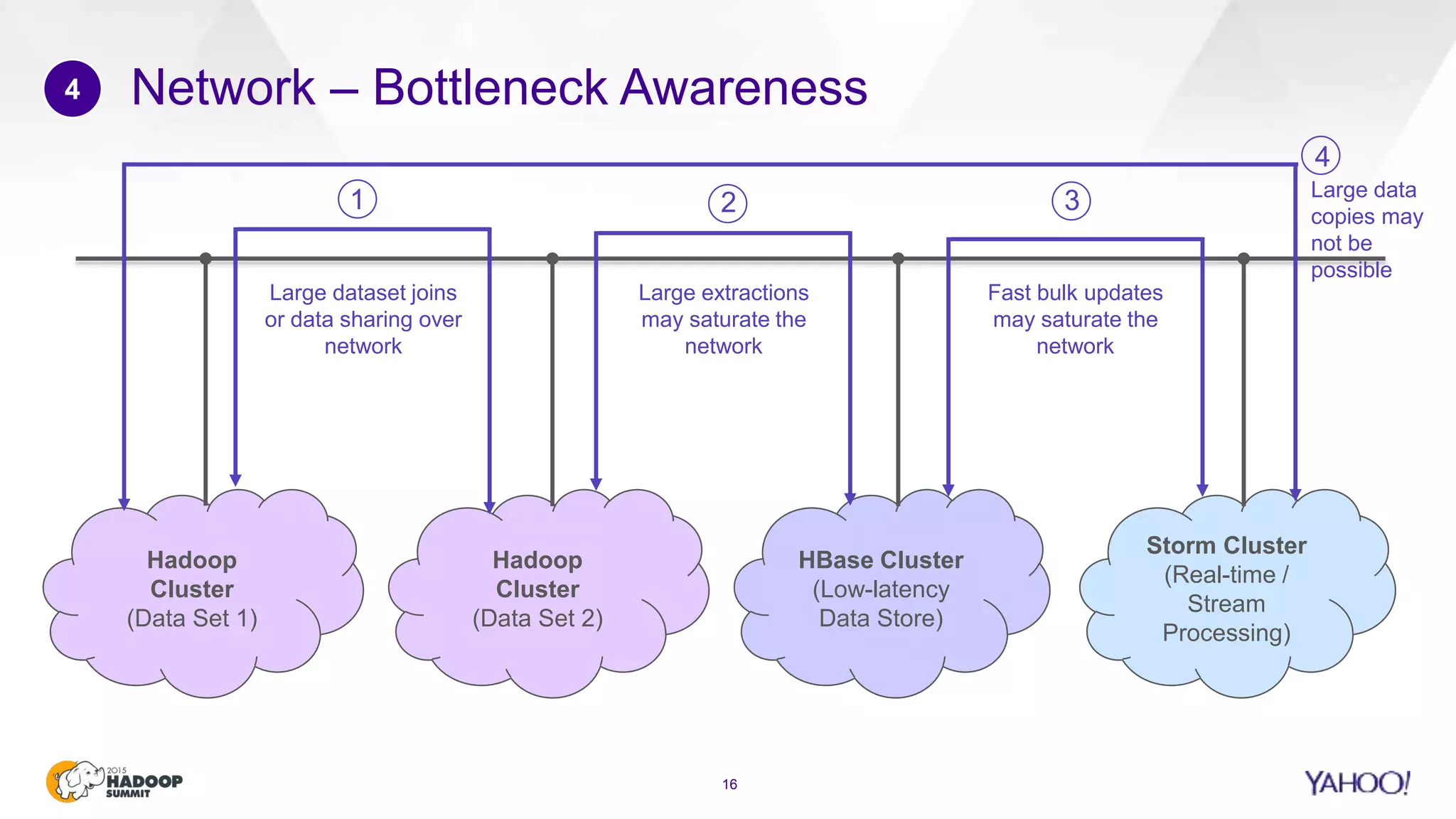

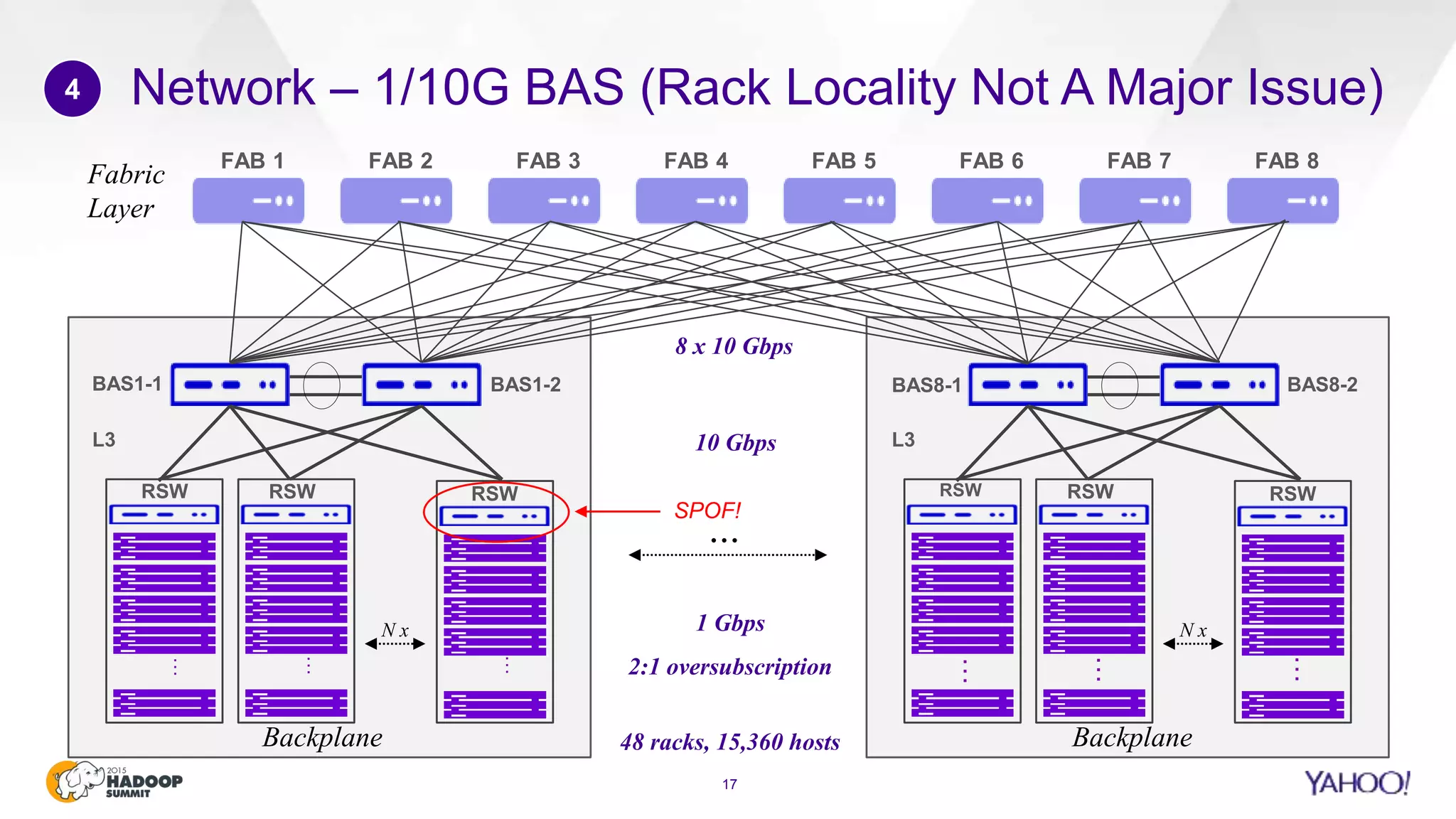

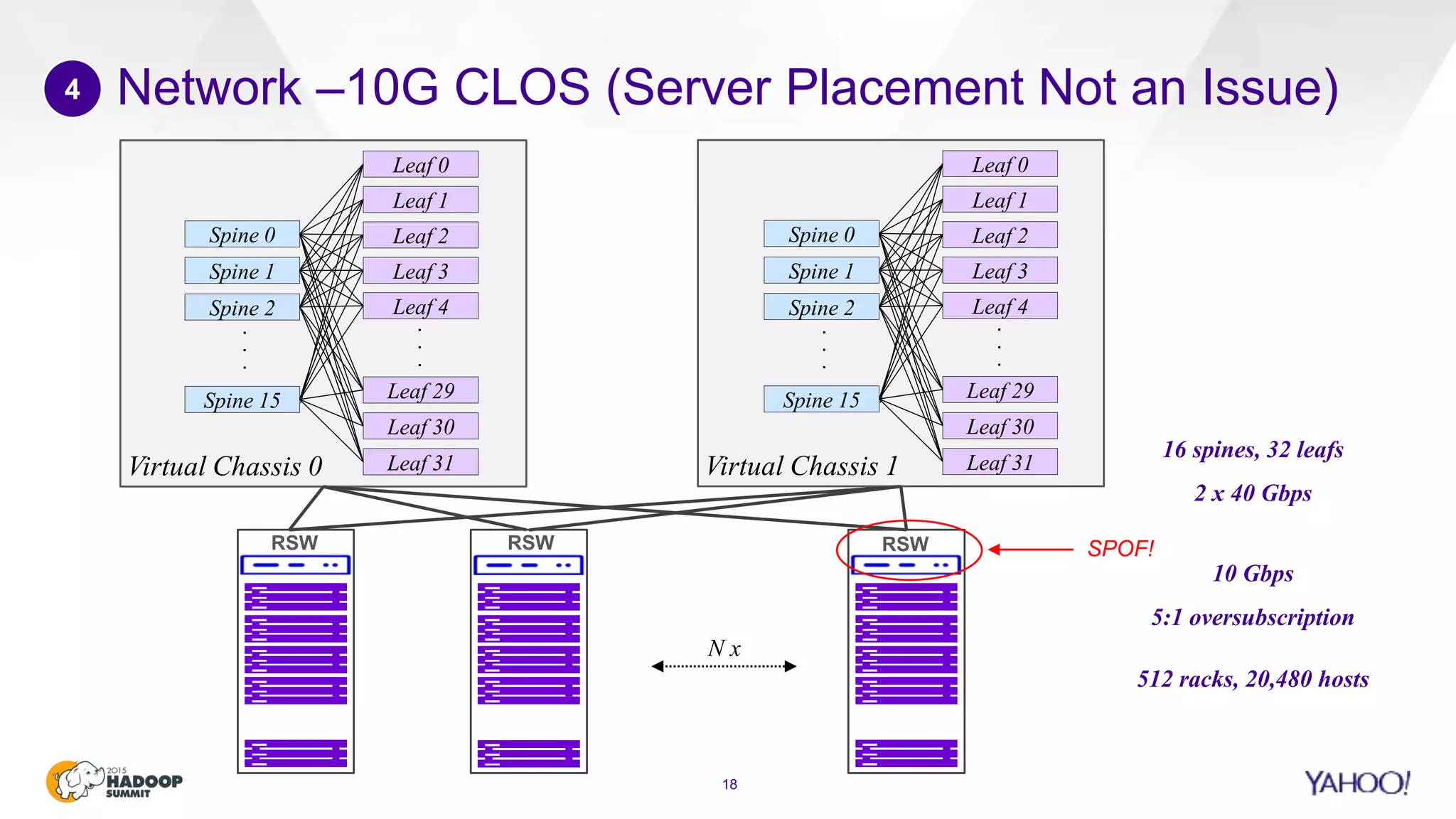

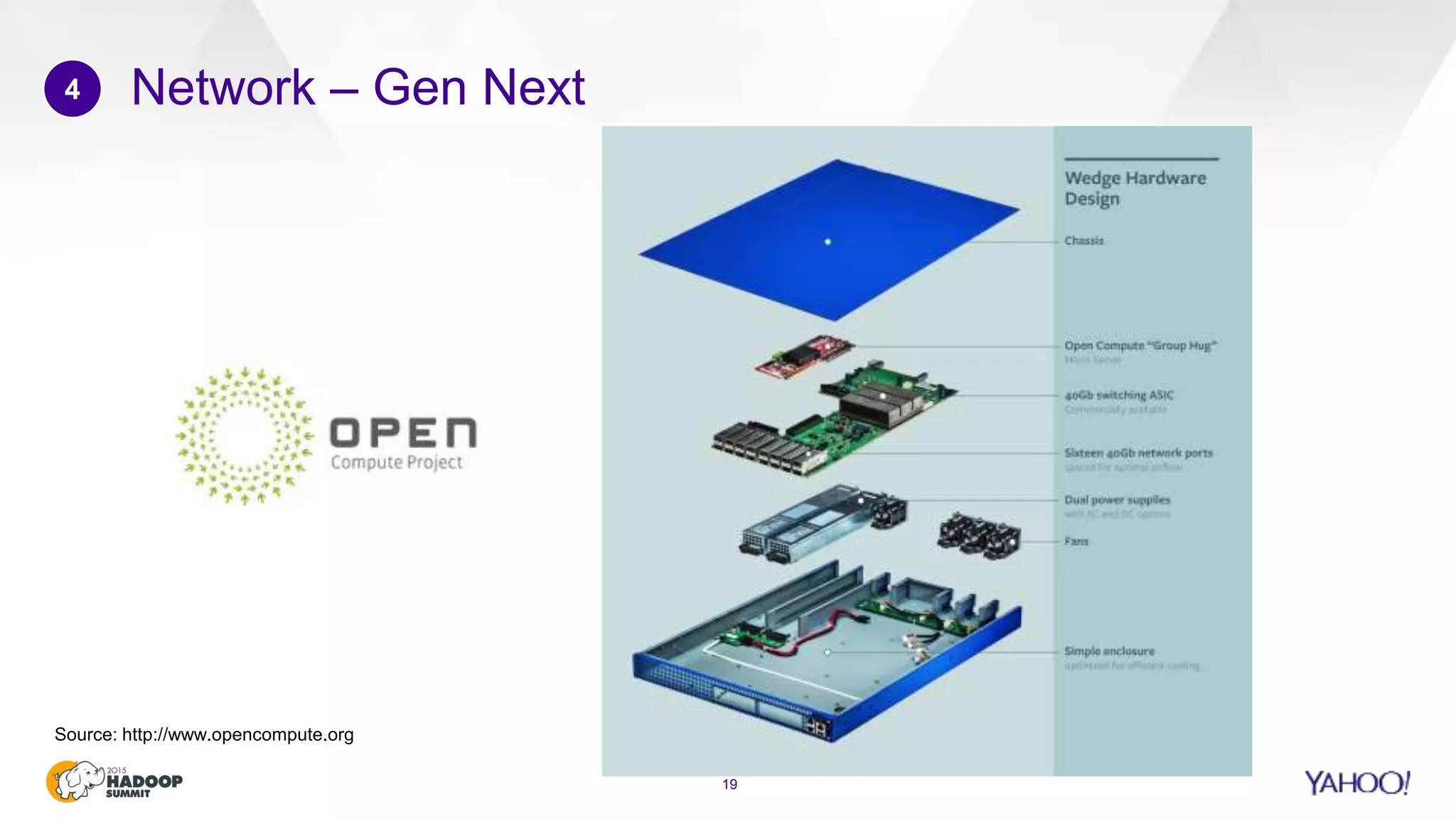

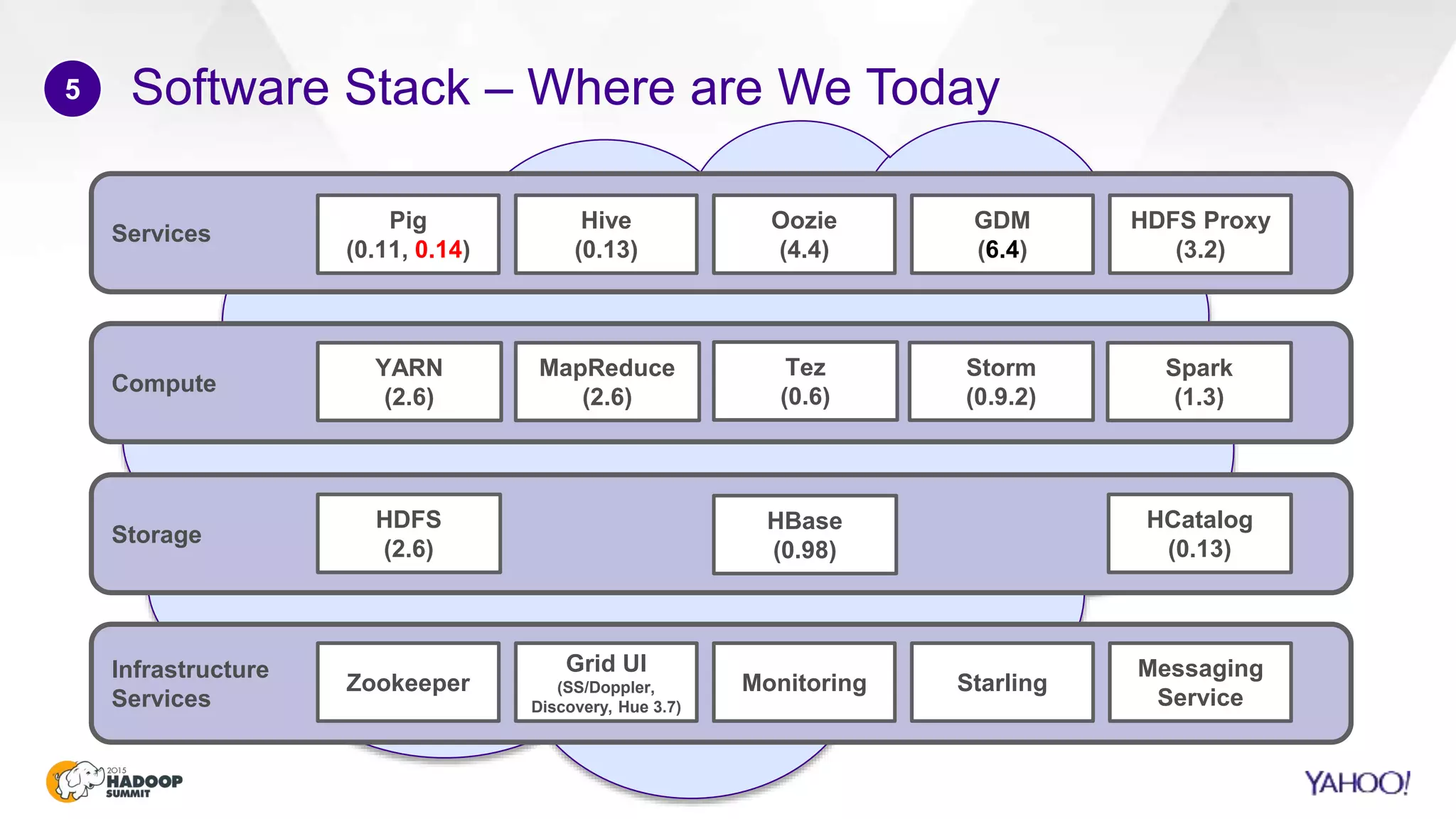

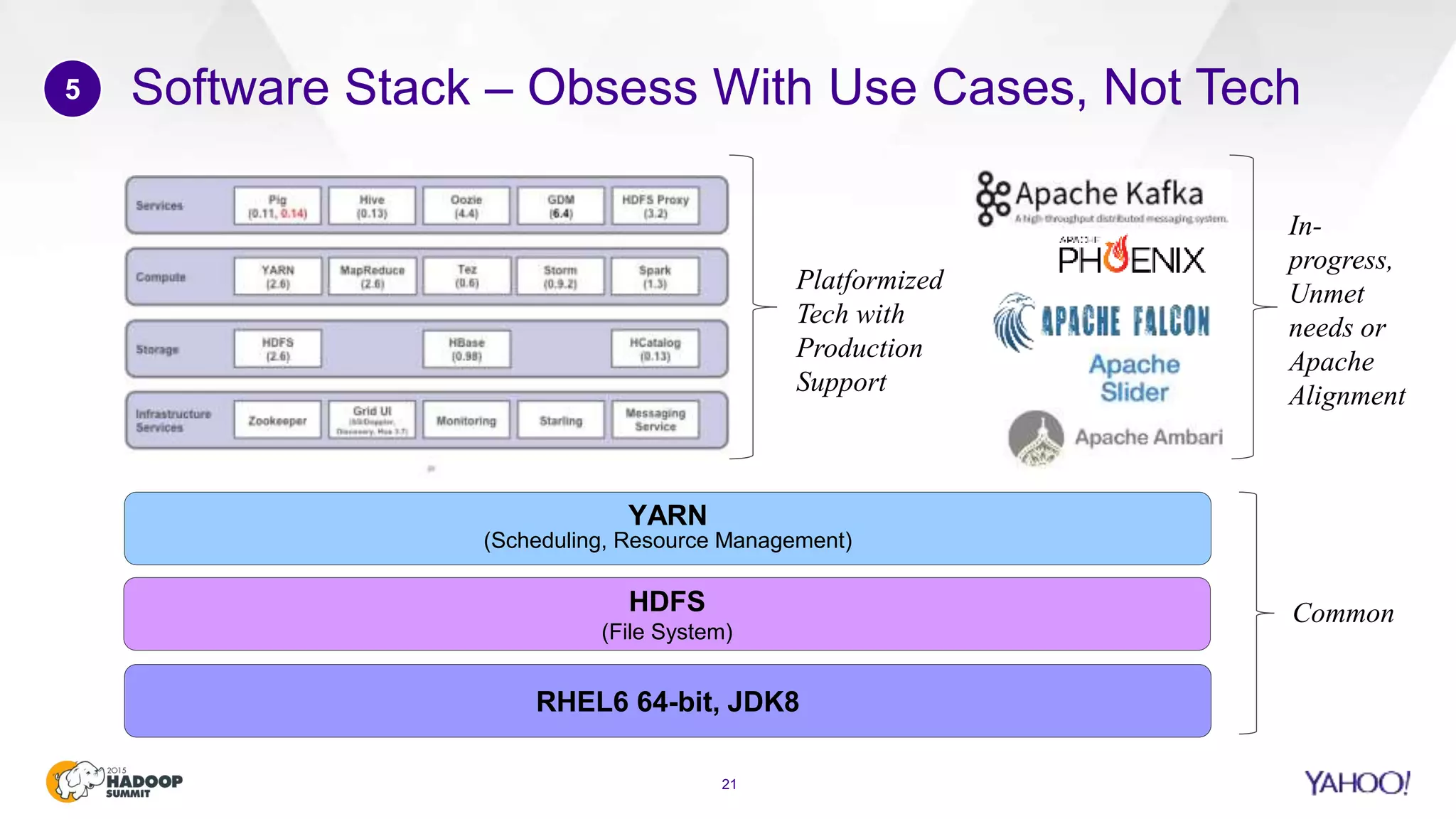

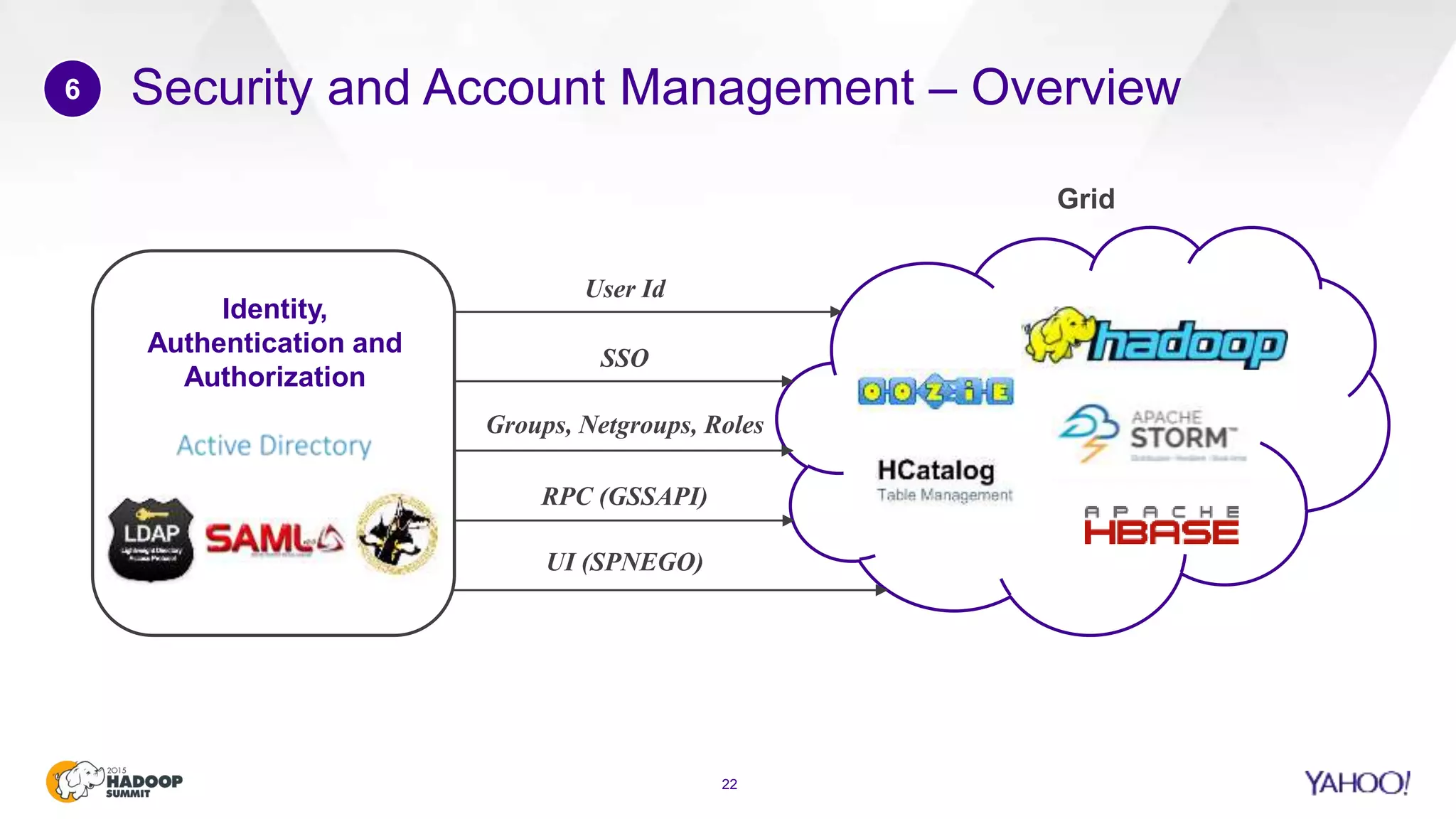

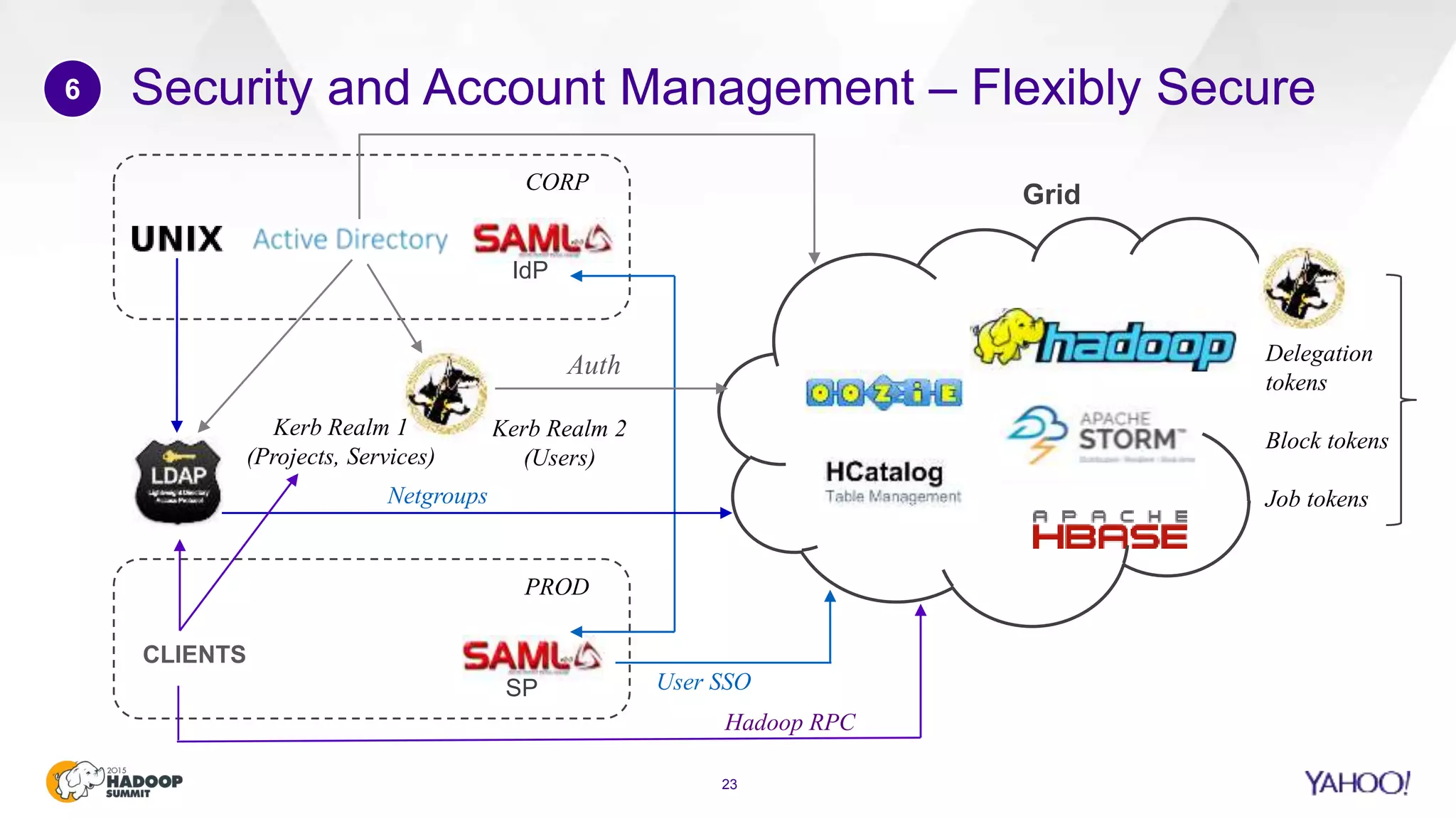

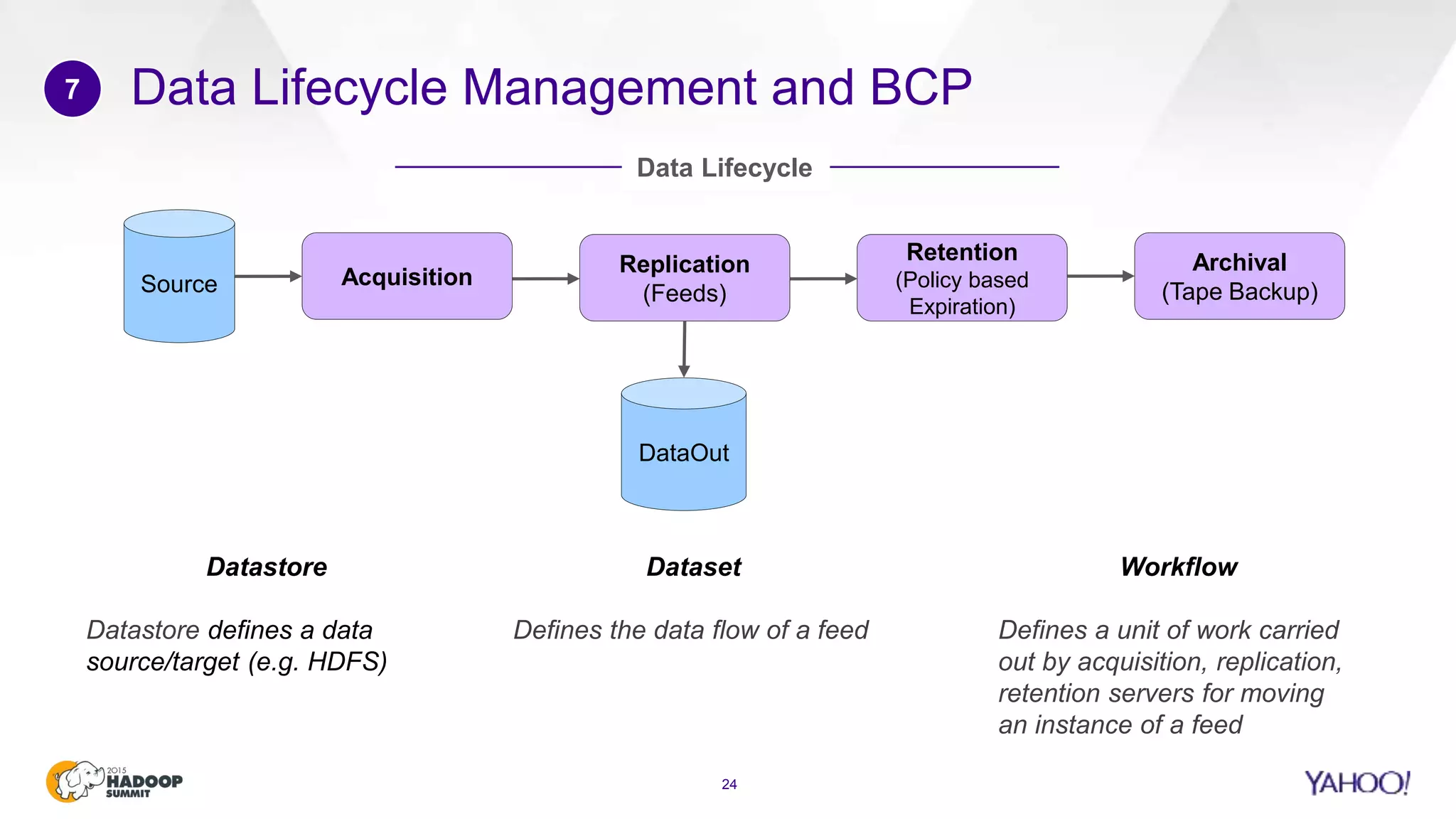

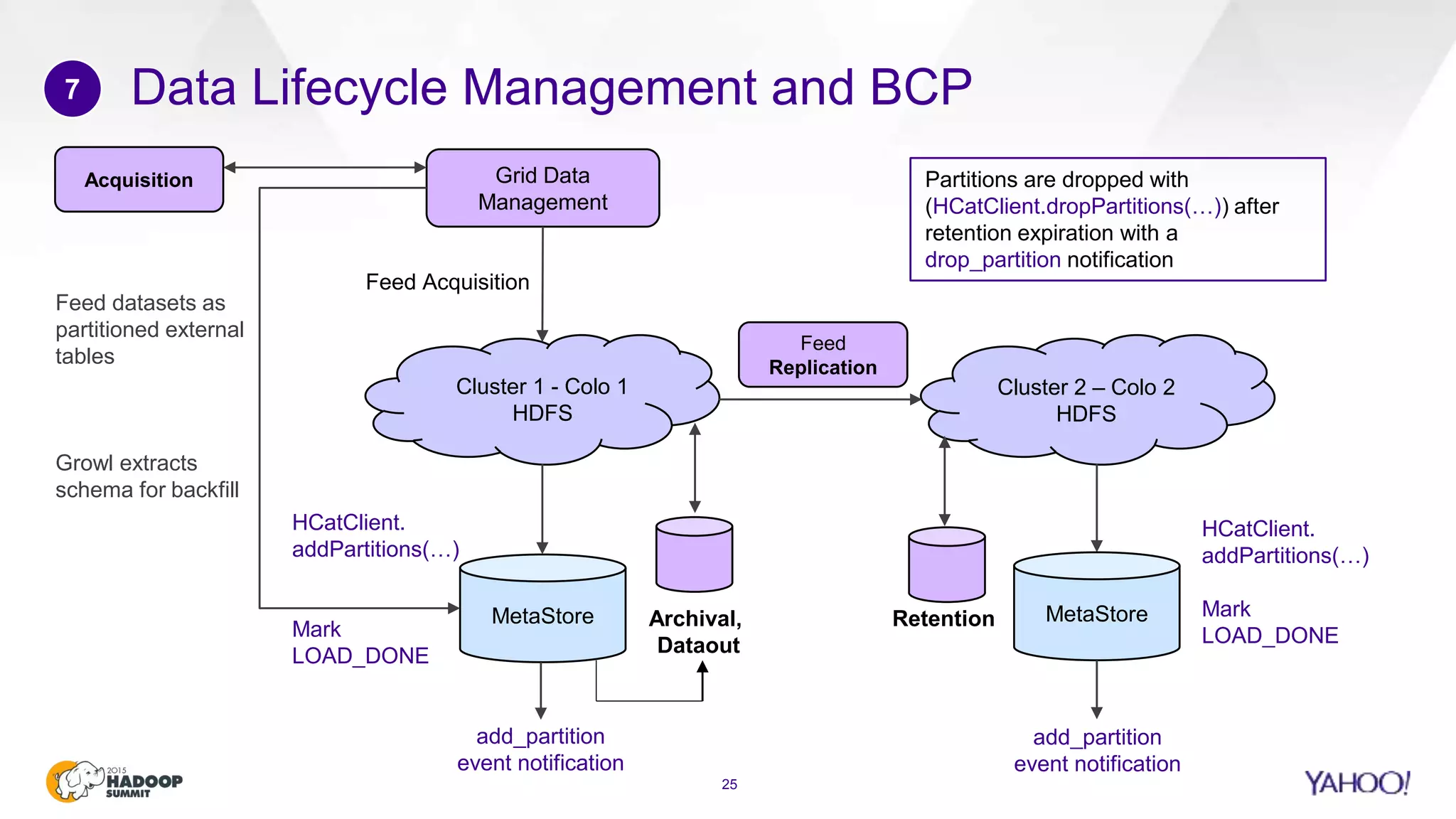

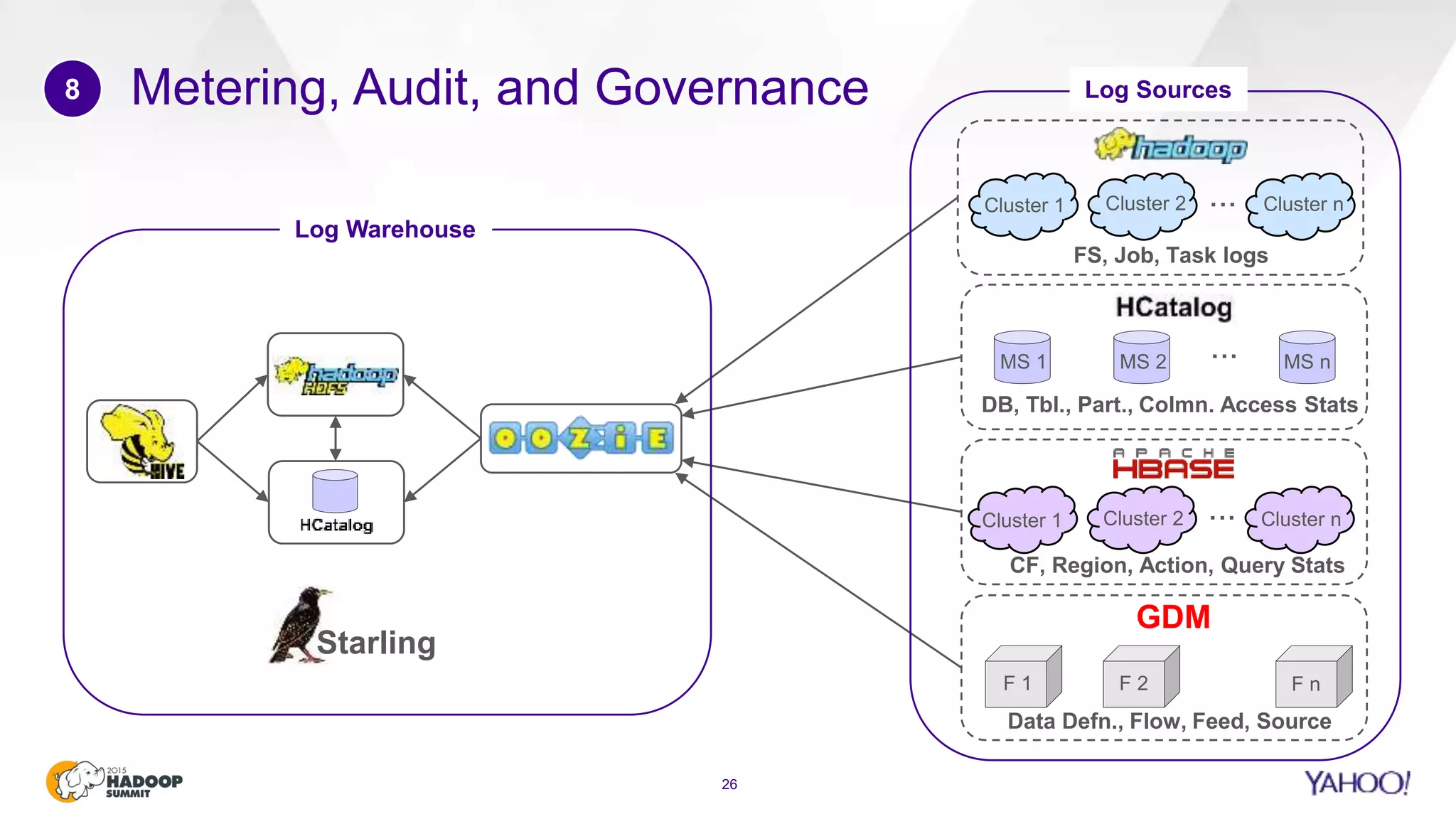

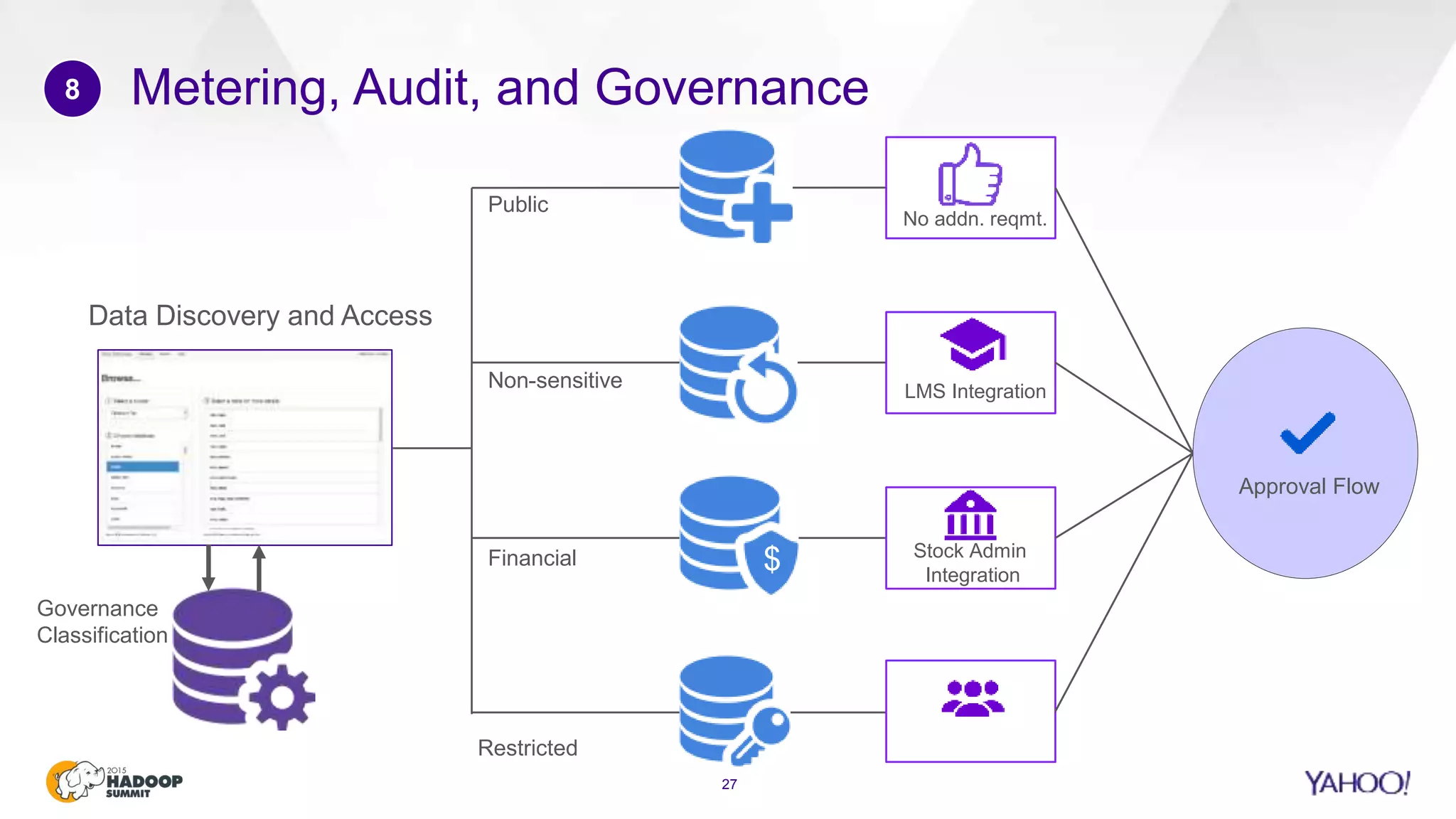

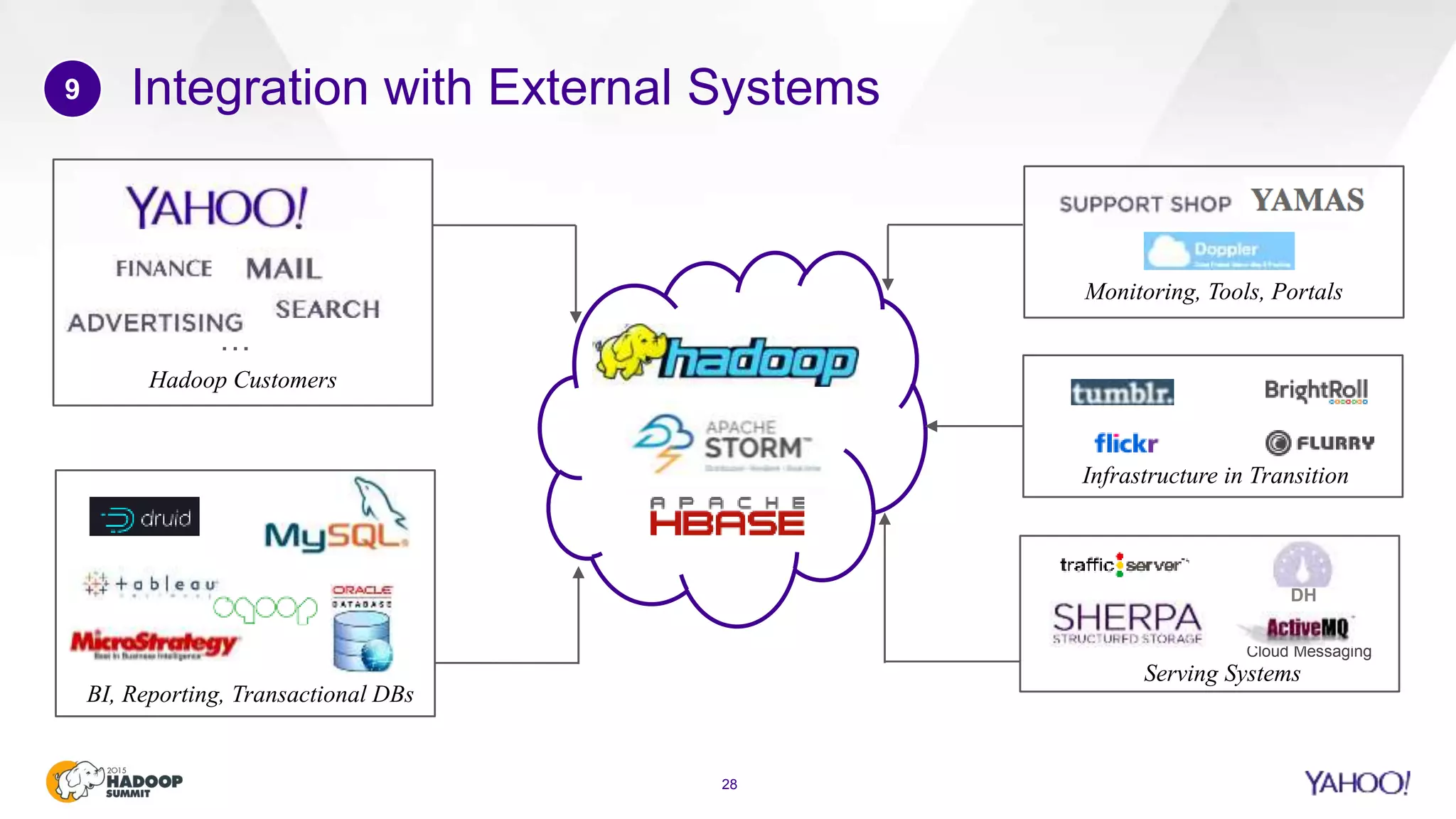

This document discusses considerations for scaling Hadoop platforms at Yahoo. It covers deployment models (on-premises vs. public cloud), total cost of ownership, hardware configuration, networking, the software stack, security, data lifecycle management, metering and governance, and common myths about running Hadoop at scale. The key takeaways are that utilization matters for cost analysis, hardware becomes increasingly heterogeneous over time, advanced networking designs are needed to avoid bottlenecks, security and access management must be flexible, and data lifecycles require policy-based management.