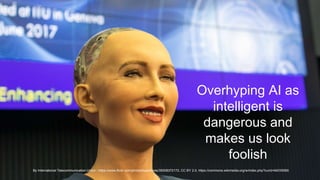

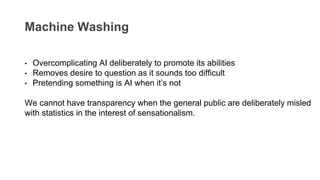

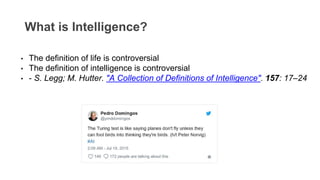

Dr. Janet Bastiman critiques the overhyped portrayal of AI as sentient and warns that such representations distort the public’s understanding of the technology. She emphasizes the need for a clear definition of artificial intelligence and for distinguishing it from artificial sentience, a concept that remains controversial and ill-defined. Although AI has advanced significantly, we are still far from achieving true artificial sentience, and the gap between that reality and the way AI is portrayed poses challenges for both ethical debate and public perception.