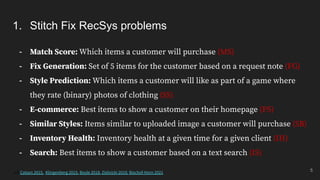

1) The document discusses several recommendation problems at Stitch Fix including match score, fix generation, style prediction, inventory health, and search. It outlines concerns with each problem including different loss functions, organizational barriers, and lack of joint training or validation.

2) It describes mistakes made such as type 1 errors from peeking, the "balkanization" of teams working independently, and humans being left out of the model evaluation process. Weak composition of models without joint training was also a challenge.

3) The document advocates for practices like global holdouts, published validation, random re-testing, and strengthening weak composition between models. It suggests institutionalizing internal task leaderboards and validation to improve experimental rigor.

![Overview

2

1. Framing some problems [Business, RecSys]

2. Some causes for concern [Case studies for 3 models]

3. Mistakes and challenges [Type 1, Org, HITL, Weak Composition]

4. Very good things [Great place to do great work]

5. Good Big Model? [One with everything]

6. Learnings [Some things to strive for]](https://image.slidesharecdn.com/recsyssummit2022-eor-220727152150-b548faf6/85/NVIDIA-RecSys-Summit-2022-EoR-2-320.jpg)