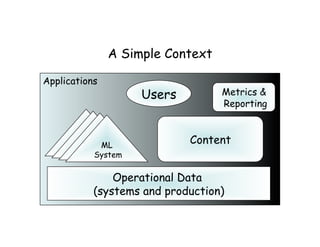

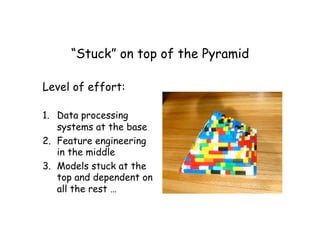

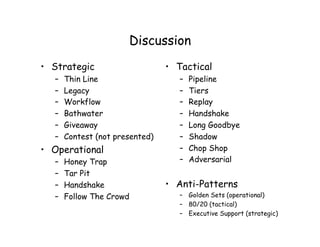

This document discusses patterns and anti-patterns for developing machine learning systems. It gives examples of strategic, tactical, and operational patterns, including developing thin systems, handling legacy systems, organizing workflows as pipelines, and using techniques such as honey traps and tar pits to test systems. It also discusses anti-patterns that can hinder development, such as over-reliance on golden sets, stopping at 80% solutions, and taking on unrealistic executive expectations. The document aims to help practitioners navigate the challenges of developing machine learning projects.