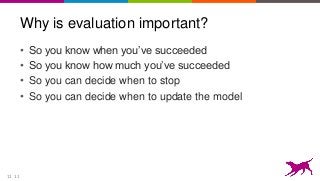

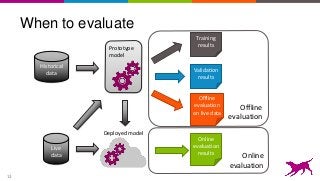

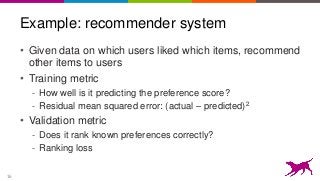

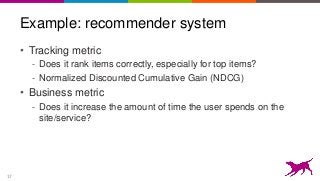

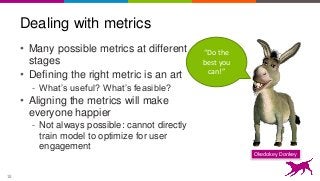

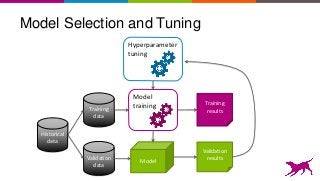

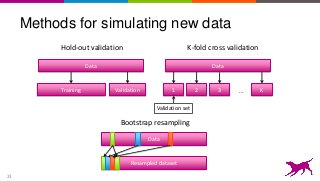

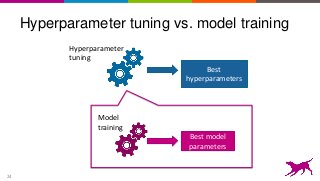

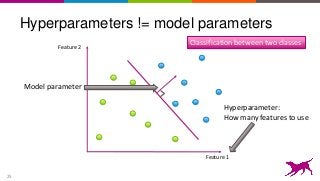

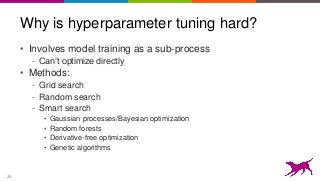

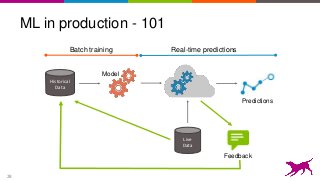

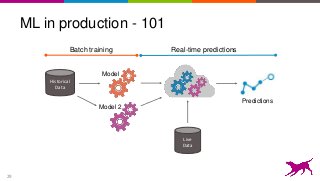

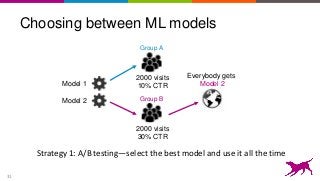

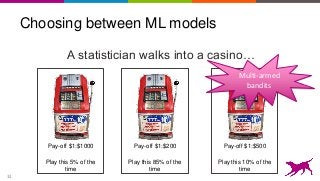

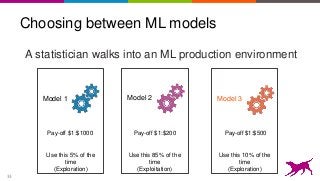

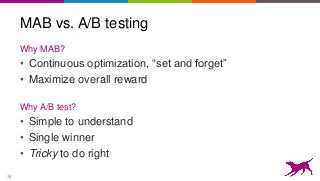

This is an overview of evaluating machine learning models: when to evaluate them, how, and with which metrics, datasets, and methods. Topics include evaluation metrics, validation, hyperparameter tuning, A/B testing, and multi-armed bandits. It summarizes my short report on the topic: http://oreil.ly/1LkP2tn.