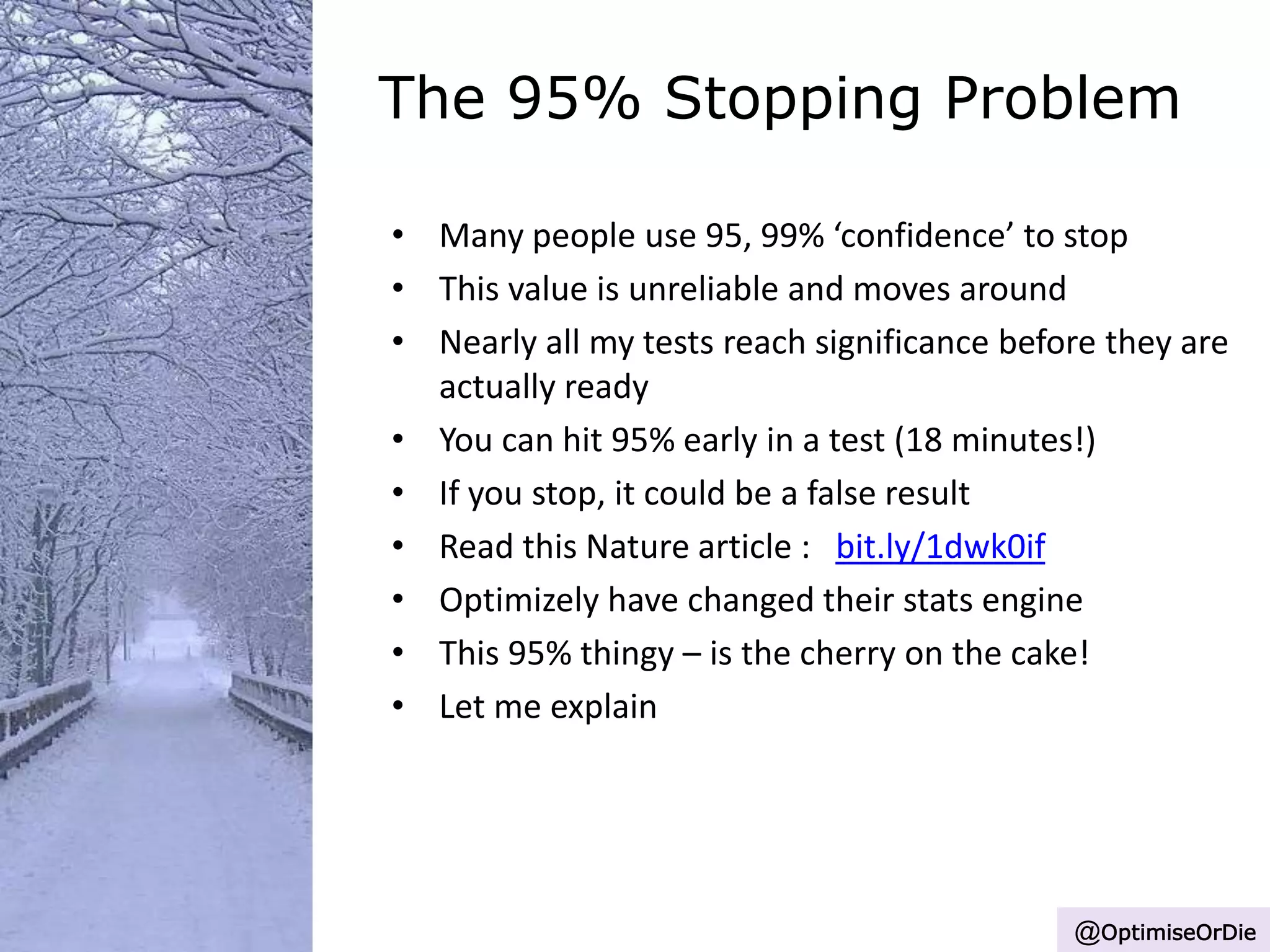

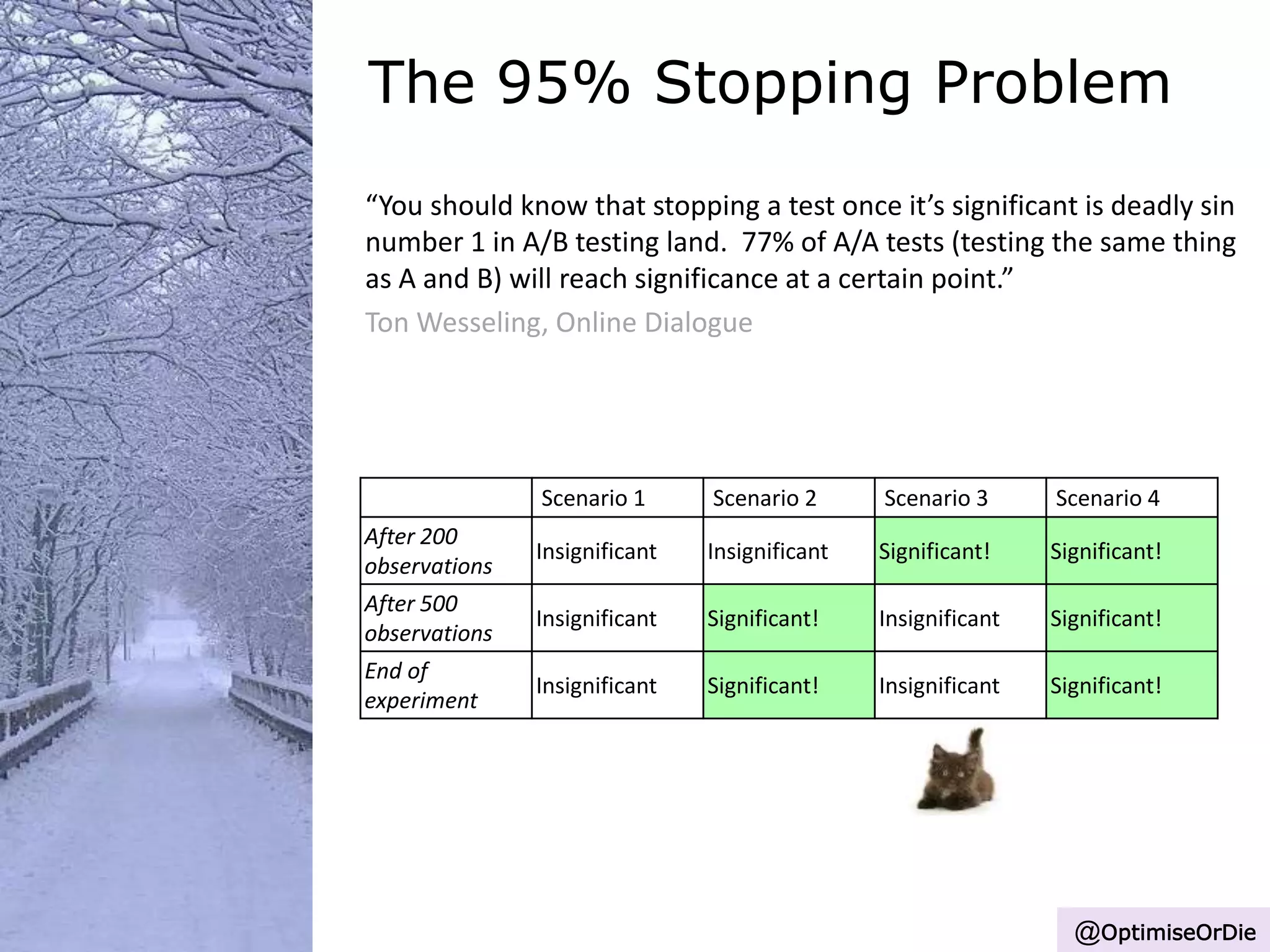

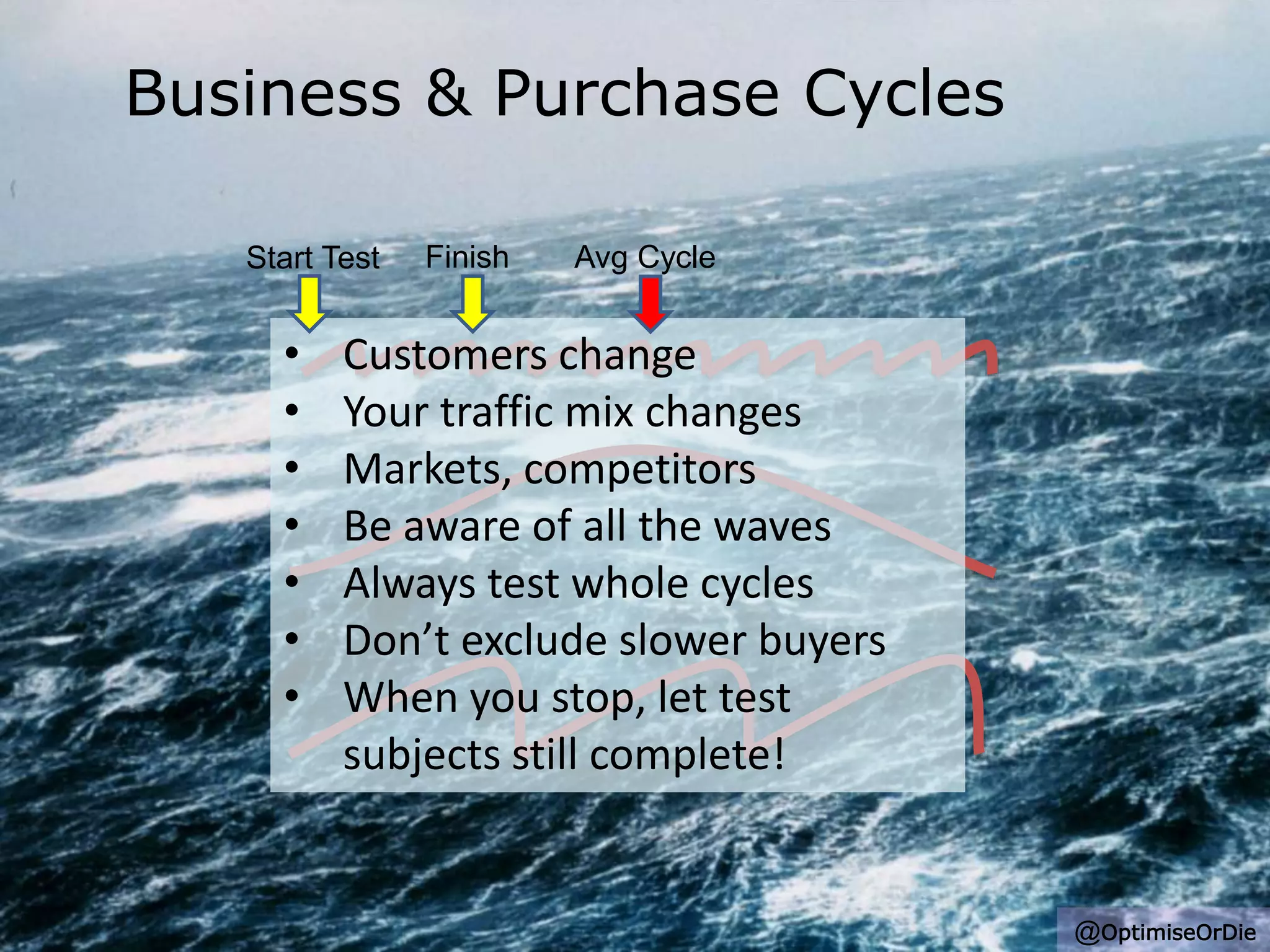

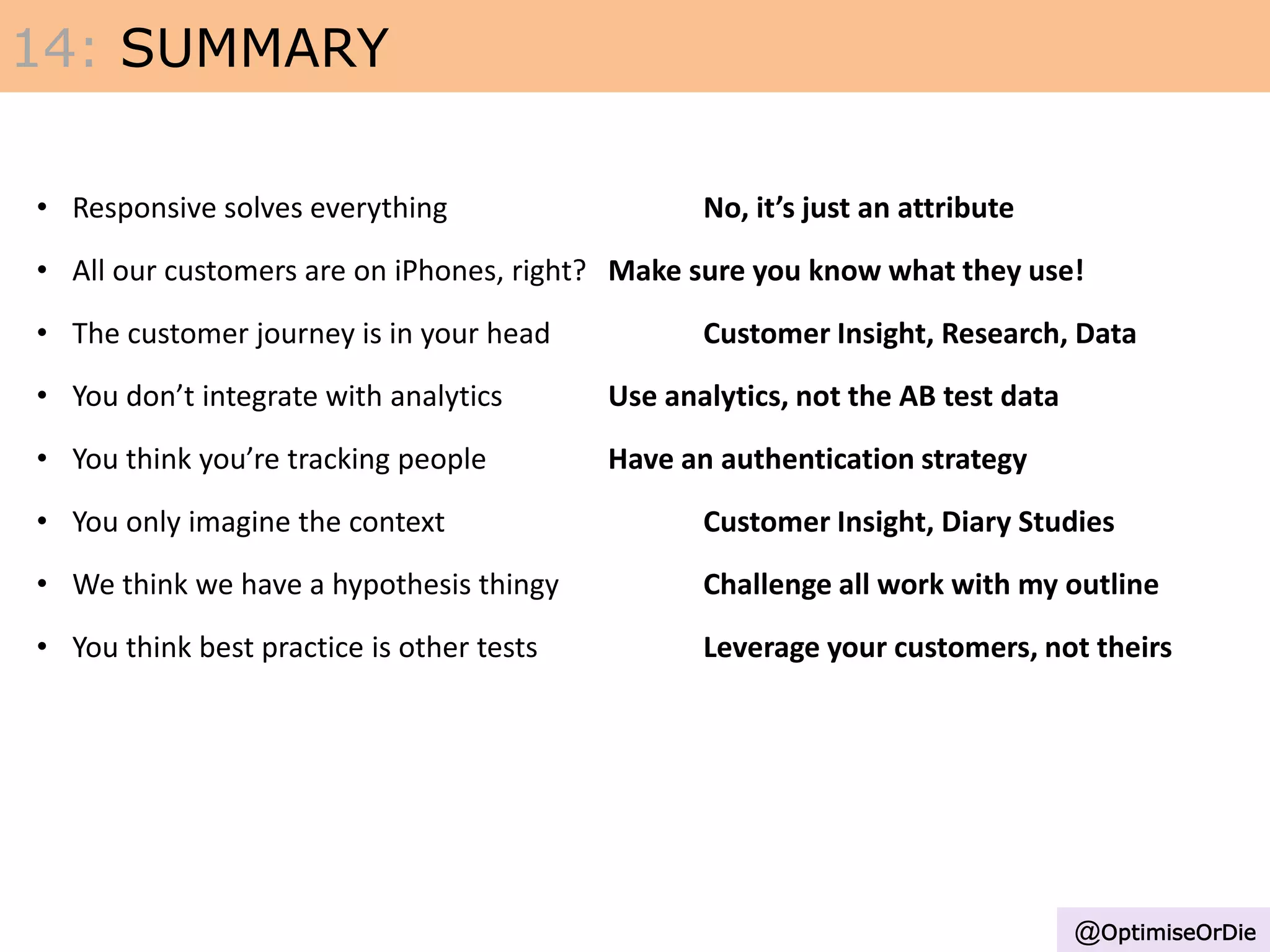

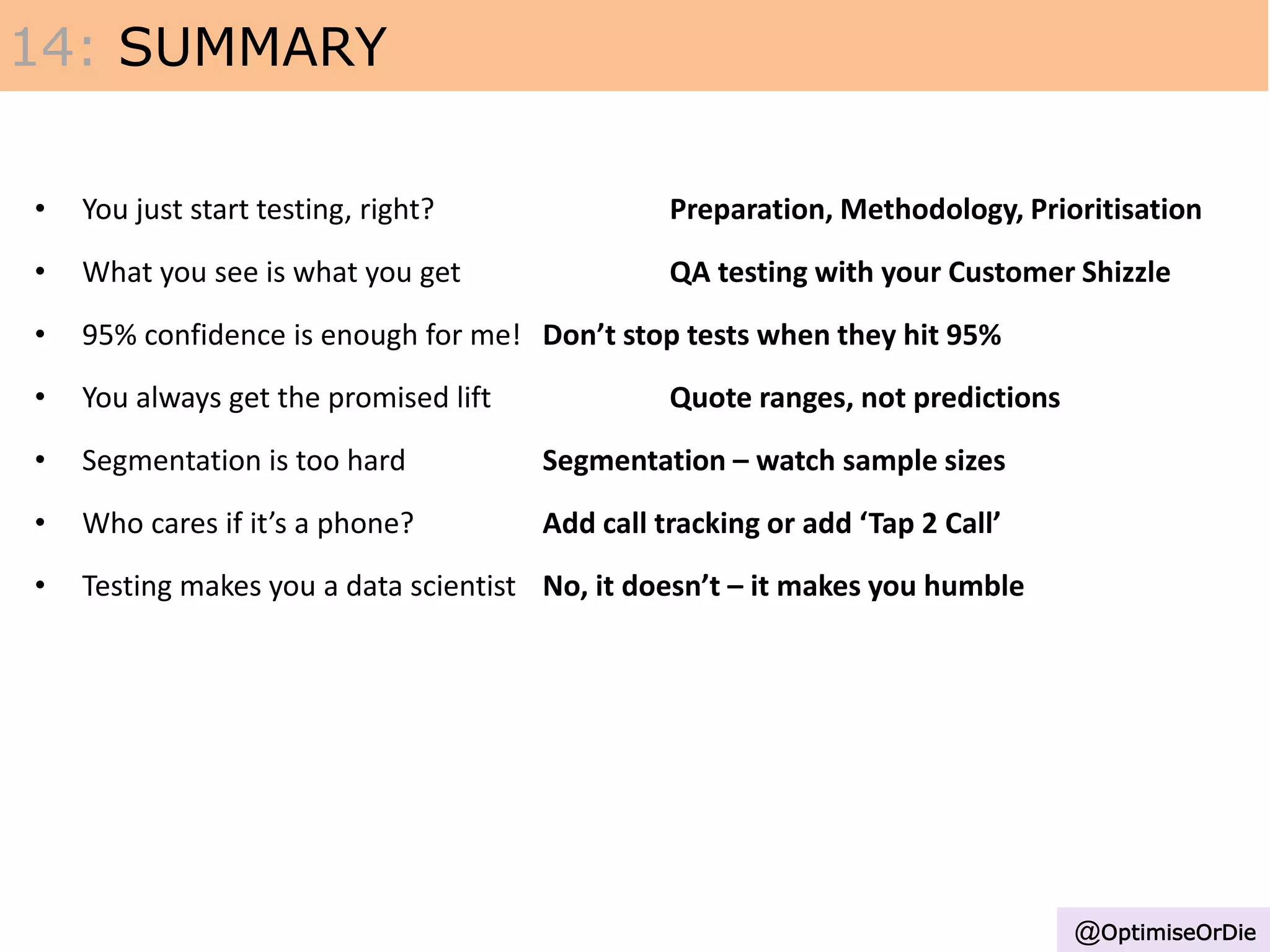

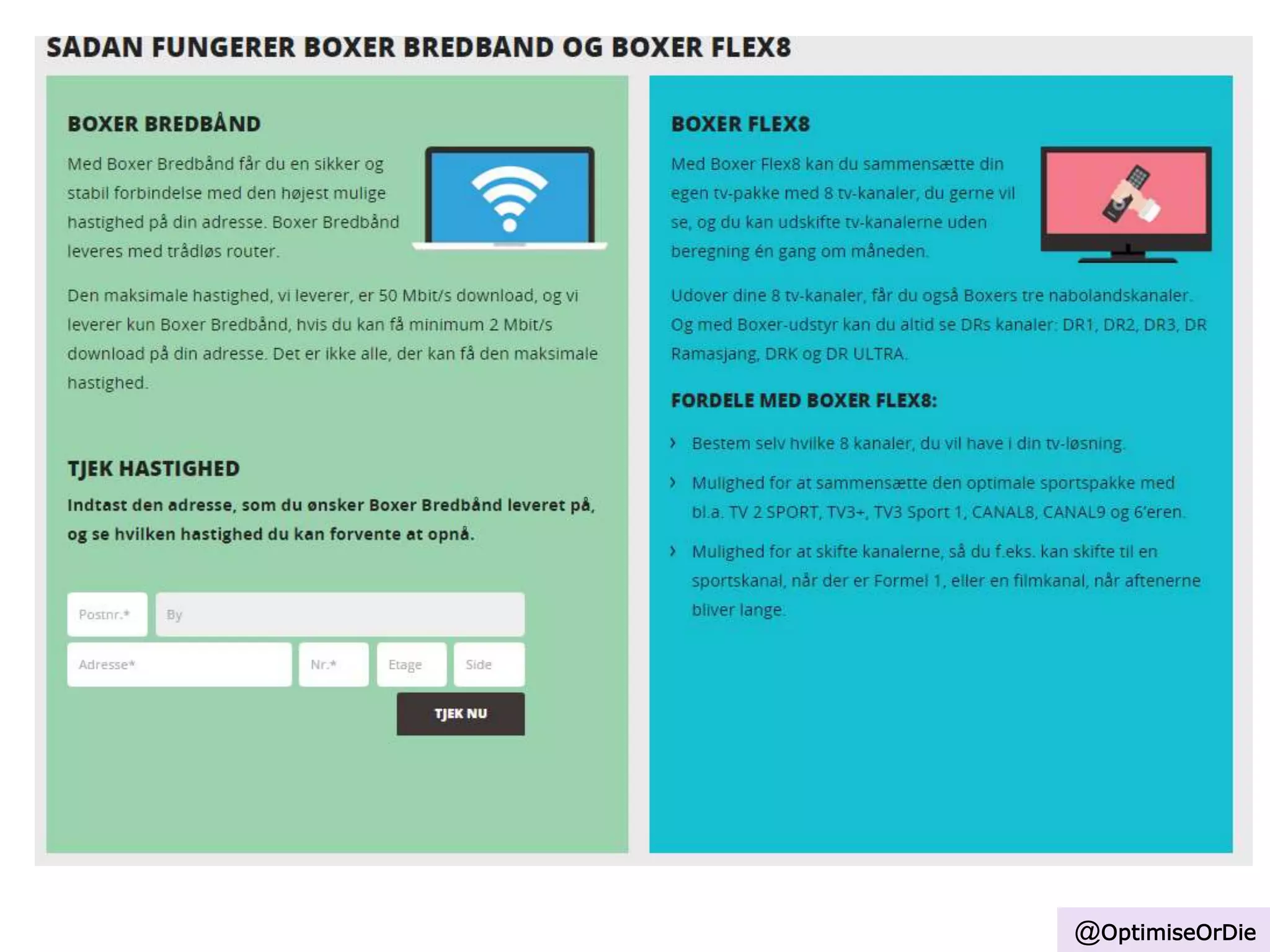

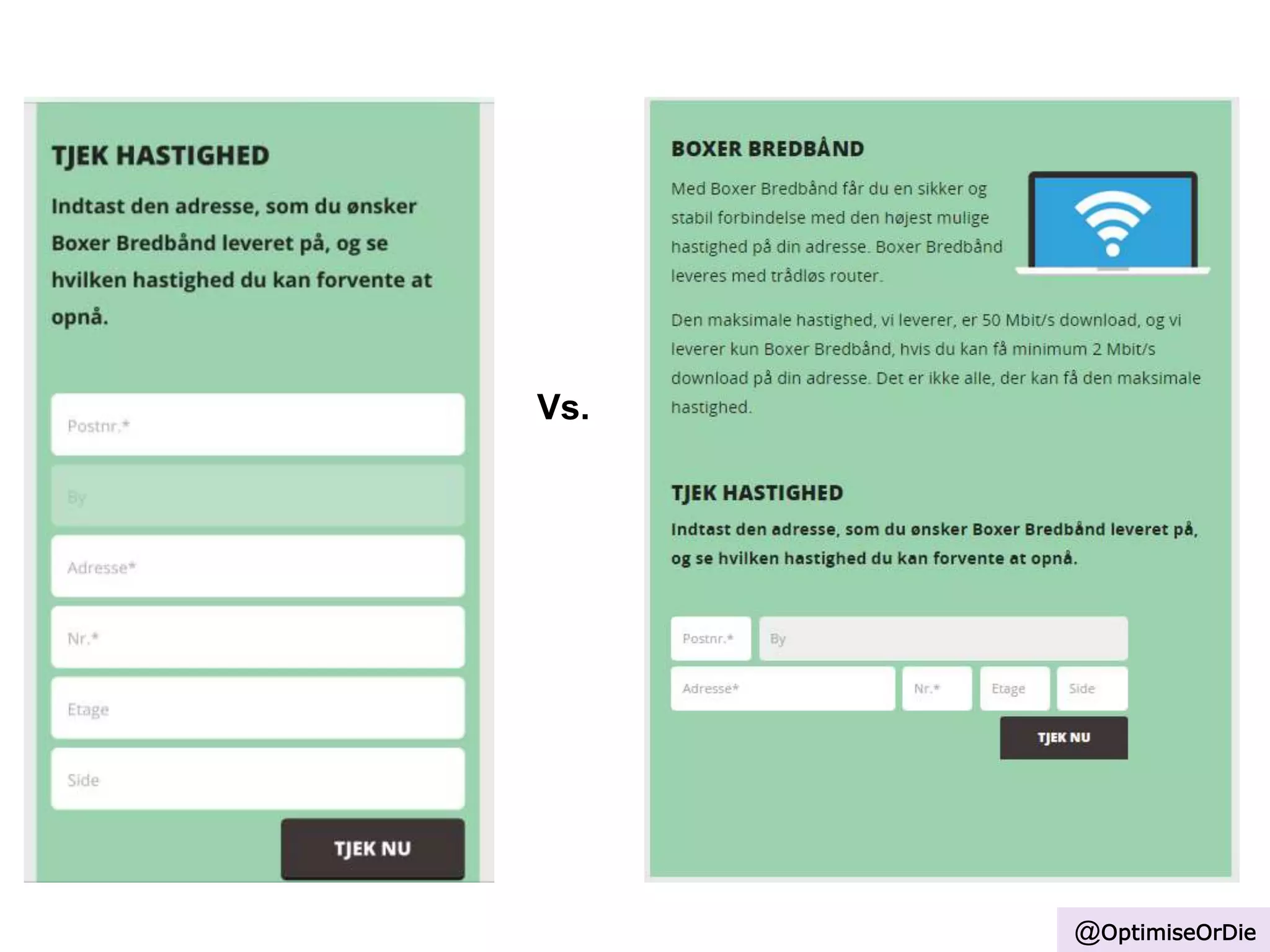

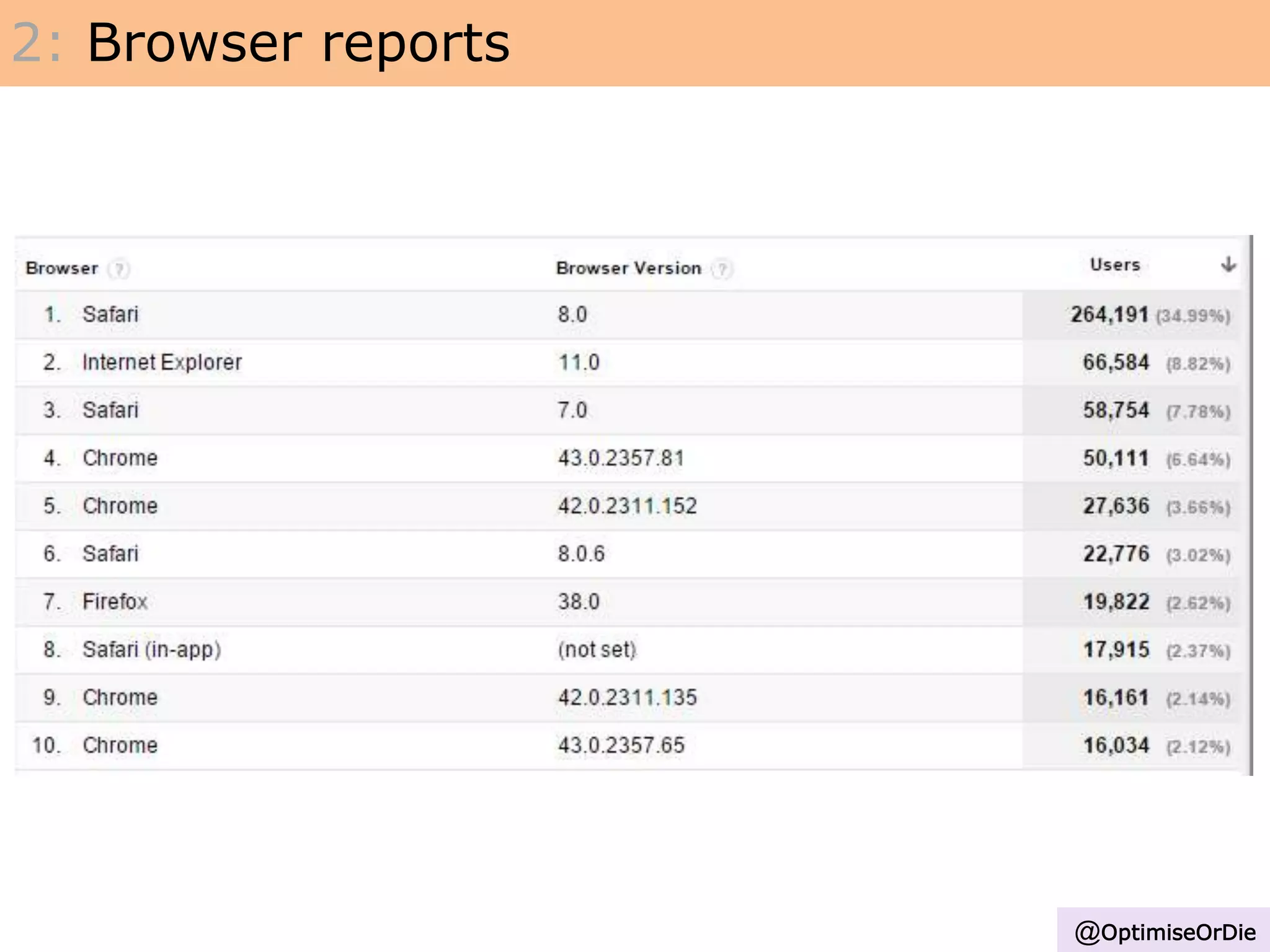

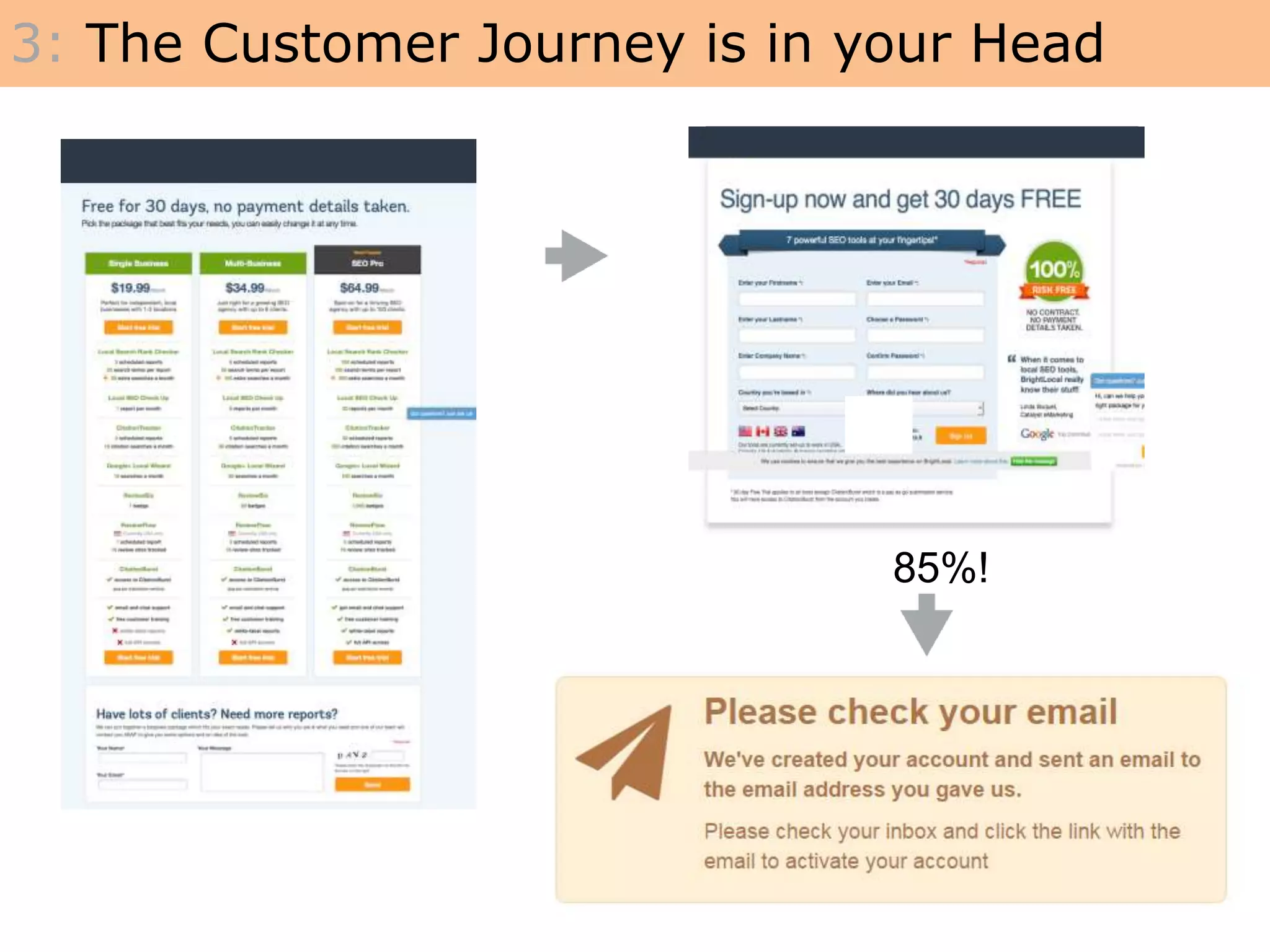

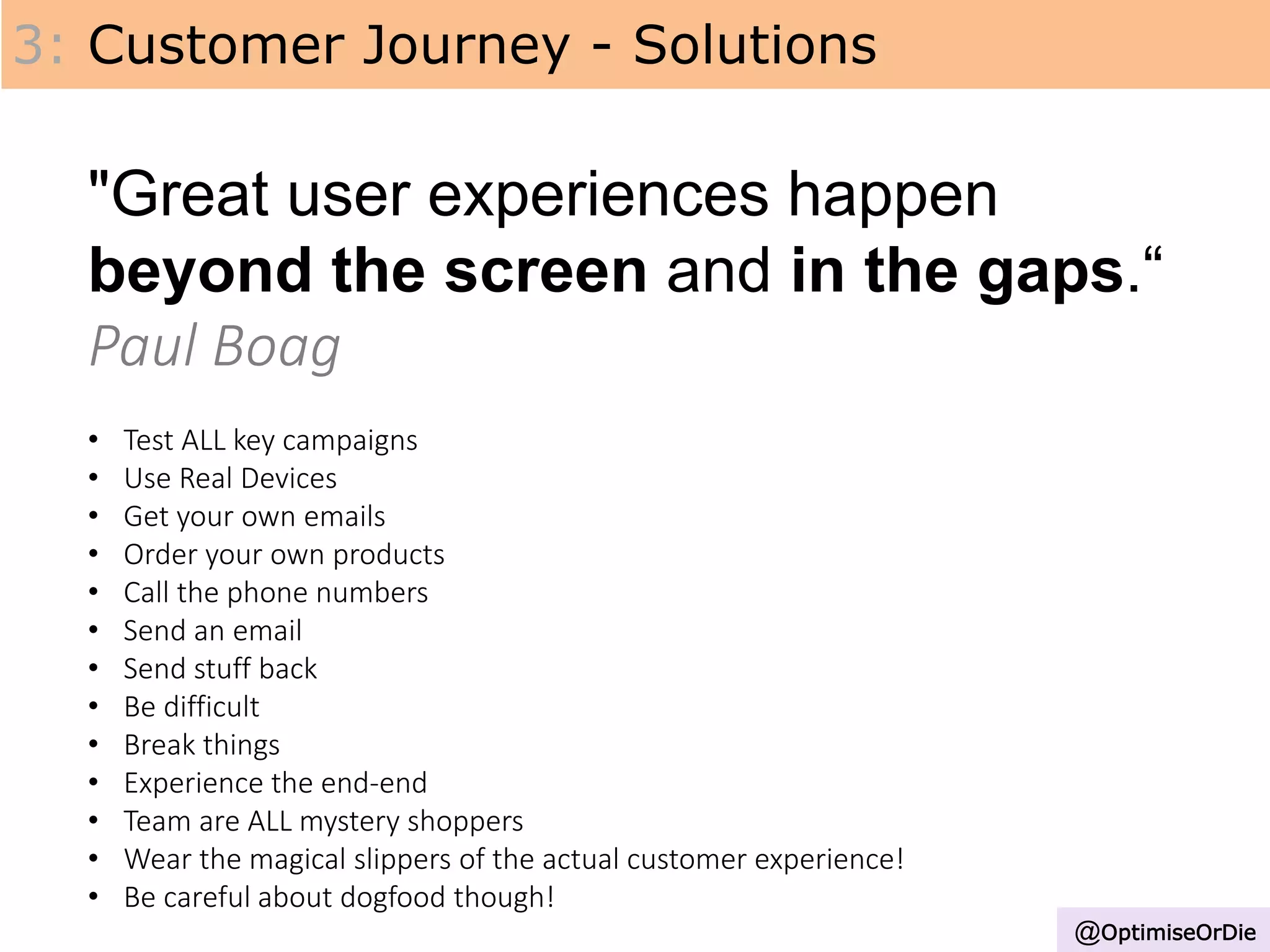

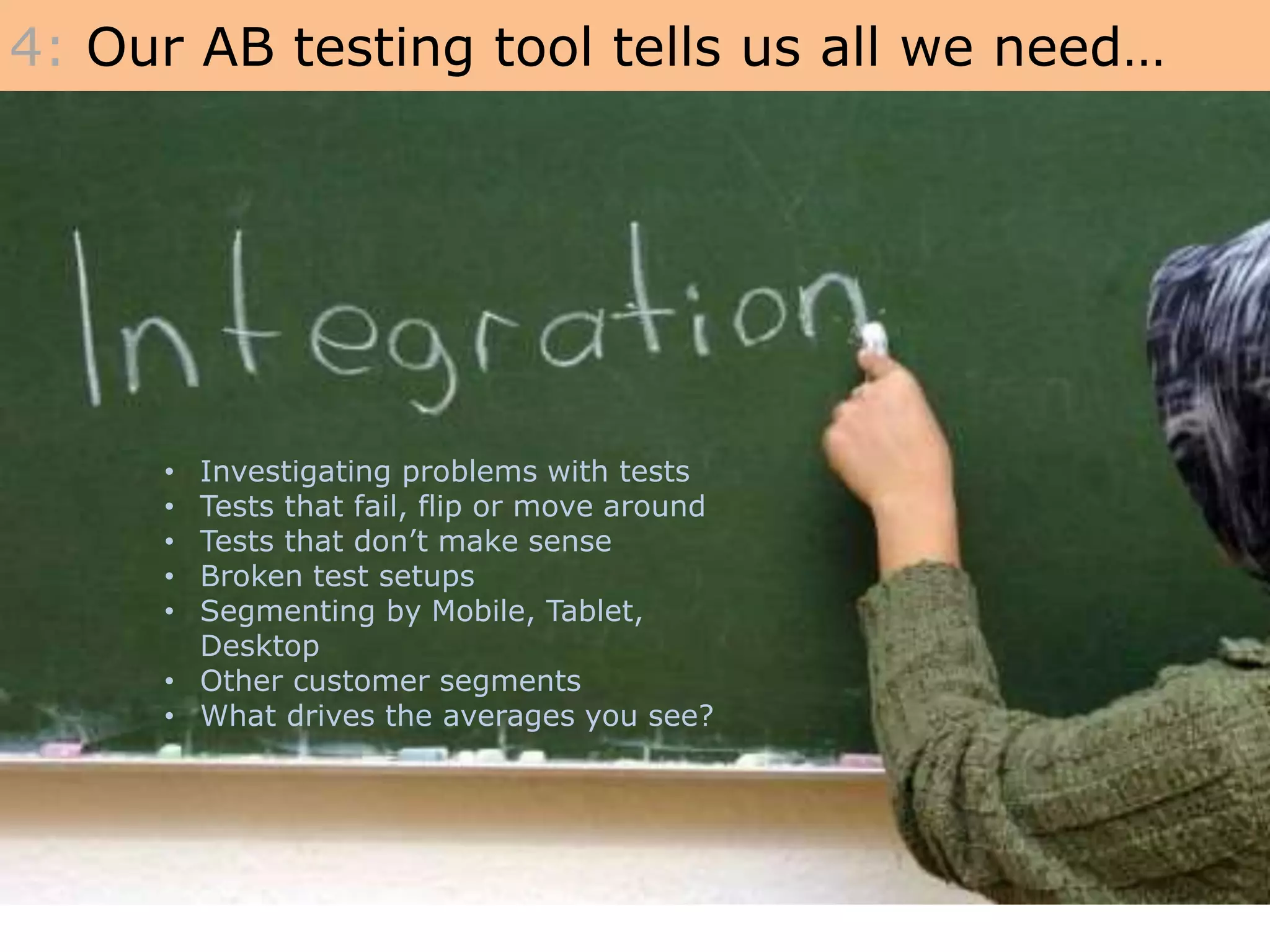

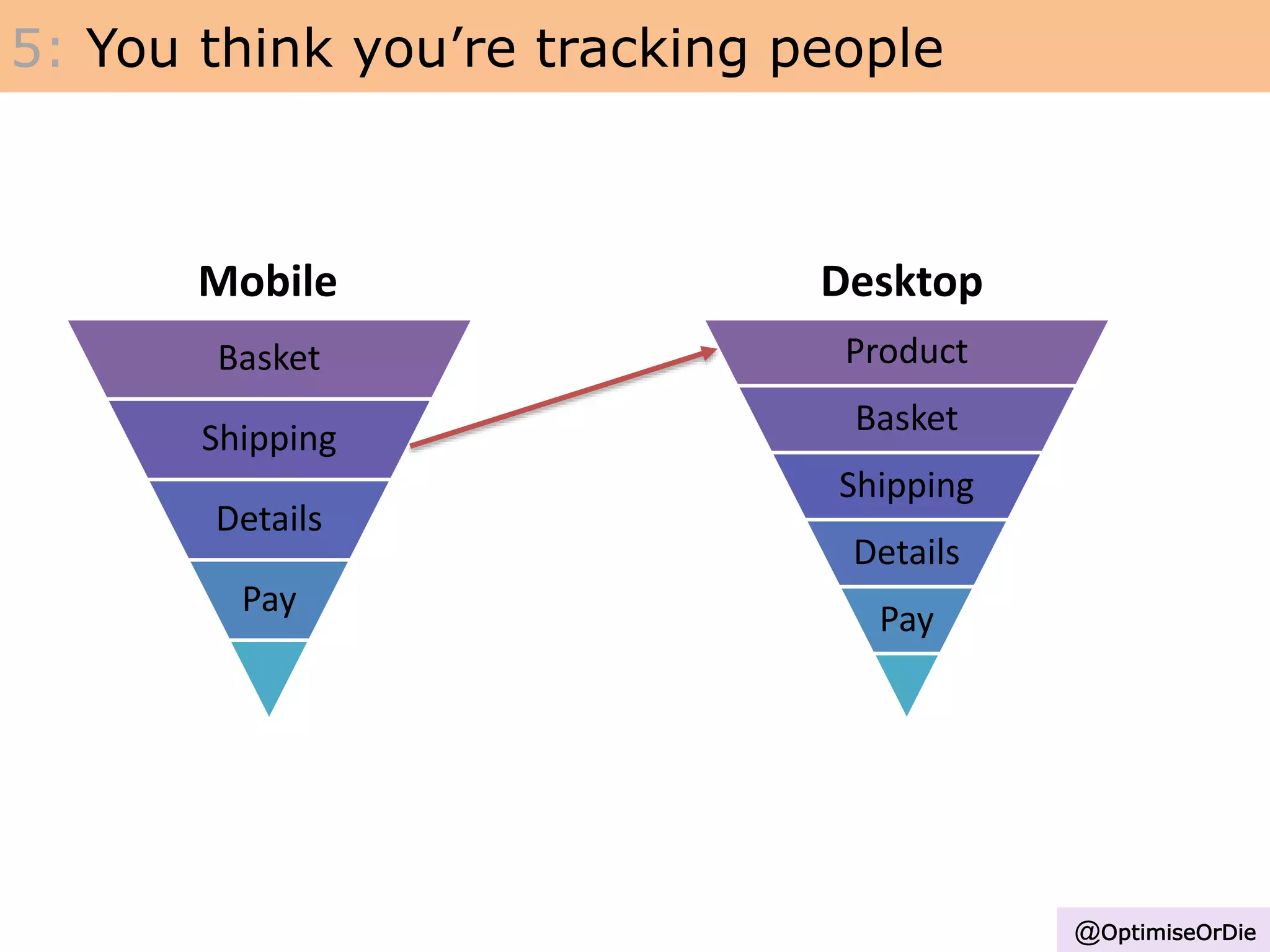

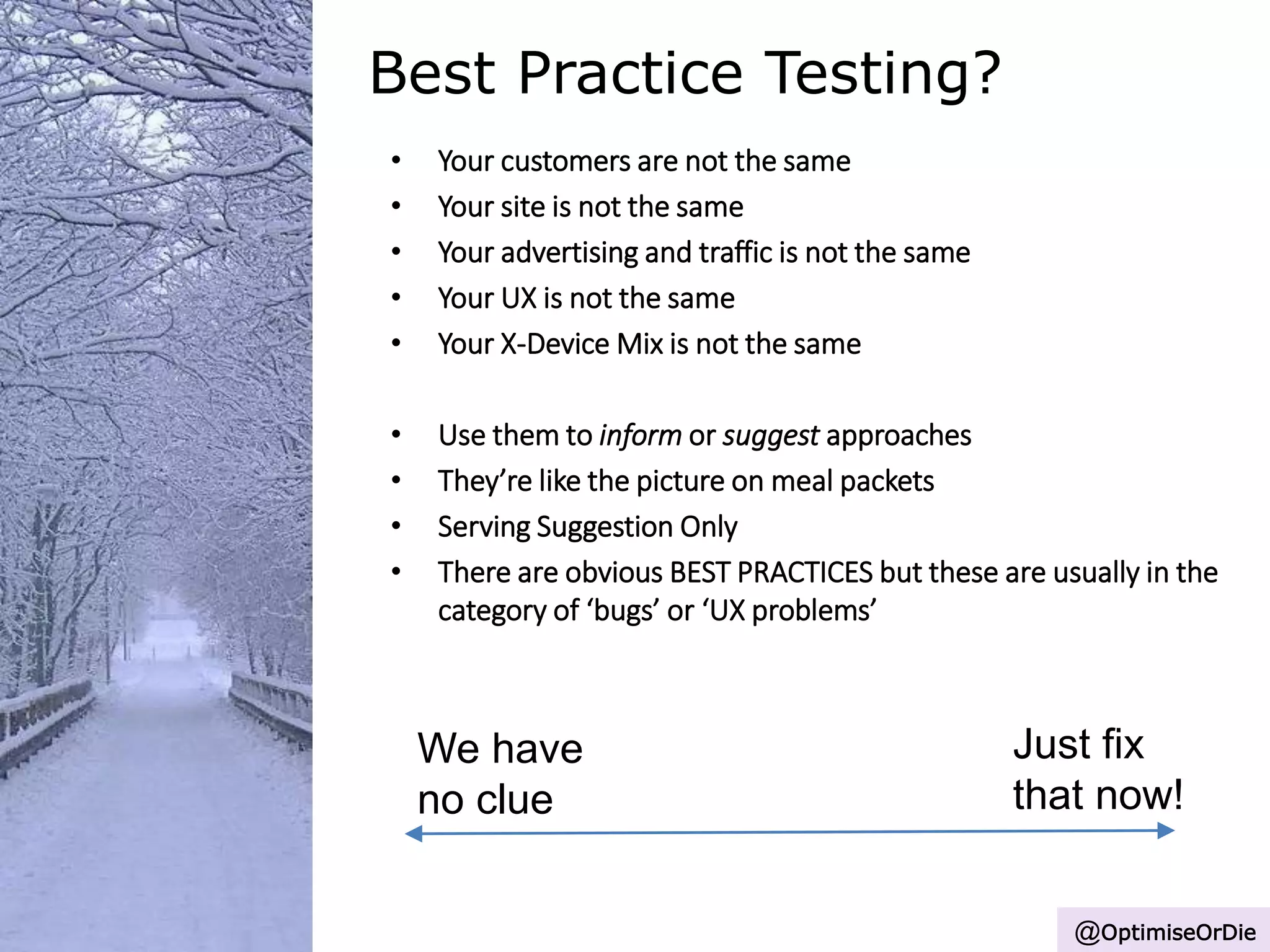

The document discusses common myths and misconceptions about cross-device testing in digital marketing, and stresses the importance of accurate analytics and of understanding real customer journeys. It highlights key mistakes made during testing, the need to test genuine user experiences, and why stopping a test as soon as it reaches a given confidence level is unreliable. Ultimately, it advocates a more thoughtful, data-driven approach to testing and optimization.
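The point about stopping a test once it hits a confidence threshold can be illustrated with a small simulation. This is a minimal sketch that is not taken from the deck. It assumes a hypothetical A/A test (both variants identical, 5% conversion rate), a pooled two-proportion z-test, and ten interim looks of 200 visitors per arm. All function names and parameters are illustrative. Because there is no real difference, every "win" is a false positive. Checking only once at the planned sample size keeps that rate near the chosen 5% alpha. Stopping at the first look where p < 0.05 inflates it considerably.

```python
import math
import random


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no conversions at all: no evidence of a difference
    z = (conv_a / n_a - conv_b / n_b) / se
    # Standard normal CDF via erf, doubled for a two-sided tail probability.
    return 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))


def run_aa_test(rng, rate, looks, batch, alpha, peek):
    """Simulate one hypothetical A/A test; return True if it declares a winner."""
    conv_a = conv_b = n = 0
    for _ in range(looks):
        for _ in range(batch):
            conv_a += rng.random() < rate
            conv_b += rng.random() < rate
        n += batch
        # "Peeking": stop as soon as any interim look crosses the threshold.
        if peek and two_proportion_p_value(conv_a, n, conv_b, n) < alpha:
            return True
    # Fixed horizon: a single check at the planned sample size.
    return two_proportion_p_value(conv_a, n, conv_b, n) < alpha


def false_positive_rate(peek, sims=400, rate=0.05, looks=10, batch=200,
                        alpha=0.05, seed=1):
    """Share of simulated A/A tests that (wrongly) report a significant result."""
    rng = random.Random(seed)
    wins = sum(run_aa_test(rng, rate, looks, batch, alpha, peek)
               for _ in range(sims))
    return wins / sims


if __name__ == "__main__":
    print("fixed horizon:", false_positive_rate(peek=False))
    print("stop at first p < 0.05:", false_positive_rate(peek=True))
```

The exact numbers depend on the seed and on the assumed parameters. The general pattern is that more interim looks lead to a higher false-positive rate when tests are stopped on the first "significant" reading.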

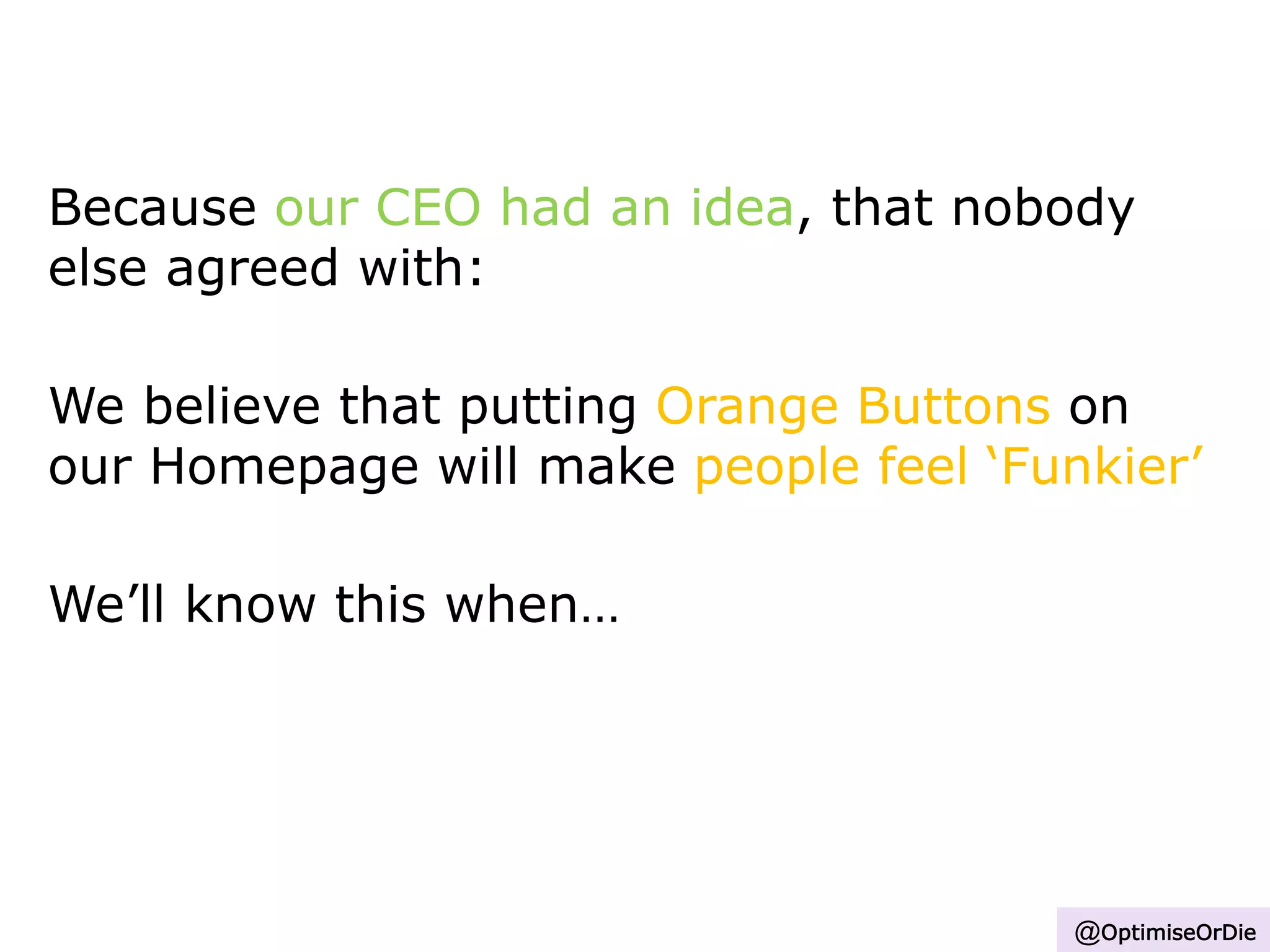

![Hypothesis template slide (@OptimiseOrDie)](https://image.slidesharecdn.com/mythsliesandillusionsofcrossdevicetesting-elitecamp-30june2015-v3-150613123525-lva1-app6892/75/Myths-and-Illusions-of-Cross-Device-Testing-Elite-Camp-June-2015-43-2048.jpg)

> Because we observed data [A] and feedback [B], we believe that doing [C] for people [D] will make outcome [E] happen. We’ll know this when we observe data [F] and obtain feedback [G].
>
> (Reverse this)