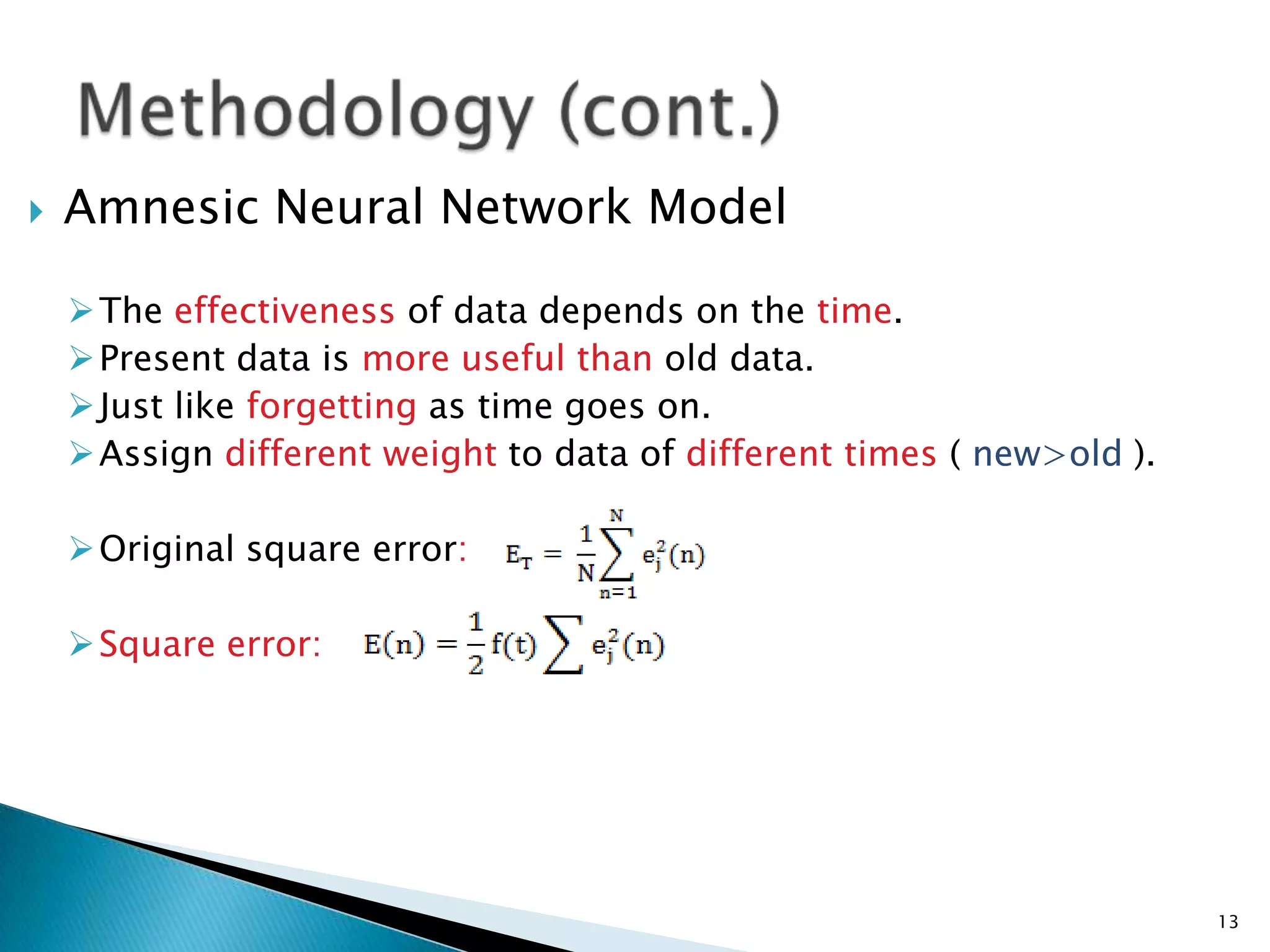

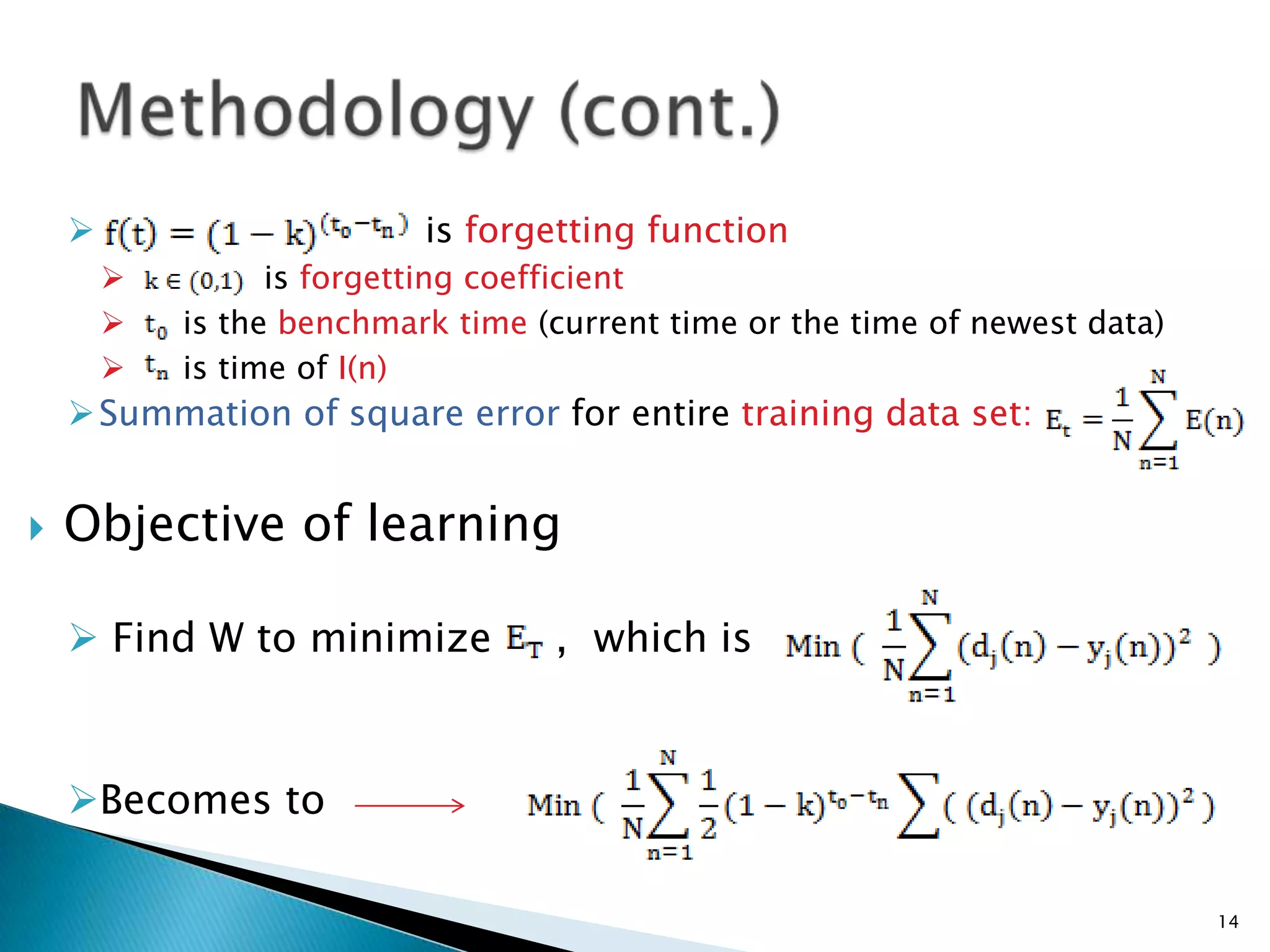

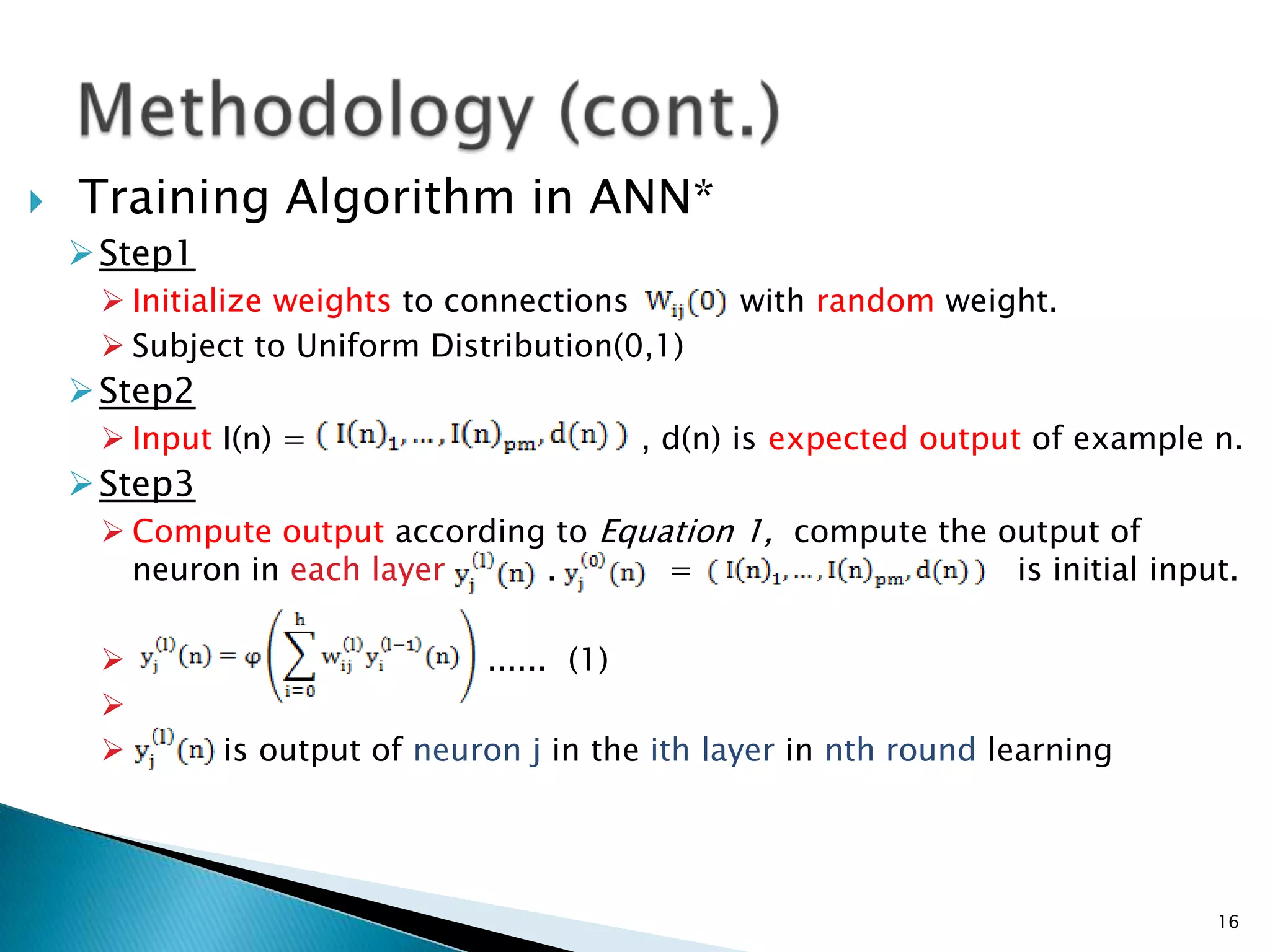

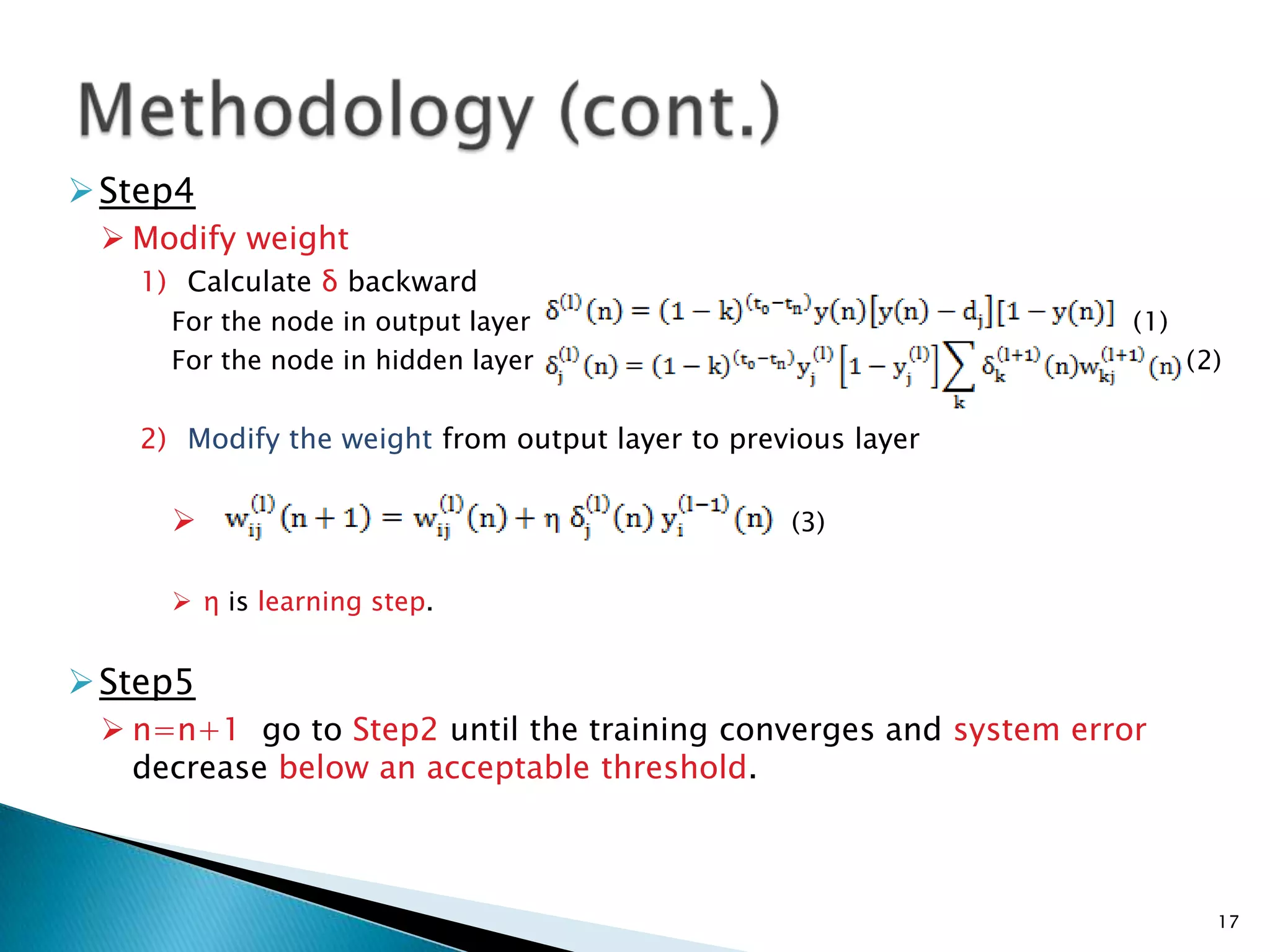

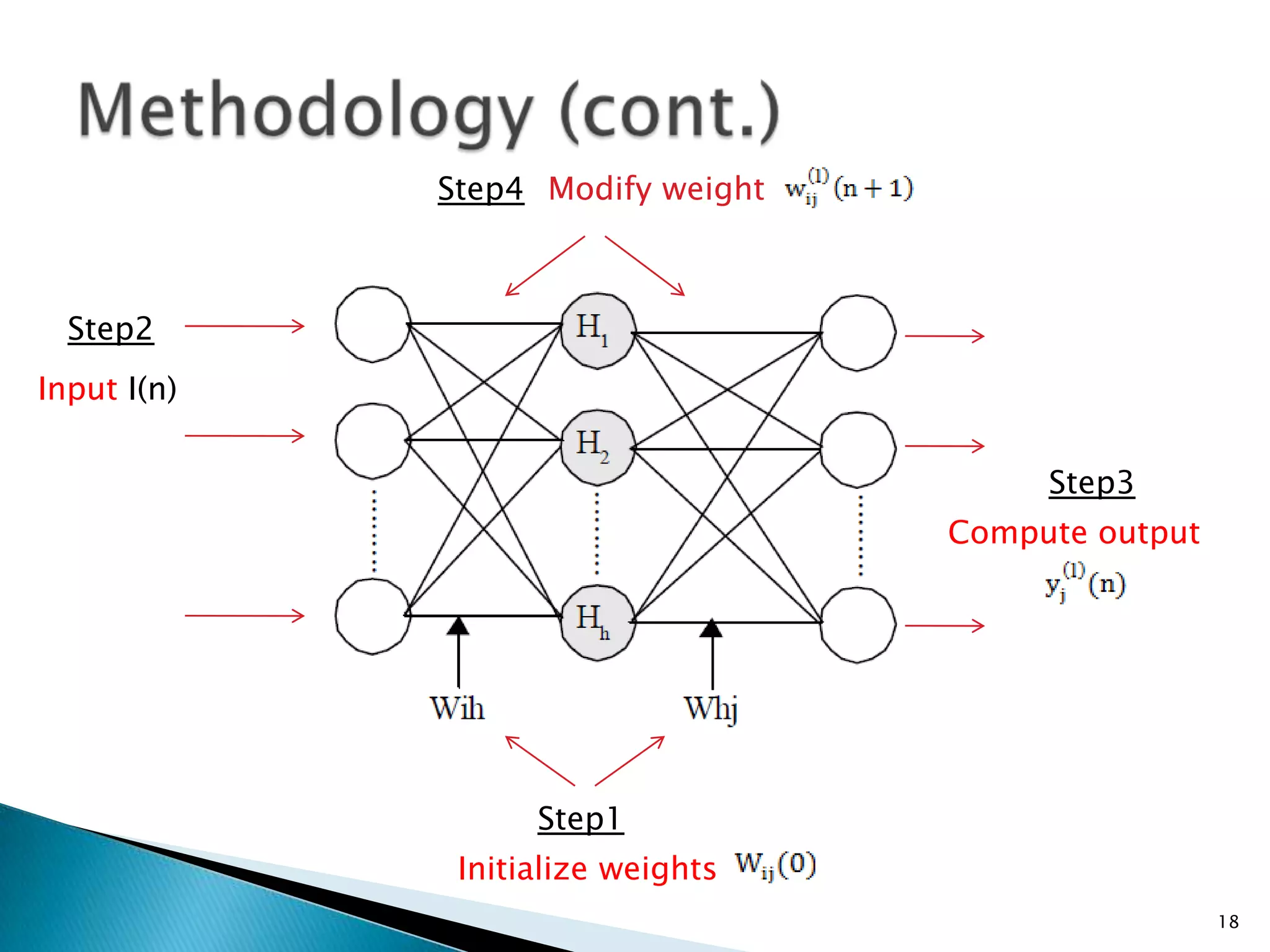

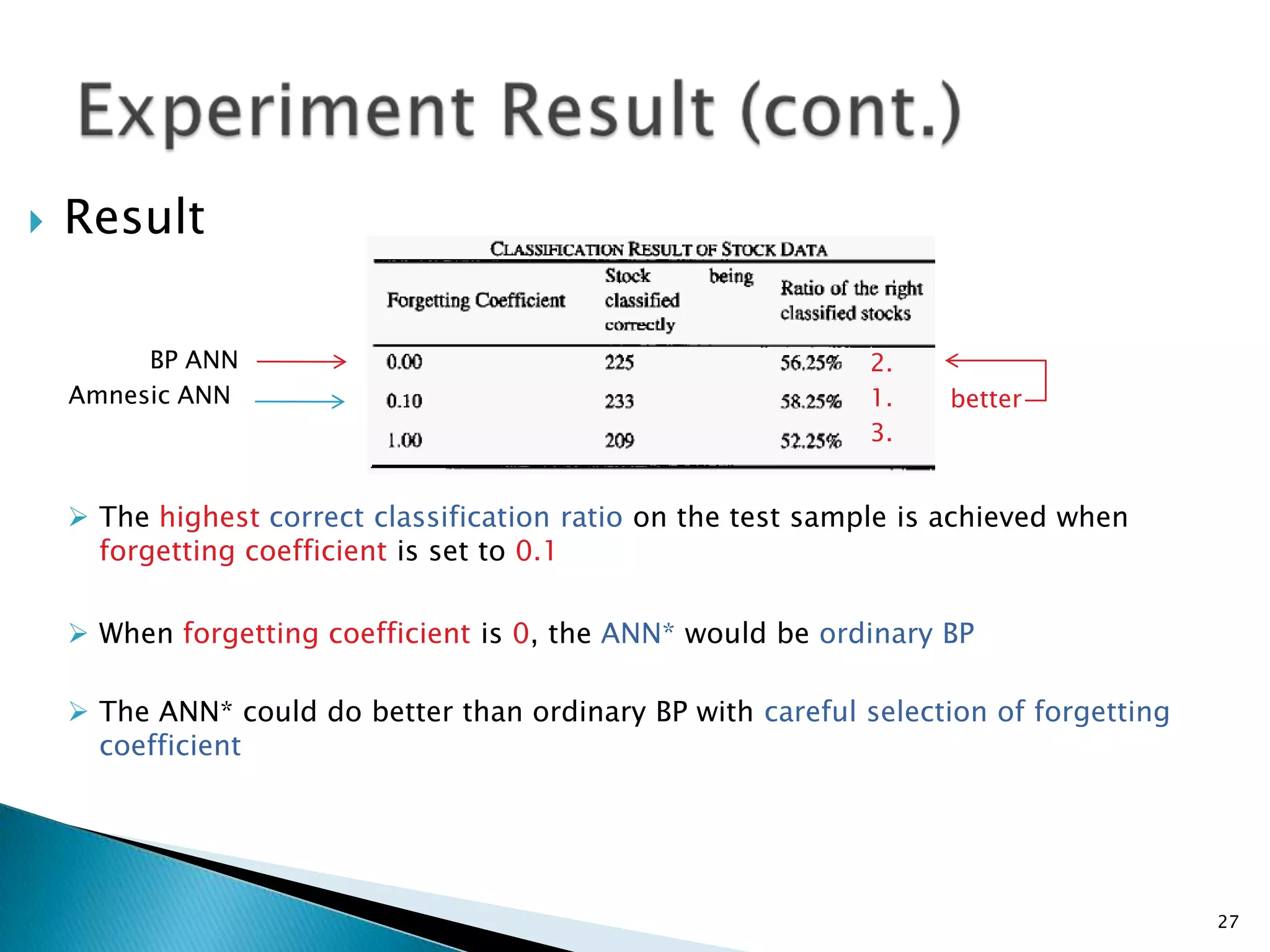

1. The document presents an Amnesic Neural Network model for stock trend prediction that incorporates "forgetting" to address time variation in customer behavior data.

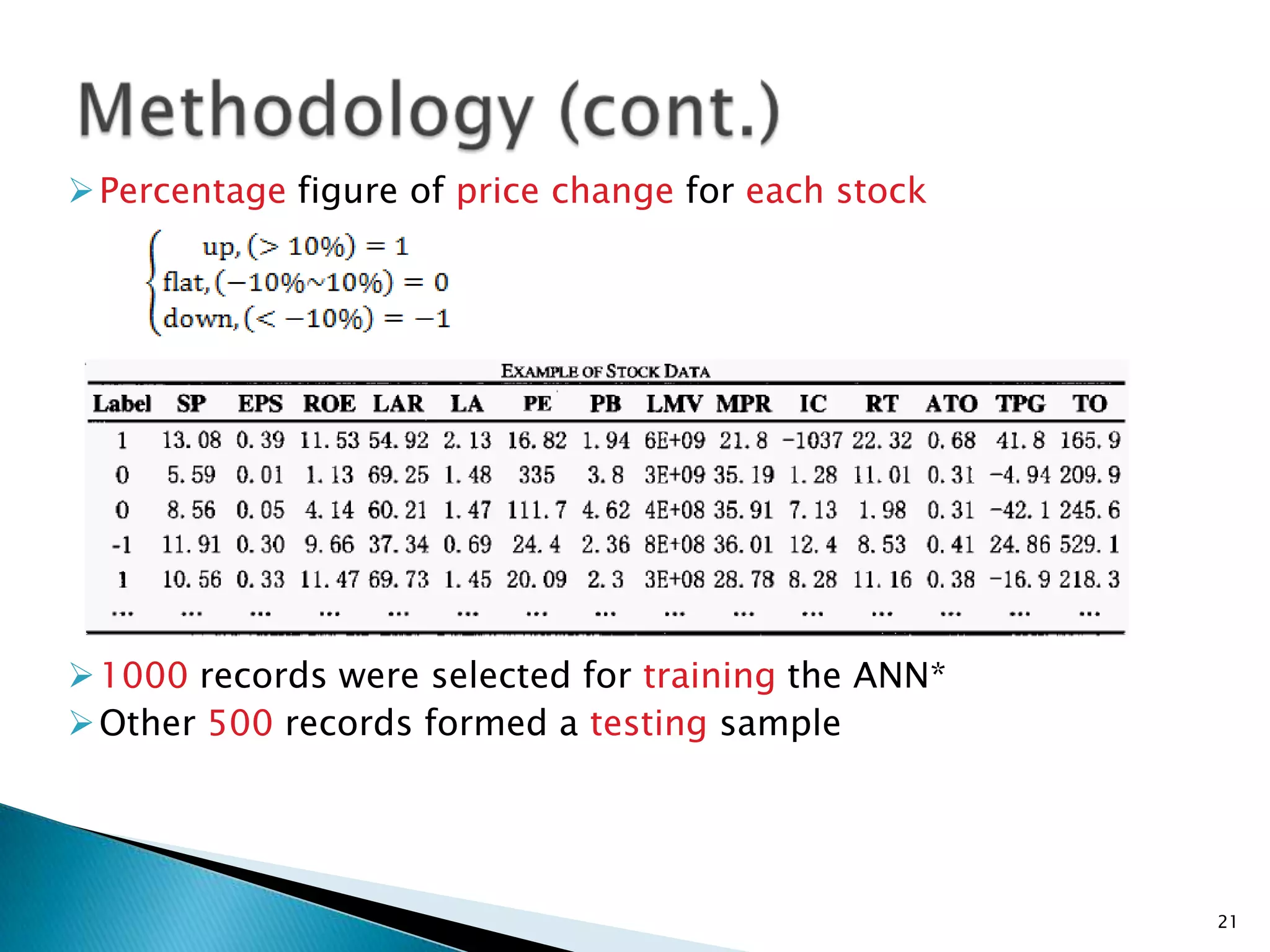

2. It applies the model to data on 900 stocks from 2001-2004 to classify price changes, training the network on 1000 records and testing it on 500.

3. The model achieved its highest classification accuracy on the test data with a forgetting coefficient of 0.1, outperforming a standard backpropagation (BP) neural network without forgetting.
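
The summary does not say how the forgetting coefficient enters training, so the following is only a minimal sketch under stated assumptions. It assumes that forgetting means down-weighting older records geometrically, so that a record `k` steps older than the newest one gets weight `(1 - coeff) ** k`. It also substitutes a single-layer logistic classifier for the BP network to keep the example short. The names `forgetting_weights` and `train_weighted_logistic` are hypothetical and do not come from the source.

```python
import math


def forgetting_weights(n_records, coeff):
    """Per-record weights, oldest record first (assumed geometric decay).

    The newest record gets weight 1.0; coeff = 0 disables forgetting.
    """
    return [(1.0 - coeff) ** (n_records - 1 - i) for i in range(n_records)]


def train_weighted_logistic(xs, ys, weights, lr=0.1, epochs=200):
    """Gradient descent on a sample-weighted logistic loss.

    This is a stand-in for the BP network: each record's error is scaled
    by its forgetting weight before it contributes to the gradient.
    """
    n_features = len(xs[0])
    w = [0.0] * n_features
    b = 0.0
    total = sum(weights)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y, s in zip(xs, ys, weights):
            z = sum(wi * xi for wi, xi in zip(w, x)) + b
            p = 1.0 / (1.0 + math.exp(-z))
            err = s * (p - y)  # older records contribute less
            for j, xj in enumerate(x):
                grad_w[j] += err * xj
            grad_b += err
        w = [wi - lr * g / total for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / total
    return w, b


# Toy usage: four records ordered oldest to newest, one feature each.
xs = [[-2.0], [-1.0], [1.0], [2.0]]
ys = [0, 0, 1, 1]
w, b = train_weighted_logistic(xs, ys, forgetting_weights(len(xs), 0.1))
```

Setting `coeff` to 0 gives every record weight 1.0, which corresponds to the baseline without forgetting in this sketch. Comparing test accuracy across several coefficient values would mirror the comparison the summary describes.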