HKG15-106: Replacing CMEM: Meeting TI's SoC shared buffer allocation, management, and address translation requirements

---------------------------------------------------

Speaker: Gil Pitney + UMM team

Date: February 9, 2015

---------------------------------------------------

★ Session Summary ★

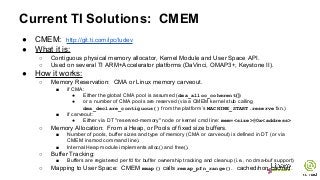

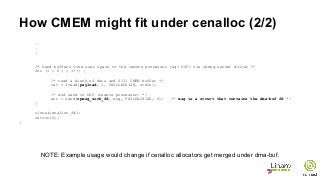

“CMEM is an API and kernel driver for managing one or more blocks of physically contiguous memory. It also provides address translation services (e.g. virtual to physical translation) and user-mode cache management APIs.” See: http://processors.wiki.ti.com/index.php/CMEM_Overview

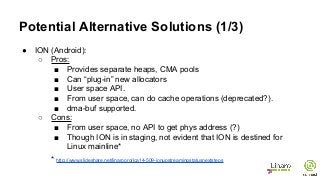

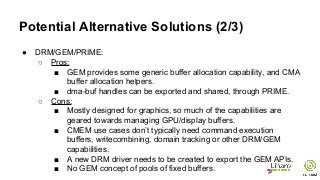

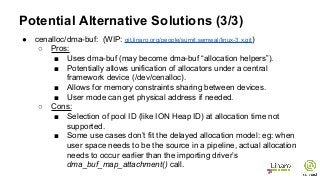

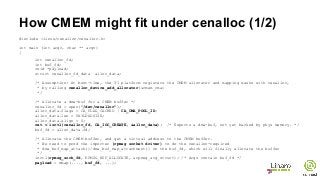

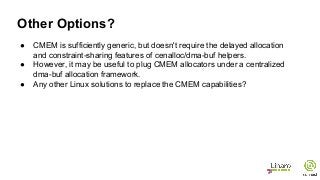

CMEM allows TI to share buffers between user space on the ARM and the DSP (or other remote processors). For Keystone, we also need the ability to allocate large (> 2 GB) buffers from CMA. In addition to managing shared data buffers for media applications, CMEM is used by the networking stack to obtain the physical addresses it programs into hardware registers (is a new UIO capability needed?). This session presents the TI SoC requirements currently met by CMEM, followed by a discussion of whether and how these requirements can instead be met using UMM (cenalloc/dma-buf), DRM, CMA, or other mainline Linux mechanisms, existing or under development.
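To make the "virtual to physical translation" requirement concrete, here is a minimal sketch of how a user-space process on mainline Linux can look up the physical address behind one of its own virtual addresses through `/proc/<pid>/pagemap`. This is not CMEM's API and not something proposed in the session; the function names `decode_pagemap_entry` and `virt_to_phys` are hypothetical. It also assumes the caller has sufficient privilege, since recent kernels report a zero frame number to unprivileged readers.

```python
import os
import struct

PAGEMAP_ENTRY_BYTES = 8        # pagemap holds one 64-bit entry per virtual page
PRESENT_BIT = 1 << 63          # bit 63: page is present in RAM
PFN_MASK = (1 << 55) - 1       # bits 0-54: page frame number (when present)


def decode_pagemap_entry(entry, vaddr, page_size):
    """Return the physical address for vaddr, or None if it is unavailable."""
    if not entry & PRESENT_BIT:
        return None            # page not resident (never touched, or swapped out)
    pfn = entry & PFN_MASK
    if pfn == 0:
        return None            # frame number hidden from unprivileged callers
    return pfn * page_size + (vaddr % page_size)


def virt_to_phys(vaddr, pid="self"):
    """Look up vaddr in /proc/<pid>/pagemap (hypothetical helper, Linux only)."""
    page_size = os.sysconf("SC_PAGE_SIZE")
    offset = (vaddr // page_size) * PAGEMAP_ENTRY_BYTES
    # Unbuffered so exactly one aligned 8-byte entry is requested.
    with open(f"/proc/{pid}/pagemap", "rb", buffering=0) as pagemap:
        pagemap.seek(offset)
        raw = pagemap.read(PAGEMAP_ENTRY_BYTES)
    if len(raw) != PAGEMAP_ENTRY_BYTES:
        return None
    (entry,) = struct.unpack("=Q", raw)    # native byte order, 64-bit unsigned
    return decode_pagemap_entry(entry, vaddr, page_size)
```

A lookup like this only reports where a page happens to be at that moment. It neither pins the page nor guarantees that neighbouring pages are physically contiguous, which is why a buffer shared with a DSP or programmed into hardware registers still needs an allocator that provides those guarantees.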

--------------------------------------------------

★ Resources ★

Pathable: https://hkg15.pathable.com/meetings/250766

Video: https://www.youtube.com/watch?v=j0BhqQlOPQ0

Etherpad: http://pad.linaro.org/p/hkg15-106

---------------------------------------------------

★ Event Details ★

Linaro Connect Hong Kong 2015 - #HKG15

February 9-13, 2015

Regal Airport Hotel, Hong Kong Airport

---------------------------------------------------

http://www.linaro.org

http://connect.linaro.org