More Related Content

What's hot

Statistical global modeling of β^- decay halflives systematics ...

Premeditated Initial Points for K-Means Clustering

COLOCATION MINING IN UNCERTAIN DATA SETS: A PROBABILISTIC APPROACH

High-throughput discovery of low-dimensional and topologically non-trivial ma...

Applications of Machine Learning for Materials Discovery at NREL

A survey paper on sequence pattern mining with incremental

Extremely Low Bit Transformer Quantization for On-Device NMT

Similar to (Talk in Powerpoint Format)

Clustering for Stream and Parallelism (DATA ANALYTICS)

. An introduction to machine learning and probabilistic ...

Opportunities for X-Ray science in future computing architectures

Machine Learning: Foundations Course Number 0368403401

Reduct generation for the incremental data using rough set theory

More from butest

Popular Reading Last Updated April 1, 2010 Adams, Lorraine The ...

The MYnstrel Free Press Volume 2: Economic Struggles, Meet Jazz

Executive Summary Hare Chevrolet is a General Motors dealership ...

Welcome to the Dougherty County Public Library's Facebook and ...

(Talk in Powerpoint Format)

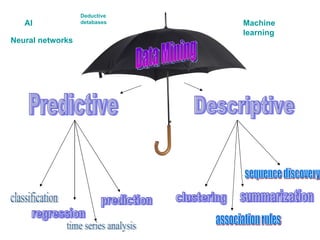

- 1. Data Mining
  - Predictive: classification, regression, time series analysis, prediction
  - Descriptive: clustering, association rules, summarization, sequence discovery
  - Related fields: AI, machine learning, neural networks, deductive databases

- 11. One famous technique: Ross Quinlan's ID3 algorithm

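- 12. The weather data

| Object | Outlook | Temperature | Humidity | Windy | Class |
|---|---|---|---|---|---|
| 1 | sunny | hot | high | FALSE | N |
| 2 | sunny | hot | high | TRUE | N |
| 3 | overcast | hot | high | FALSE | P |
| 4 | rain | mild | high | FALSE | P |
| 5 | rain | cool | normal | FALSE | P |
| 6 | rain | cool | normal | TRUE | N |
| 7 | overcast | cool | normal | TRUE | P |
| 8 | sunny | mild | high | FALSE | N |
| 9 | sunny | cool | normal | FALSE | P |
| 10 | rain | mild | normal | FALSE | P |
| 11 | sunny | mild | normal | TRUE | P |
| 12 | overcast | mild | high | TRUE | P |
| 13 | overcast | hot | normal | FALSE | P |
| 14 | rain | mild | high | TRUE | N |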

- 30. Bayesian Networks. Among graphical models, Markov models have undirected edges, whereas Bayesian networks have directed edges.
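The ID3 algorithm from slides 11 and 12 can be illustrated with a minimal Python sketch on the 14-row weather data. It assumes categorical attributes only, no missing values and no pruning, and uses information gain (entropy reduction) to choose each split. The helper names `entropy`, `information_gain`, `id3` and `classify`, and the tuple-based tree representation, are hypothetical choices made for this illustration, not taken from the talk.

```python
import math
from collections import Counter

# Weather data from slide 12: (Outlook, Temperature, Humidity, Windy, Class).
ATTRIBUTES = ["Outlook", "Temperature", "Humidity", "Windy"]
DATA = [
    ("sunny", "hot", "high", False, "N"),
    ("sunny", "hot", "high", True, "N"),
    ("overcast", "hot", "high", False, "P"),
    ("rain", "mild", "high", False, "P"),
    ("rain", "cool", "normal", False, "P"),
    ("rain", "cool", "normal", True, "N"),
    ("overcast", "cool", "normal", True, "P"),
    ("sunny", "mild", "high", False, "N"),
    ("sunny", "cool", "normal", False, "P"),
    ("rain", "mild", "normal", False, "P"),
    ("sunny", "mild", "normal", True, "P"),
    ("overcast", "mild", "high", True, "P"),
    ("overcast", "hot", "normal", False, "P"),
    ("rain", "mild", "high", True, "N"),
]


def entropy(rows):
    """Shannon entropy (in bits) of the class labels (last column) in rows."""
    counts = Counter(row[-1] for row in rows)
    total = len(rows)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def information_gain(rows, attr_index):
    """Entropy of rows minus the weighted entropy after splitting on one attribute."""
    total = len(rows)
    remainder = 0.0
    for value in {row[attr_index] for row in rows}:
        subset = [row for row in rows if row[attr_index] == value]
        remainder += len(subset) / total * entropy(subset)
    return entropy(rows) - remainder


def id3(rows, attr_indices):
    """Build a tree: a leaf is a class label, an inner node is (attribute, {value: subtree})."""
    labels = [row[-1] for row in rows]
    if len(set(labels)) == 1:
        return labels[0]  # pure node: every example has the same class
    if not attr_indices:
        return Counter(labels).most_common(1)[0][0]  # no attributes left: majority class
    # Split on the attribute with the highest information gain (first one wins ties).
    best = max(attr_indices, key=lambda i: information_gain(rows, i))
    remaining = [i for i in attr_indices if i != best]
    branches = {}
    for value in sorted({row[best] for row in rows}, key=str):
        subset = [row for row in rows if row[best] == value]
        branches[value] = id3(subset, remaining)
    return (ATTRIBUTES[best], branches)


def classify(tree, example):
    """Follow branches using a dict mapping attribute name -> value until reaching a leaf."""
    while isinstance(tree, tuple):
        attribute, branches = tree
        tree = branches[example[attribute]]
    return tree


if __name__ == "__main__":
    for i, name in enumerate(ATTRIBUTES):
        print(f"Gain({name}) = {information_gain(DATA, i):.3f}")
    print(id3(DATA, list(range(len(ATTRIBUTES)))))
```

On this data, Outlook has the largest information gain (about 0.247 bits from a starting entropy of about 0.940), so it becomes the root. The overcast branch is pure (P), sunny is then split on Humidity, and rain is split on Windy.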