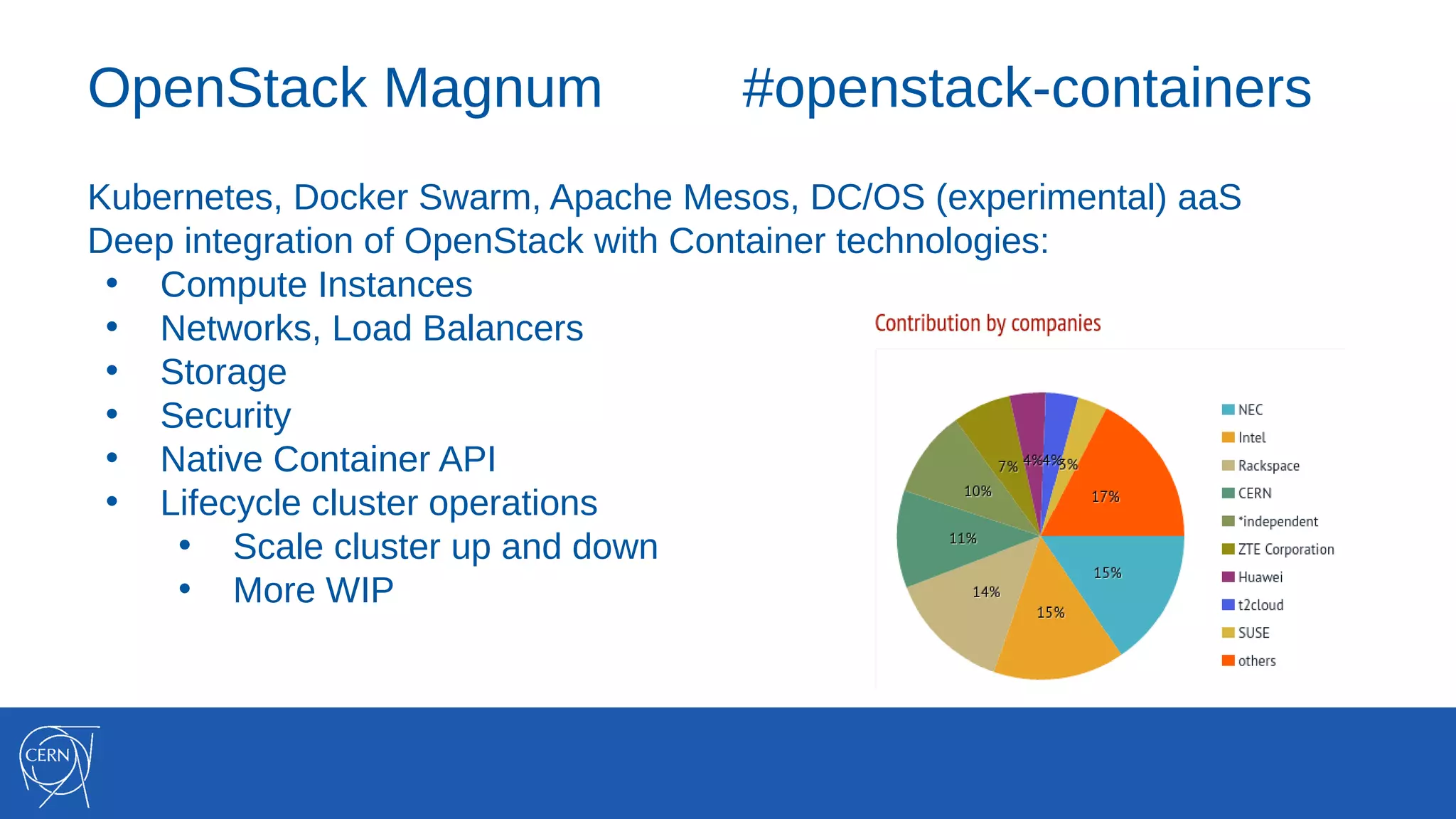

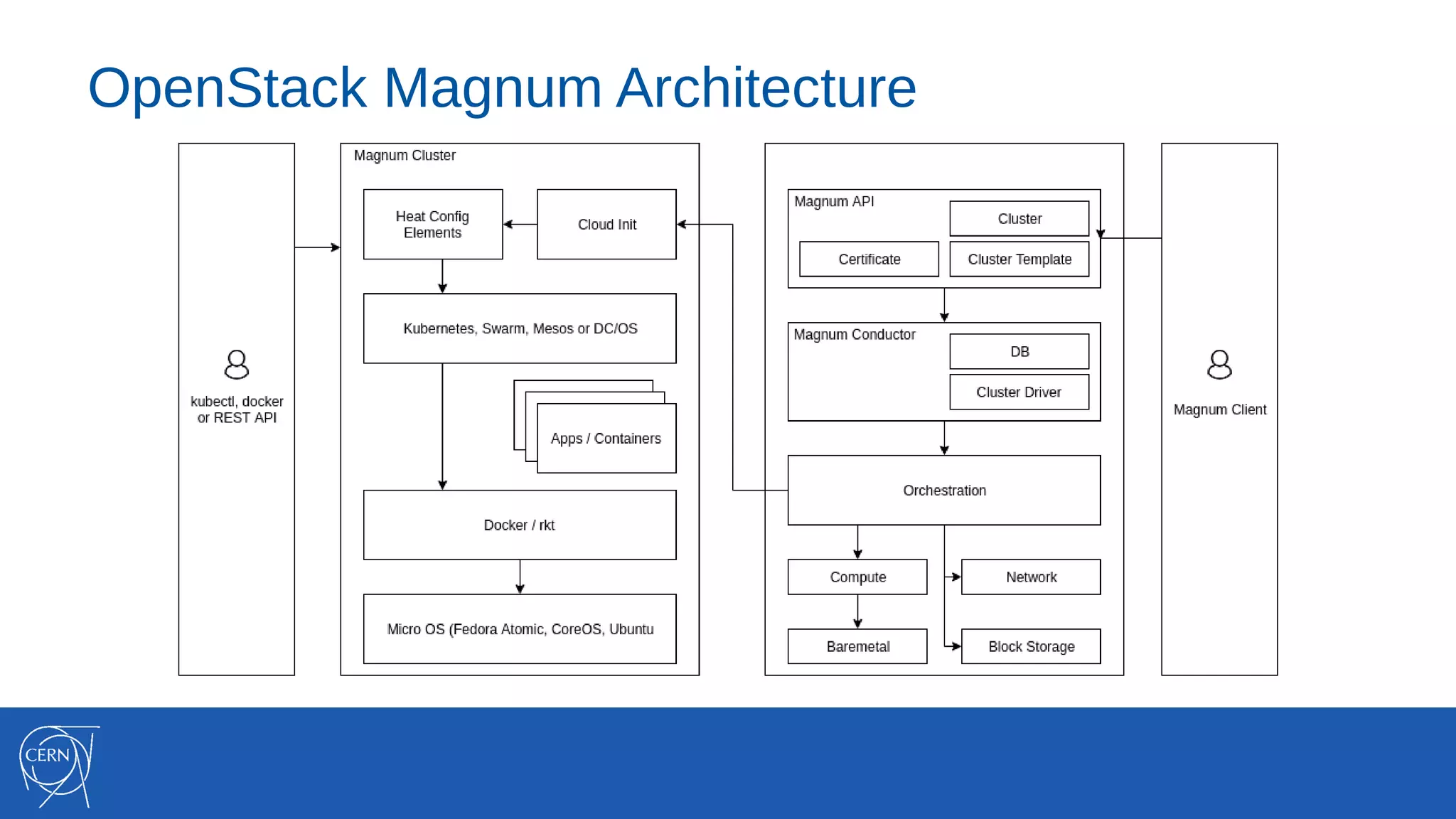

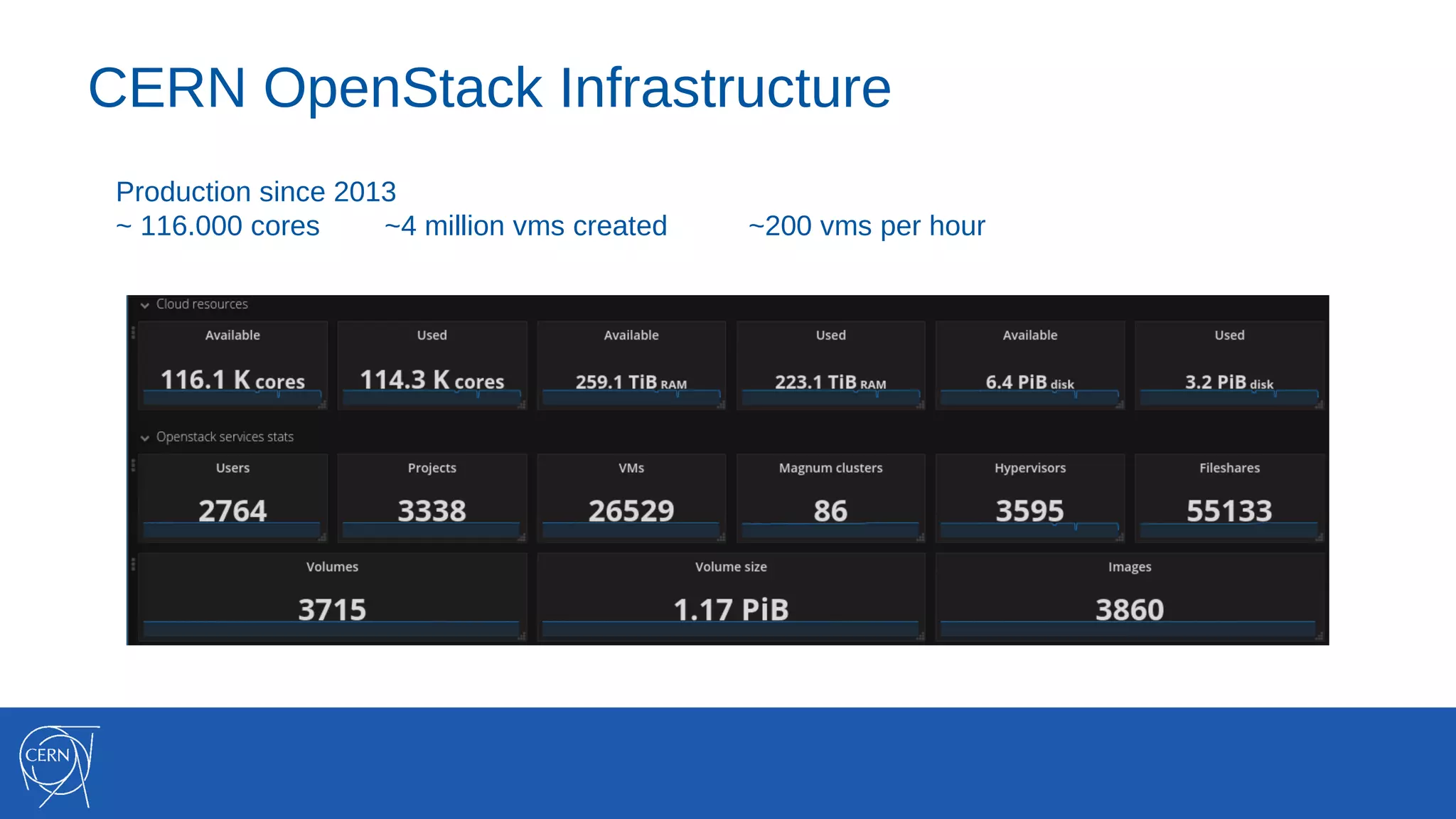

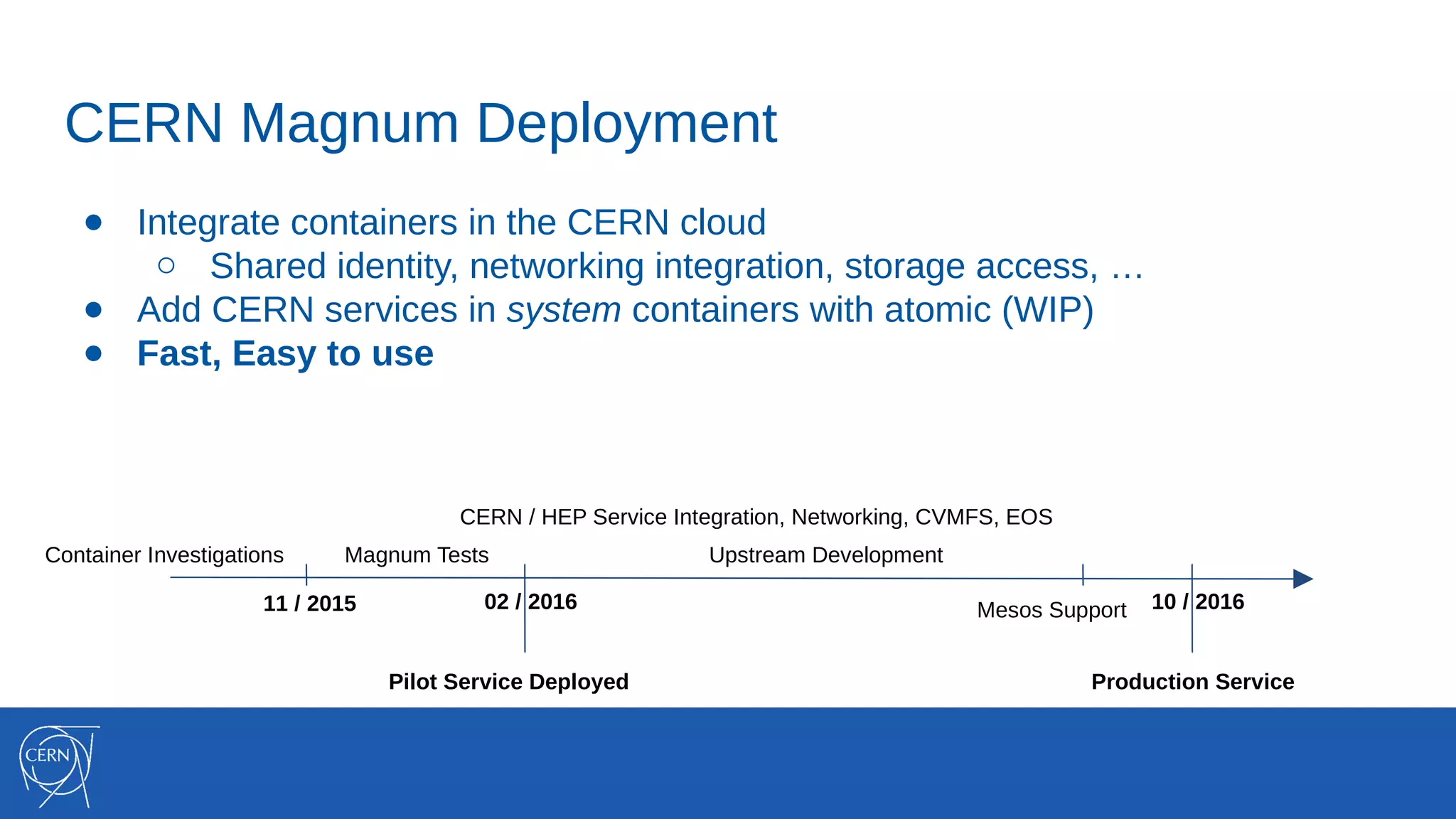

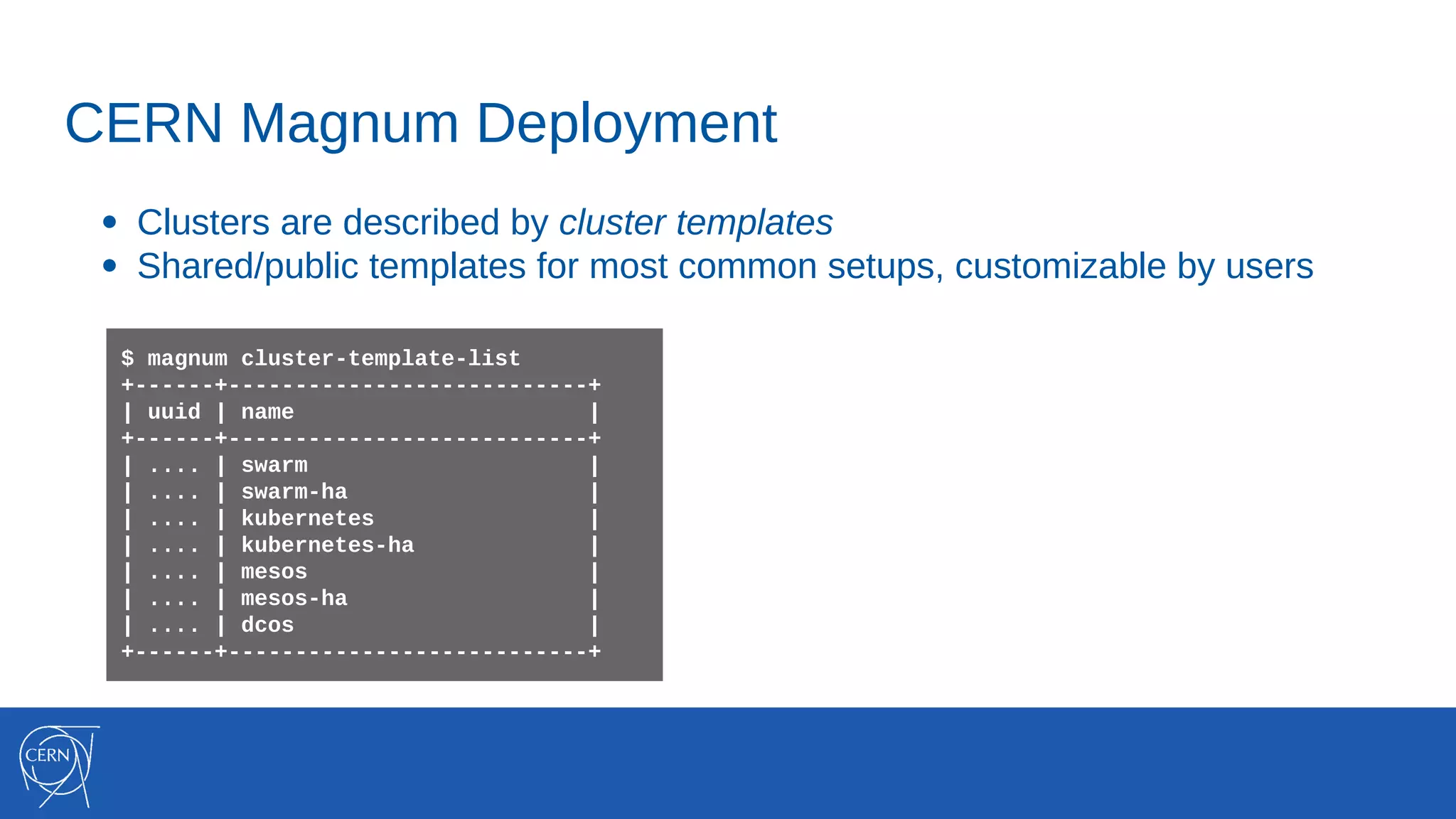

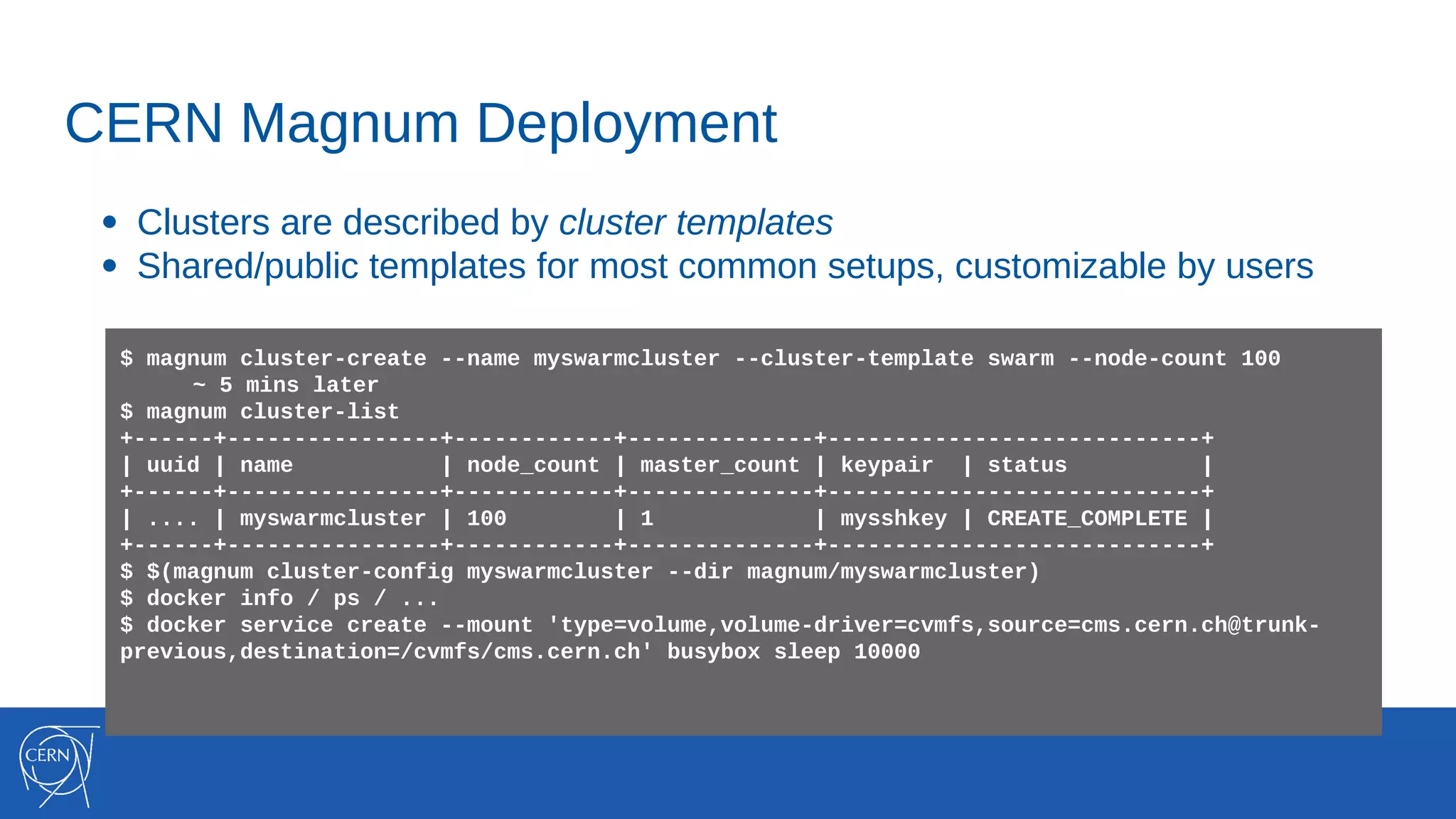

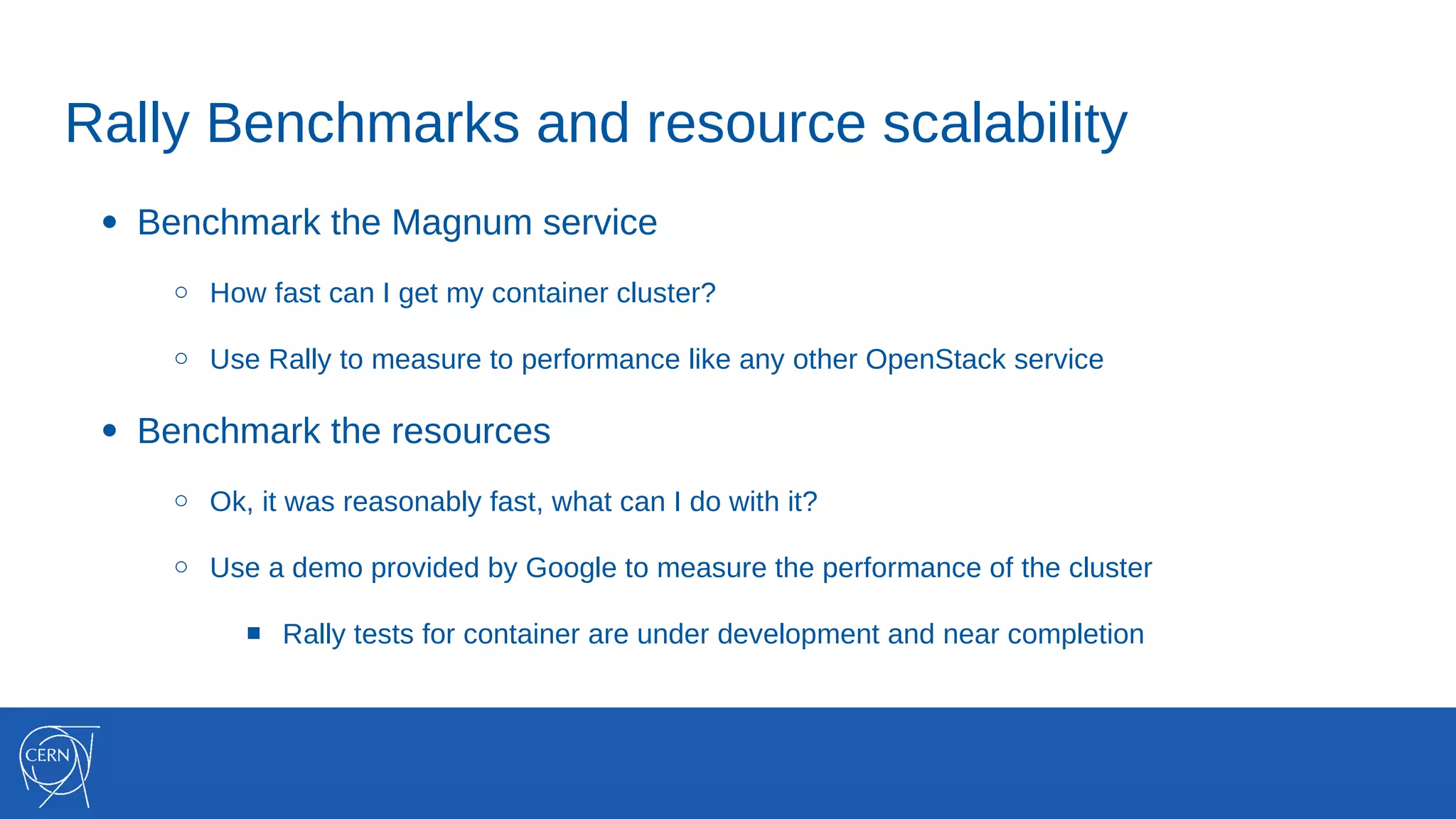

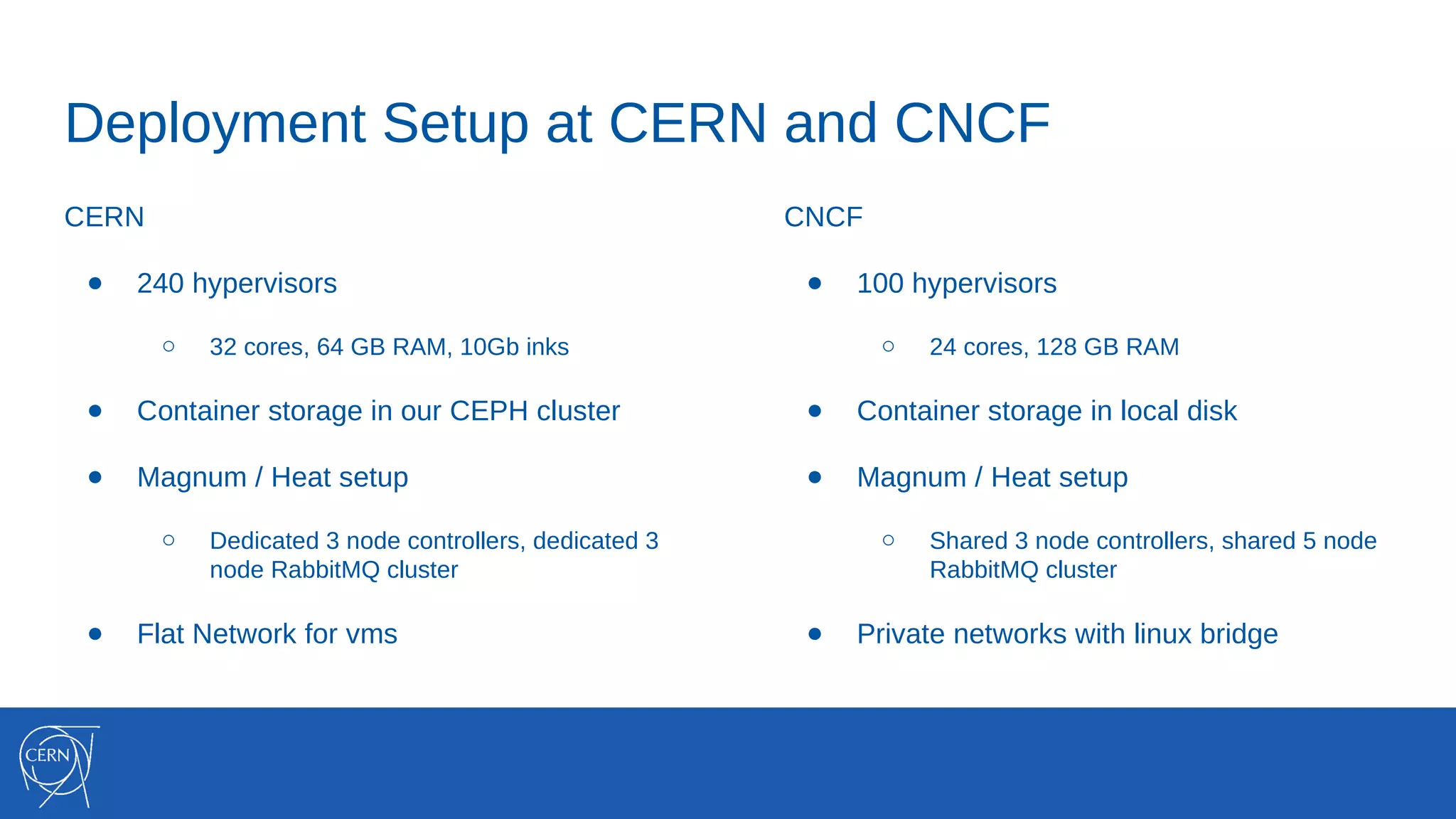

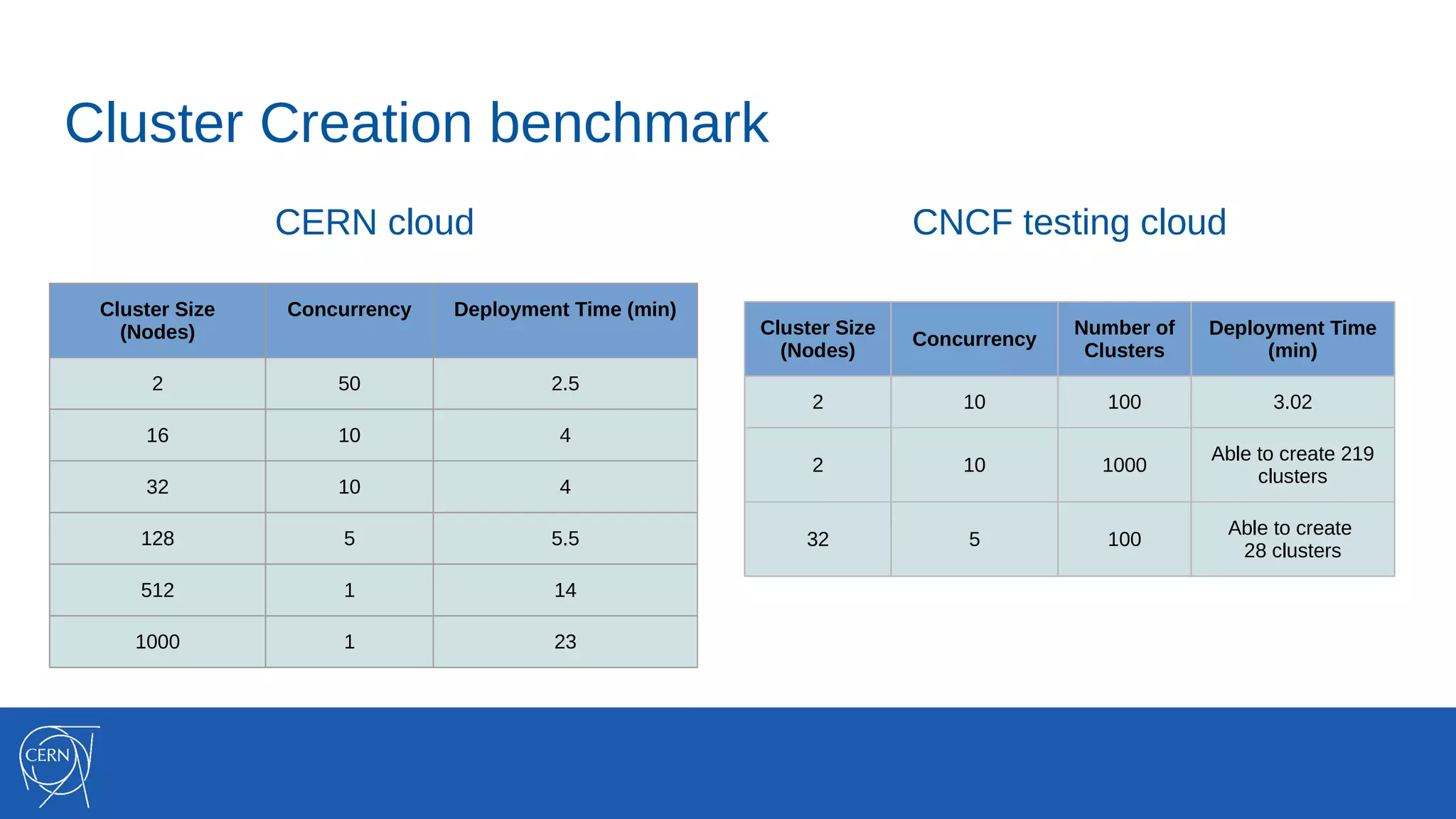

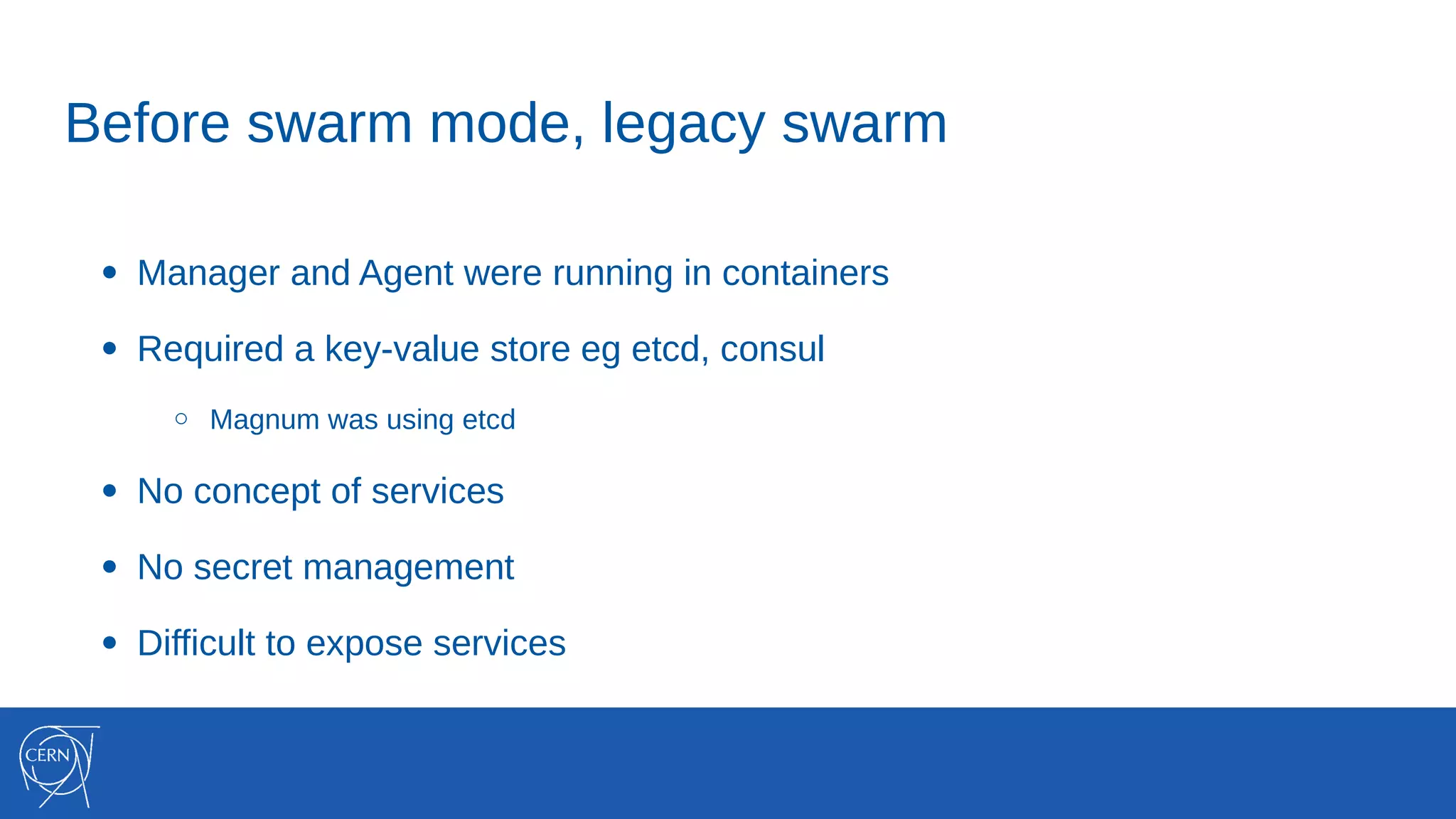

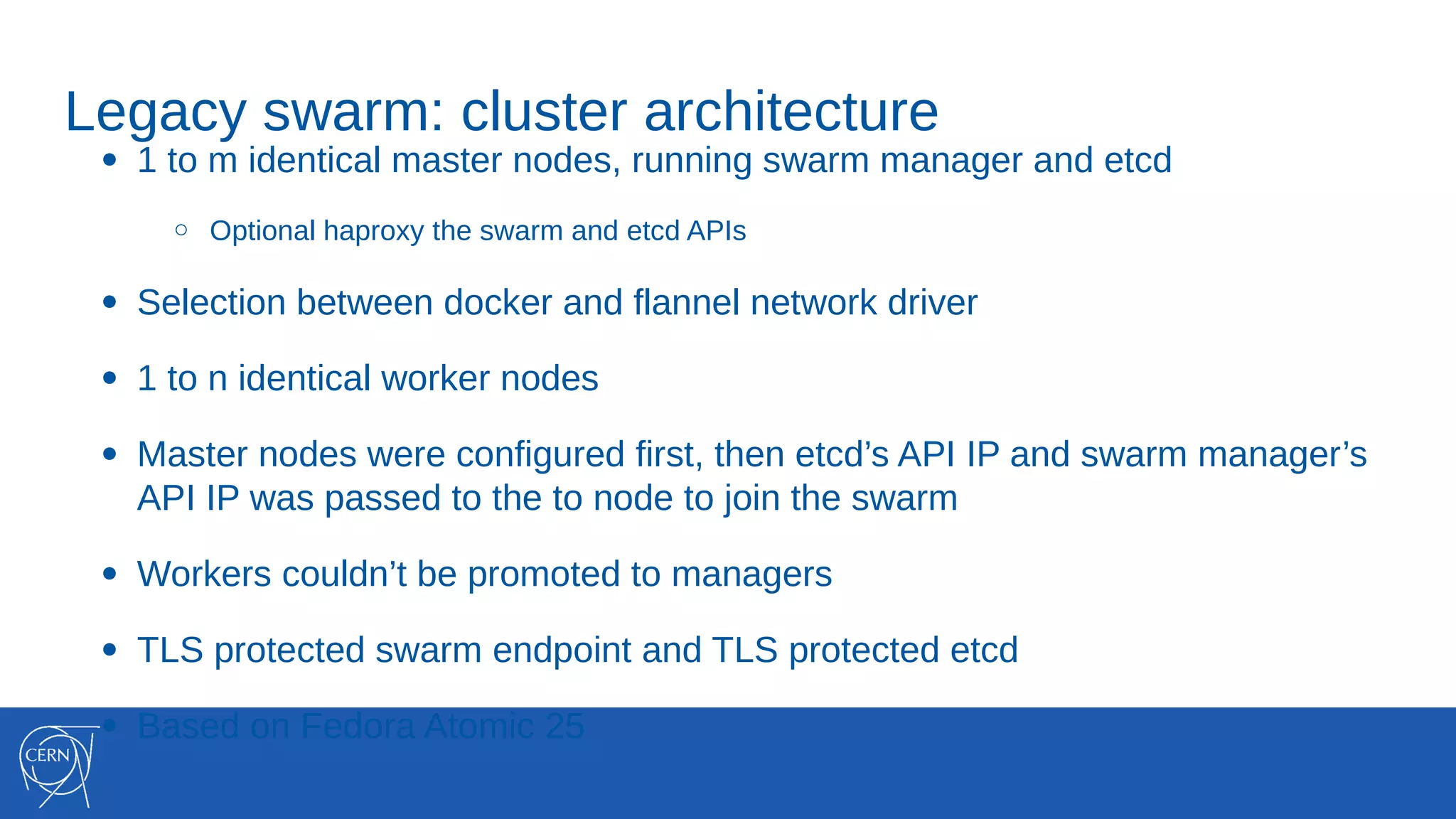

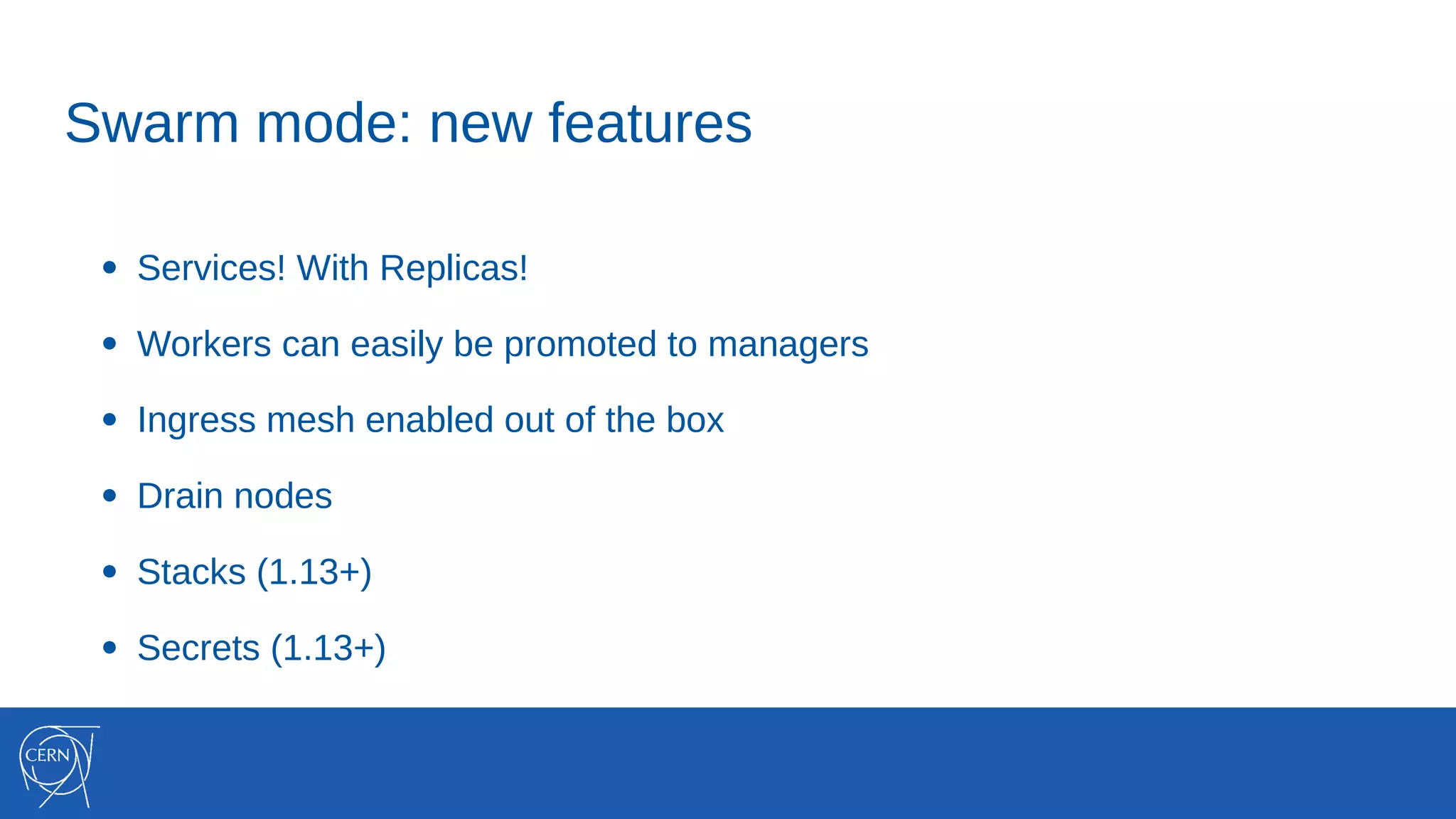

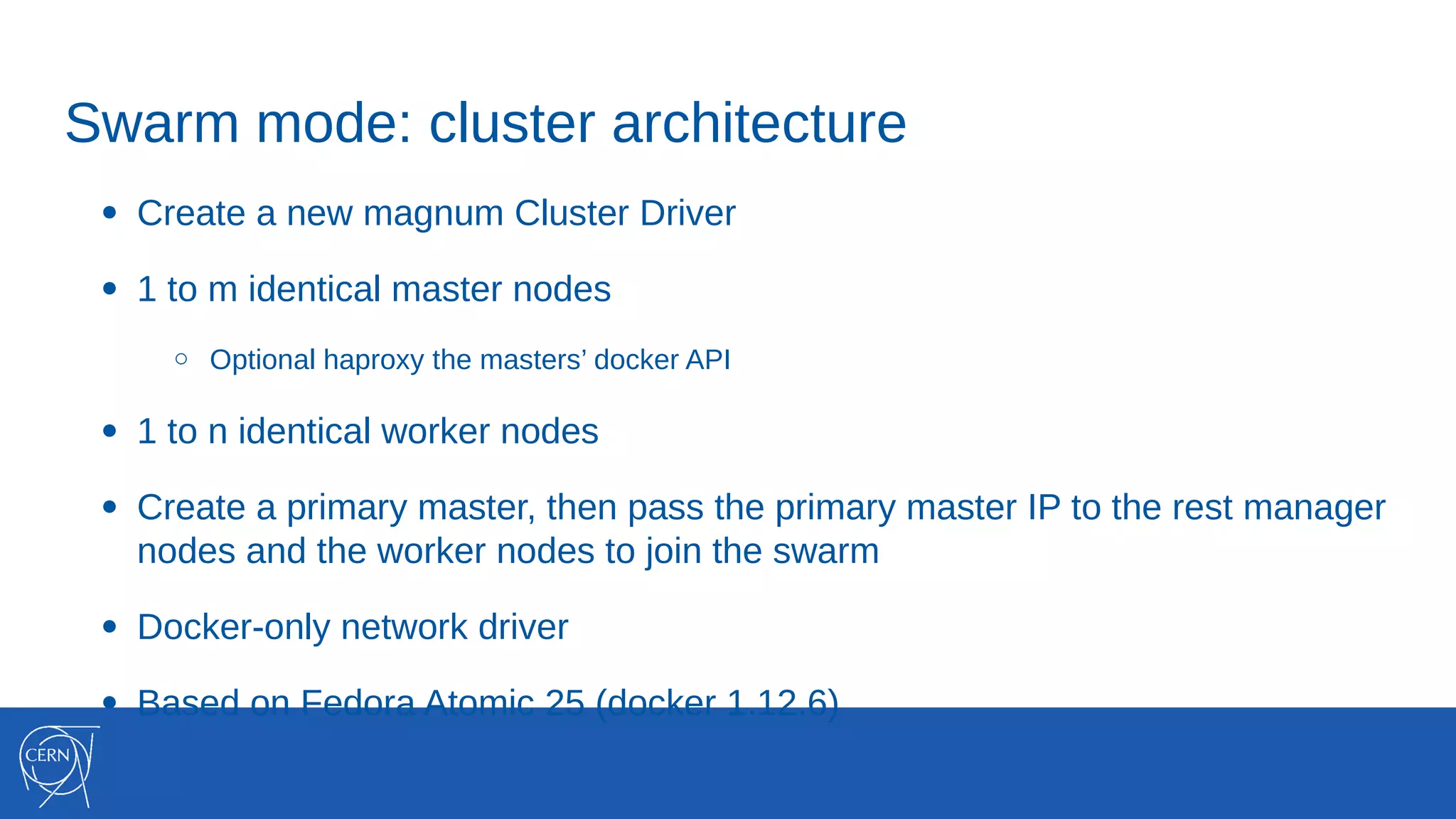

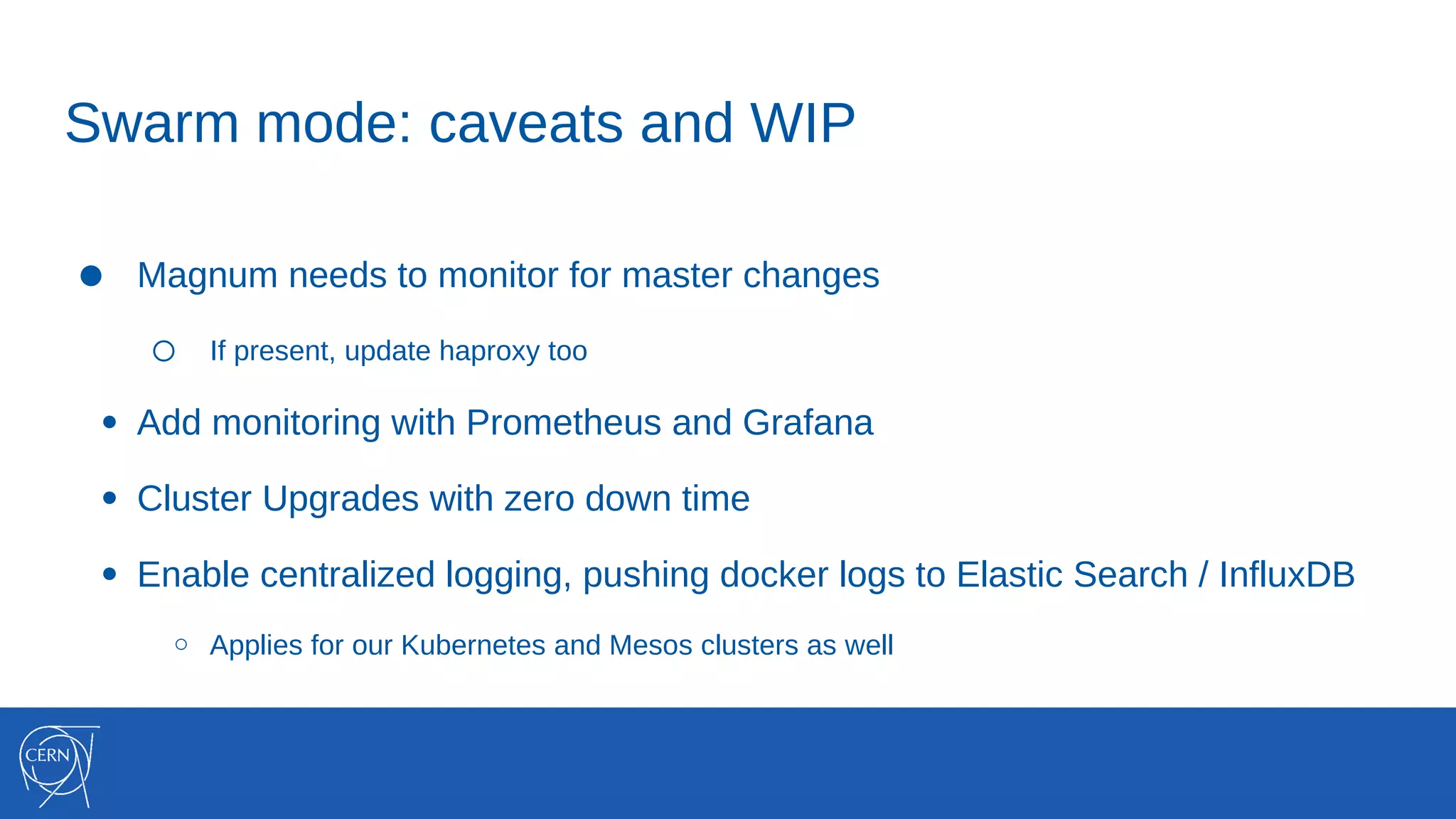

The document discusses the integration of container technologies, specifically OpenStack Magnum, into CERN's cloud infrastructure since 2013, covering deployment, use cases, and architectural developments. It highlights container setups such as Kubernetes and Docker Swarm, cluster performance benchmarks, and the challenges of transitioning to Swarm mode. It also outlines essential features and caveats for managing and monitoring container deployments at CERN.