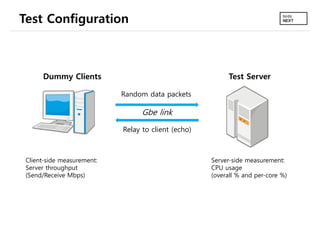

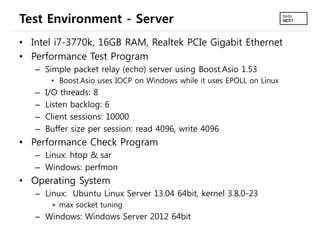

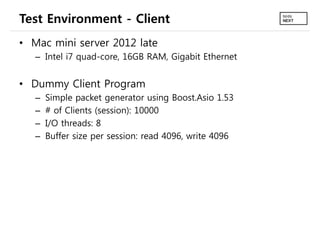

1. The document compares the network I/O performance of IOCP on Windows servers with that of EPOLL on Linux servers.

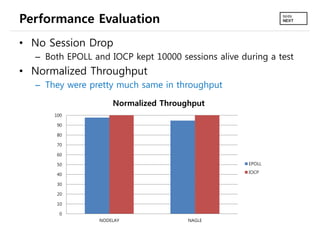

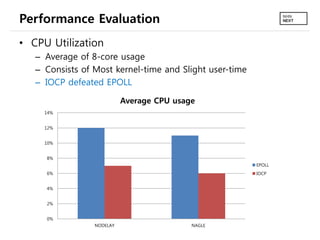

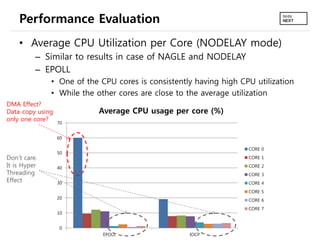

2. Testing showed similar throughput for IOCP and EPOLL, but with RSS/multi-queue not enabled on the NIC, IOCP used less CPU overall.

3. With RSS/multi-queue enabled on the NIC, CPU usage was nearly identical between IOCP and EPOLL.
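
For readers unfamiliar with the two models, the following is a minimal sketch of the readiness-based style that EPOLL represents. It is not the benchmark code from the document, which is not reproduced here. It assumes Python's standard `selectors` module, whose `DefaultSelector` resolves to an epoll-backed selector on Linux; the function name `echo_once` and the socket-pair setup are hypothetical and chosen only for illustration. IOCP is completion-based rather than readiness-based and is not shown.

```python
import selectors
import socket


def echo_once(payload: bytes) -> bytes:
    """Echo a small payload across a socket pair using a readiness loop.

    Hypothetical helper for illustration only; not taken from the document.
    """
    # DefaultSelector picks the most efficient mechanism available,
    # which is epoll on Linux.
    sel = selectors.DefaultSelector()
    client, server = socket.socketpair()
    client.setblocking(False)
    server.setblocking(False)

    # Ask to be notified when either end becomes readable.
    sel.register(server, selectors.EVENT_READ, data="server")
    sel.register(client, selectors.EVENT_READ, data="client")

    client.send(payload)  # small payload, fits in the socket buffer
    reply = b""
    try:
        # Bounded loop so the sketch cannot hang if something goes wrong.
        for _ in range(10):
            for key, _mask in sel.select(timeout=1.0):
                chunk = key.fileobj.recv(4096)
                if key.data == "server":
                    key.fileobj.send(chunk)  # server side echoes the data back
                else:
                    reply = chunk  # client side receives the echo
            if reply:
                break
    finally:
        sel.close()
        client.close()
        server.close()
    return reply
```

In this style the application is told that a socket is ready and then performs the read itself. With IOCP the application instead posts the read up front and is notified once the operation has completed.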