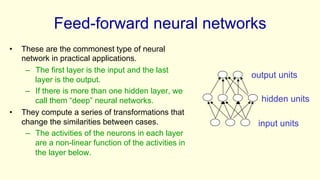

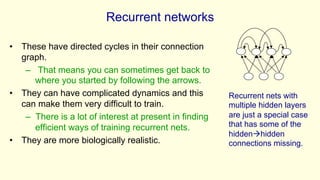

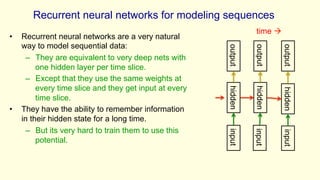

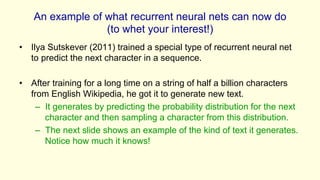

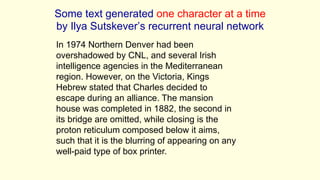

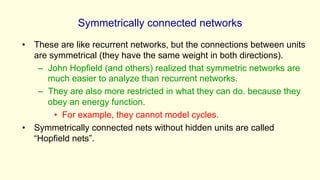

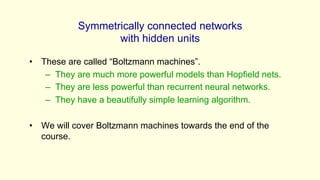

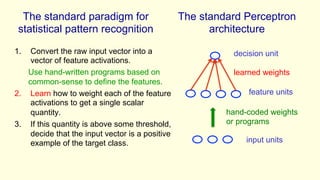

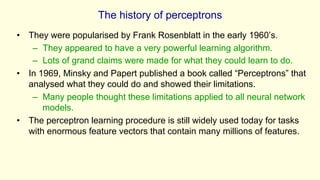

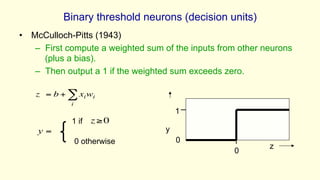

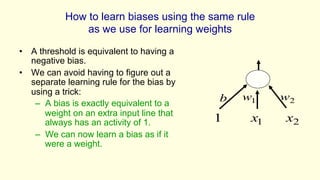

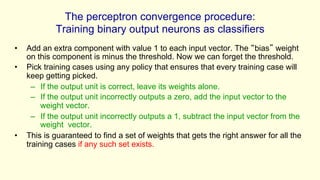

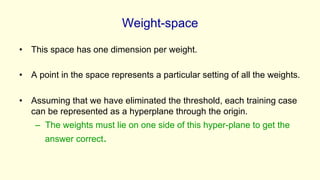

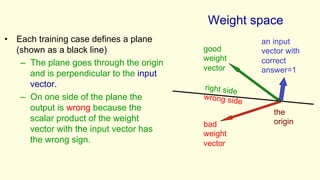

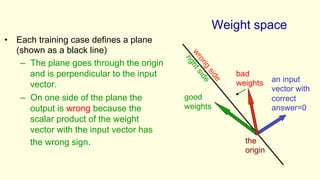

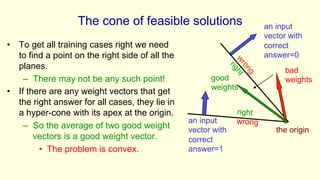

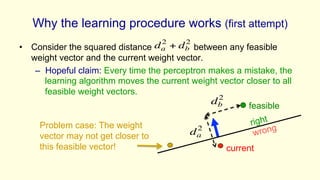

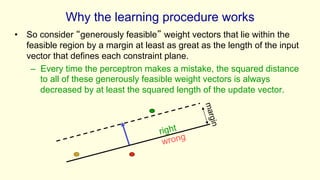

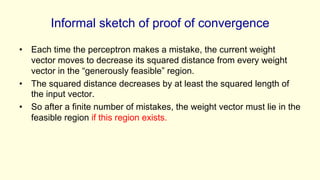

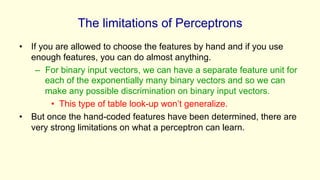

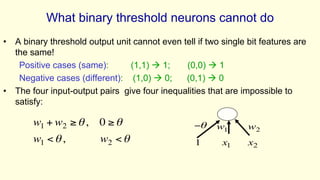

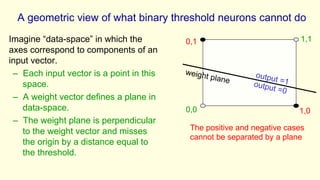

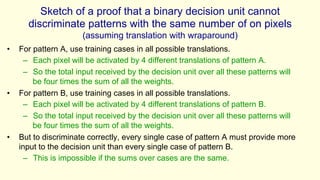

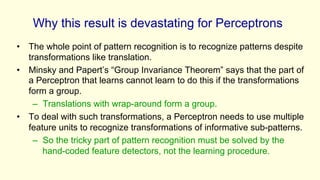

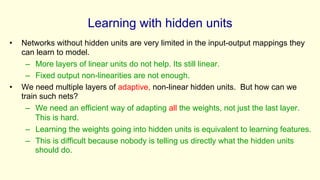

This document surveys three types of neural network architecture: feed-forward, recurrent, and symmetrically connected networks. It then turns to perceptrons, outlining their learning procedure, a geometrical view of how their weights are learned, and their limitations in pattern recognition, including the difficulty of recognizing patterns that have undergone transformations. It concludes that deeper networks need hidden units to learn complex input-output mappings effectively.
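
To make the perceptron learning procedure and its limitation concrete, here is a minimal sketch. It assumes a binary threshold unit whose bias is learned as an extra weight on a constant input of 1; the function name `train_perceptron`, the epoch limit, and the AND and XOR toy data are illustrative choices rather than material from the slides.

```python
import numpy as np

def train_perceptron(X, y, epochs=100):
    """Perceptron rule: change the weights only on misclassified cases."""
    # Append a constant 1 to every input so the bias is learned as a weight.
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])
    w = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        errors = 0
        for xi, target in zip(Xb, y):
            pred = 1 if xi @ w > 0 else 0
            if pred != target:
                # Add the input vector if the output should have been 1,
                # subtract it if the output should have been 0.
                w += (target - pred) * xi
                errors += 1
        if errors == 0:
            return w, True   # a full pass with no mistakes
    return w, False          # epoch limit reached without a clean pass

# Hypothetical toy data: the four binary input pairs.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y_and = np.array([0, 0, 0, 1])  # linearly separable
y_xor = np.array([0, 1, 1, 0])  # not linearly separable

w_and, converged_and = train_perceptron(X, y_and)
w_xor, converged_xor = train_perceptron(X, y_xor)
print("AND converged:", converged_and)
print("XOR converged:", converged_xor)
```

On the AND data the procedure reaches a pass with no mistakes, while on XOR it cannot, because no single weight vector separates the two classes. Under these assumptions, that is the kind of case where hidden units become necessary.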