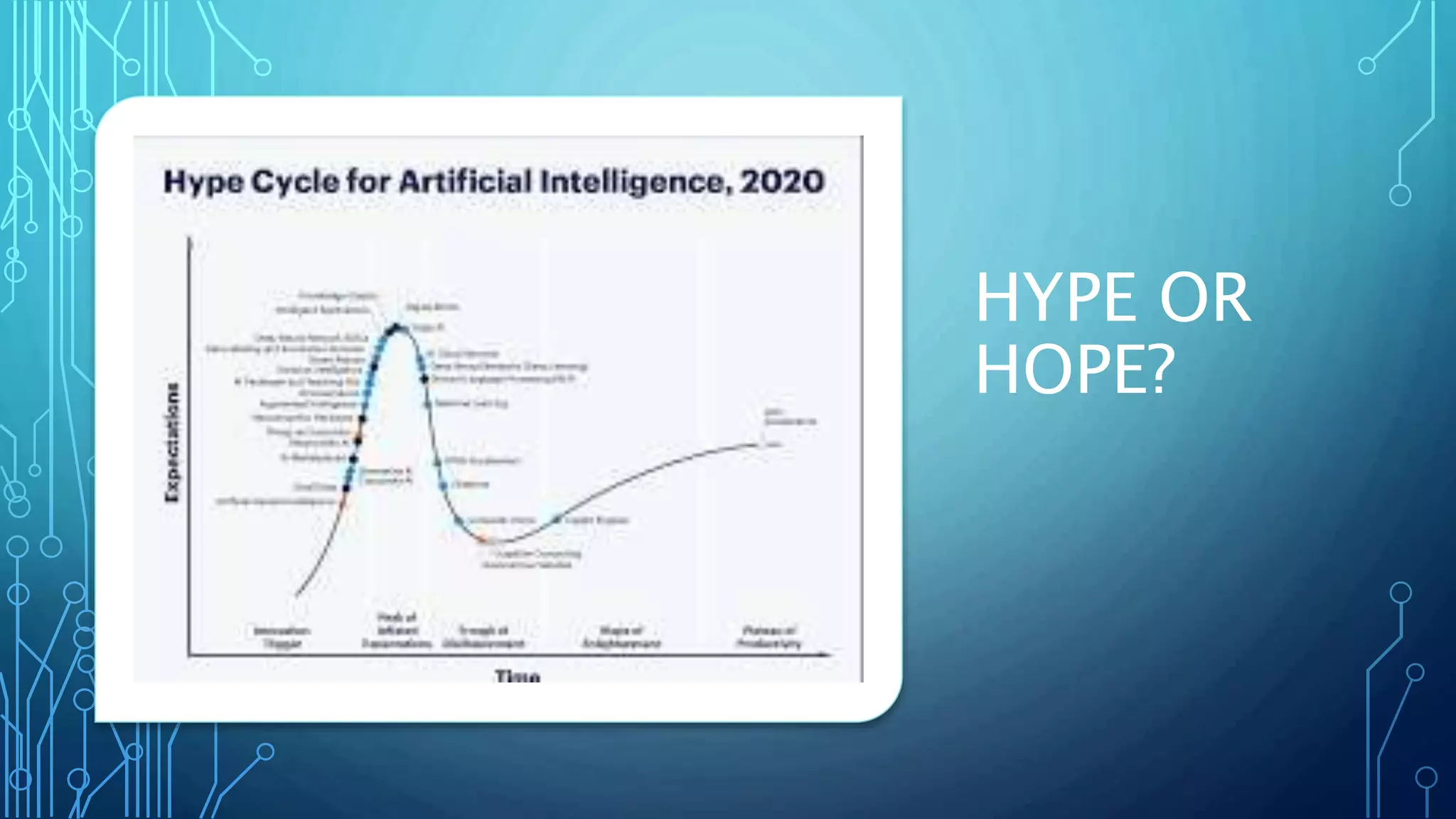

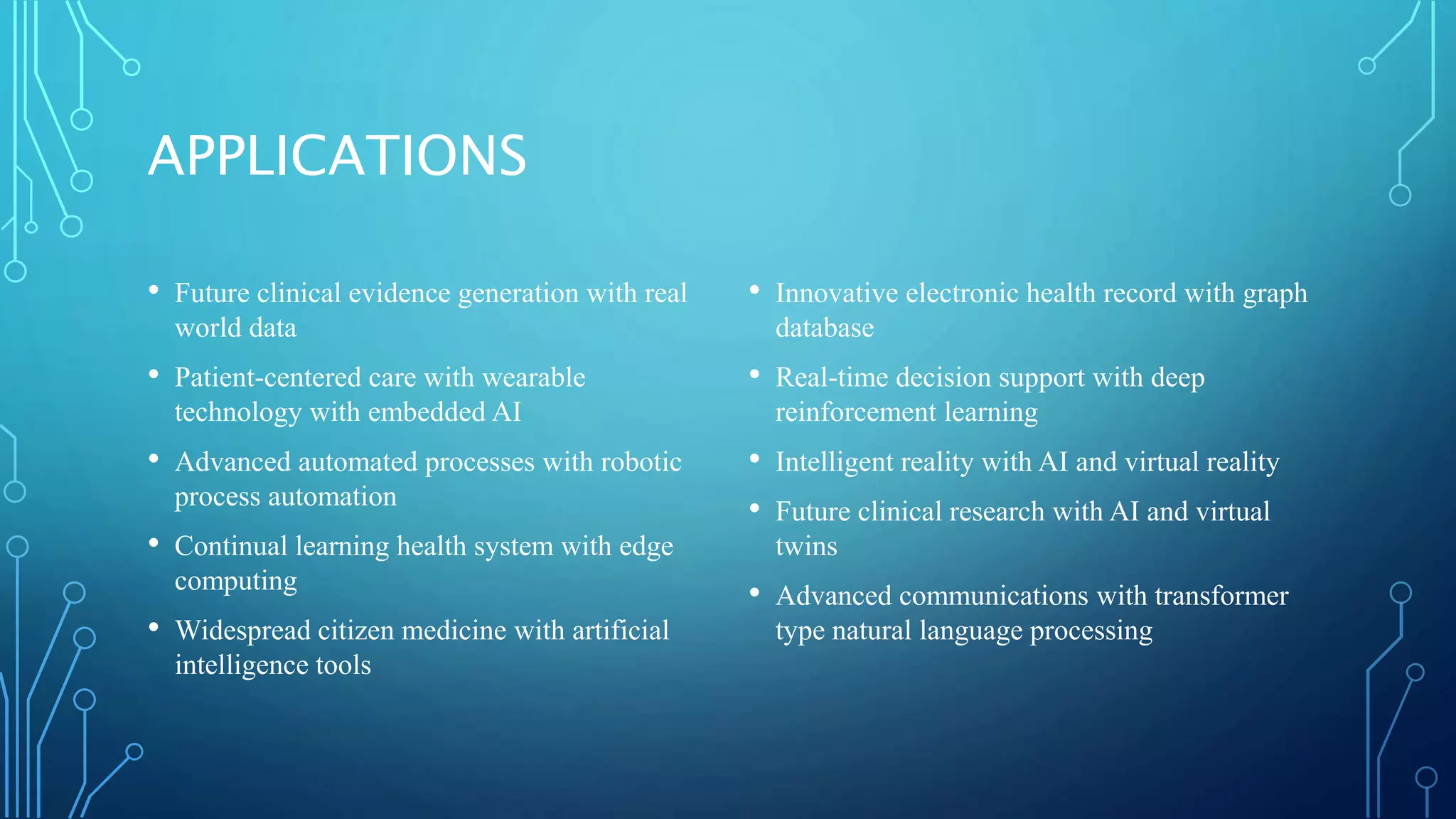

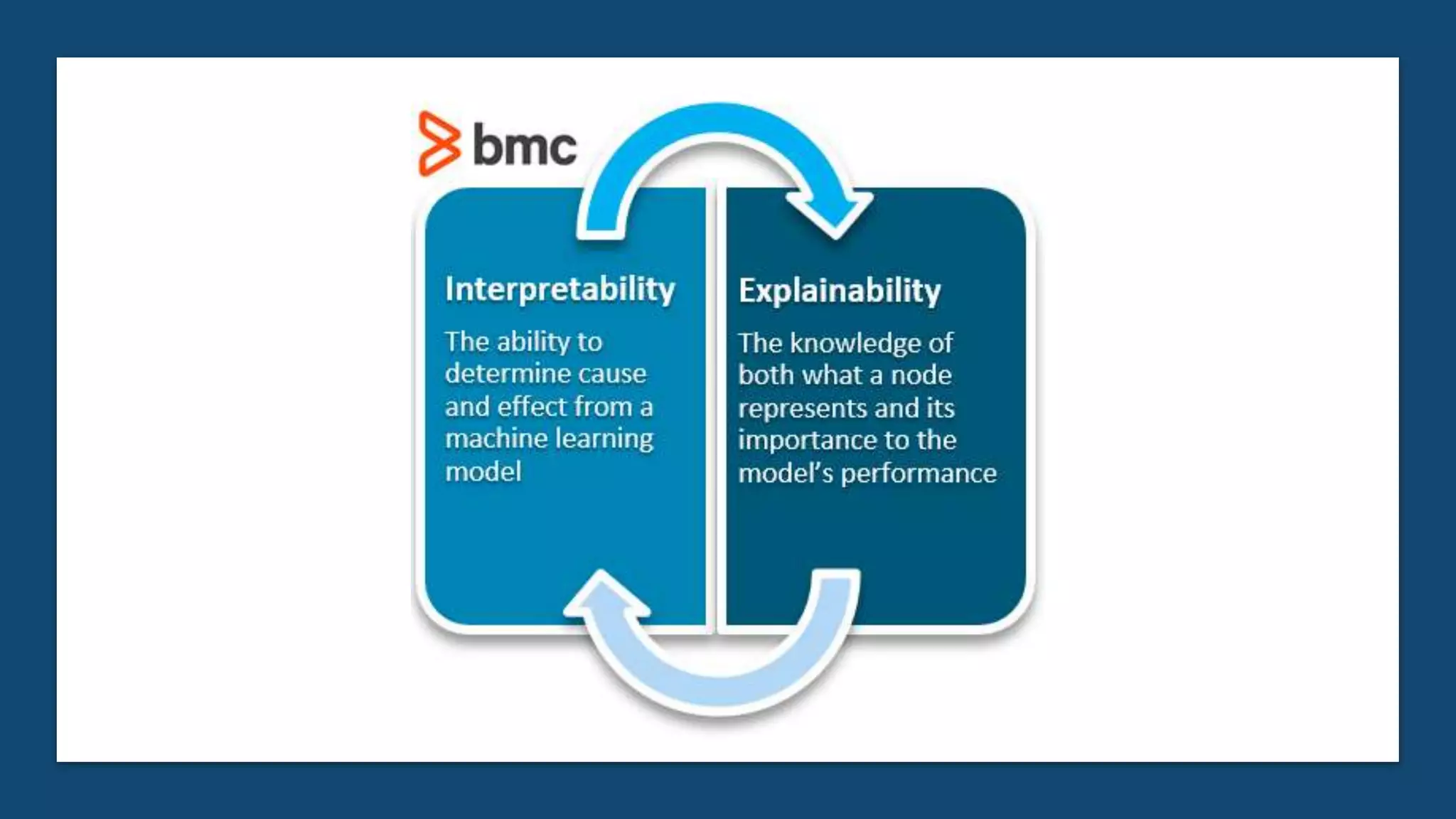

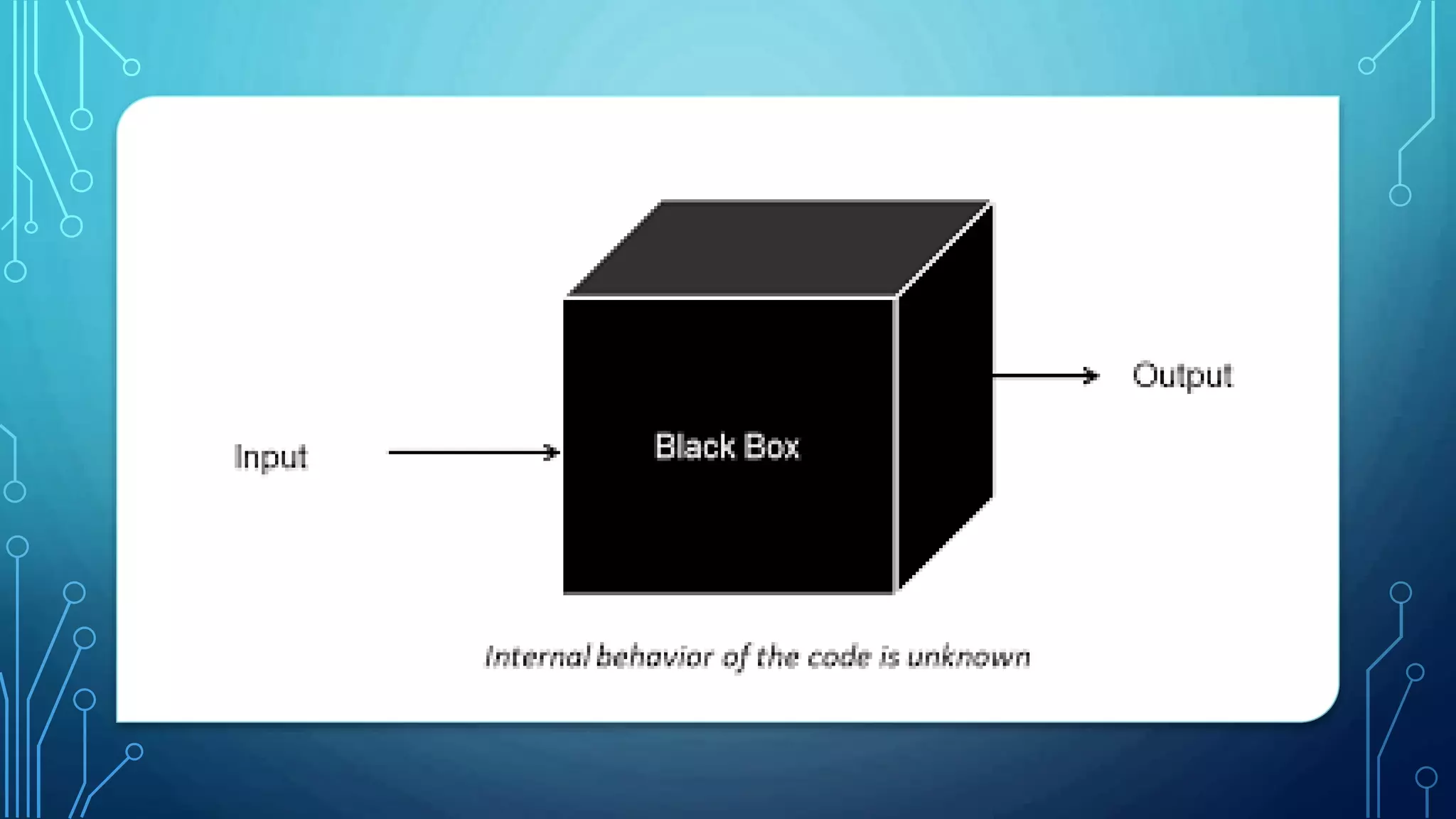

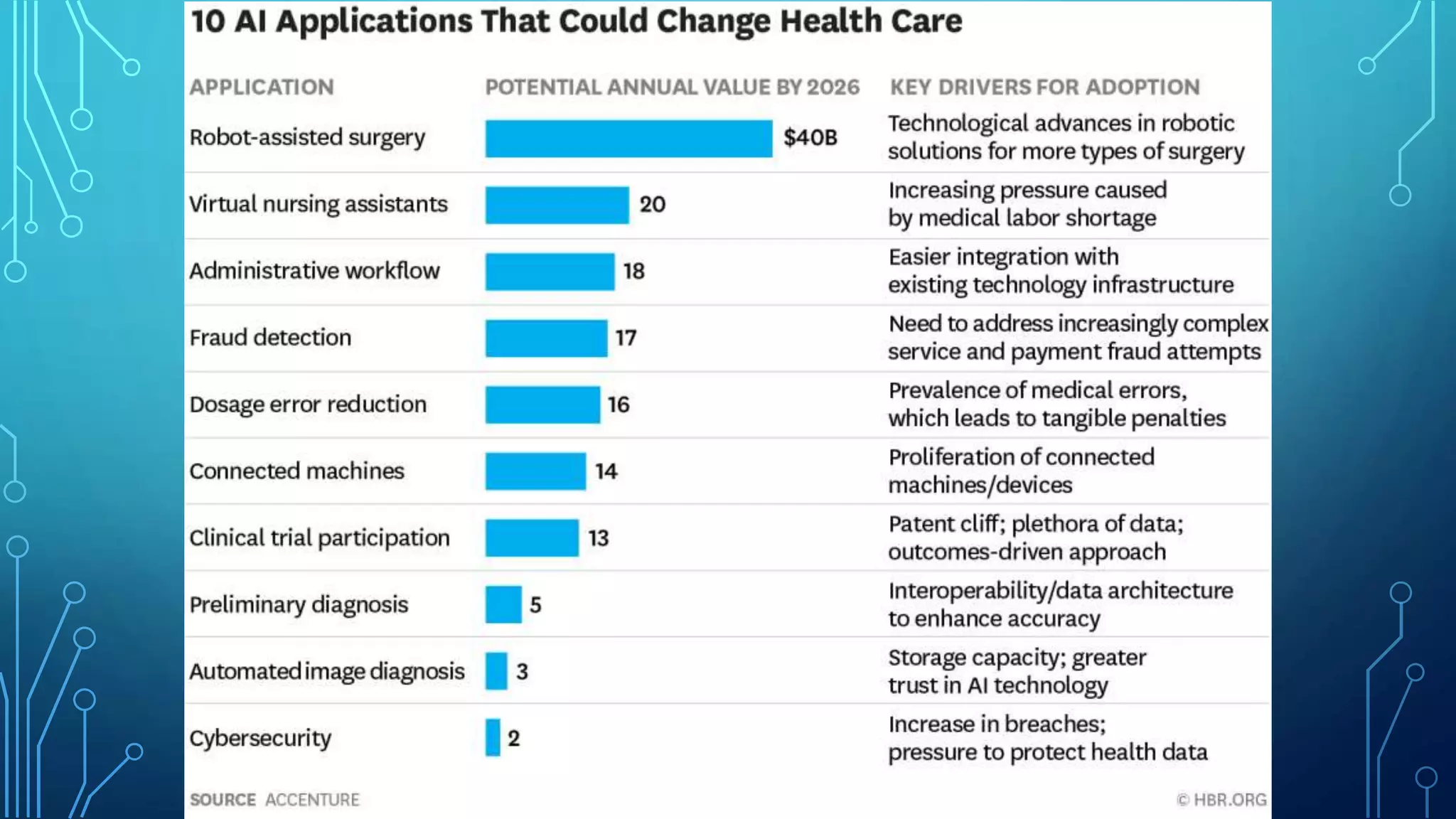

This document provides an expert's overview of artificial intelligence in healthcare. It introduces key concepts such as artificial intelligence, machine learning, and deep learning, and explores applications in areas such as computer vision, vocal biomarkers, and skin cancer detection. It also covers barriers to adoption, including trust, transparency, and bias. Ethical principles from the WHO are outlined, covering human autonomy, safety, transparency, and accountability. The impact on the medical workforce and the potential for AI to widen gaps in healthcare access are debated. A proposed "Patient AI Bill of Rights" addresses consent, bias, explanations, and oversight.