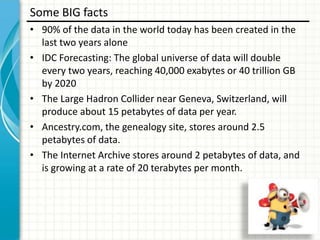

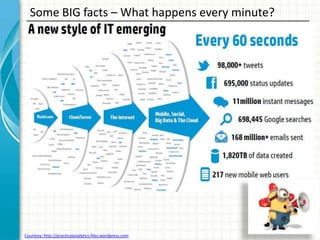

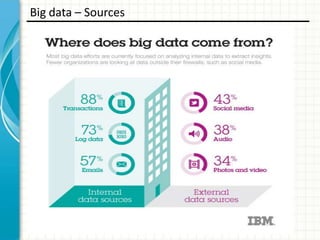

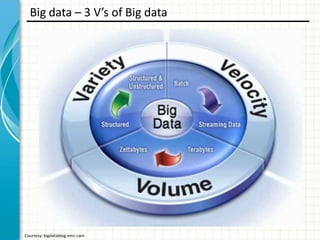

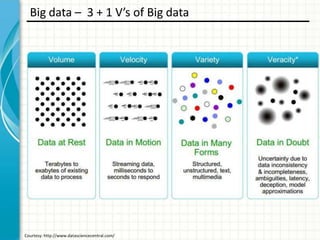

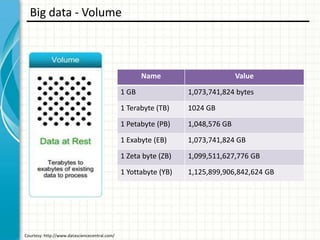

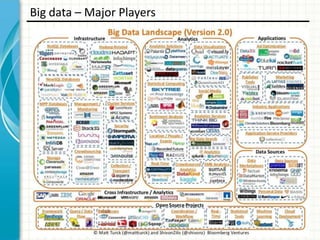

This document provides an overview of big data: what it is, key facts and figures, its objectives, and its sources. It covers the 3 Vs that characterize big data (volume, velocity, and variety) and the 3+1 Vs model, which adds veracity. The document also outlines big data technologies, opportunities, and major players in the field, and concludes with an invitation for questions.