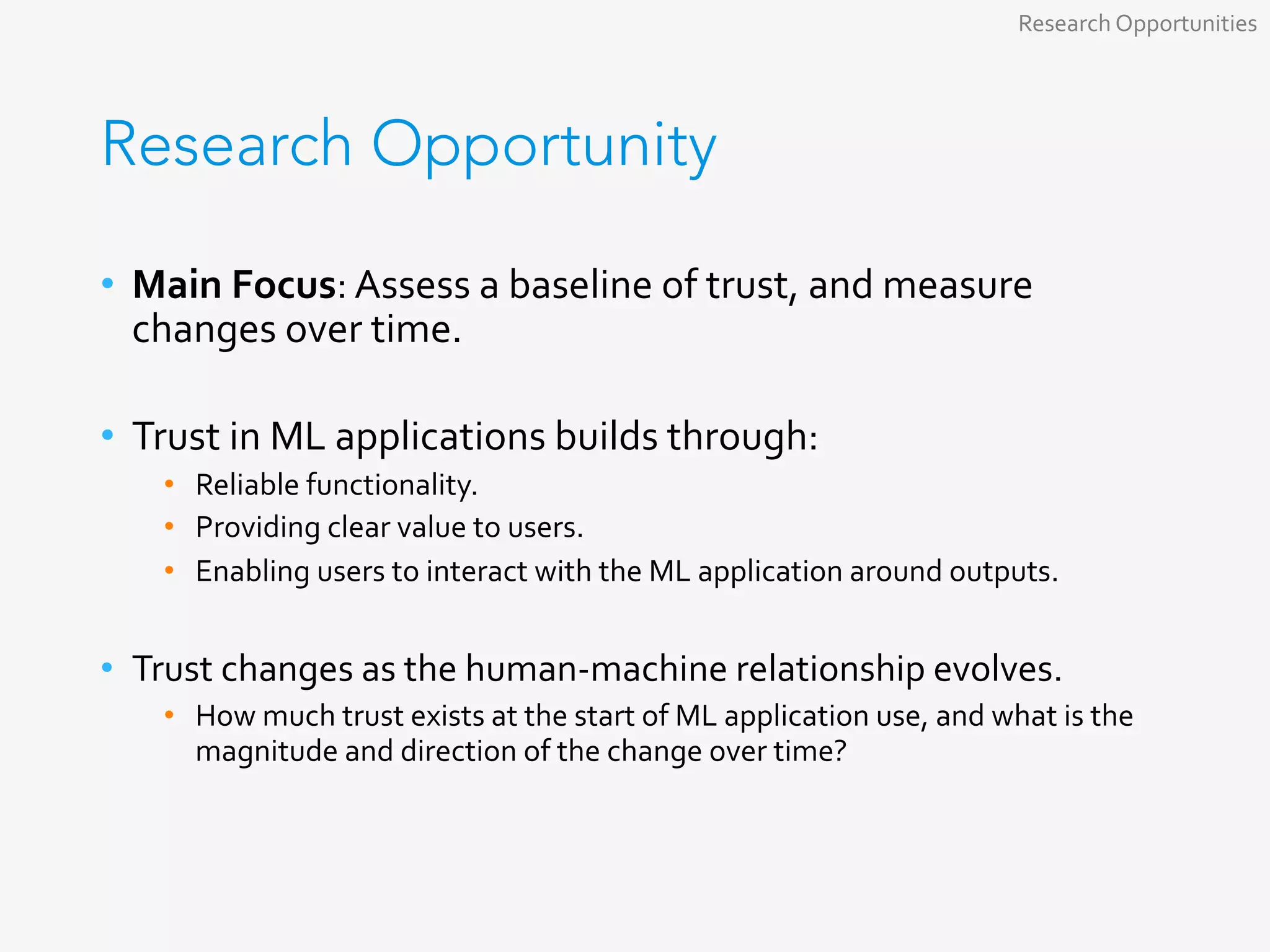

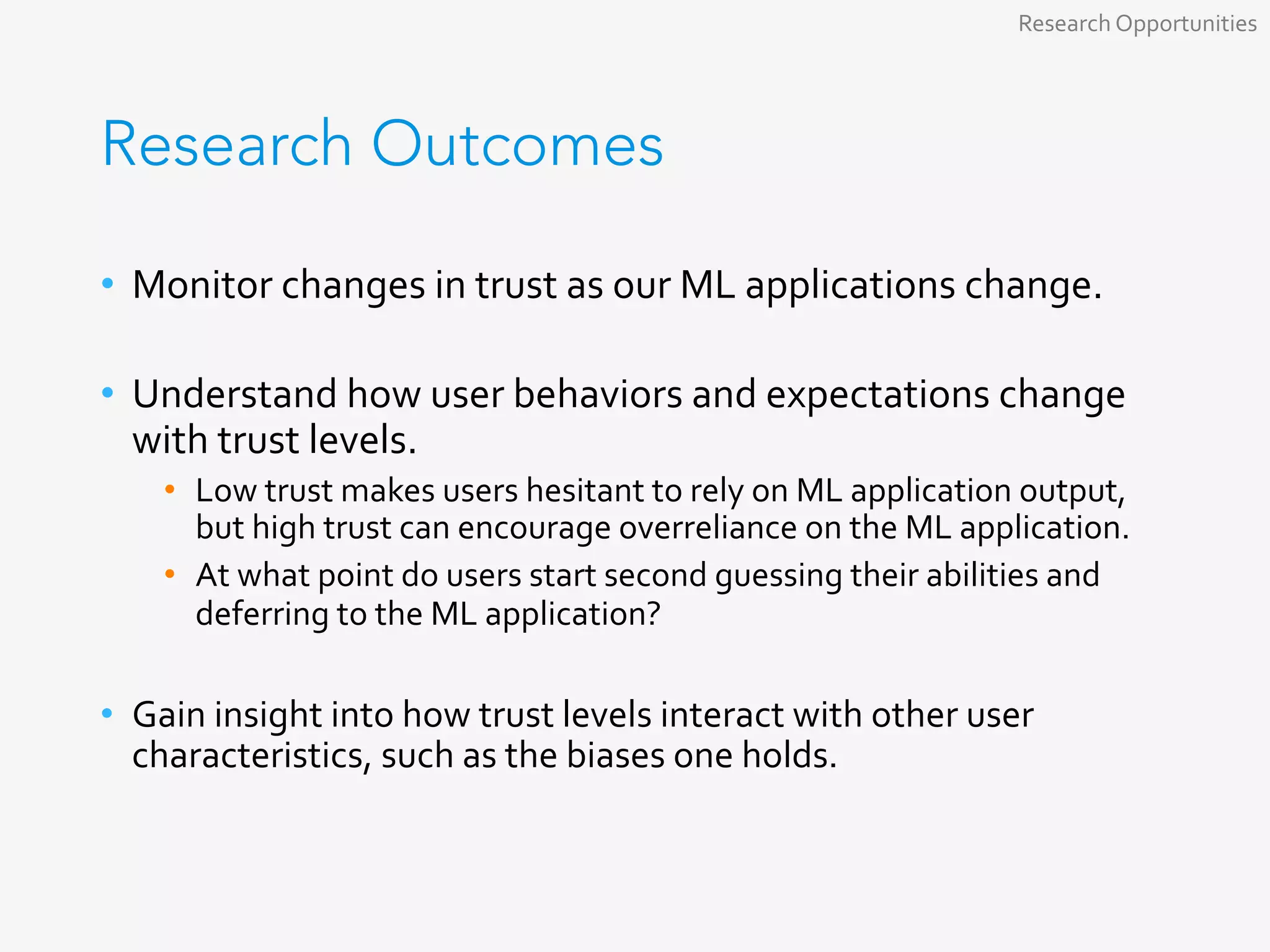

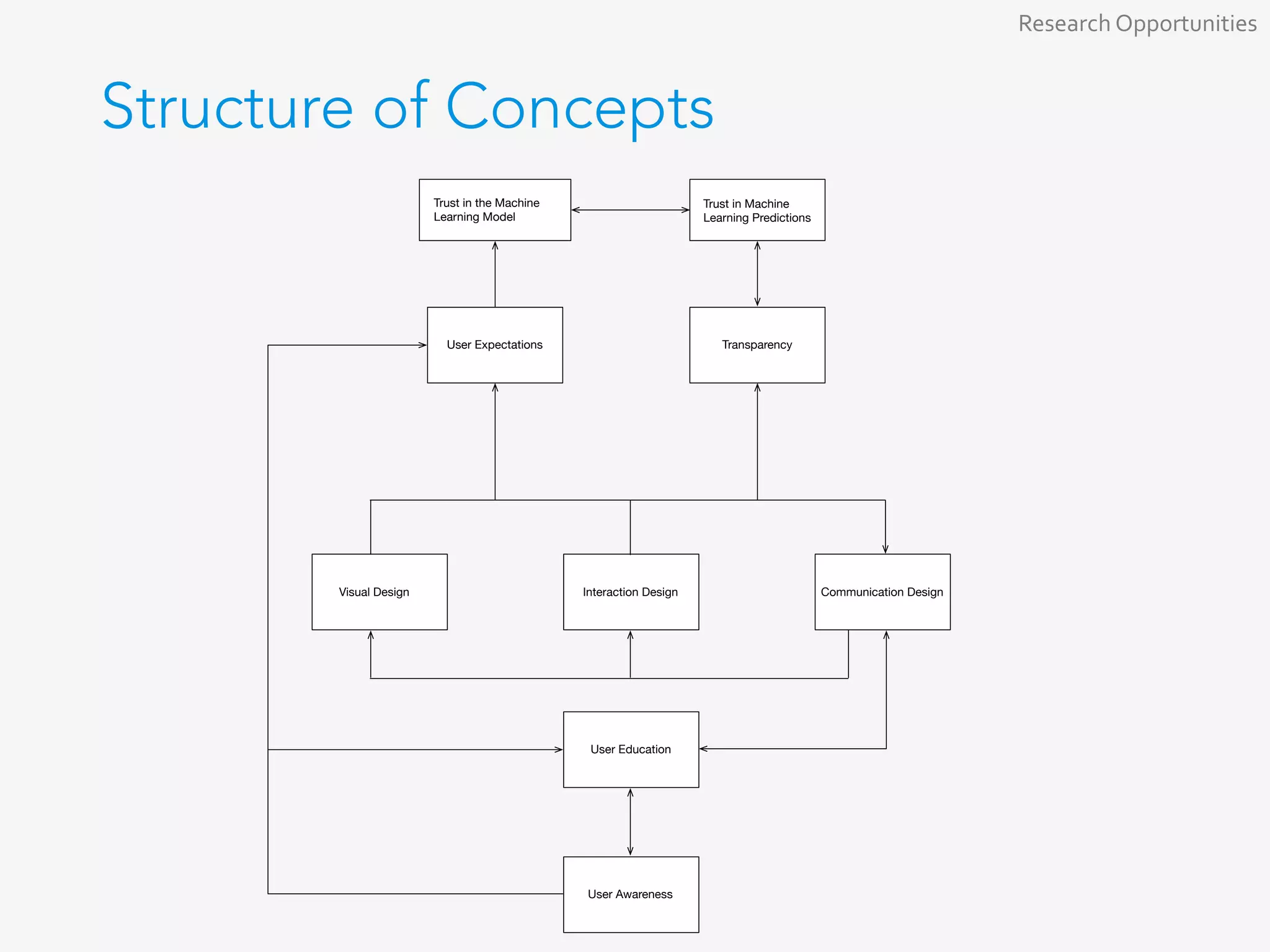

The document discusses user experience considerations for machine learning applications, outlining key insights from a literature review conducted by the EDP UX team. It highlights user issues such as the importance of technology literacy and cognitive biases, as well as design opportunities to improve transparency and trust in ML products. Additionally, the document emphasizes the need for effective communication design to enhance user understanding and acceptance of machine learning technologies.

![Trust for ML Applications [Continued]](https://image.slidesharecdn.com/dyy8mp1sqssqfdbow1fg-signature-65c1dda3bc5ef72532df877ce5b6999b7db6b1f8391319a06ee65fd6b5dbae3a-poli-180901150702/75/User-Experience-of-Machine-Learning-37-2048.jpg)

Trust for ML Applications [Continued]

• What does trusting ML predictions mean?

• Users acting on predictions in a recommended way.

• Instilling trust in ML predictions:

• Transparent communications around the prediction made.

• Create opportunities for users to verify prediction accuracy, and offer feedback when possible.

• ML models precede ML predictions, so if users do not trust the model, trust in the predictions may suffer.

Research Opportunities