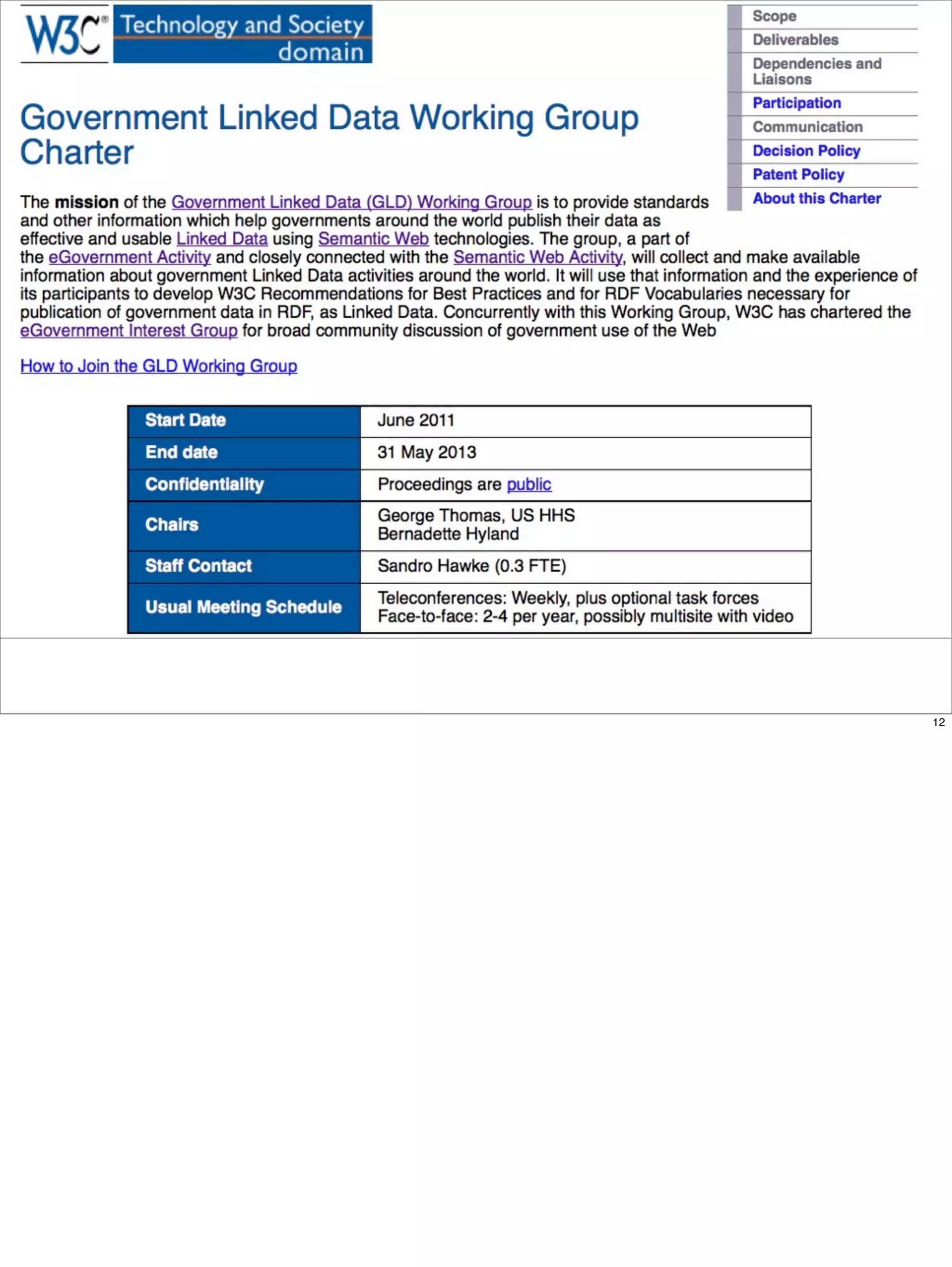

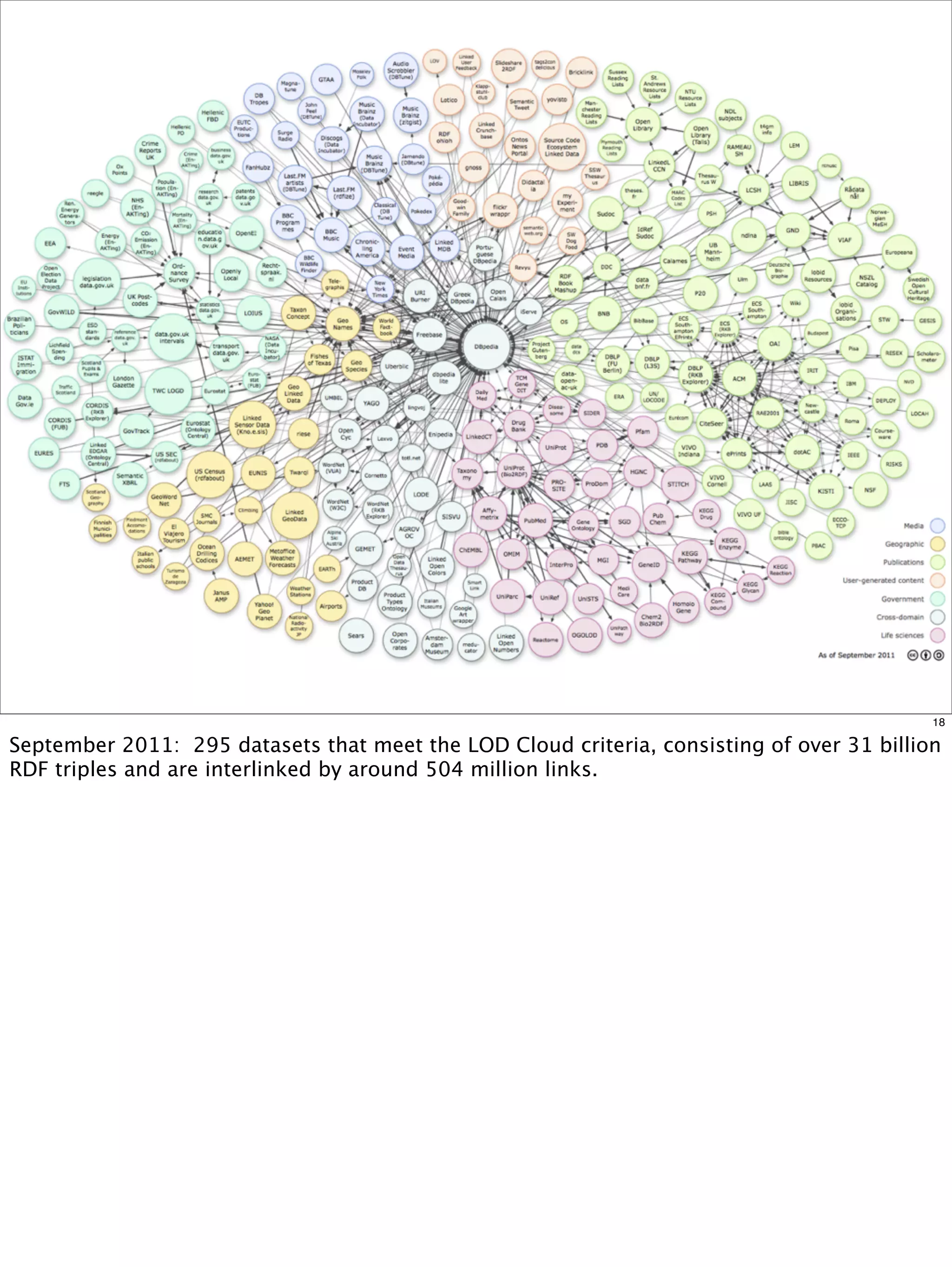

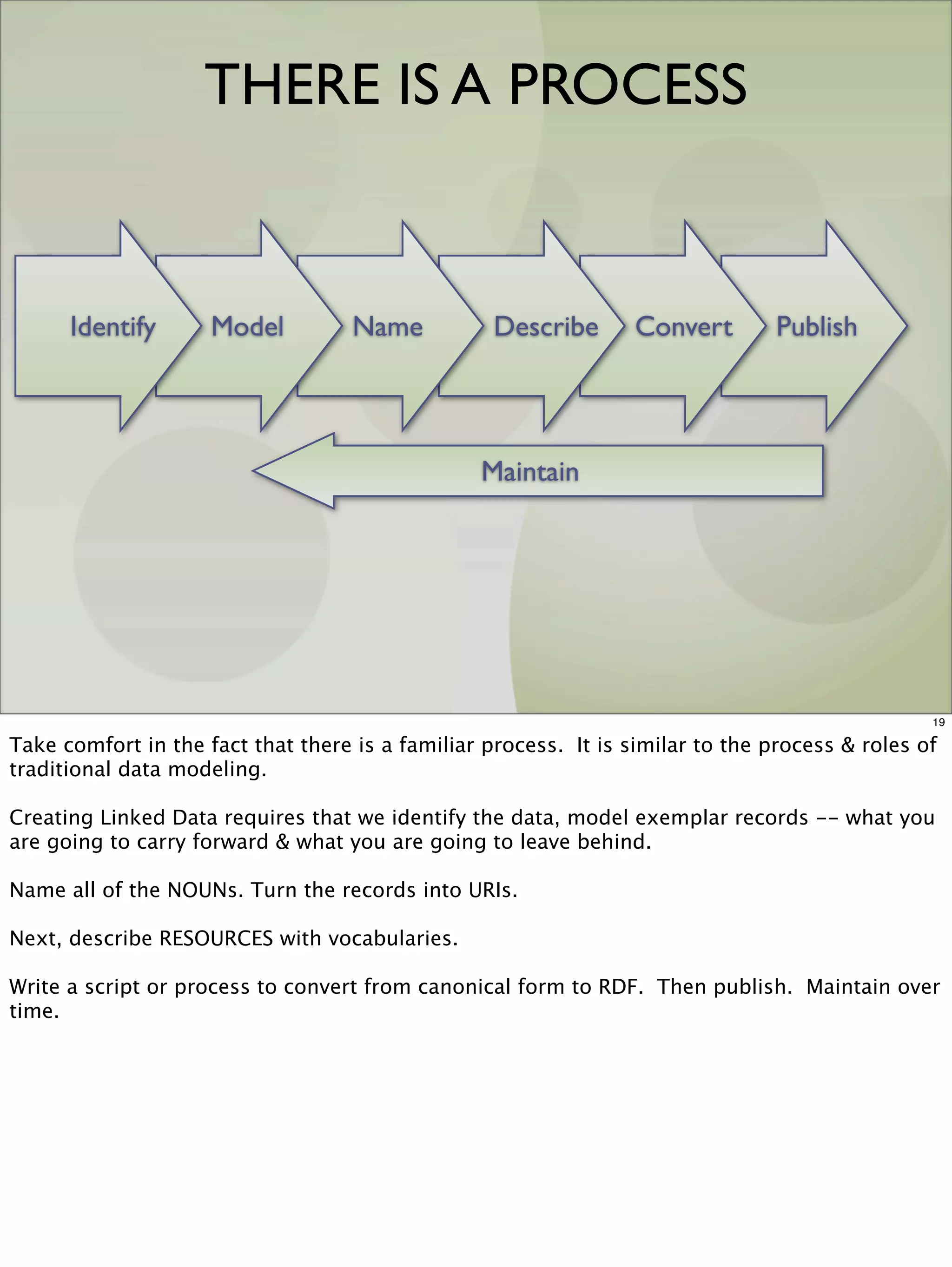

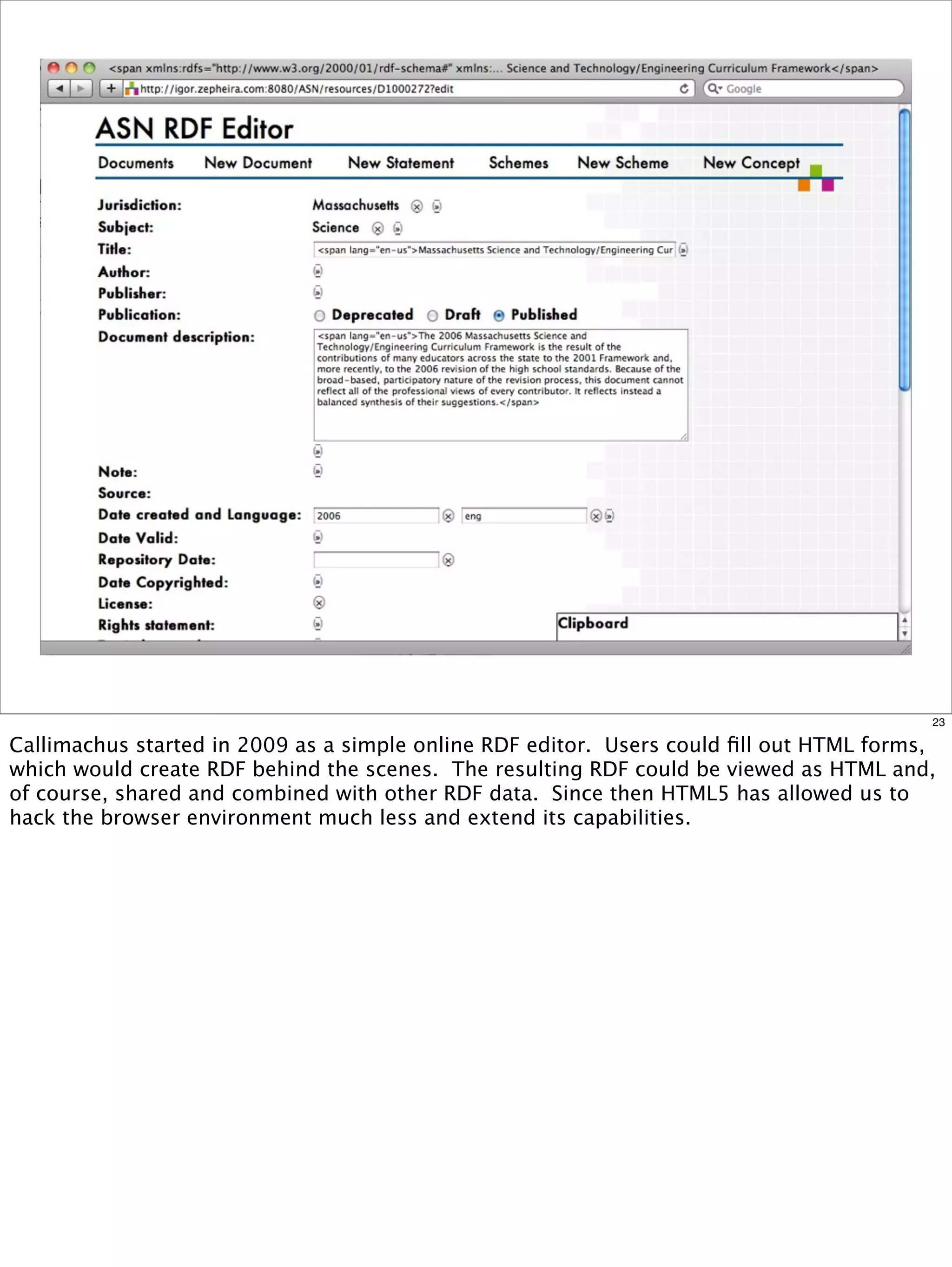

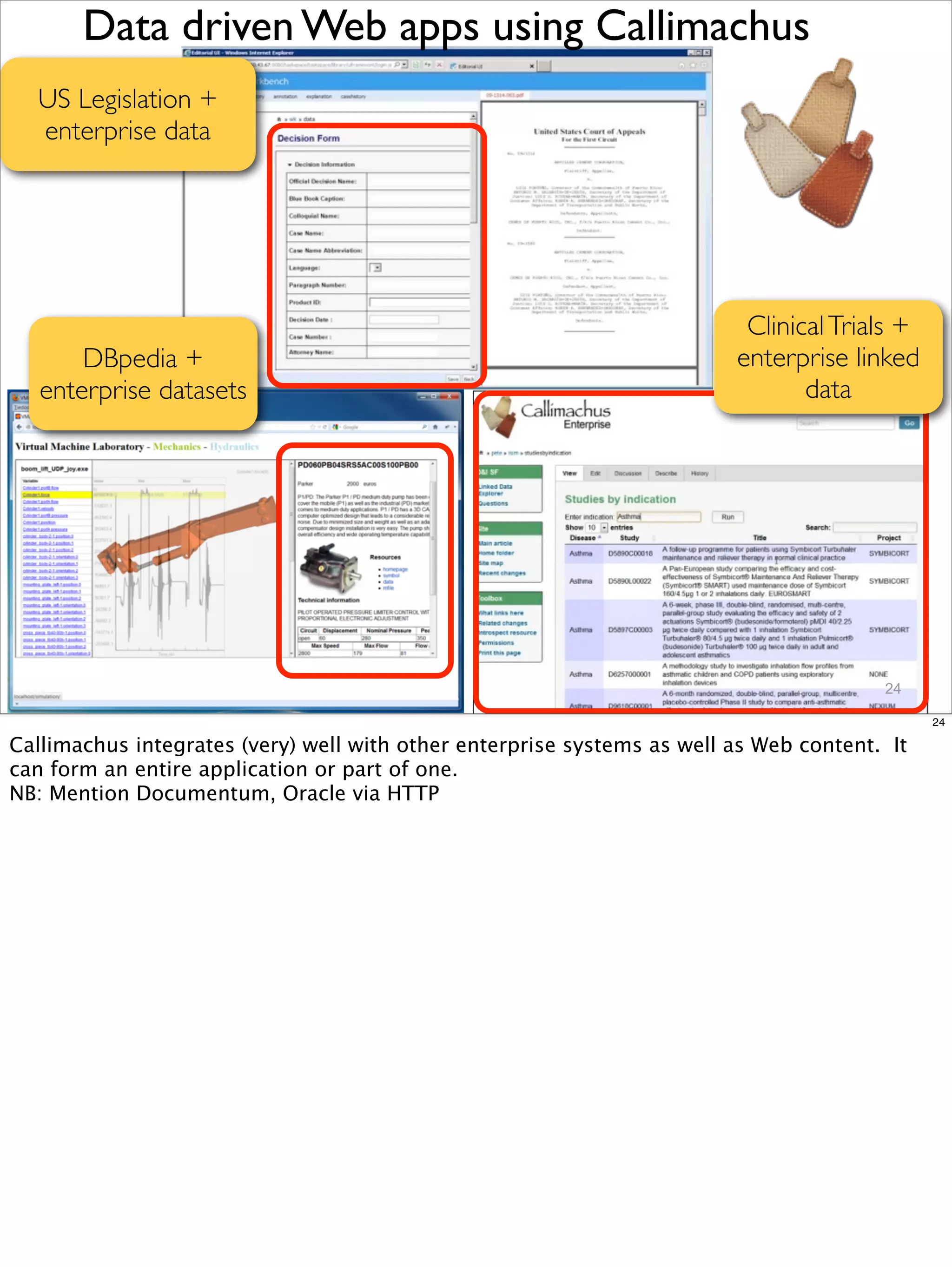

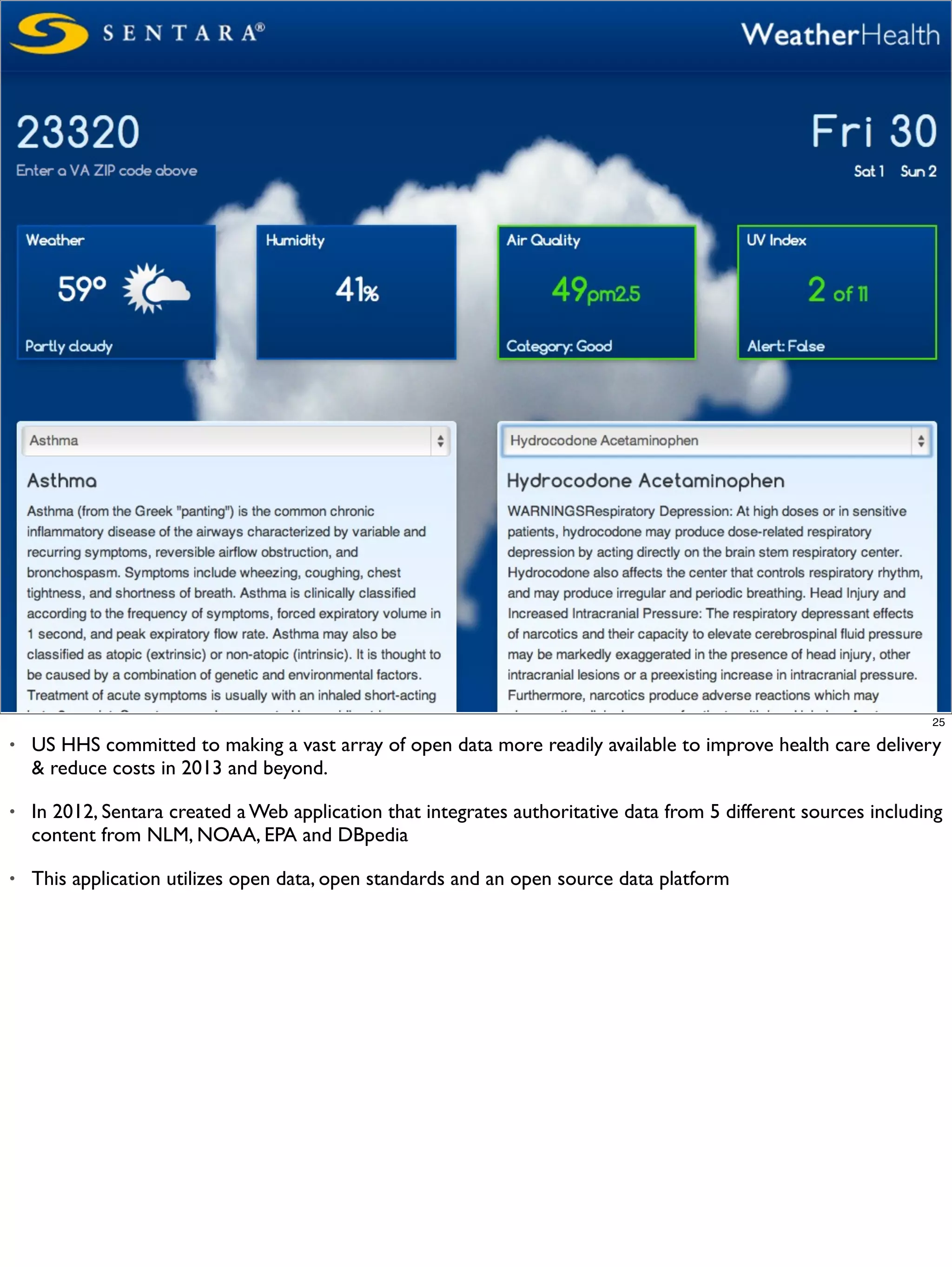

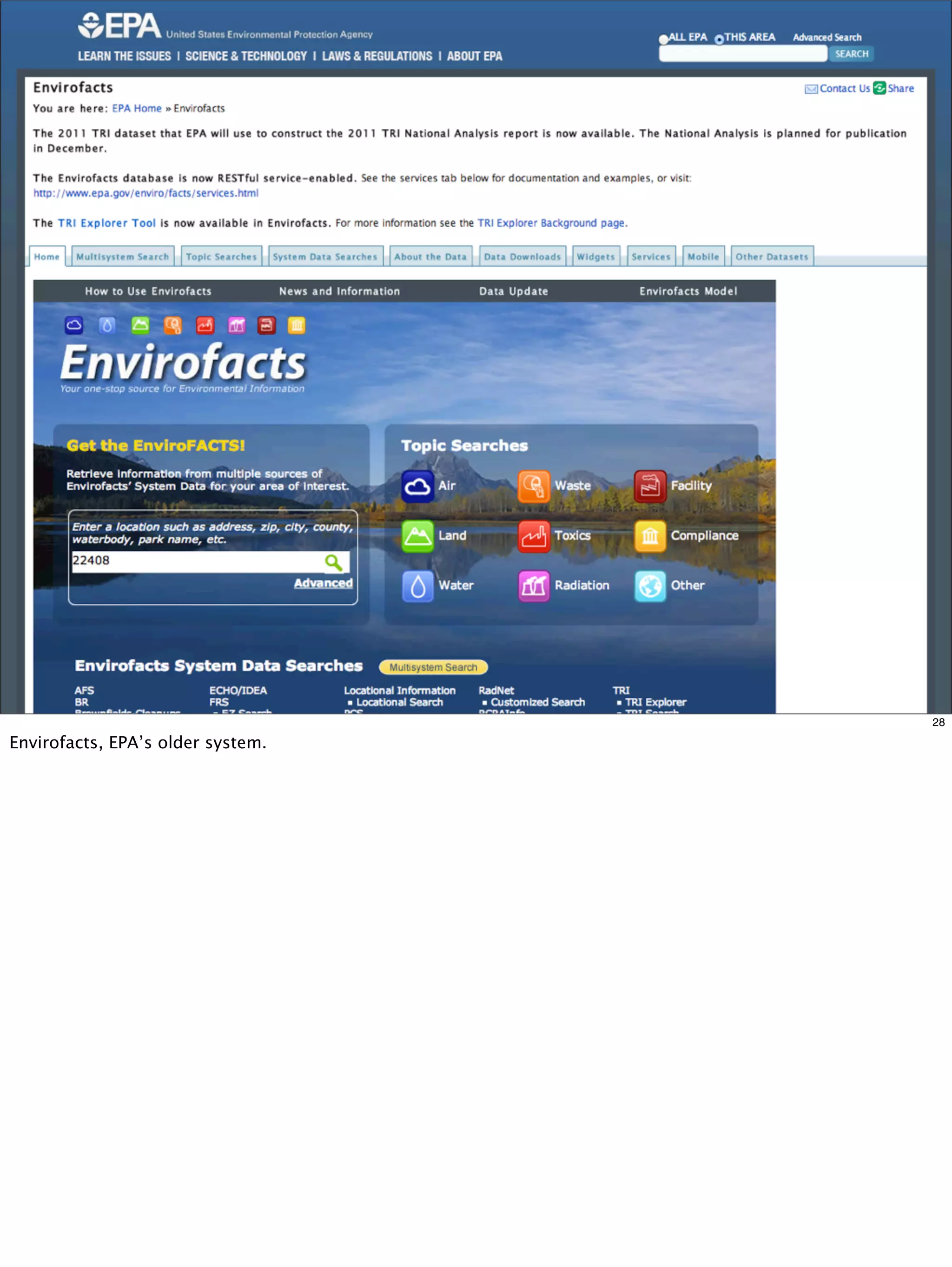

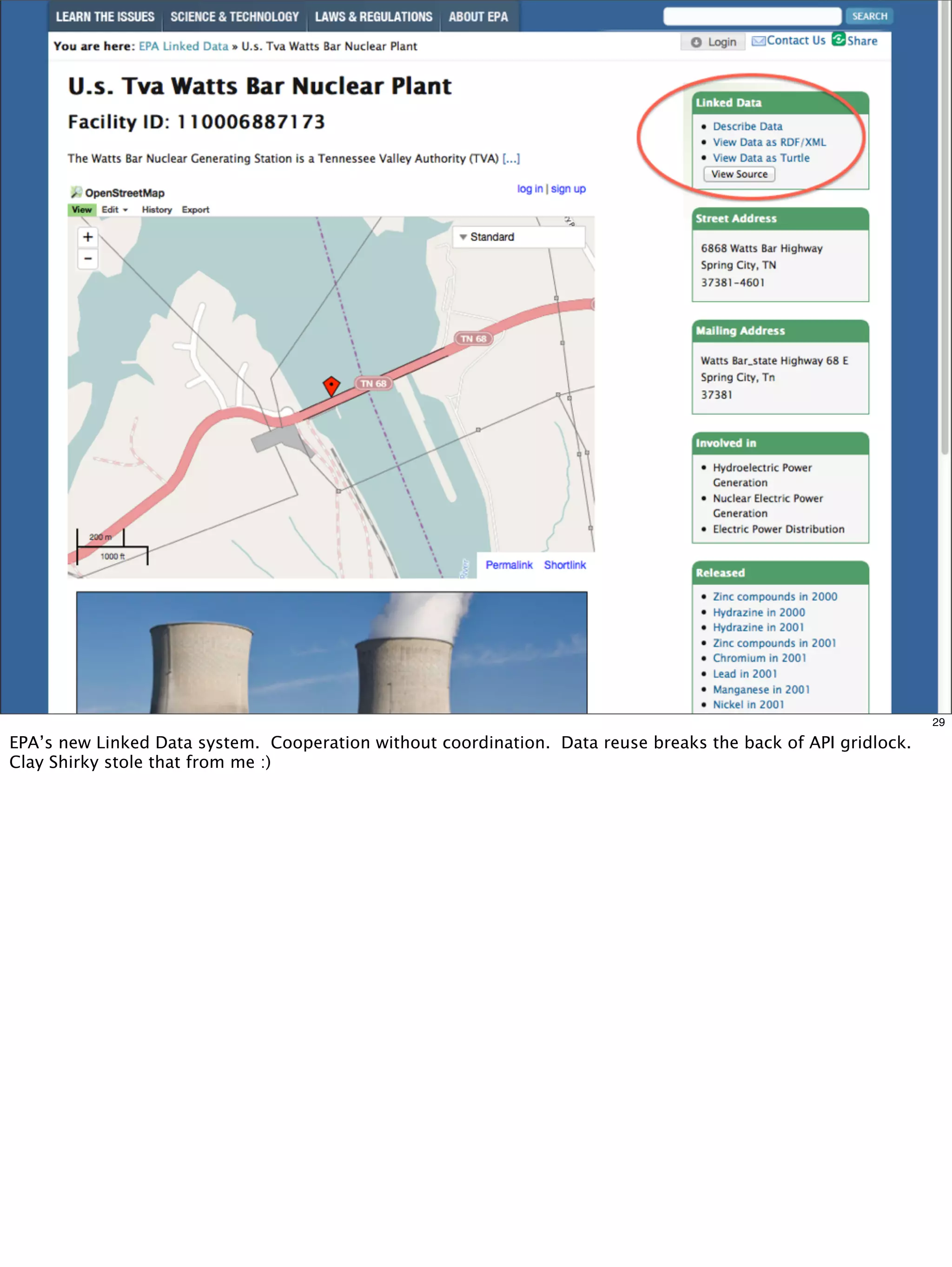

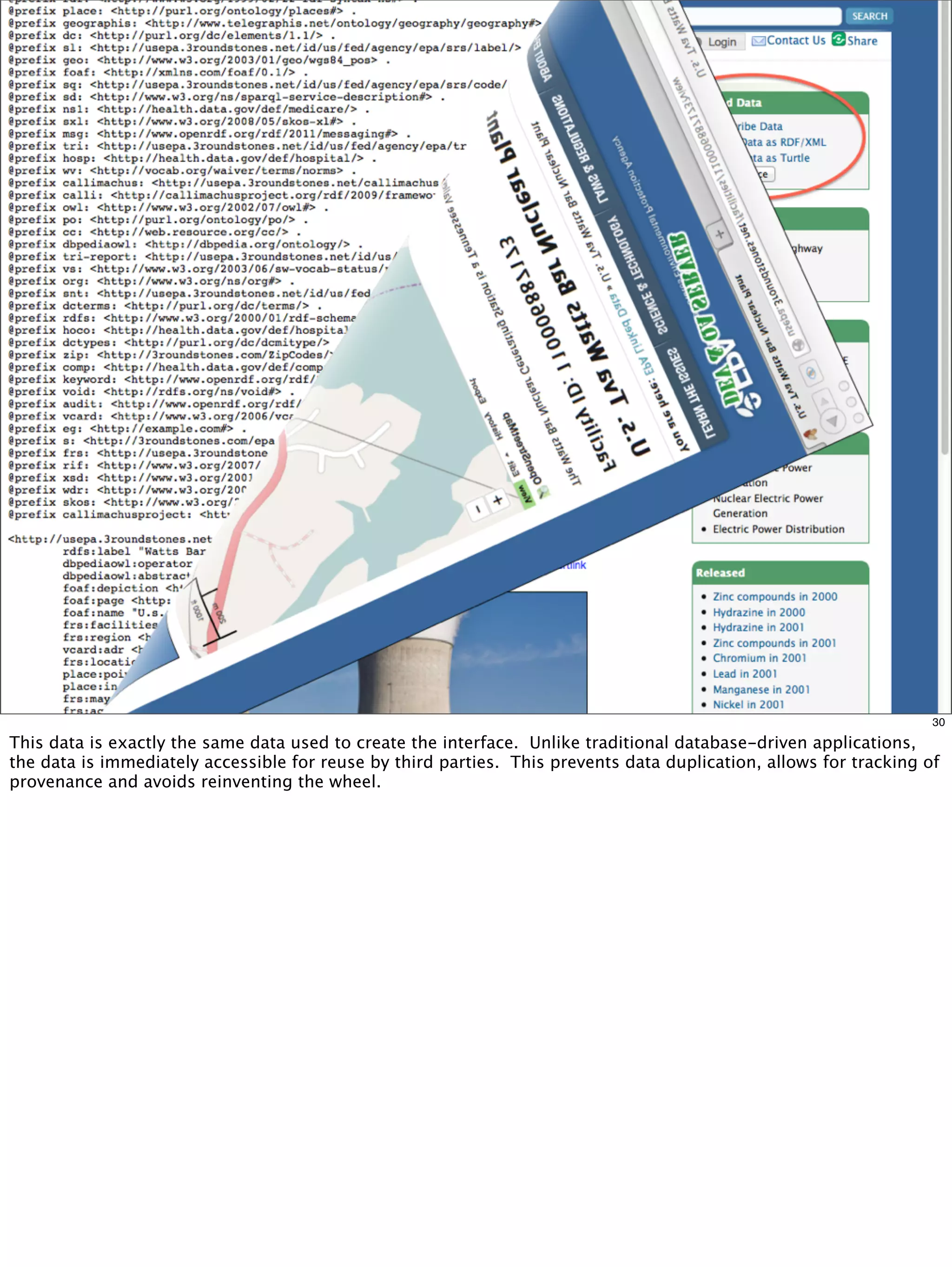

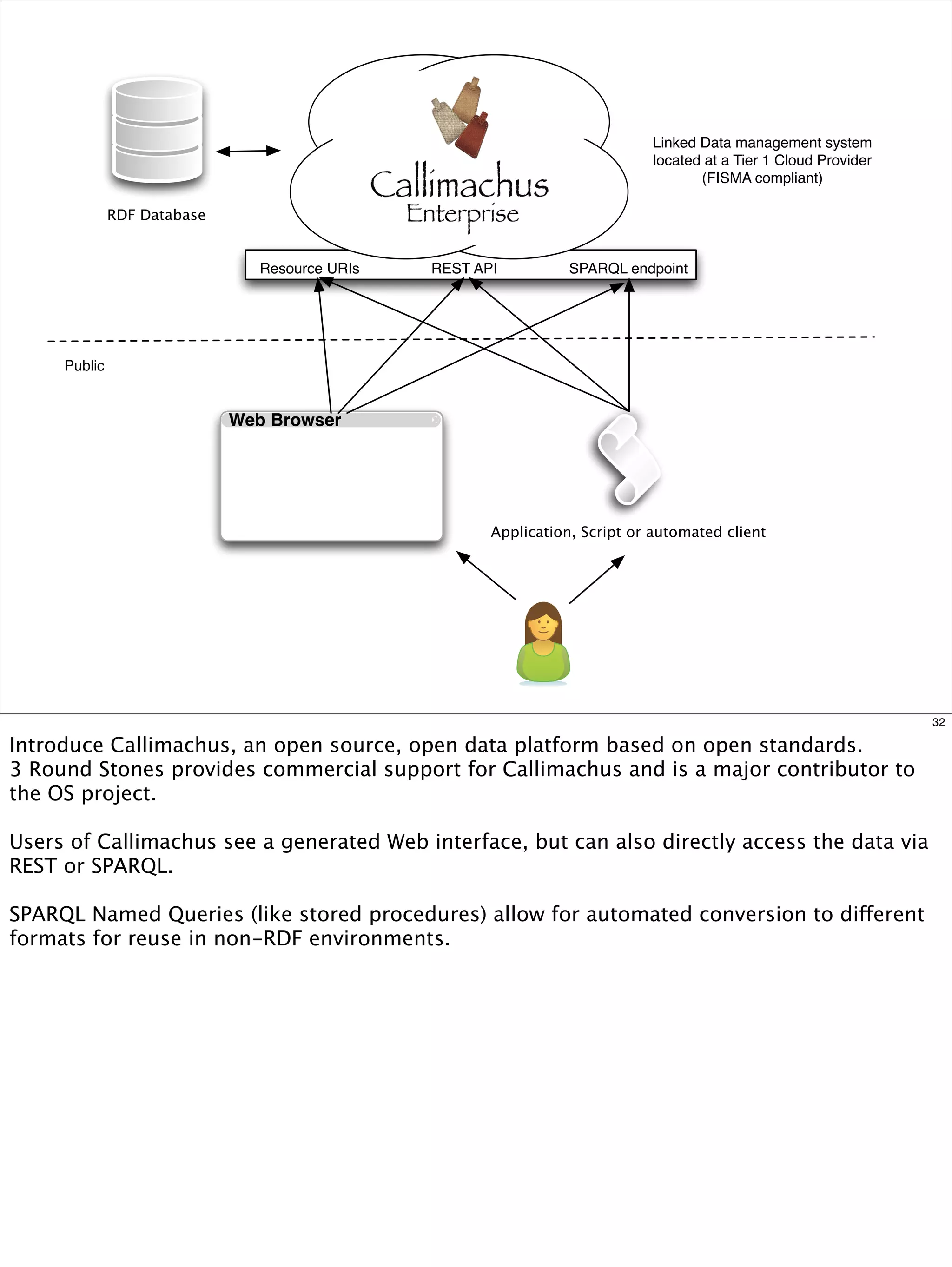

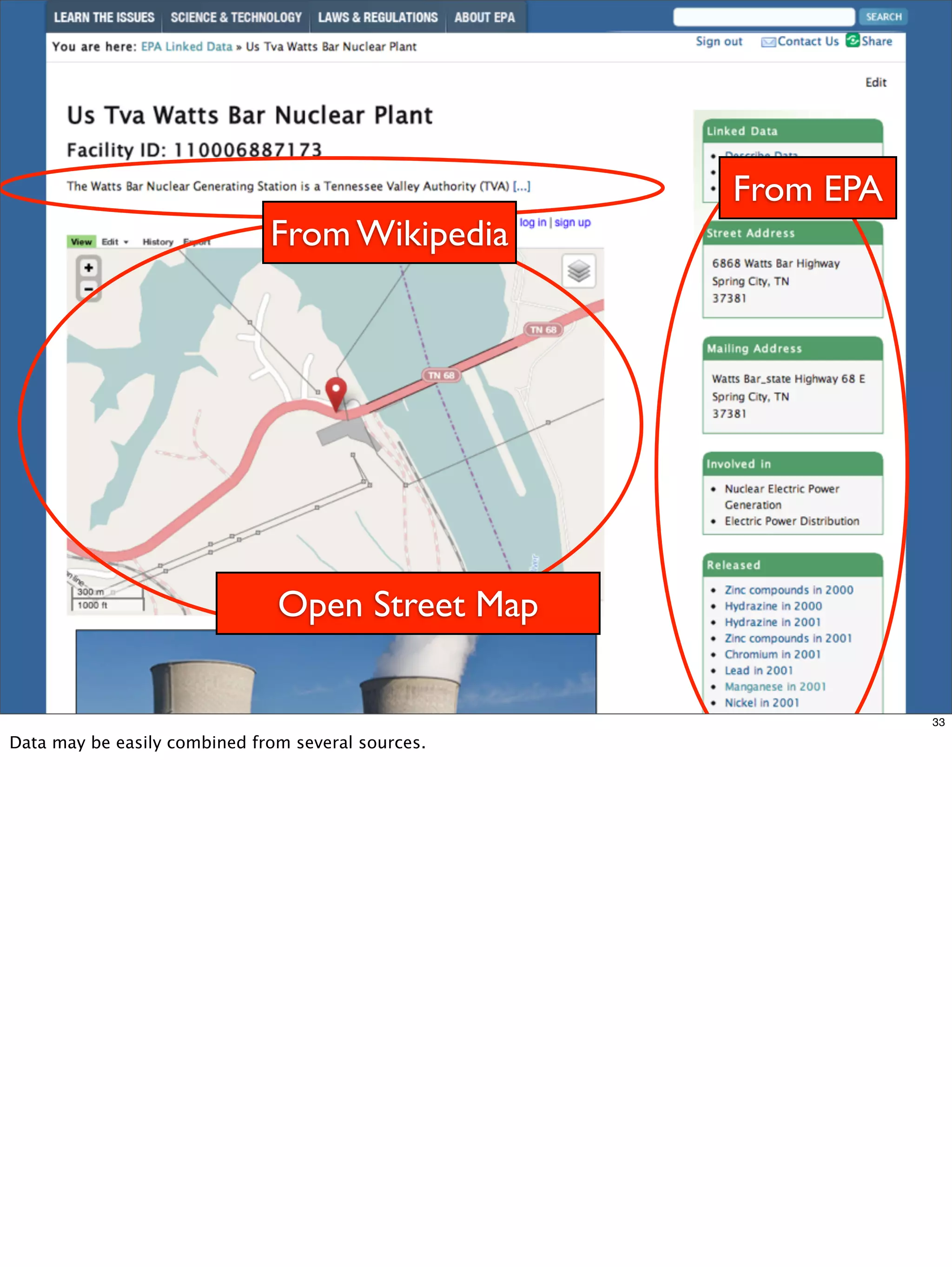

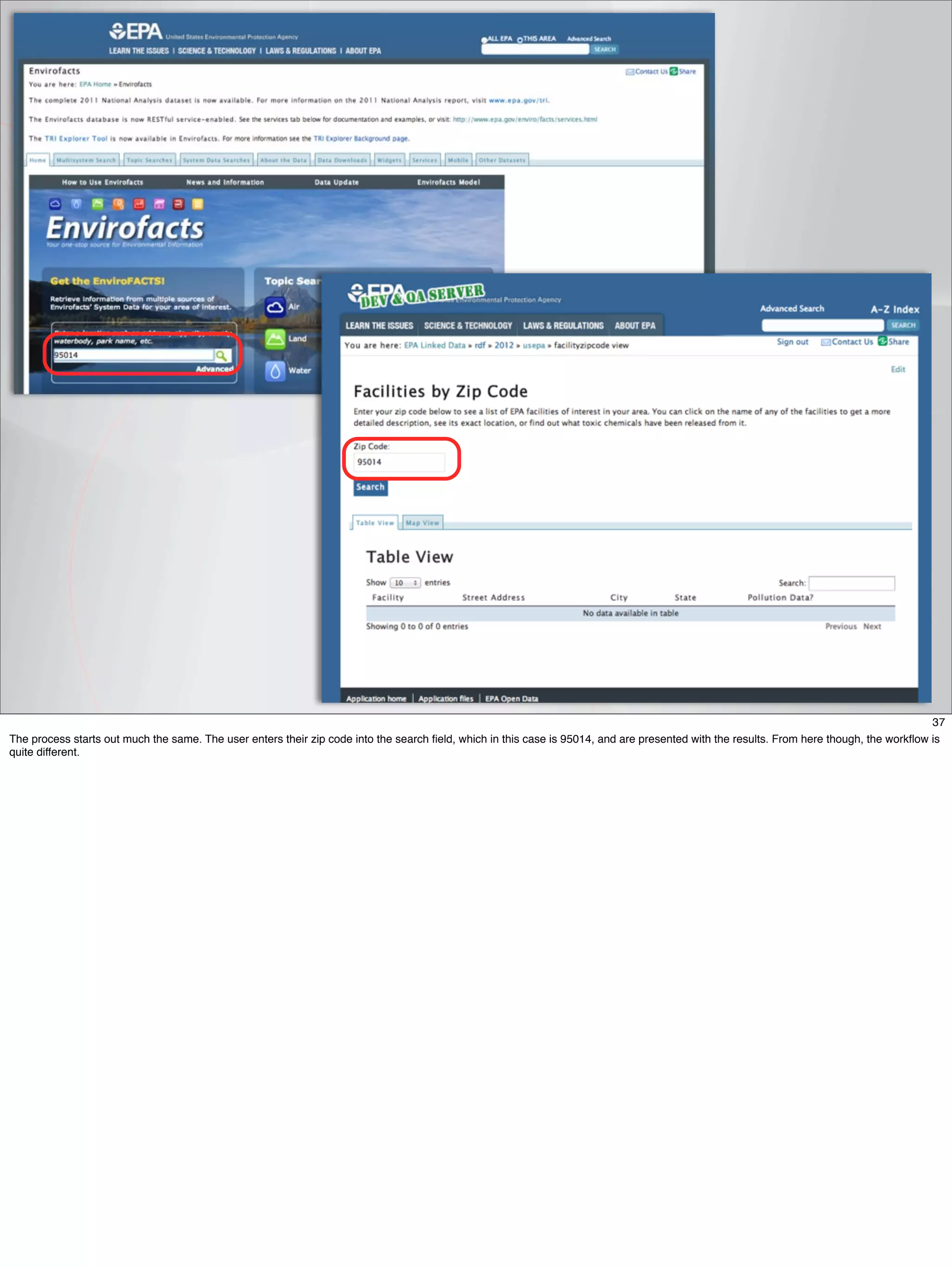

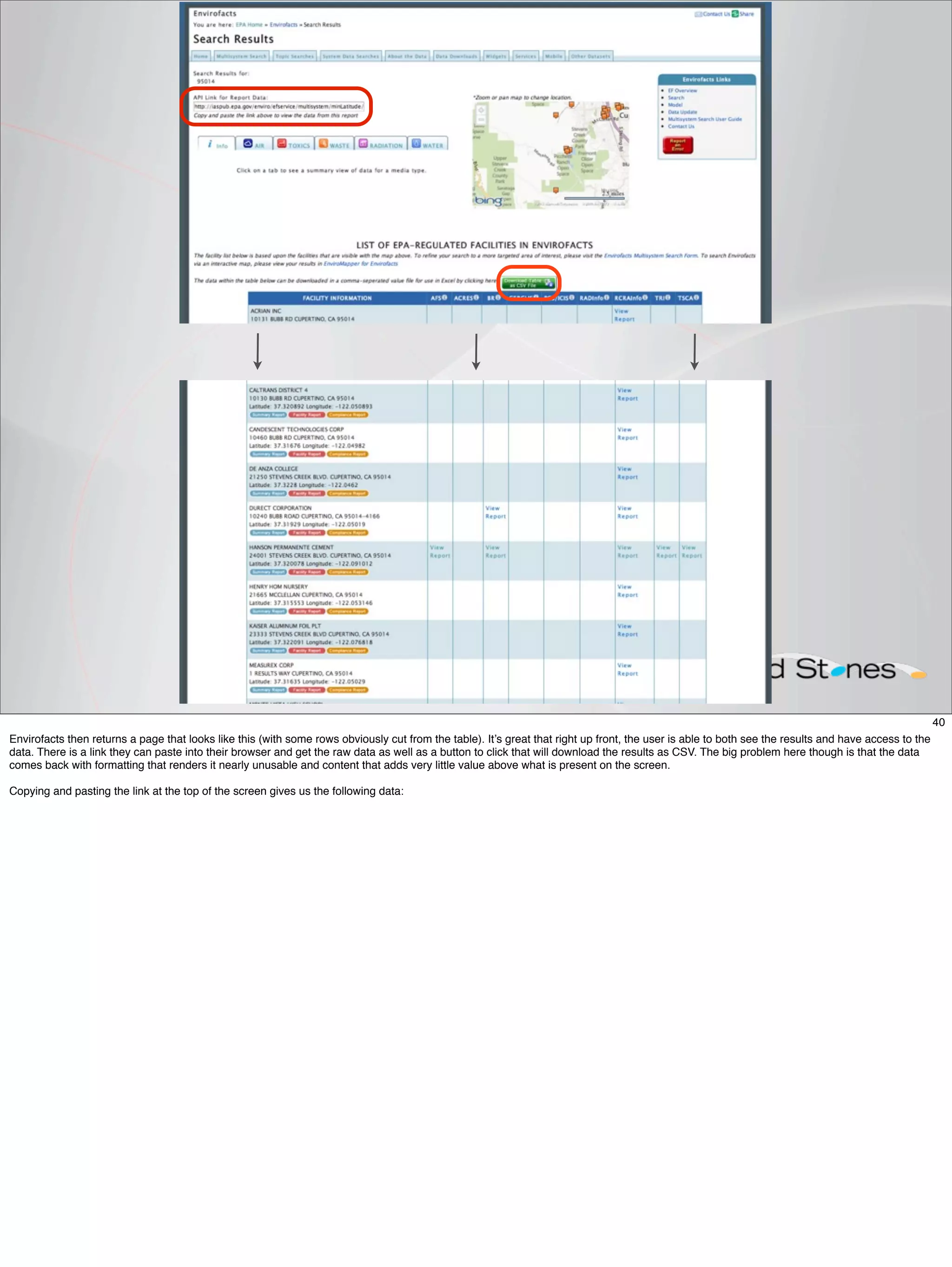

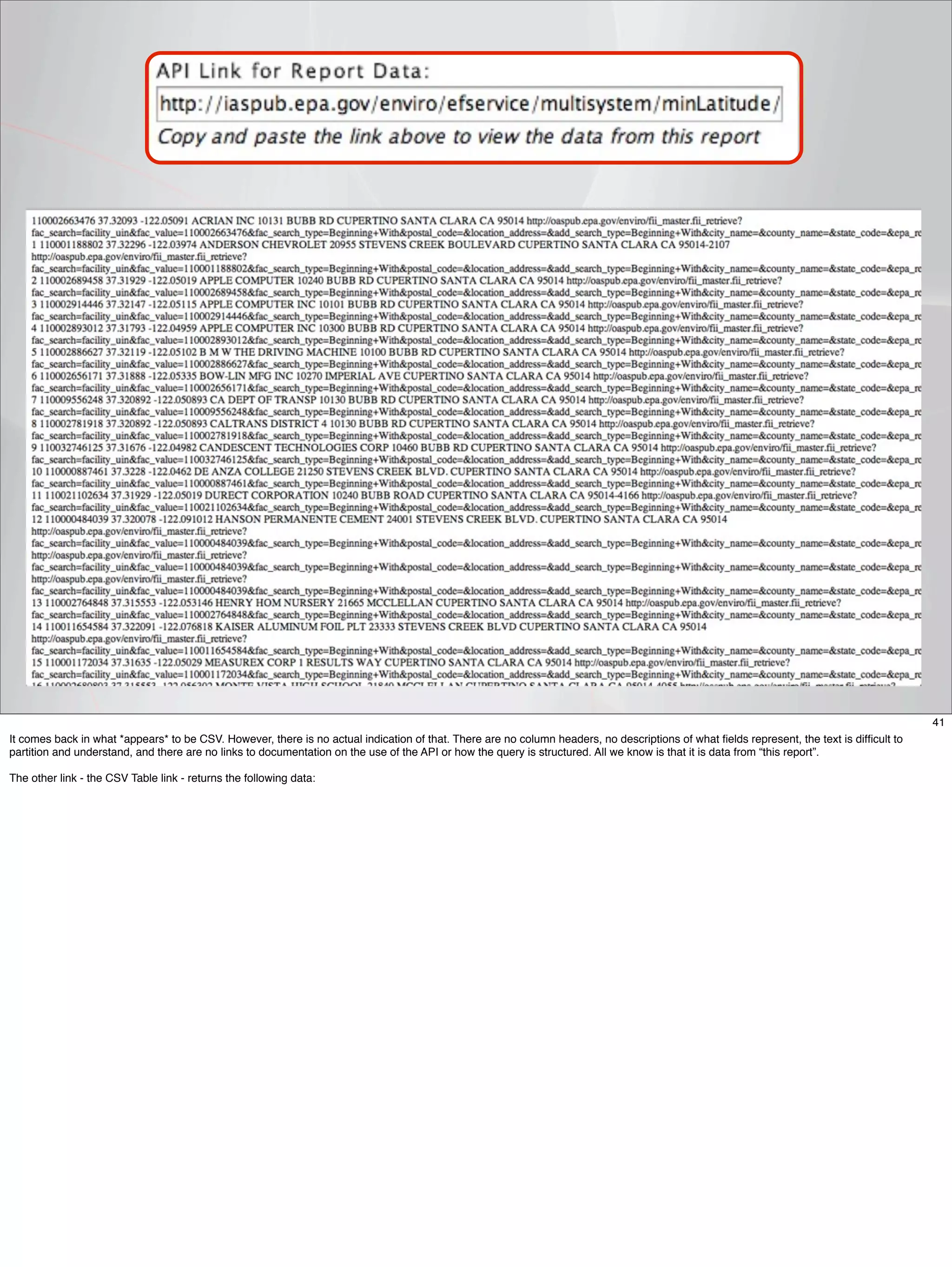

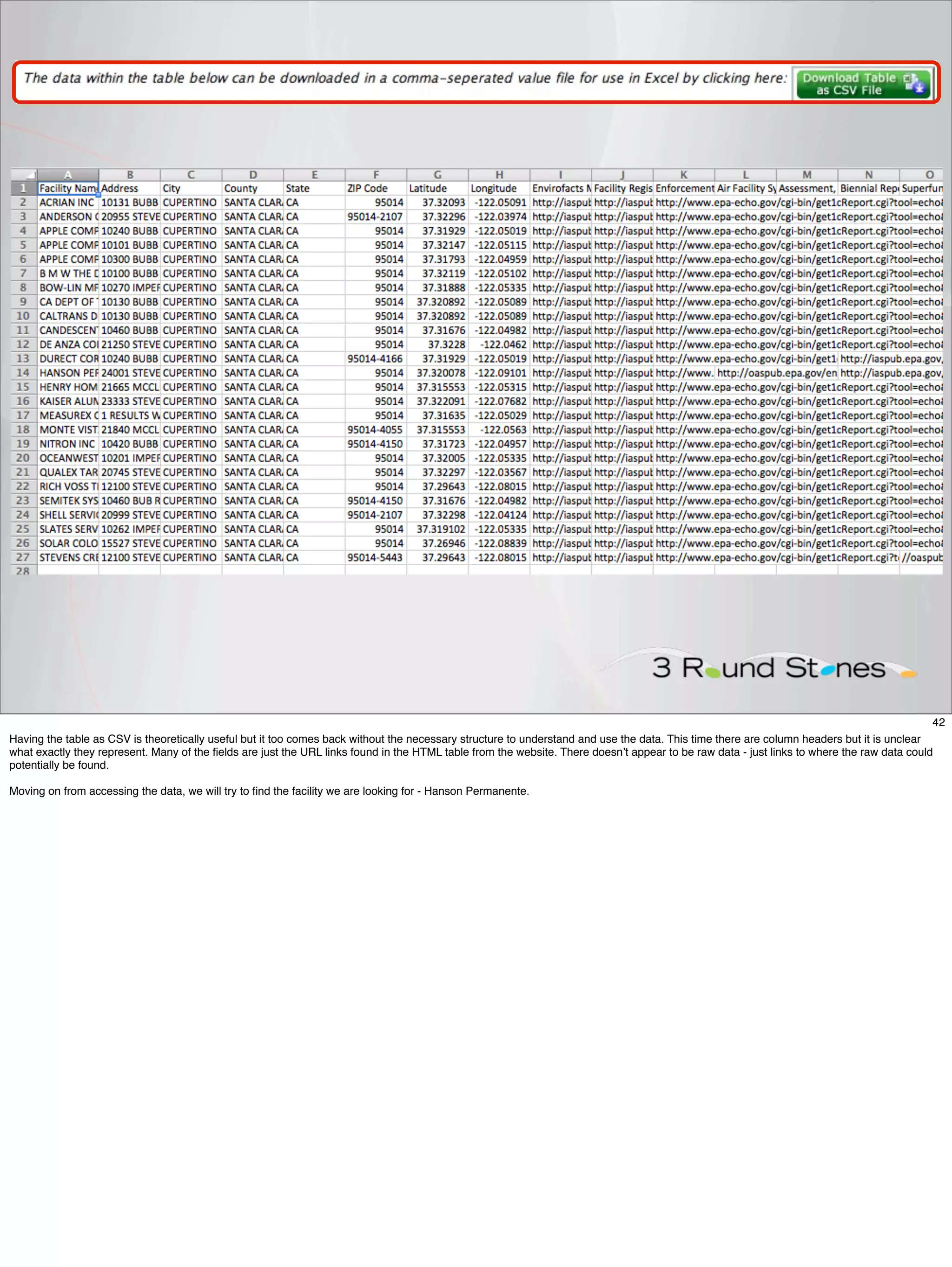

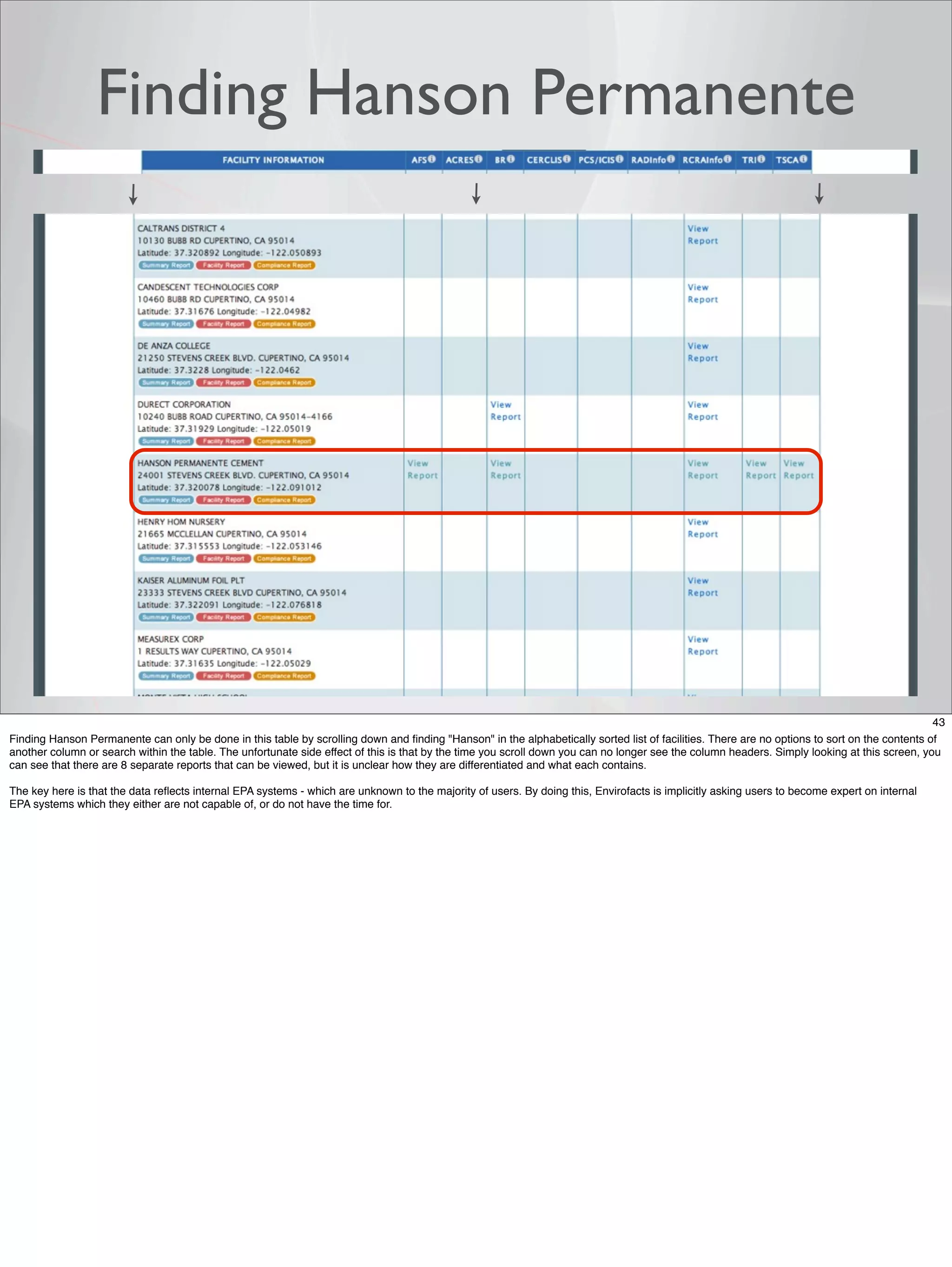

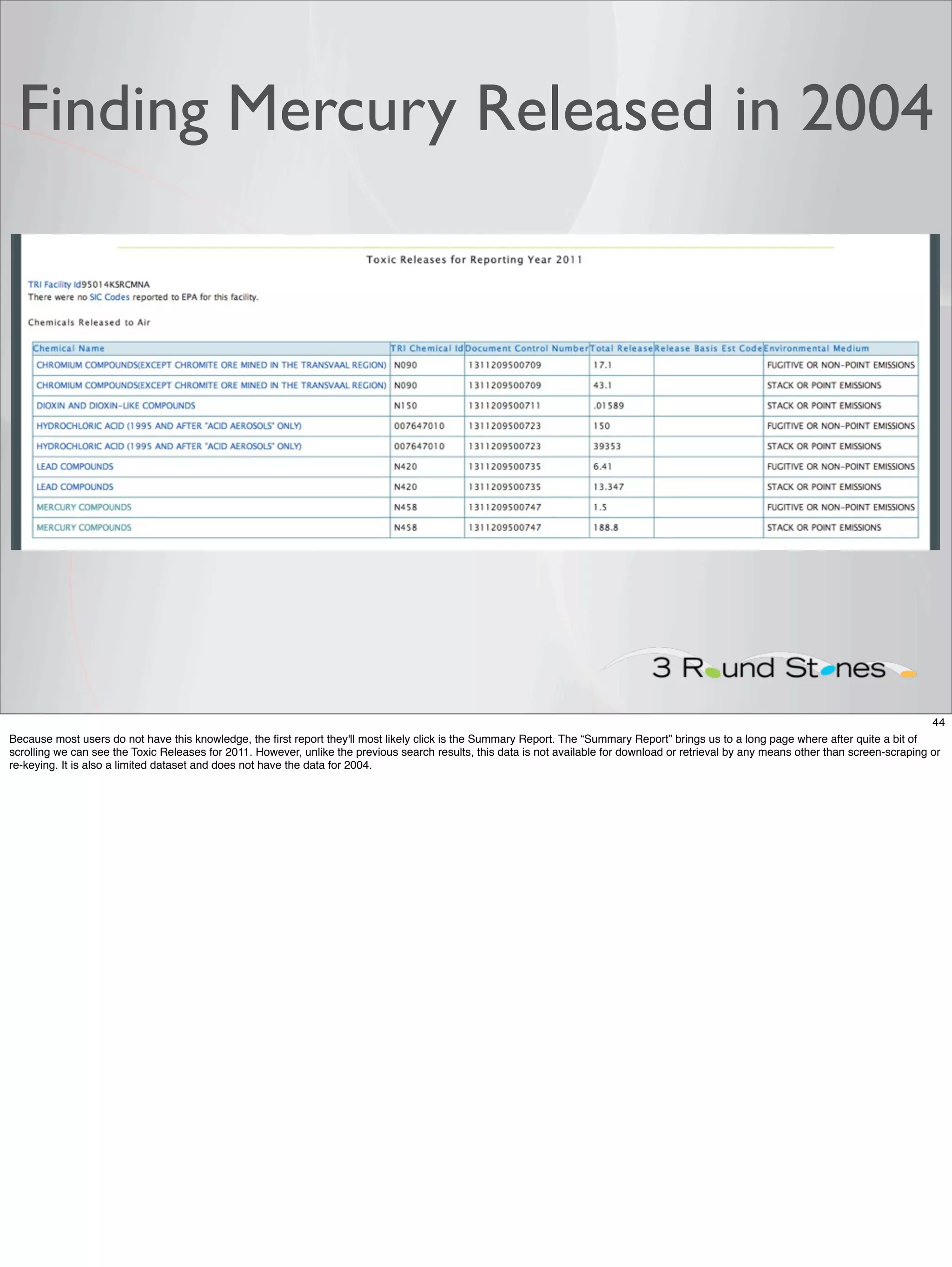

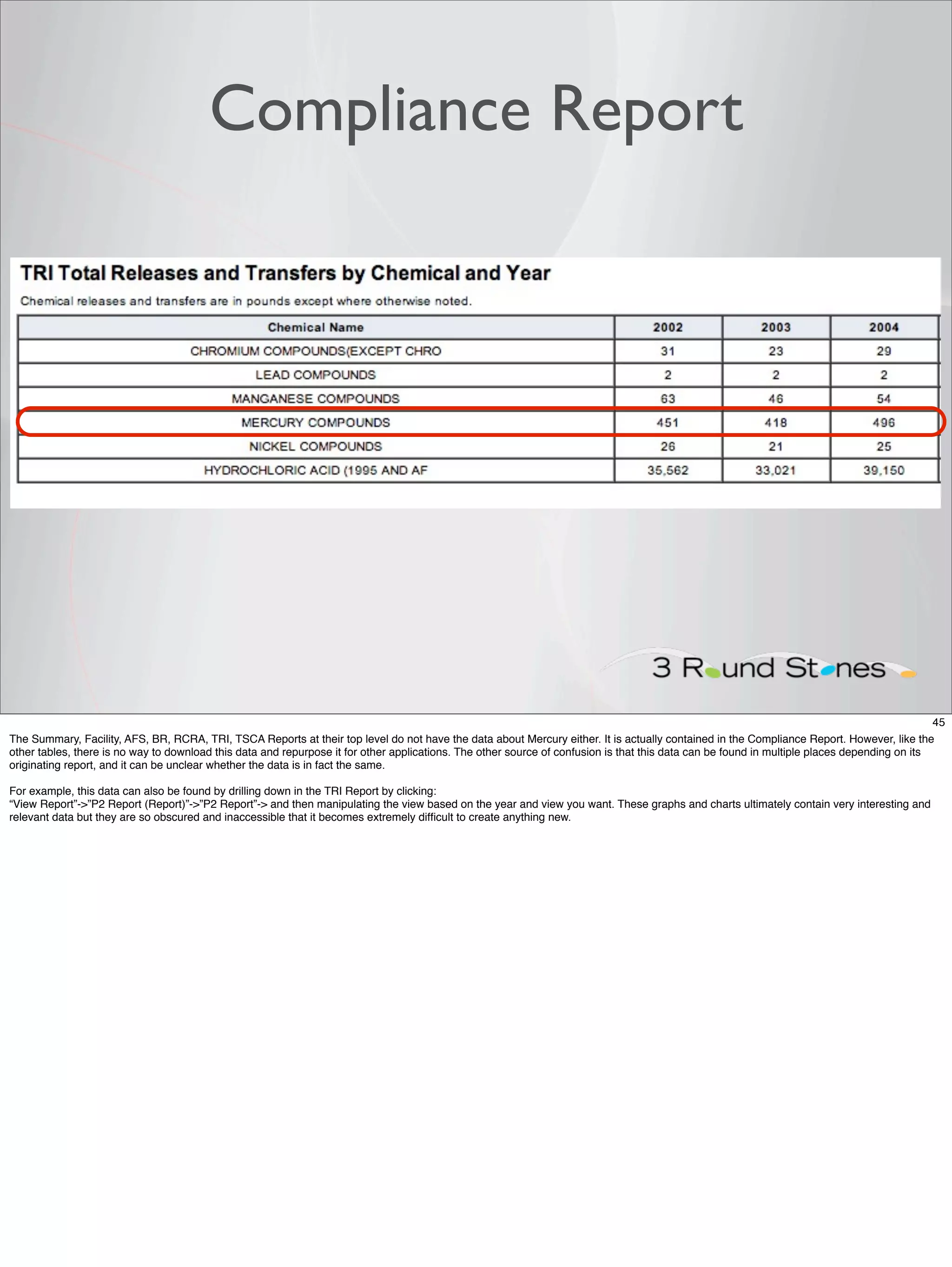

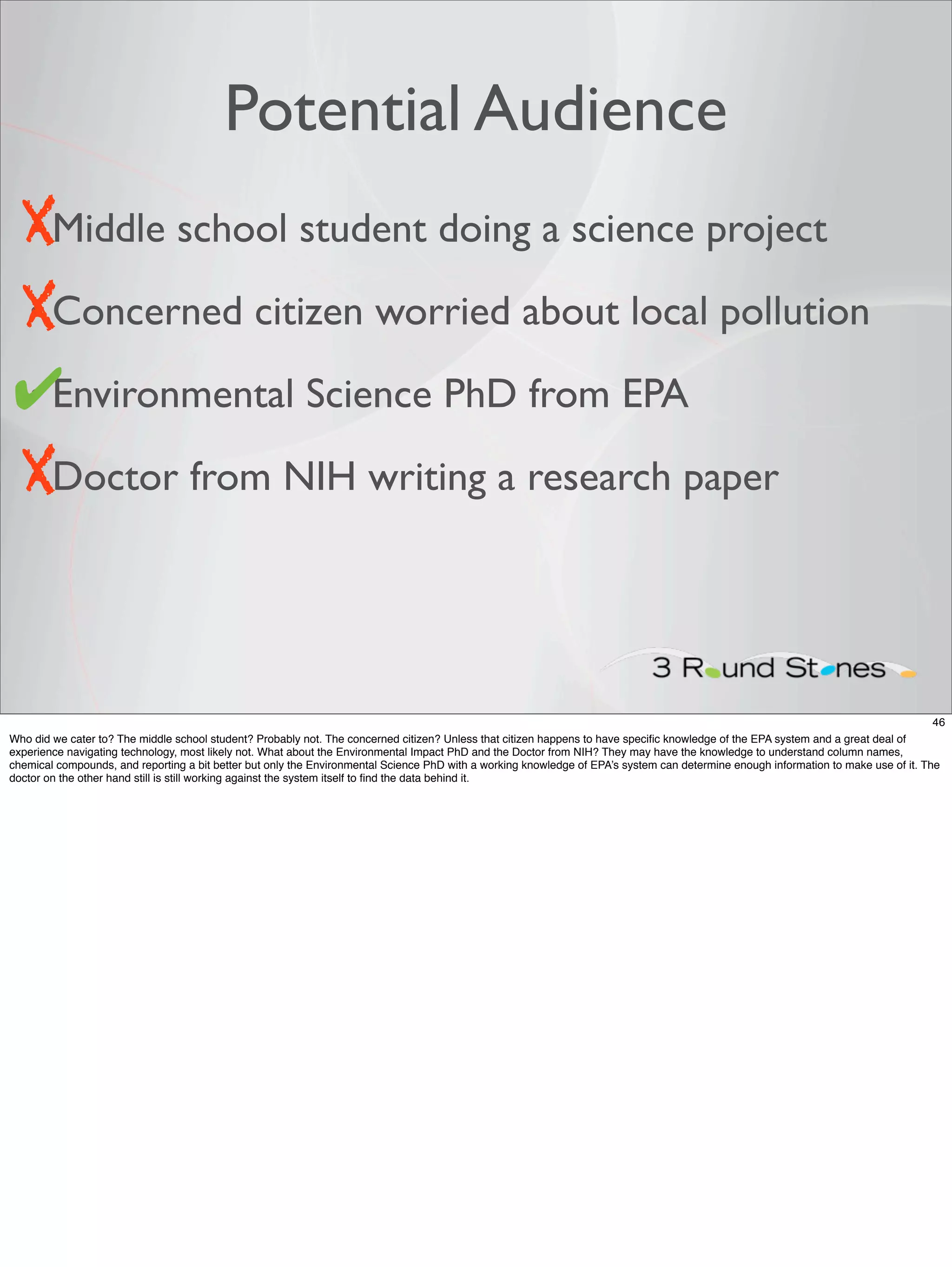

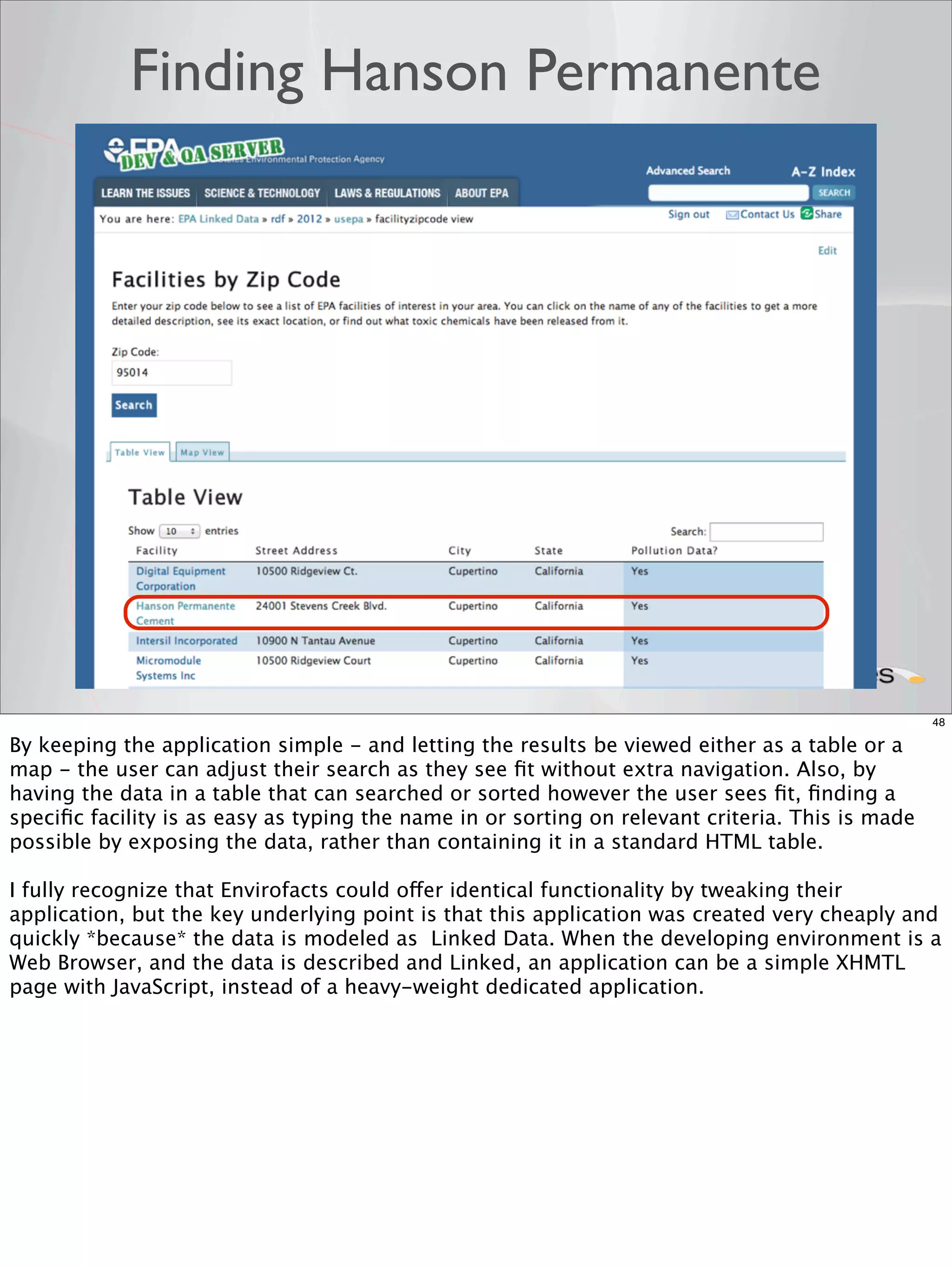

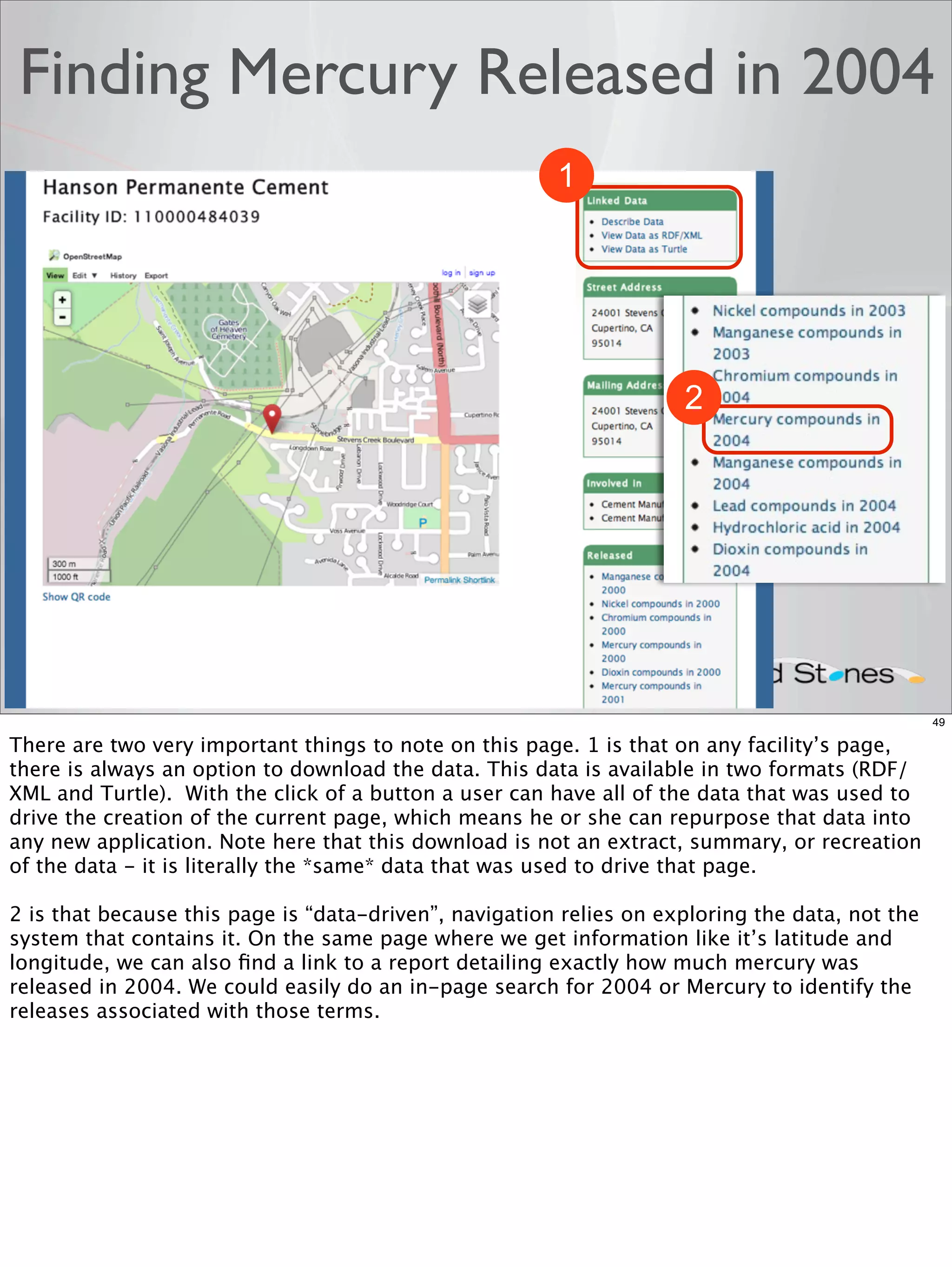

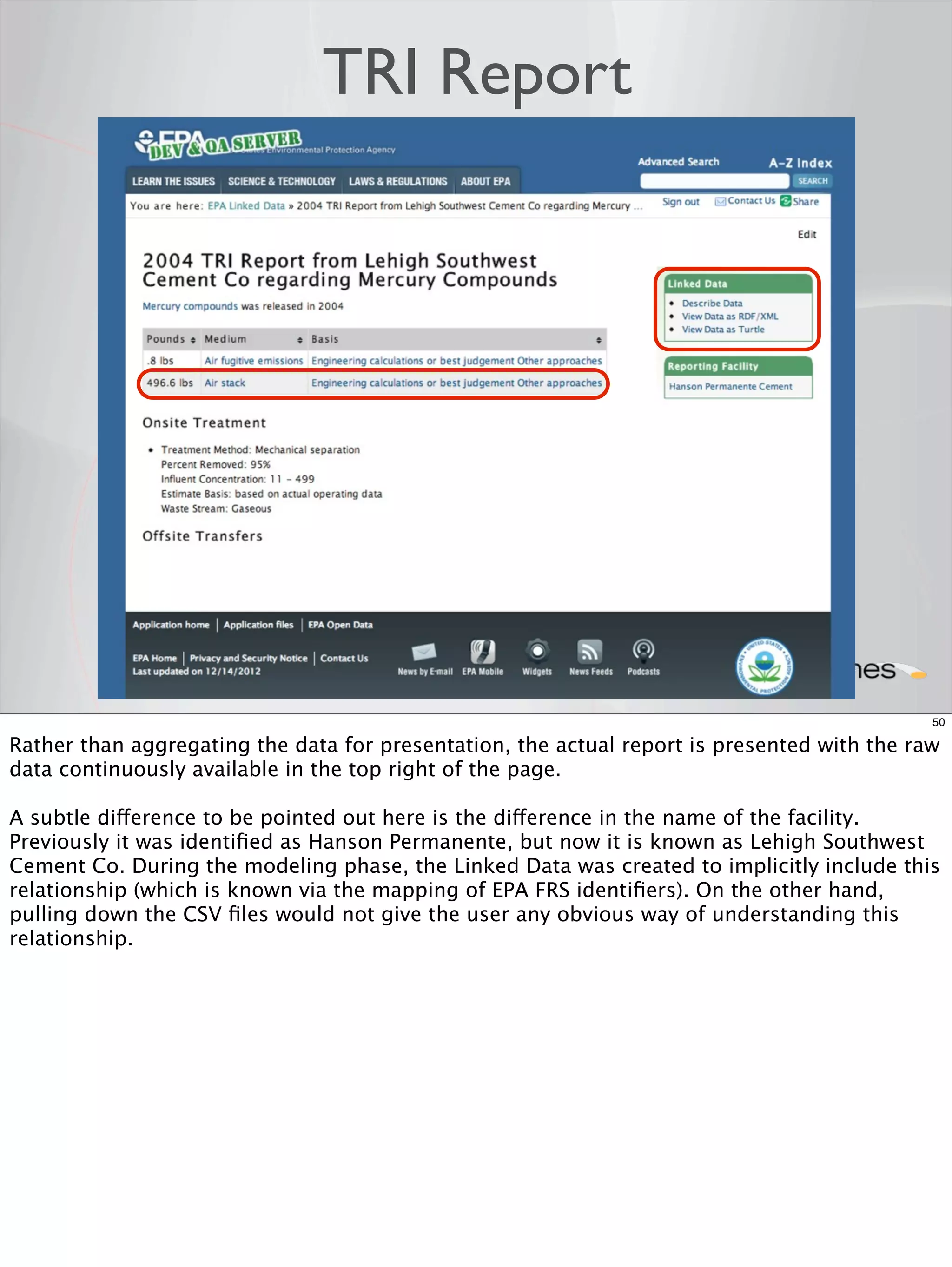

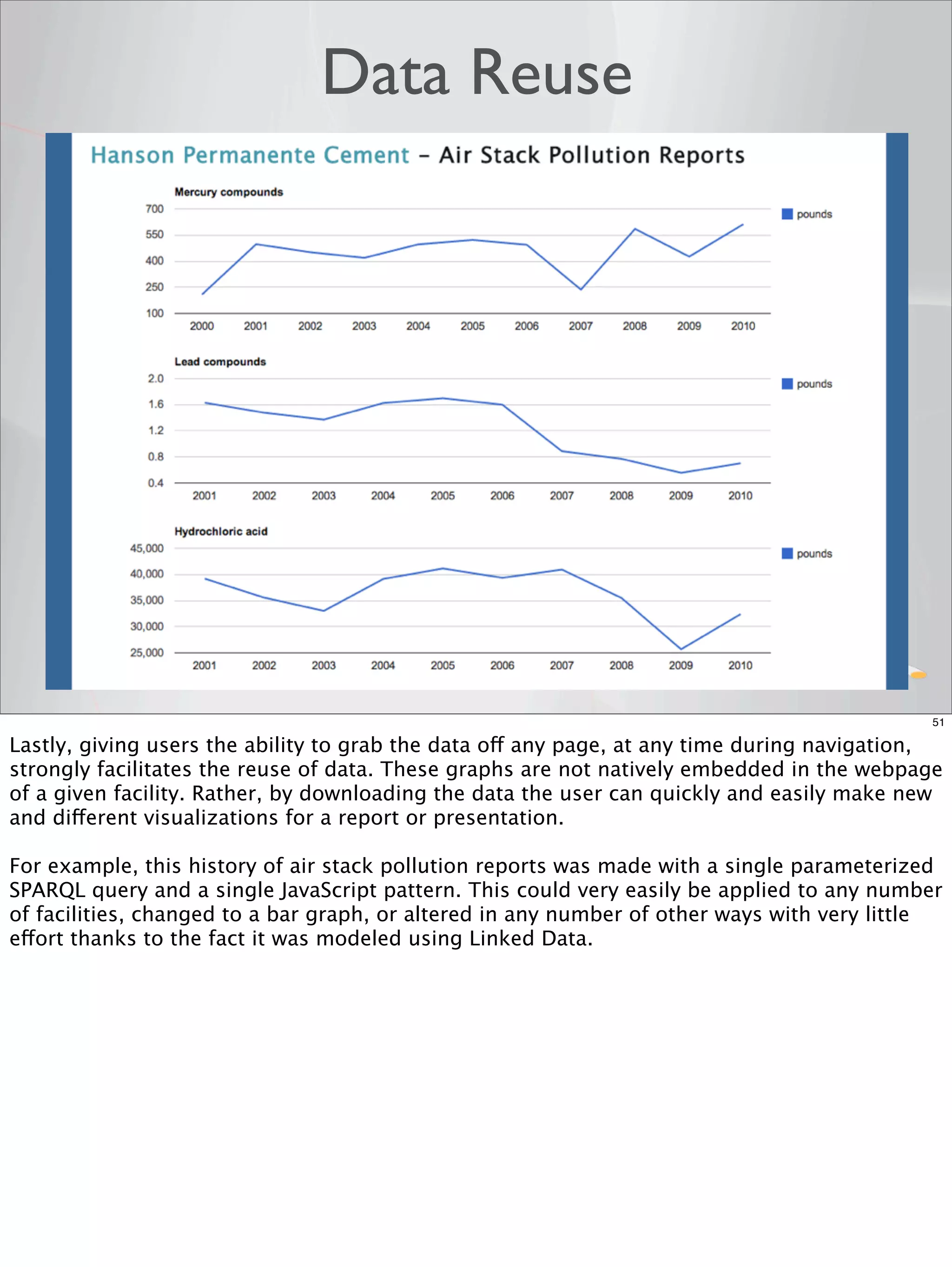

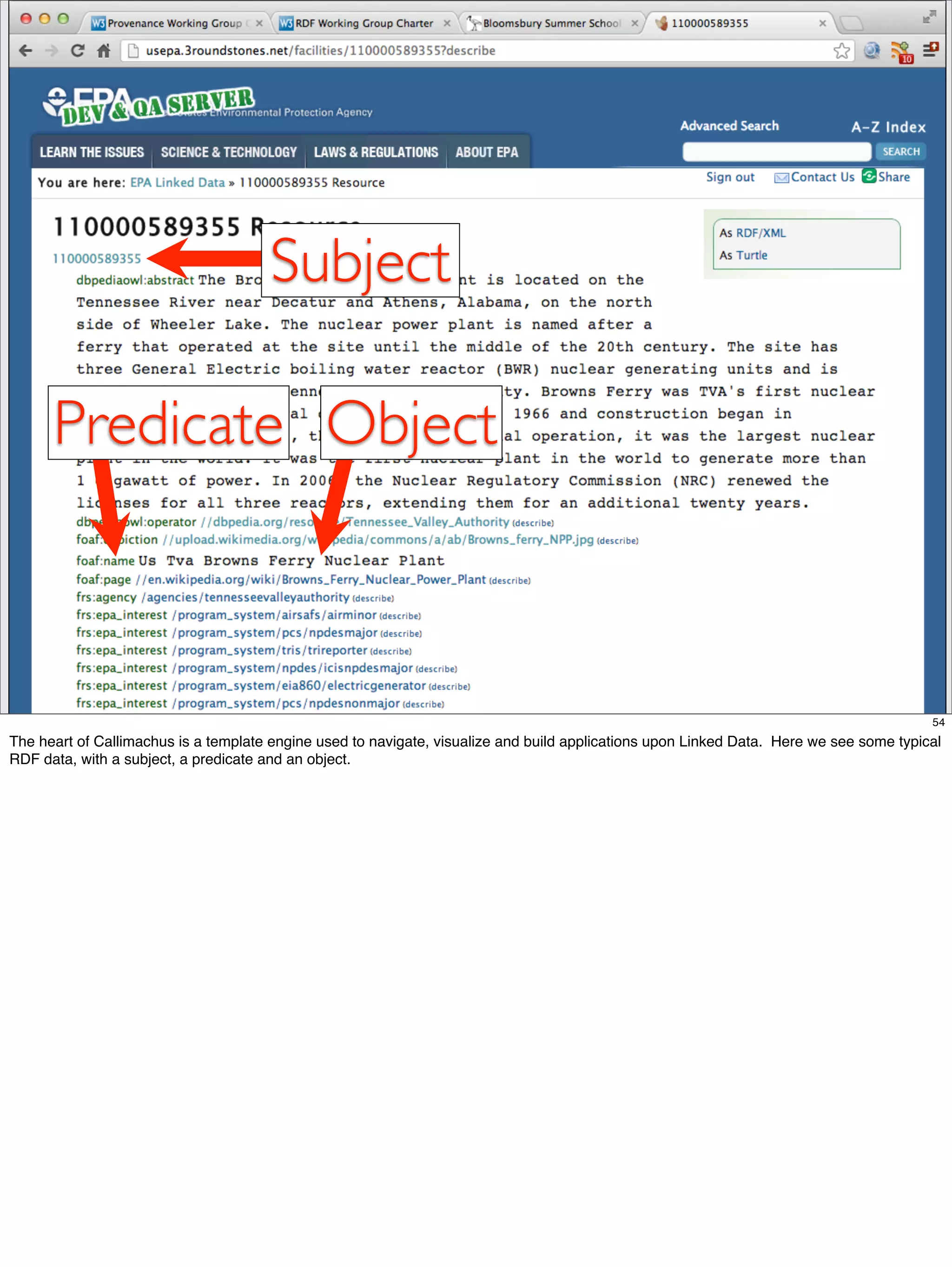

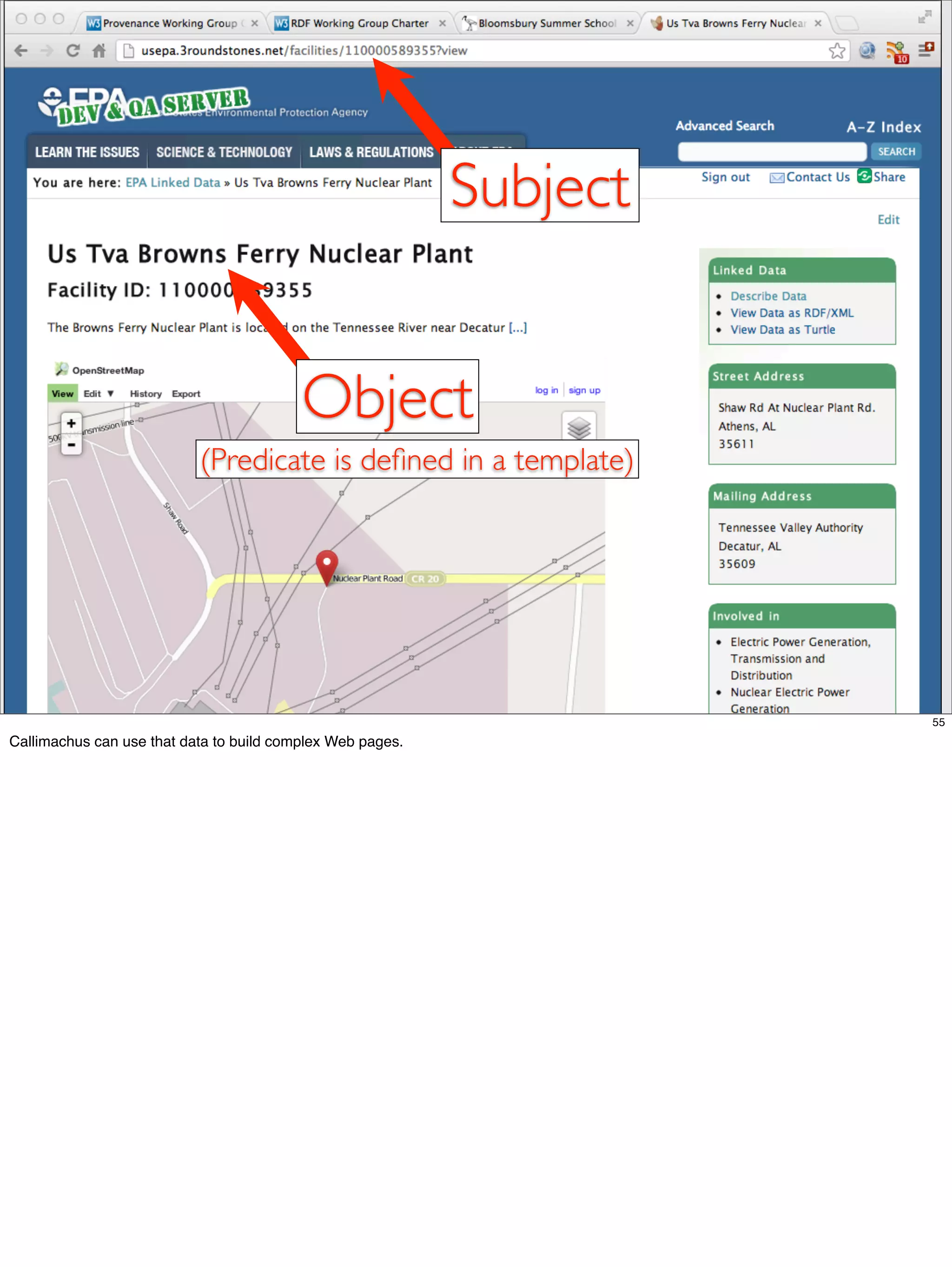

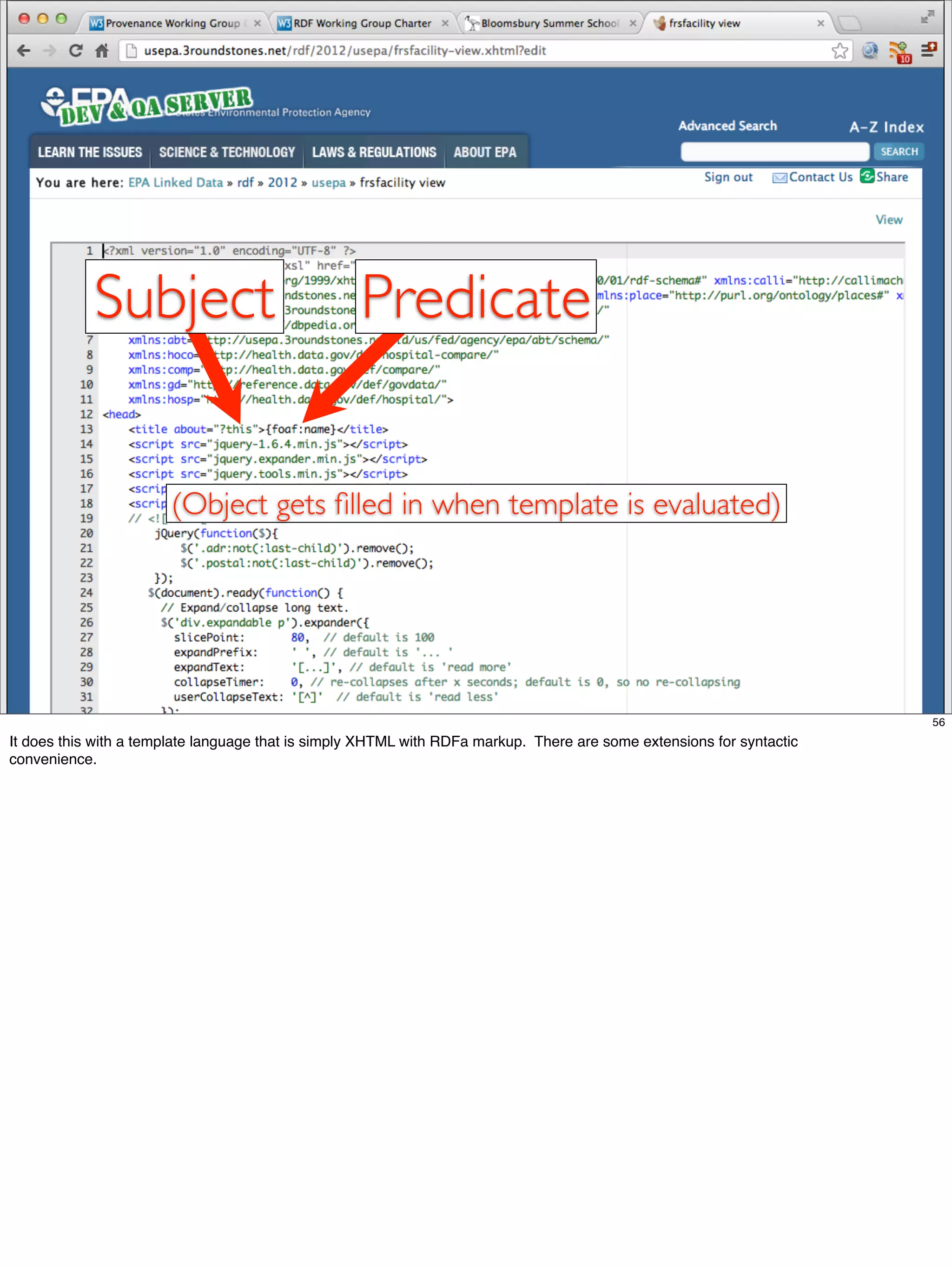

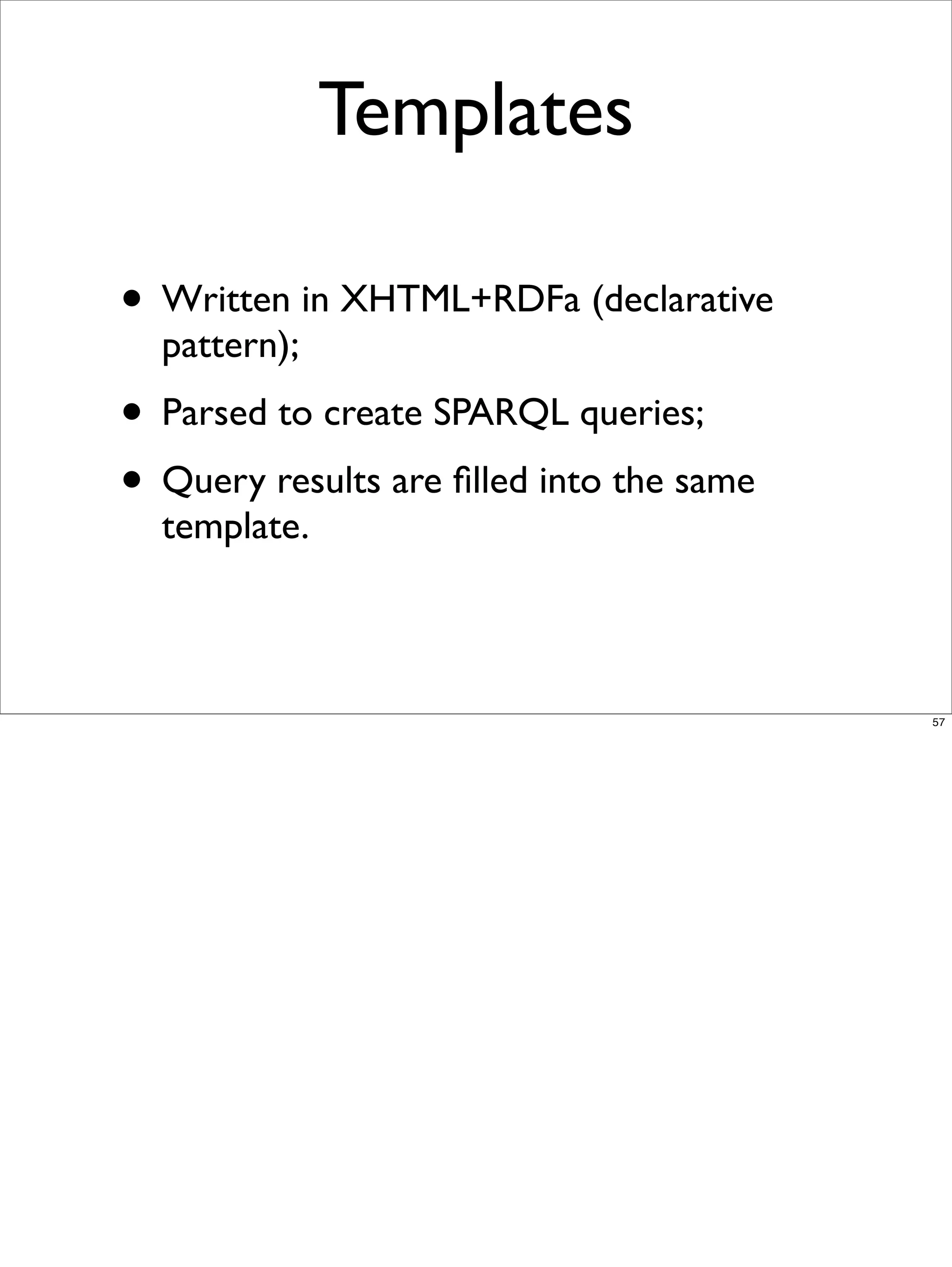

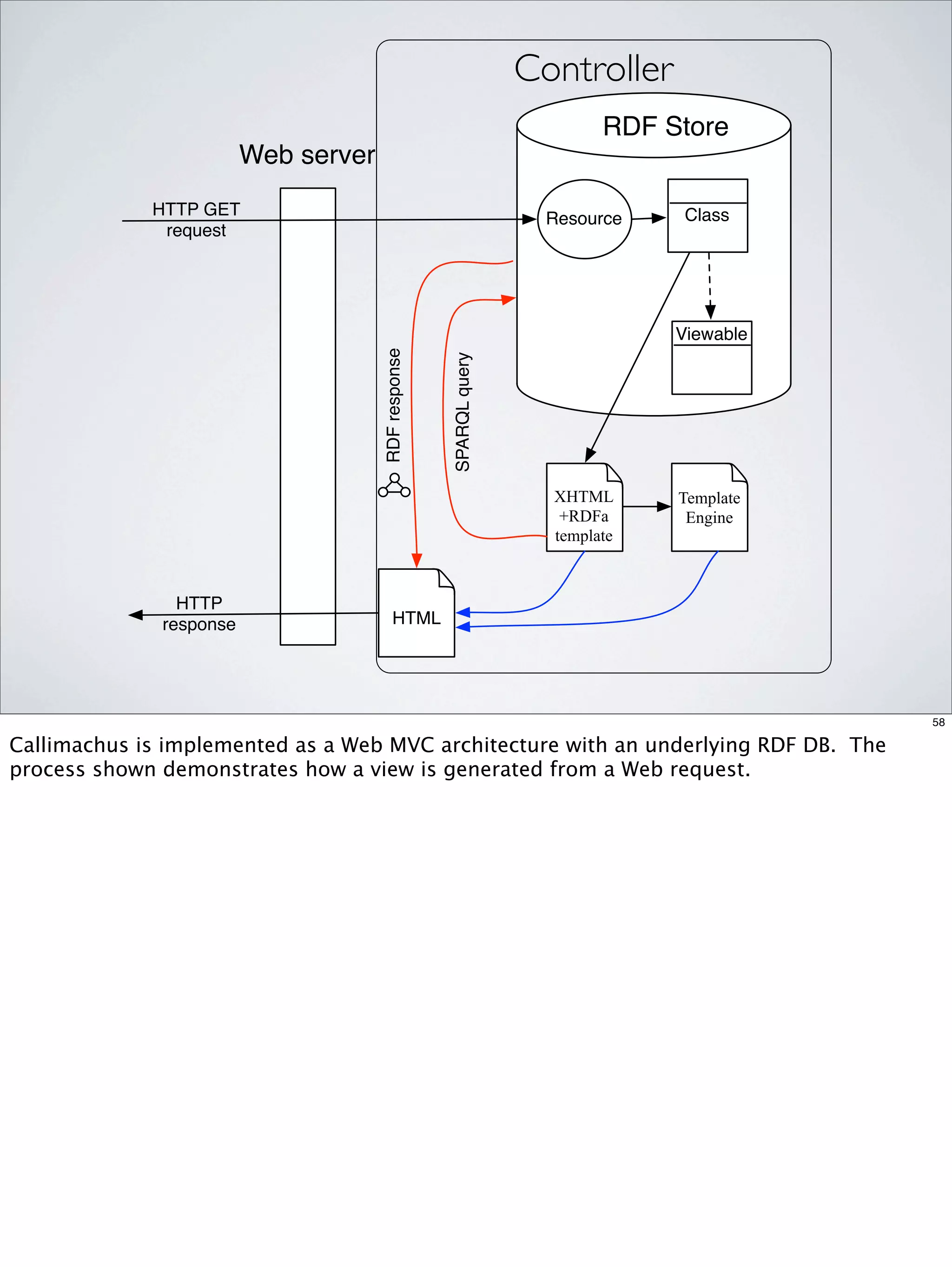

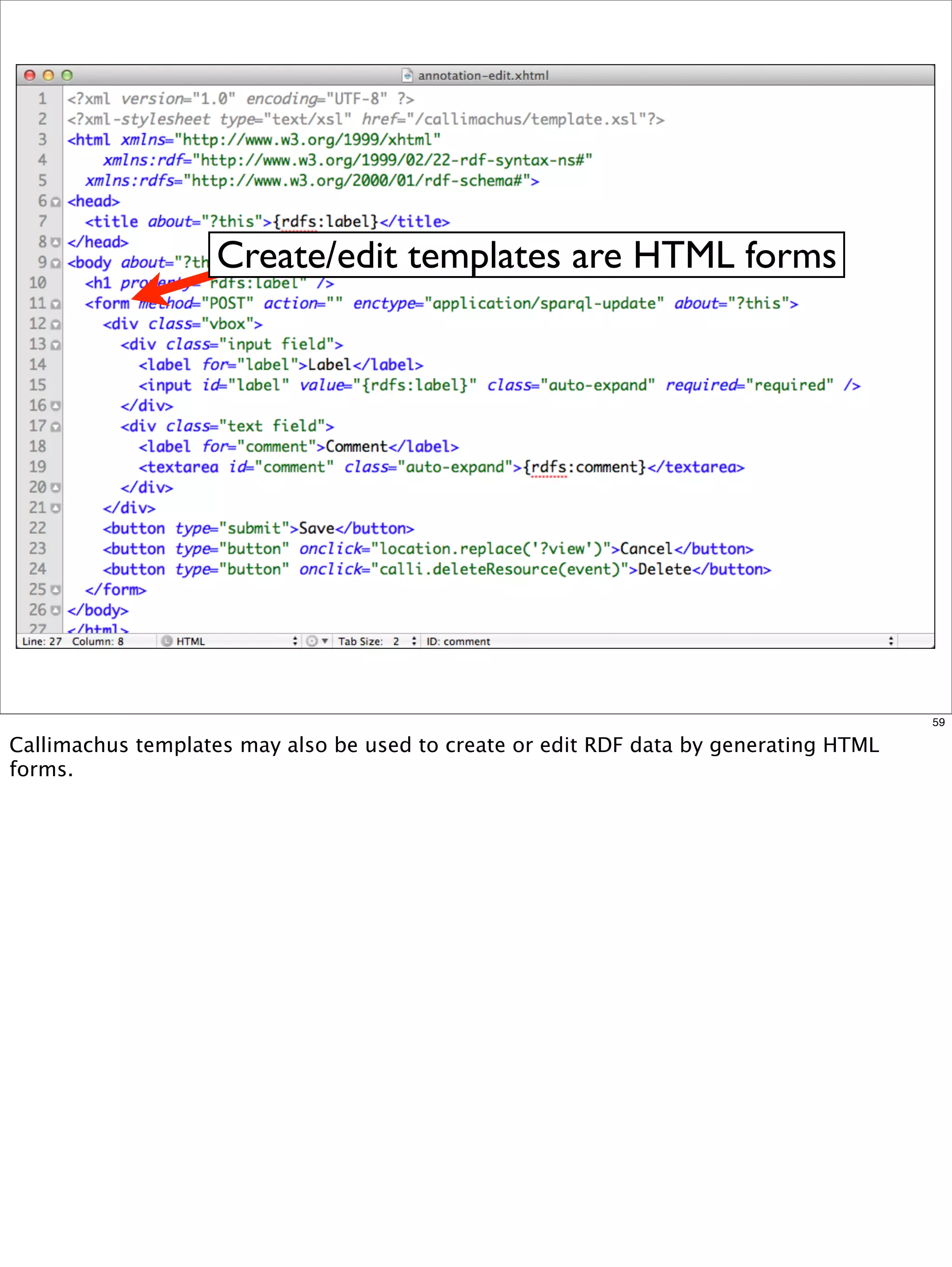

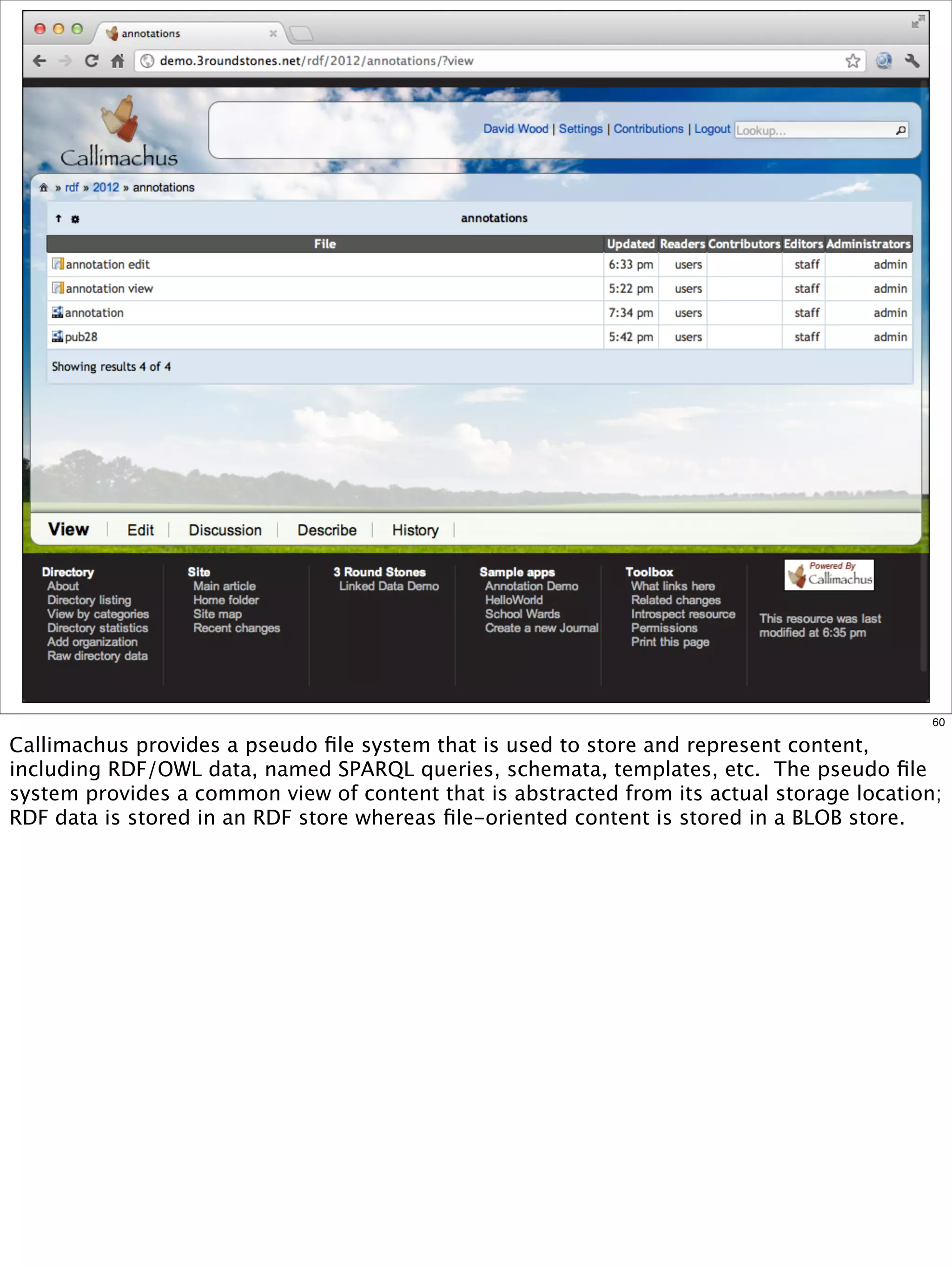

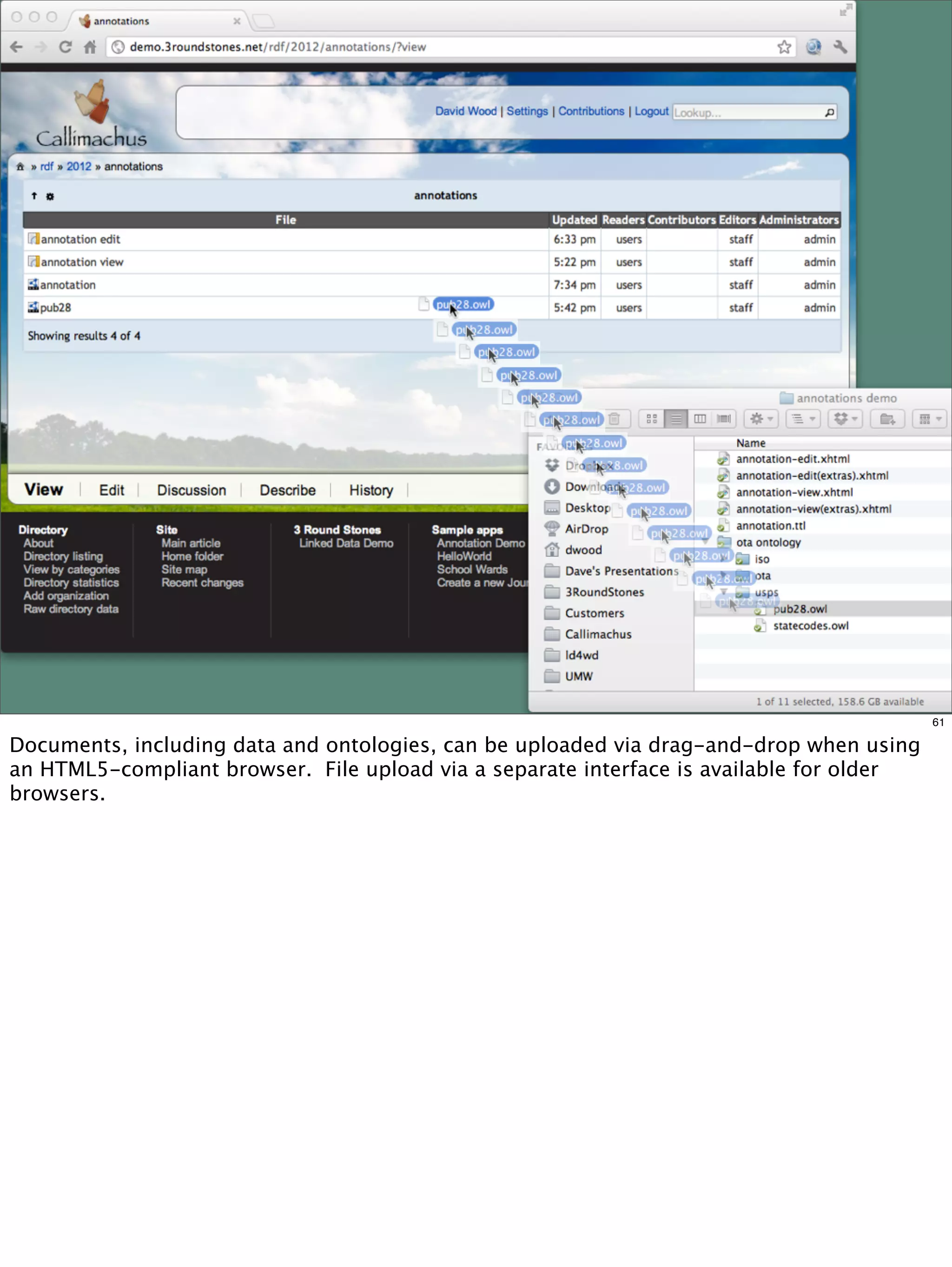

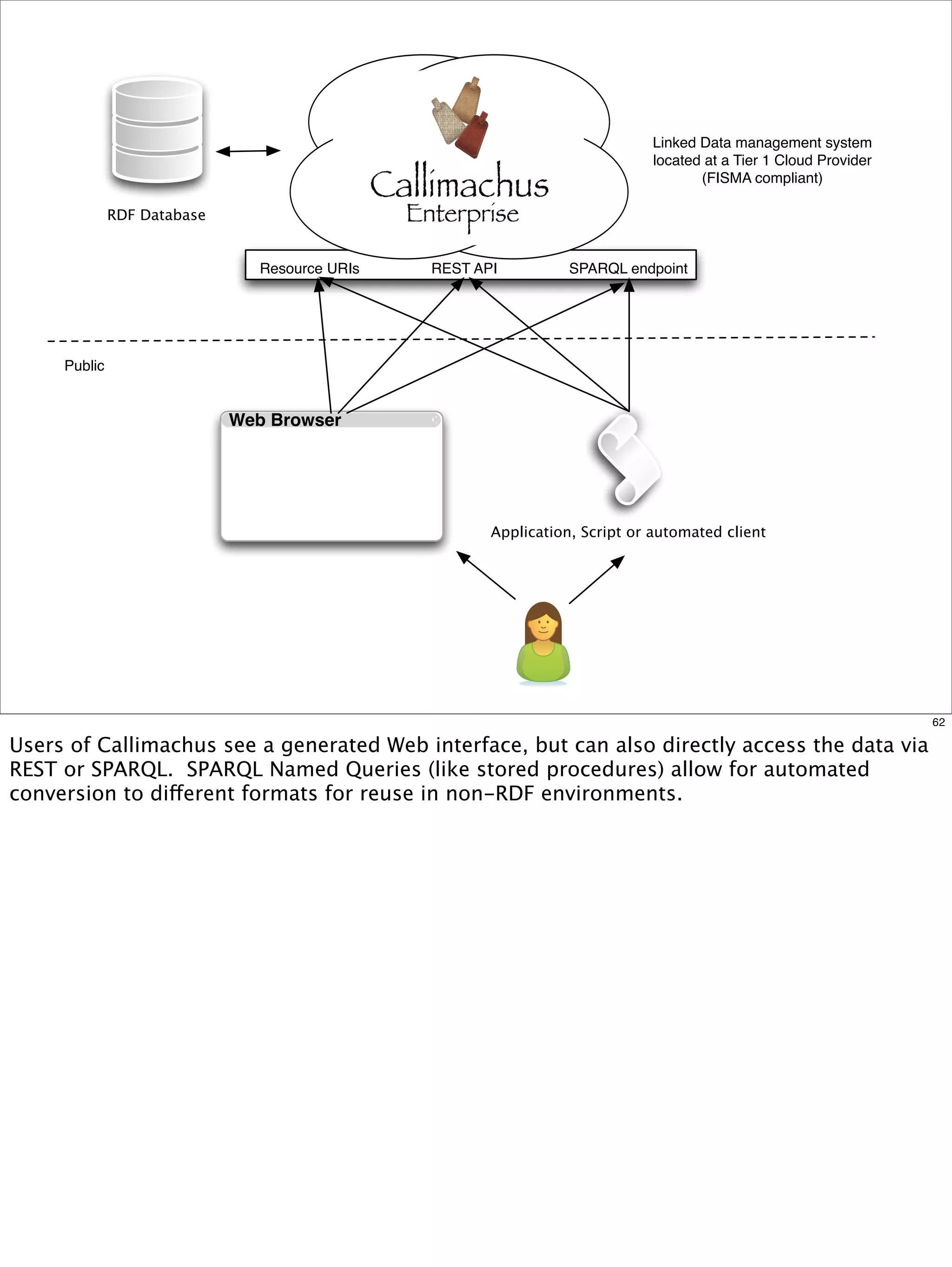

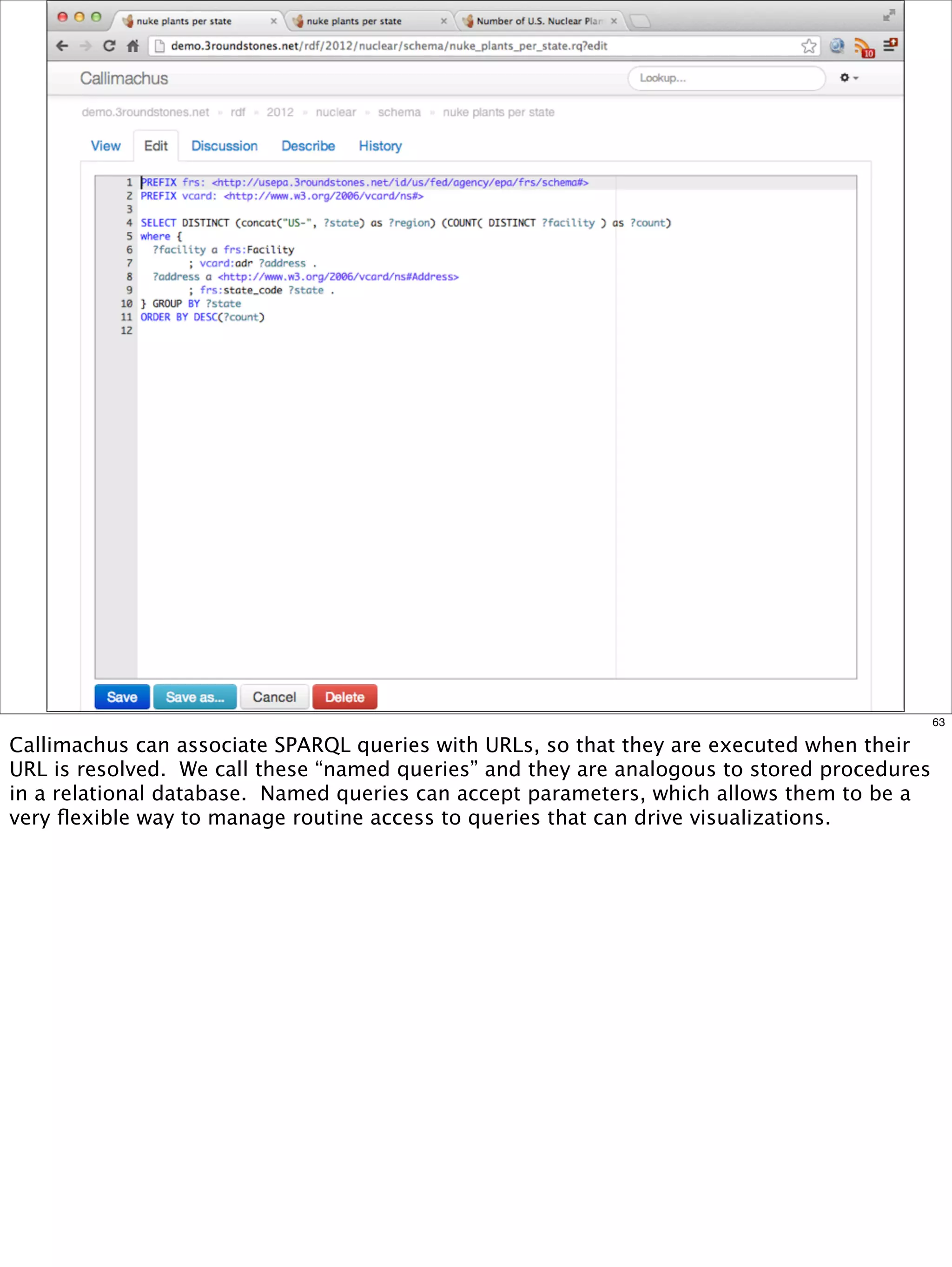

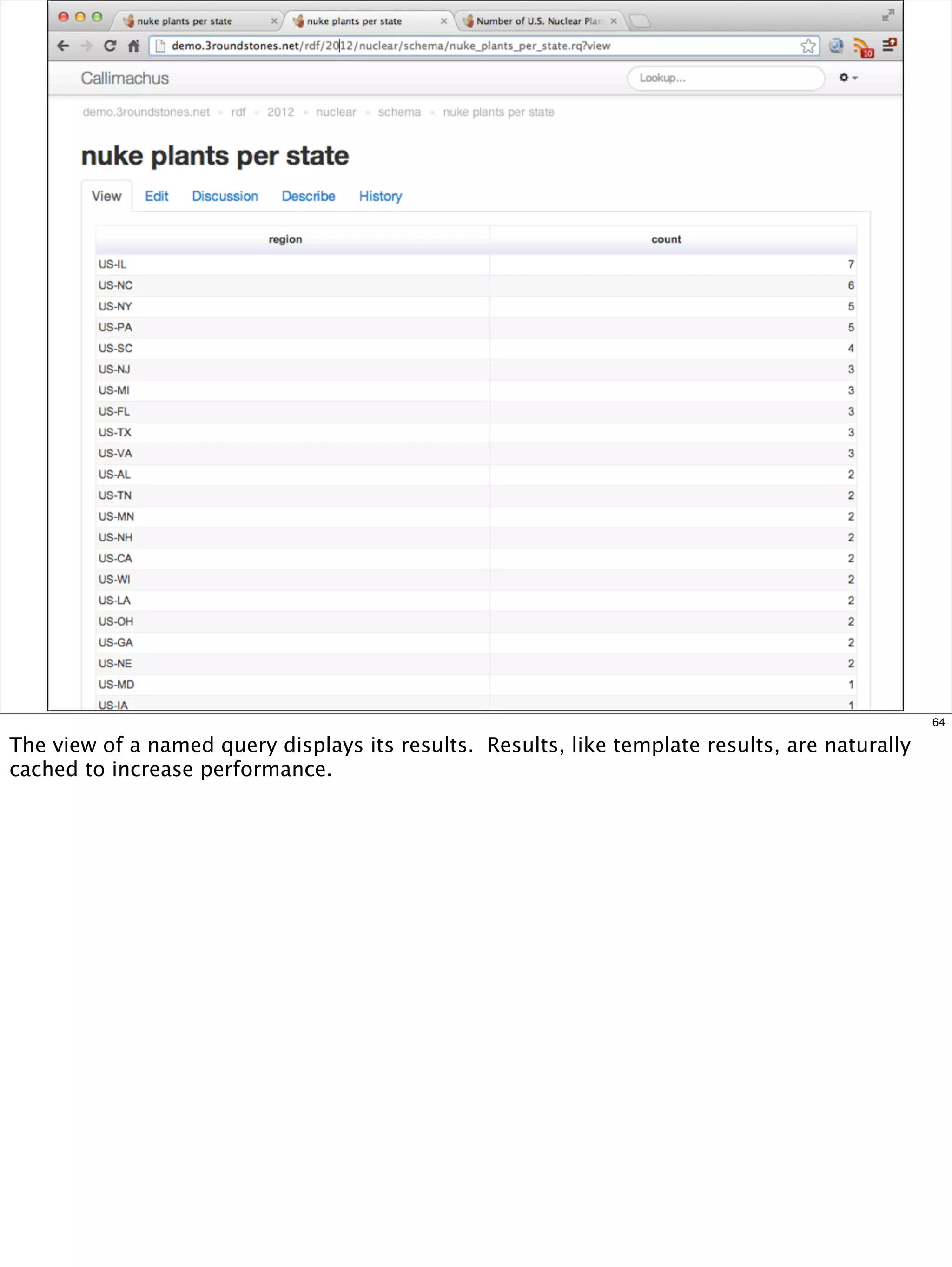

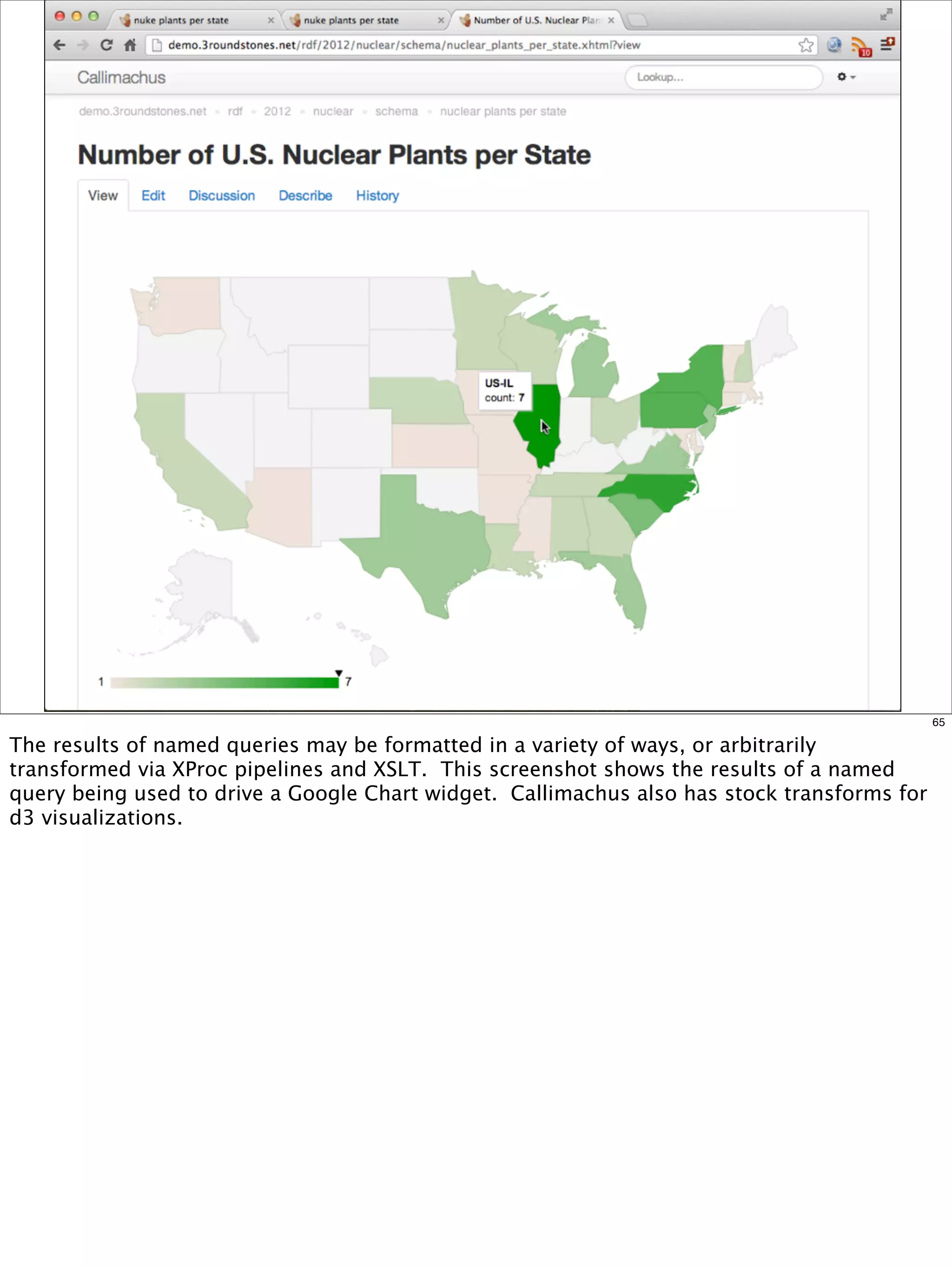

The document outlines a presentation on linked data at the EPA. It highlights trends in government data management, the growing importance of open data, and the shift toward semantic technologies that improve access to information. It discusses how linked data supports data reuse and interoperability, and how that in turn enables innovation in public services and research. Finally, it introduces the Callimachus platform as a way to publish reusable linked data and to address the difficulty of accessing and using data held in government systems.