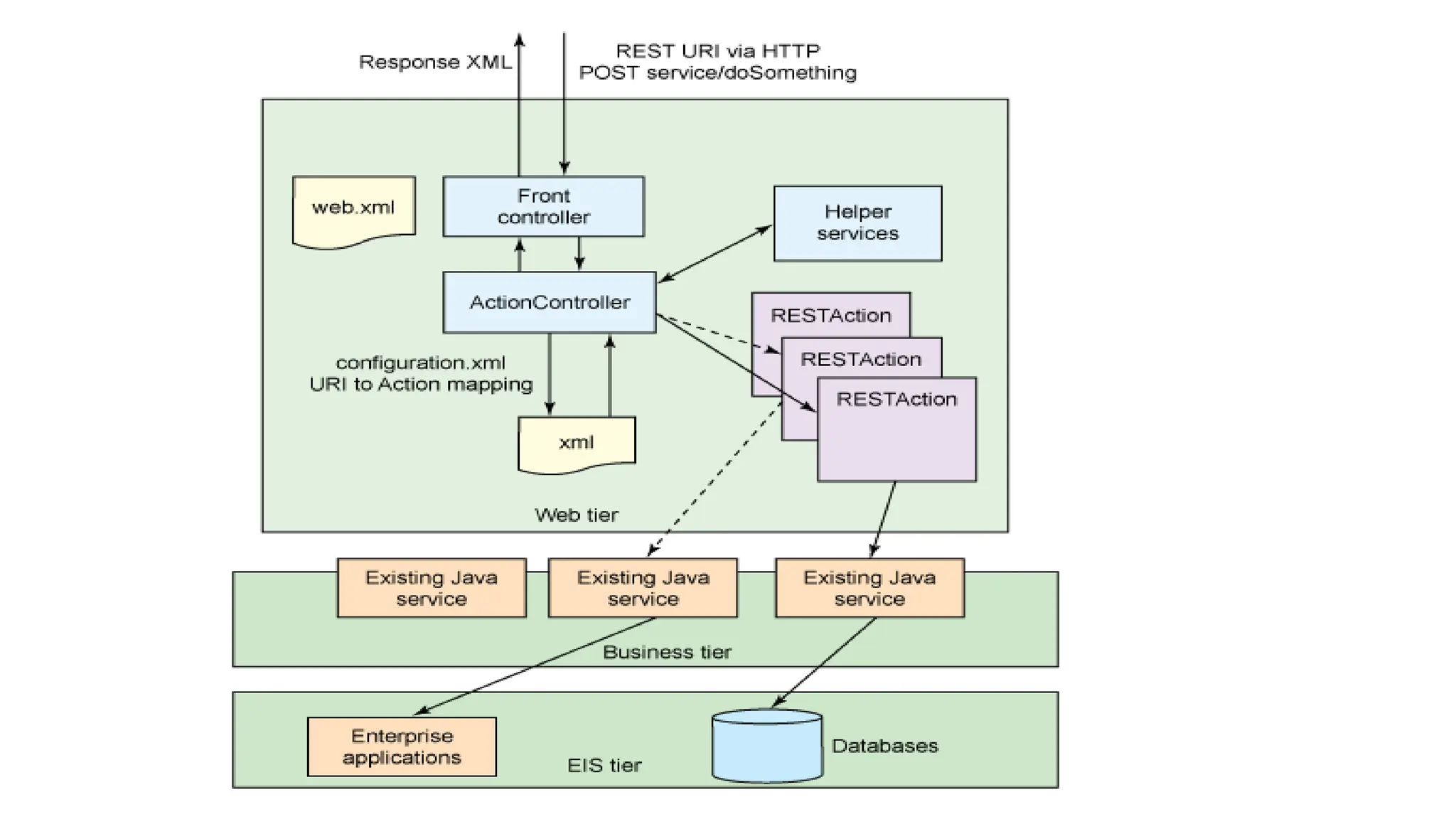

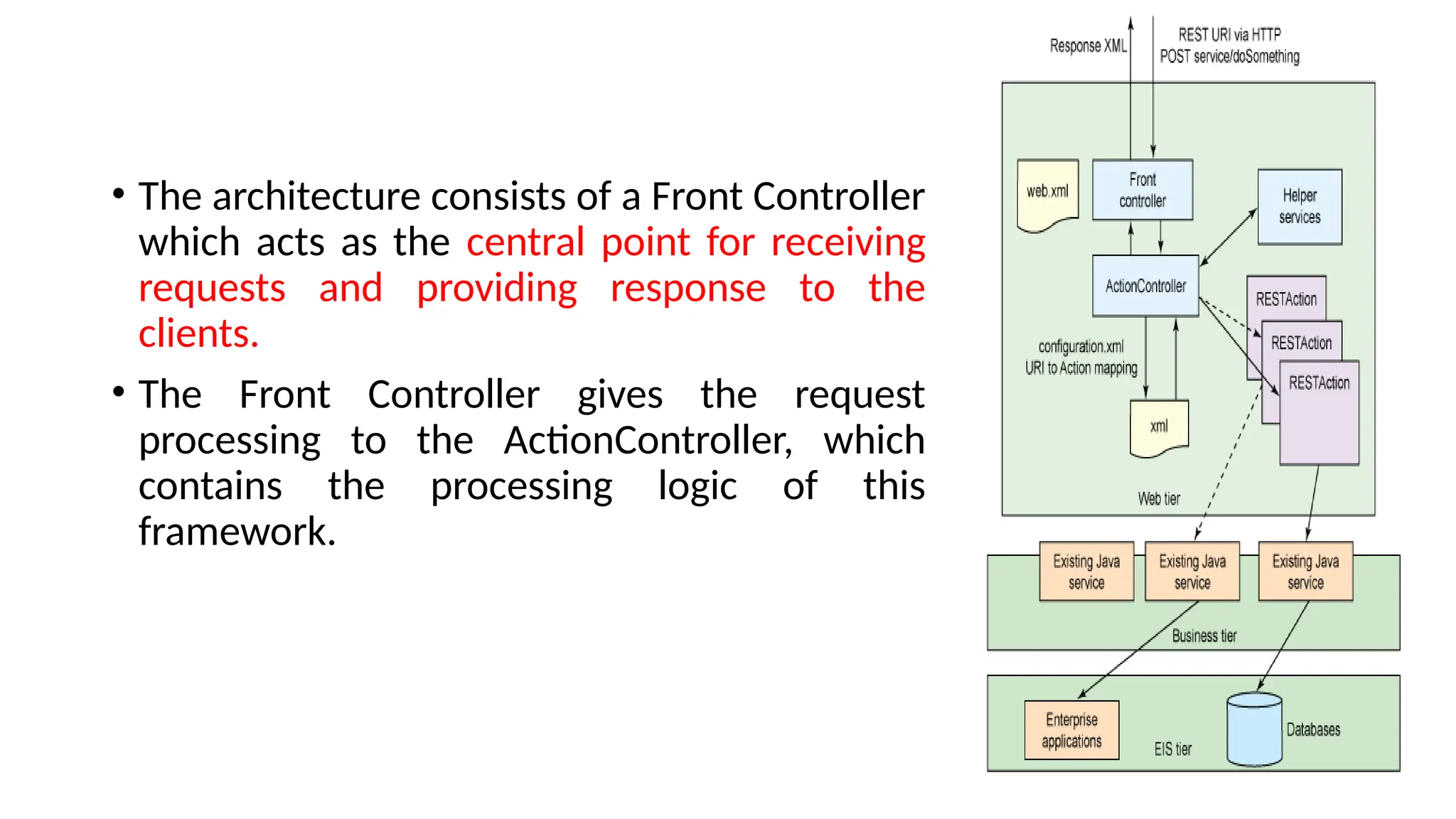

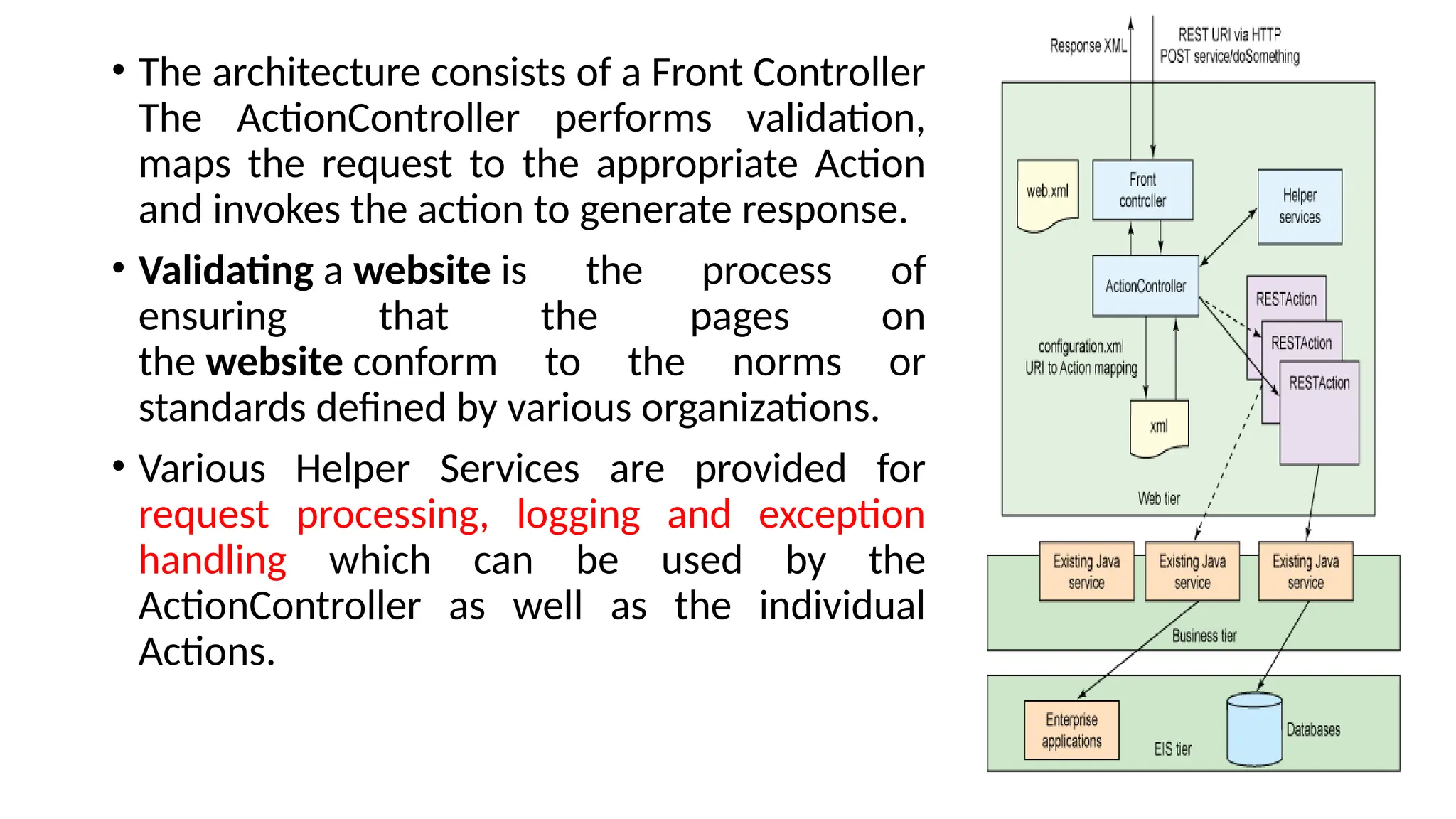

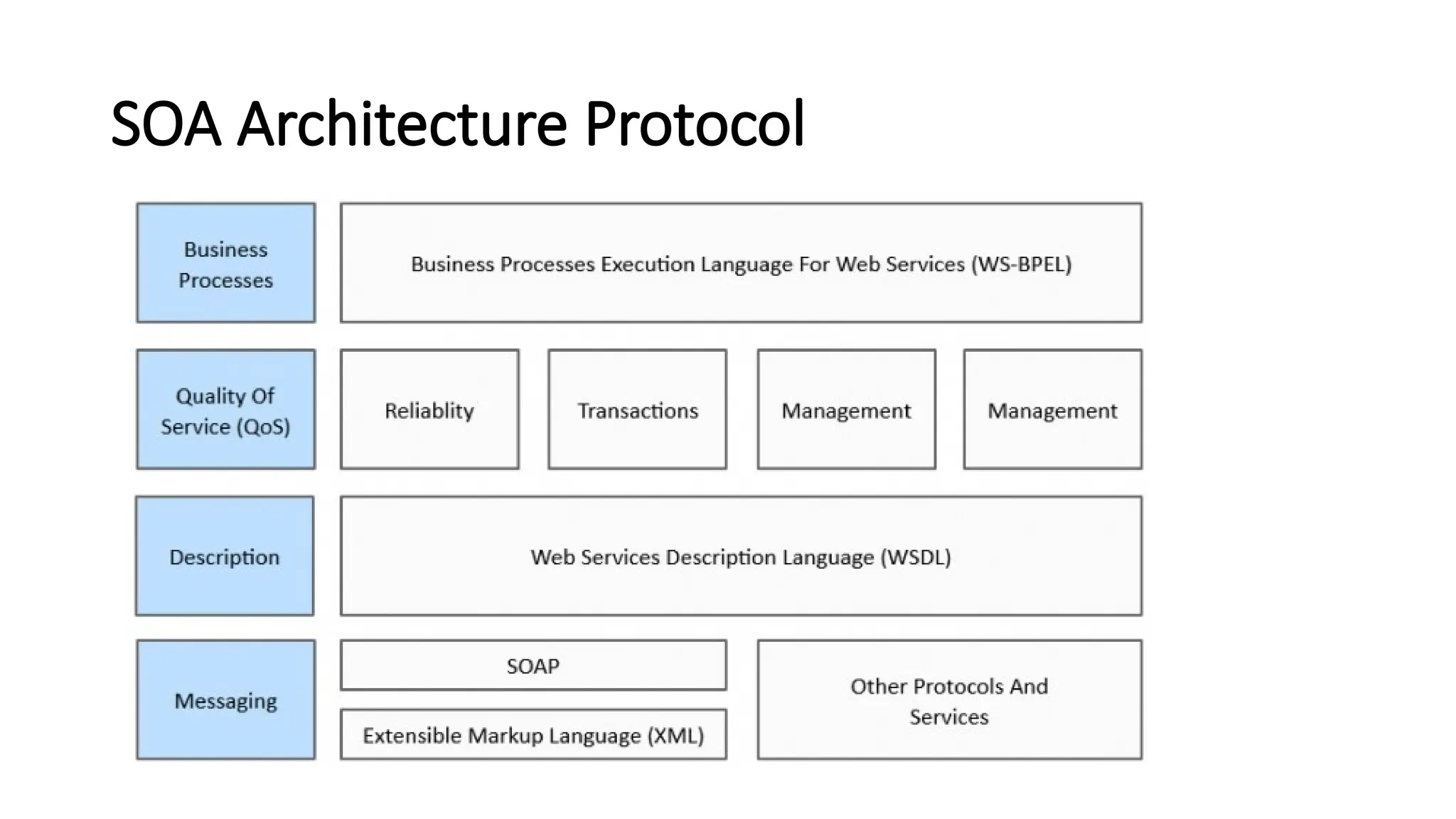

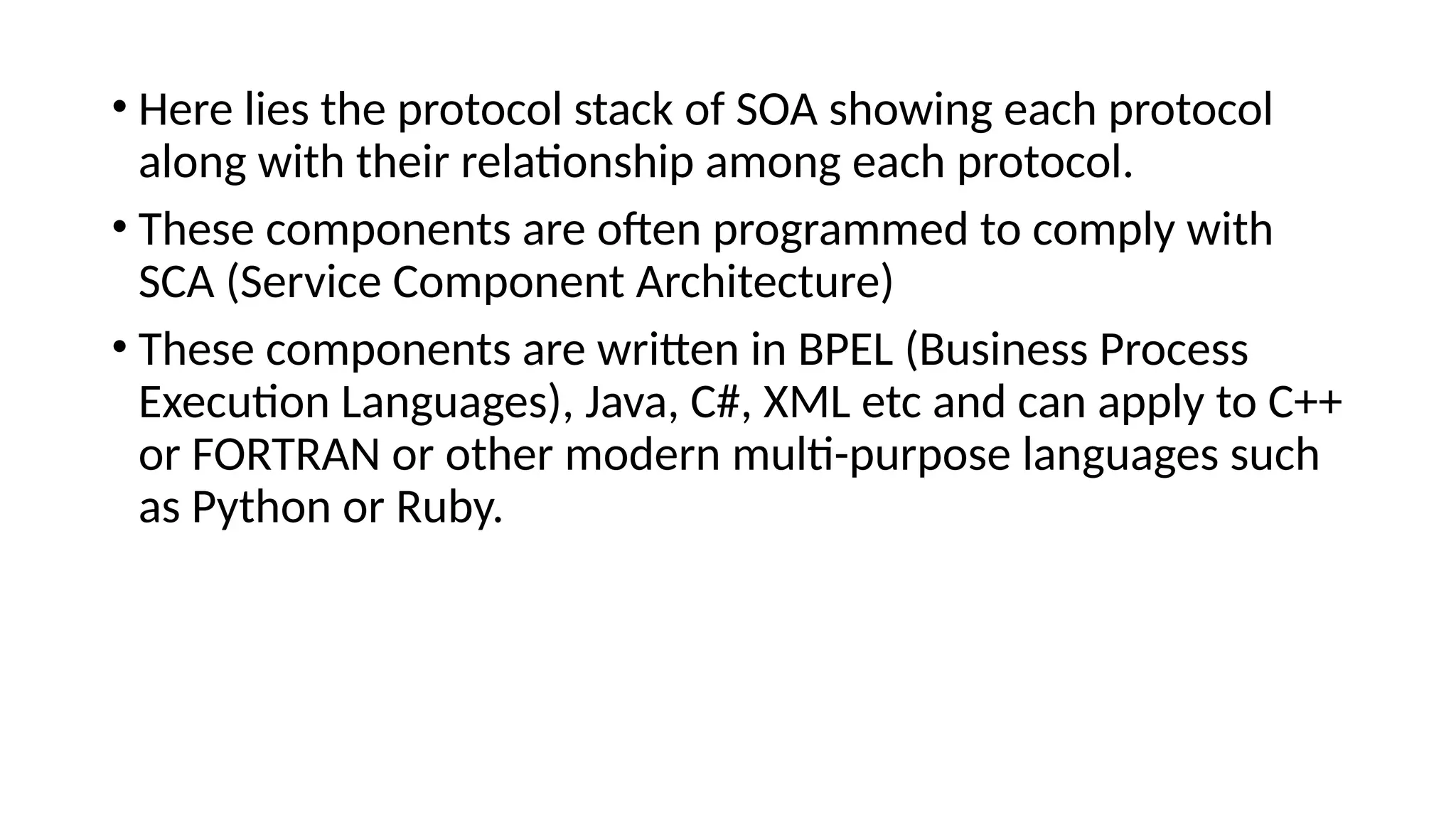

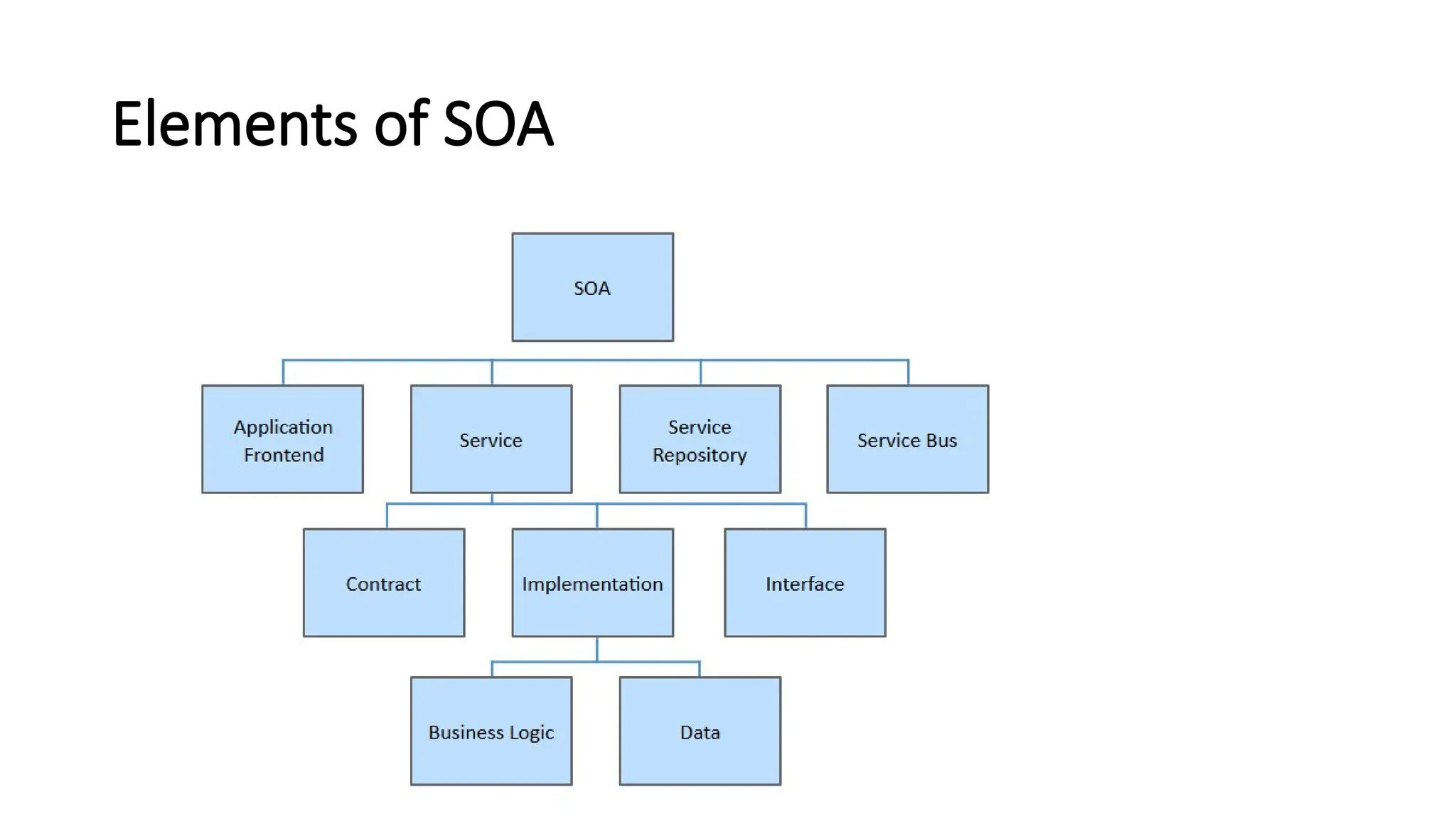

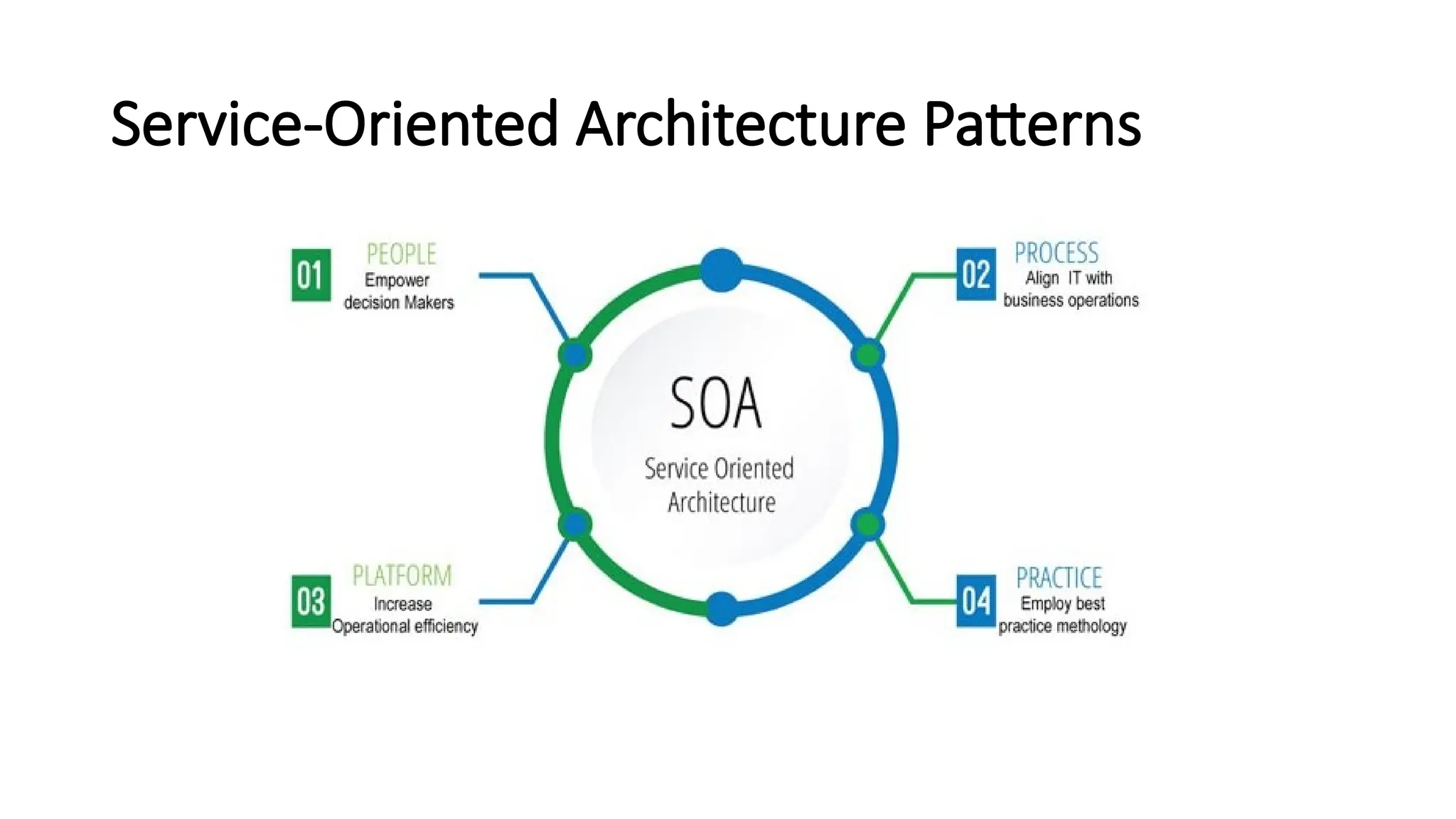

La arquitectura orientada a servicios (SOA) es un estilo de diseño de software que permite a los componentes de una aplicación comunicarse entre sí a través de servicios bien definidos e independientes, lo que facilita la integración y reutilización de componentes. SOA proporciona beneficios como la neutralidad del lenguaje, la agilidad organizacional y la reutilización de servicios existentes, y se compone de varias capas que incluyen la interfaz del consumidor, procesos comerciales y un entorno de servicios. Además, se enfoca en la gobernanza de servicios y la seguridad, destacando la importancia de establecer un protocolo común para fomentar la interoperabilidad y escalabilidad en entornos de desarrollo.

![Architectural properties

Performance in component interactions, which can be the dominant factor

in user-perceived performance and network efficiency;[9]

• scalability allowing the support of large numbers of components and

interactions among components.

• simplicity of a uniform interface;

• modifiability of components to meet changing needs (even while the

application is running);

• visibility of communication between components by service agents;

• portability of components by moving program code with the data;

• reliability in the resistance to failure at the system level in the presence of

failures within components, connectors, or data](https://image.slidesharecdn.com/unit2cloudccomputiing-241122034821-af06330d/75/UNIT2_Cloud-Computing-Cloud-Enabling-Technologies-40-2048.jpg)