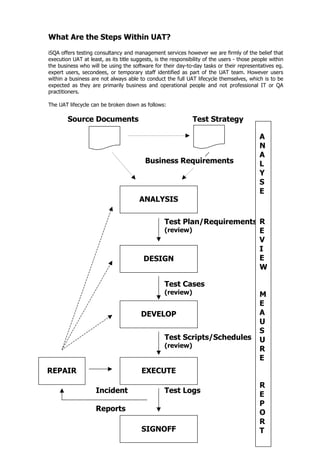

The document discusses the challenges of user acceptance testing (UAT), the testing stage in which users try the software in a real-world environment before public release. UAT is difficult to manage because of stress, complex communication between technical and non-technical teams, and a lack of documentation. The document outlines best practices for UAT: defining requirements, designing test cases, executing tests, tracking issues, and managing the overall test process. Common difficulties include gaps in understanding between developers and users, inexperienced testers, inconsistent reviewers, and unmotivated testing teams.