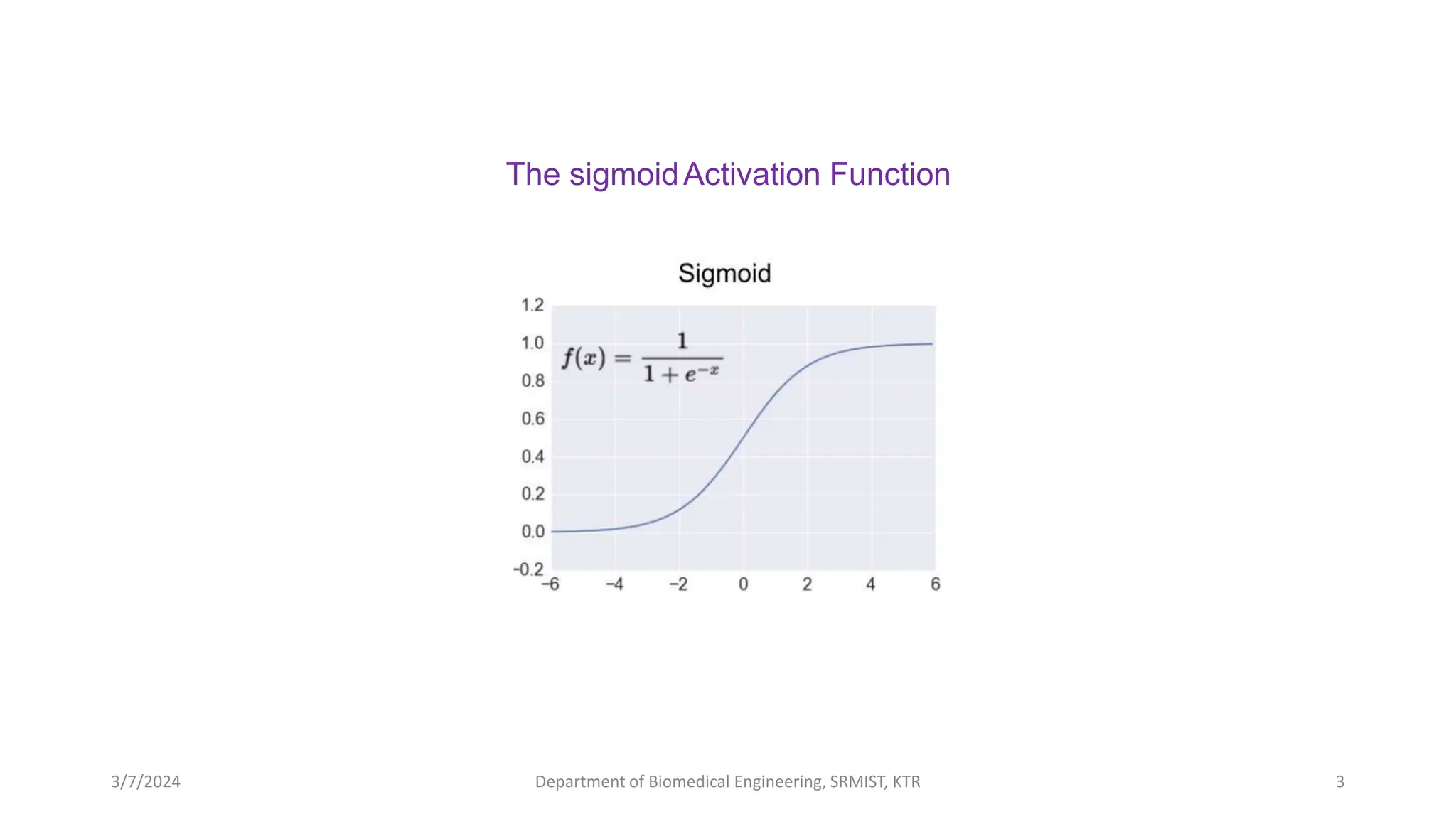

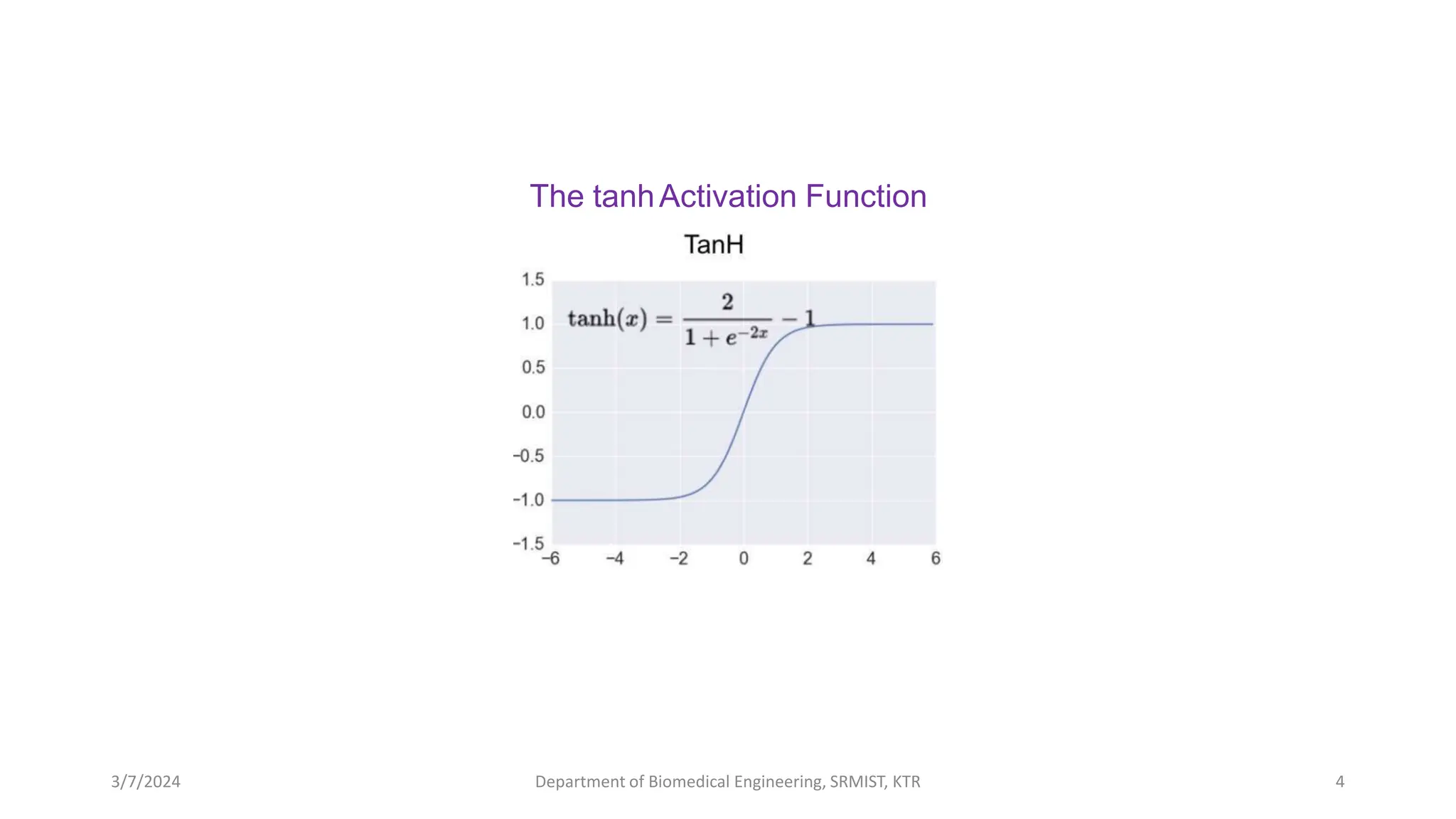

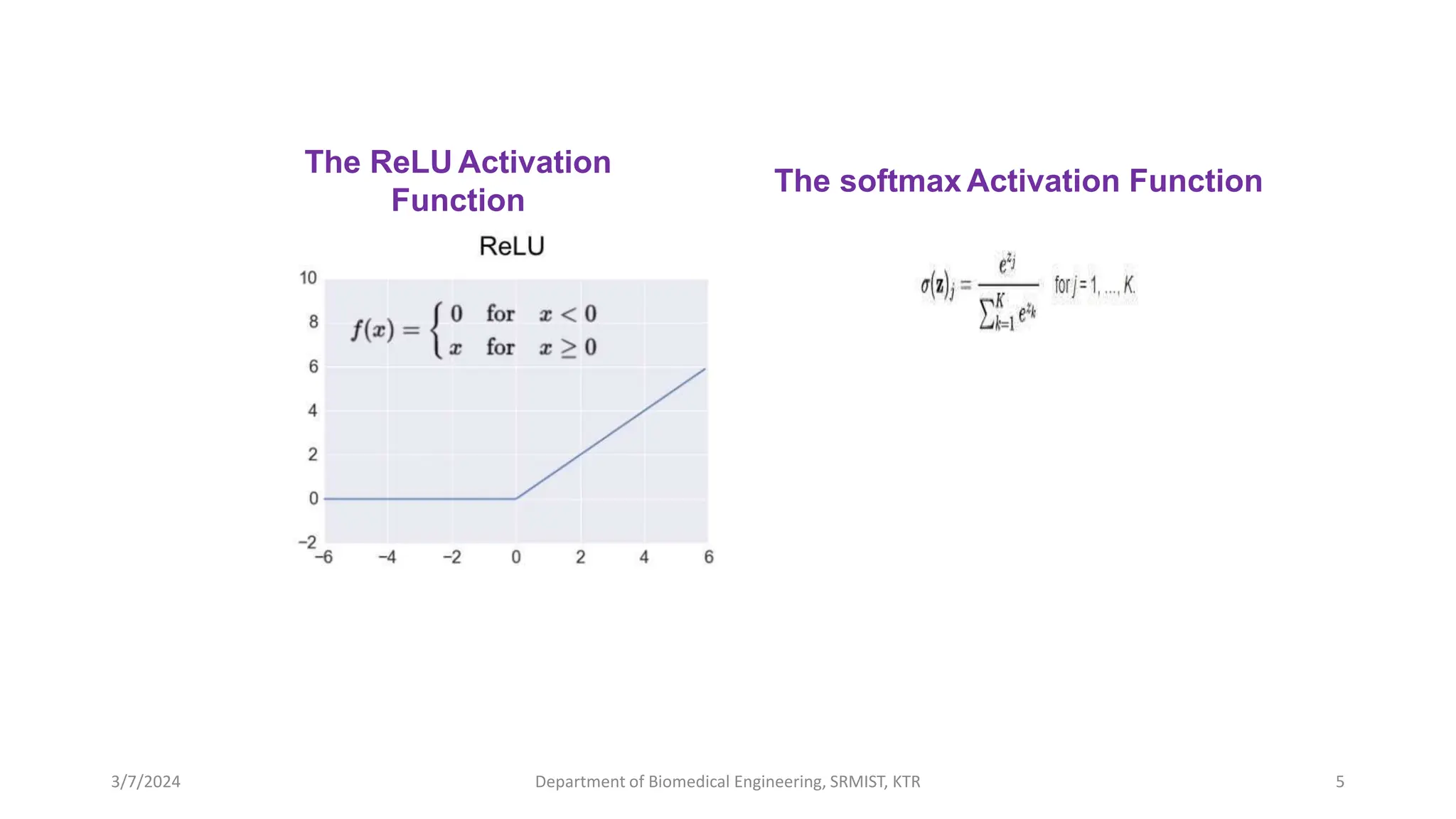

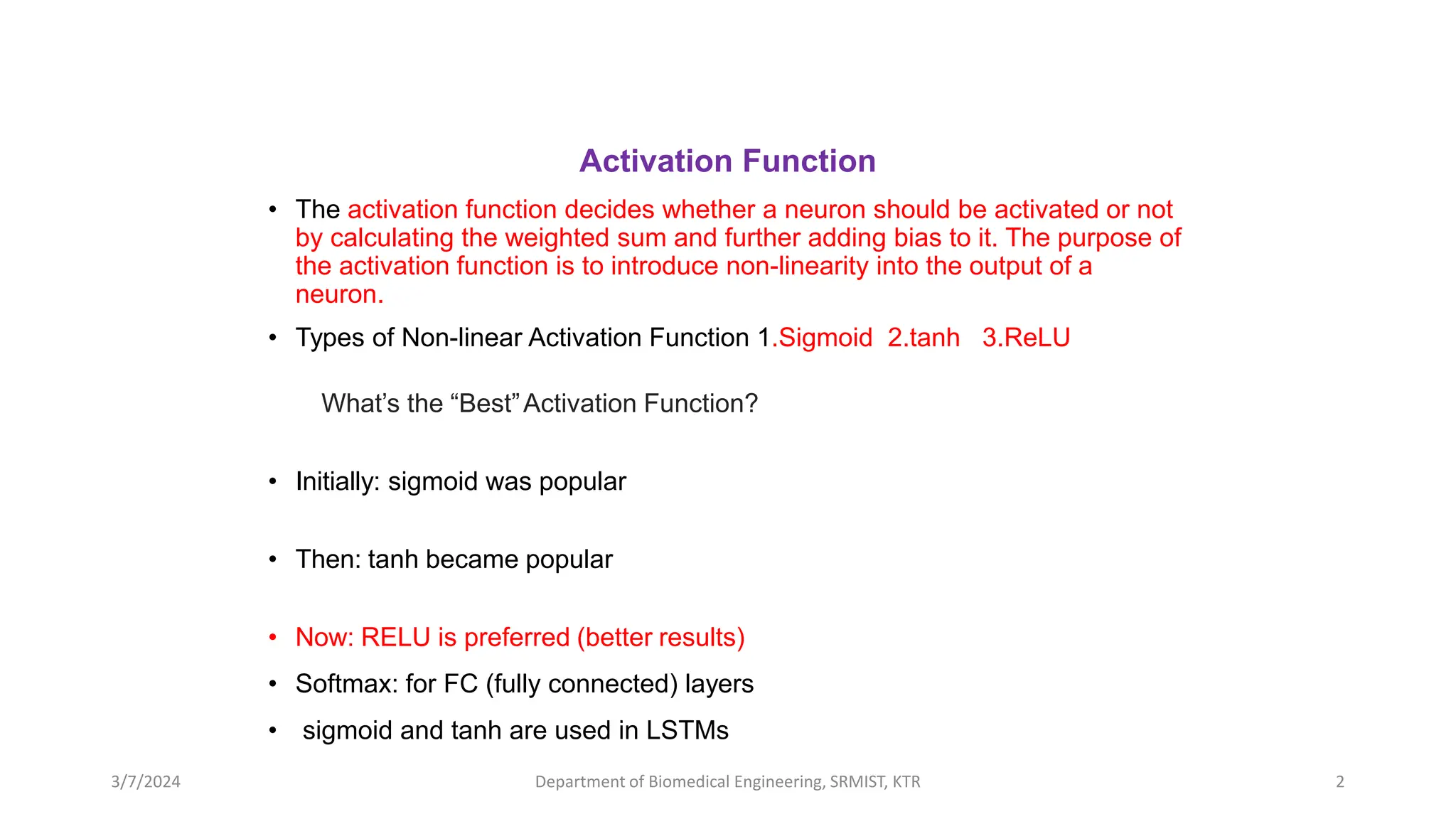

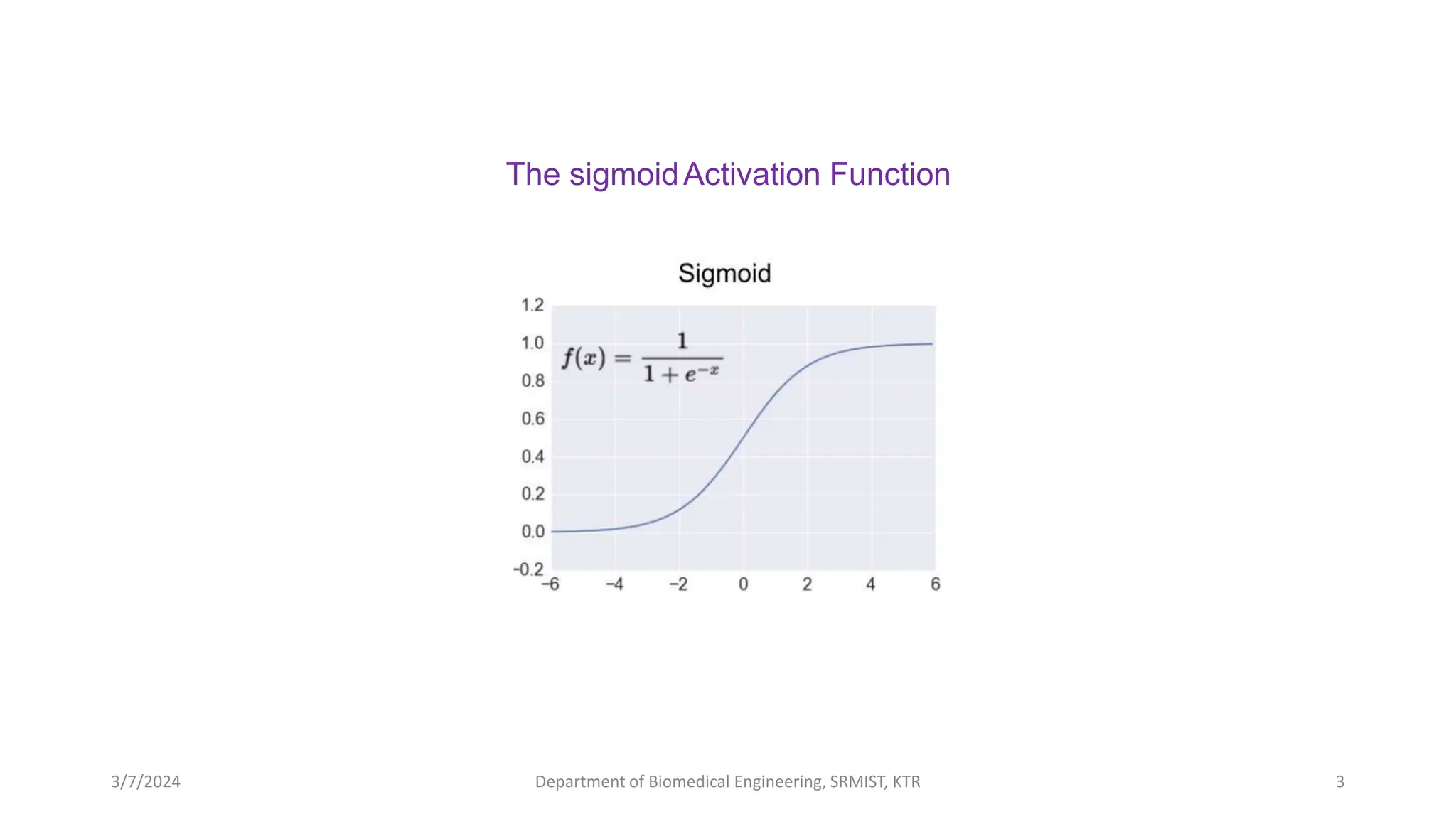

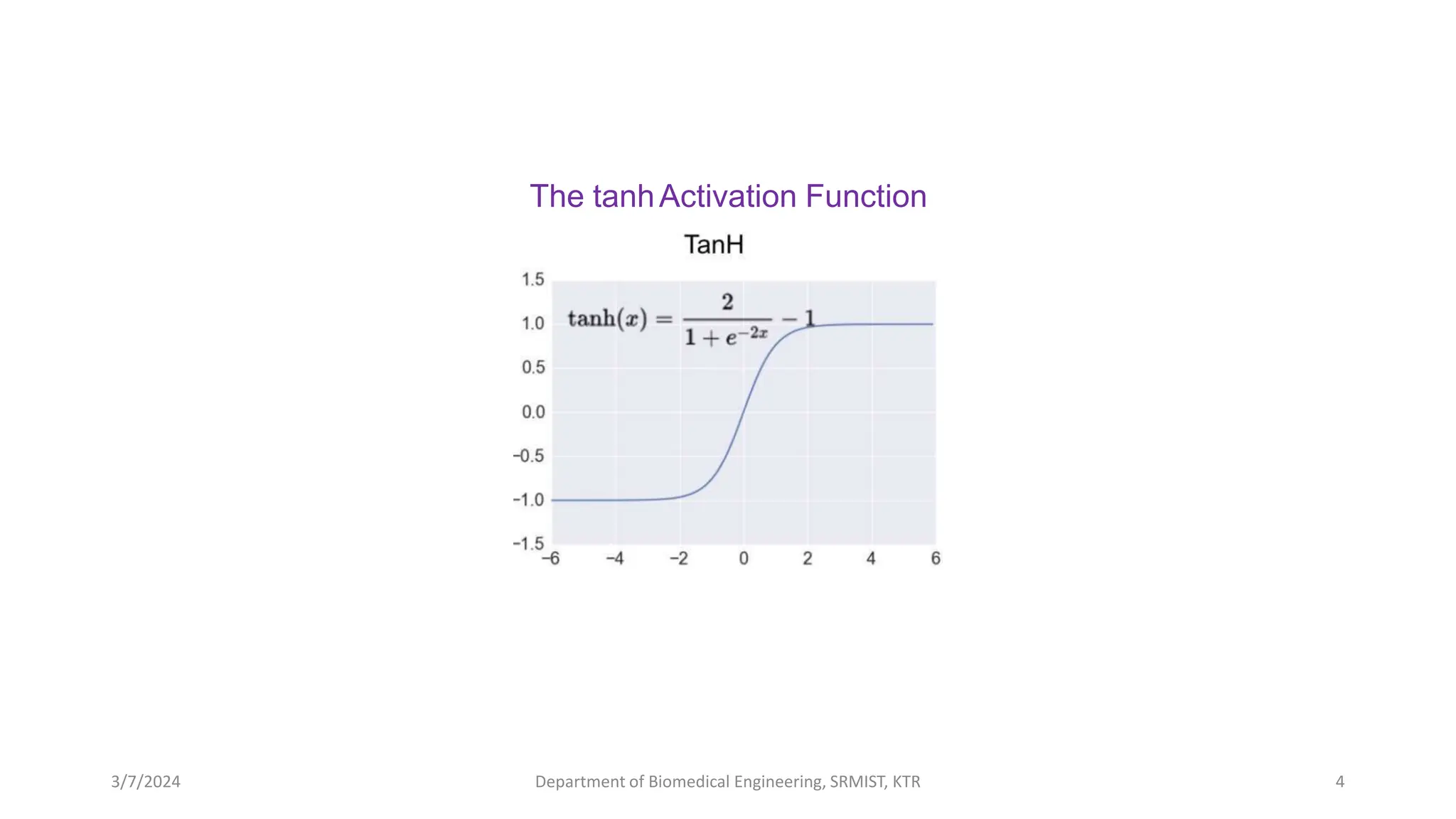

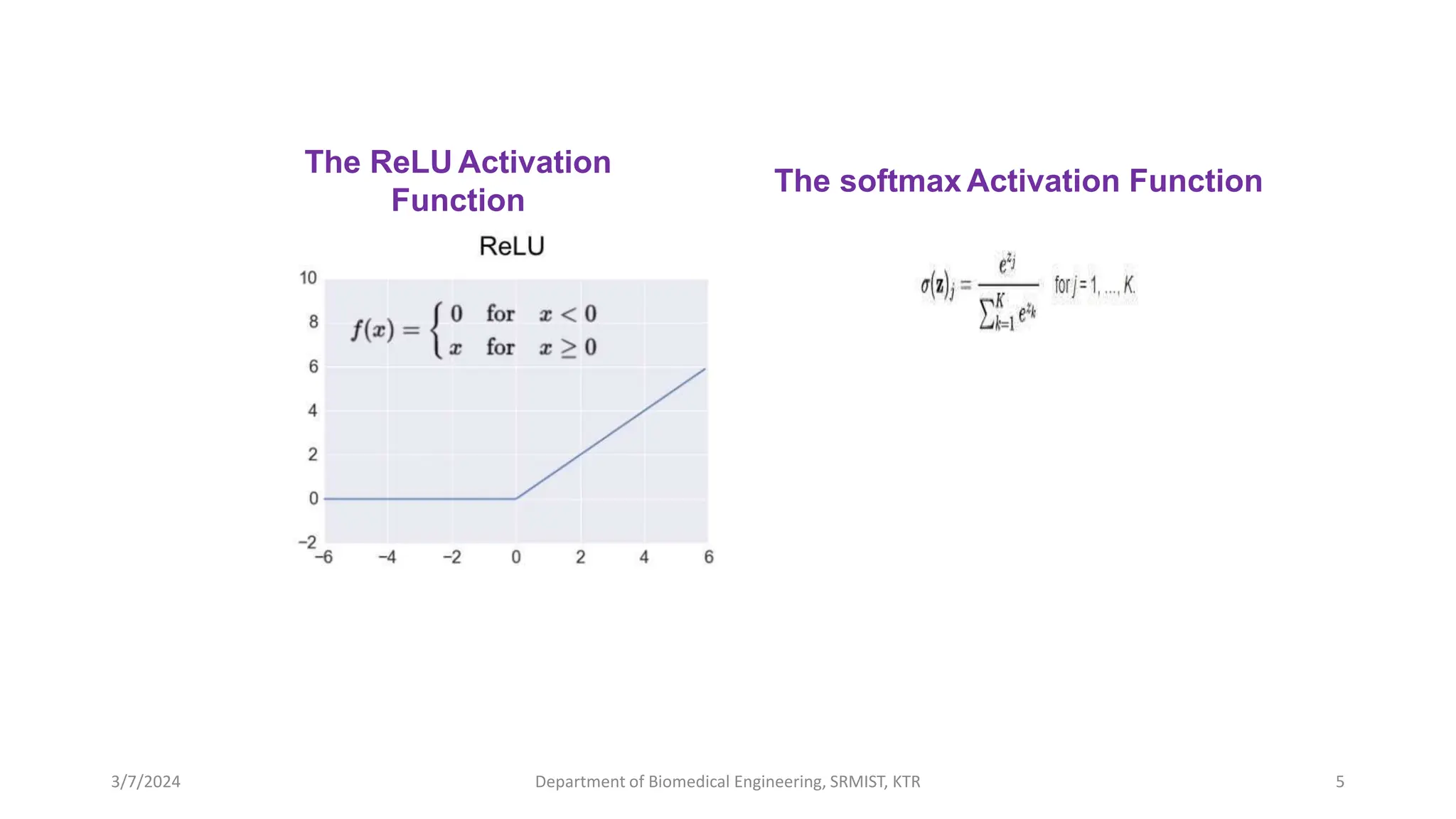

The document discusses the activation functions used in neural networks. It describes sigmoid, tanh, and ReLU as common examples of non-linear activation functions. Sigmoid was popular early on, but ReLU is now generally preferred: it tends to work better in practice and, because its gradient is 1 for every positive input (whereas the sigmoid's gradient never exceeds 0.25), it helps address the vanishing gradient problem. Softmax is typically used in the output layer for multi-class classification, while sigmoid and tanh are still used inside LSTM cells (sigmoid for the gates, tanh for the candidate cell state and the output).
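The four functions named above can be sketched in plain Python as follows. This is a minimal illustration assuming scalar `float` inputs and no framework; the derivative helpers (`sigmoid_grad`, `tanh_grad`, `relu_grad`) and the `depth` value are hypothetical names chosen for this example, not taken from the slides. Raising each peak derivative to the power of the number of layers gives a rough picture of why sigmoid gradients shrink as they are backpropagated through many layers, while ReLU gradients do not for positive inputs.

```python
import math
from typing import List


def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + e^-x), split by sign to avoid overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def tanh(x: float) -> float:
    """Hyperbolic tangent, output in (-1, 1)."""
    return math.tanh(x)


def relu(x: float) -> float:
    """Rectified linear unit: max(0, x)."""
    return max(0.0, x)


def softmax(xs: List[float]) -> List[float]:
    """Turn a list of scores into probabilities that sum to 1."""
    m = max(xs)  # subtracting the max avoids overflow and leaves the result unchanged
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical derivative helpers, for illustration only.
def sigmoid_grad(x: float) -> float:
    s = sigmoid(x)
    return s * (1.0 - s)  # largest value is 0.25, at x = 0


def tanh_grad(x: float) -> float:
    t = math.tanh(x)
    return 1.0 - t * t  # largest value is 1.0, at x = 0


def relu_grad(x: float) -> float:
    return 1.0 if x > 0 else 0.0  # 0 at x = 0 by convention


if __name__ == "__main__":
    depth = 10  # hypothetical number of stacked layers
    # Product of per-layer derivatives in the best case for each function
    print(sigmoid_grad(0.0) ** depth)  # 9.5367431640625e-07
    print(relu_grad(1.0) ** depth)     # 1.0
```

In practice, deep learning frameworks provide these directly; PyTorch, for example, exposes `torch.sigmoid`, `torch.tanh`, `torch.relu`, and `torch.softmax`.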