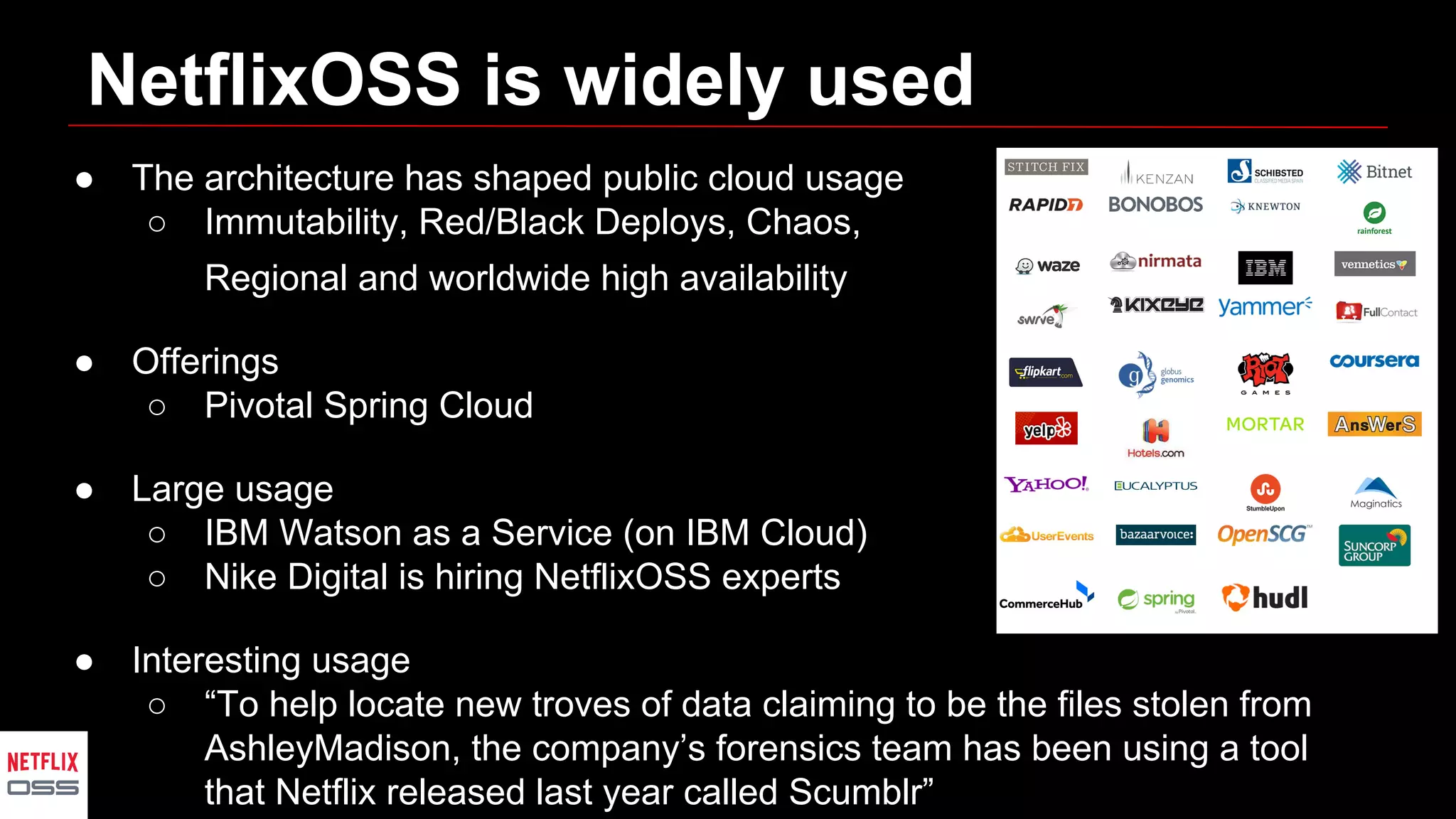

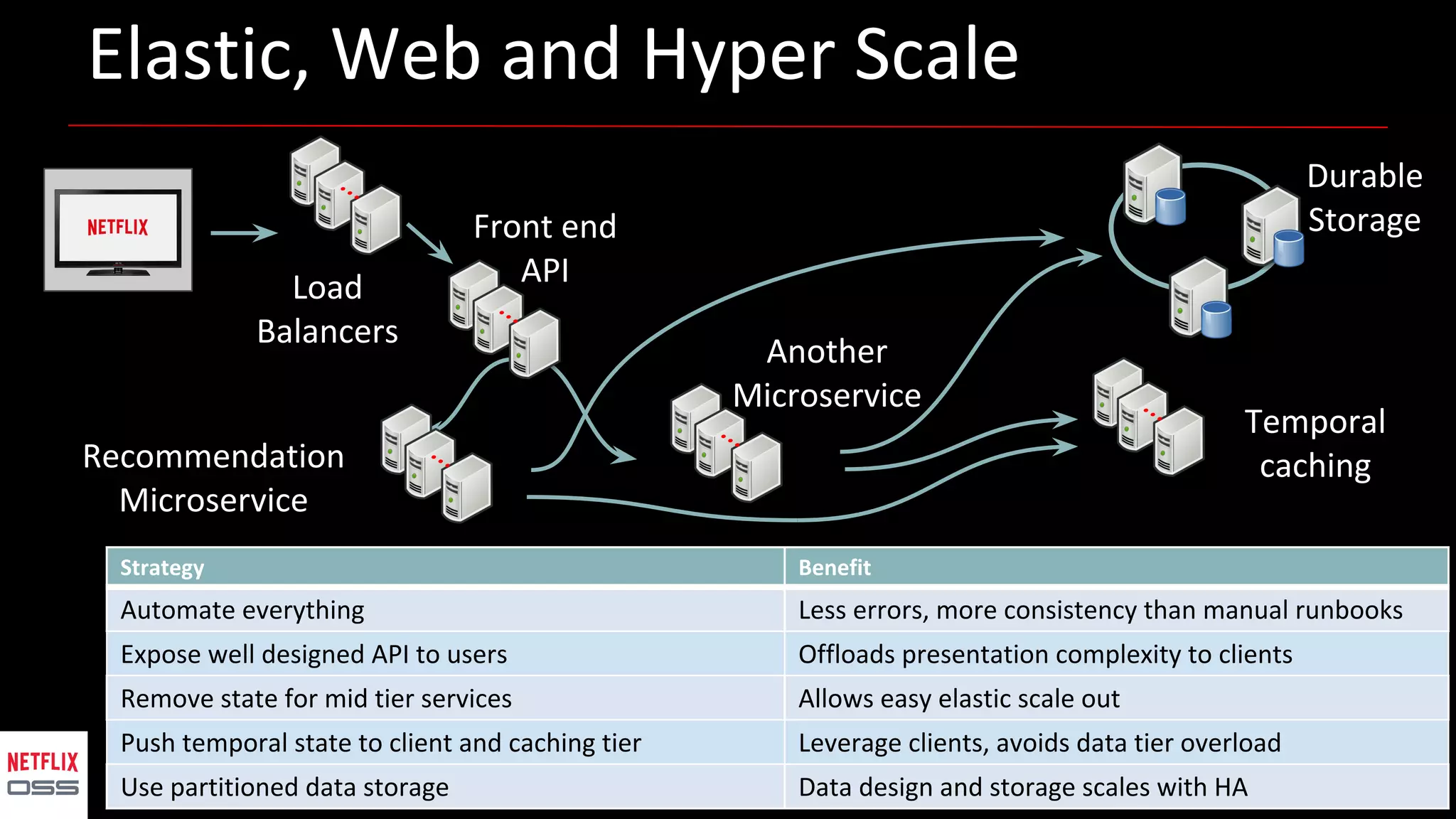

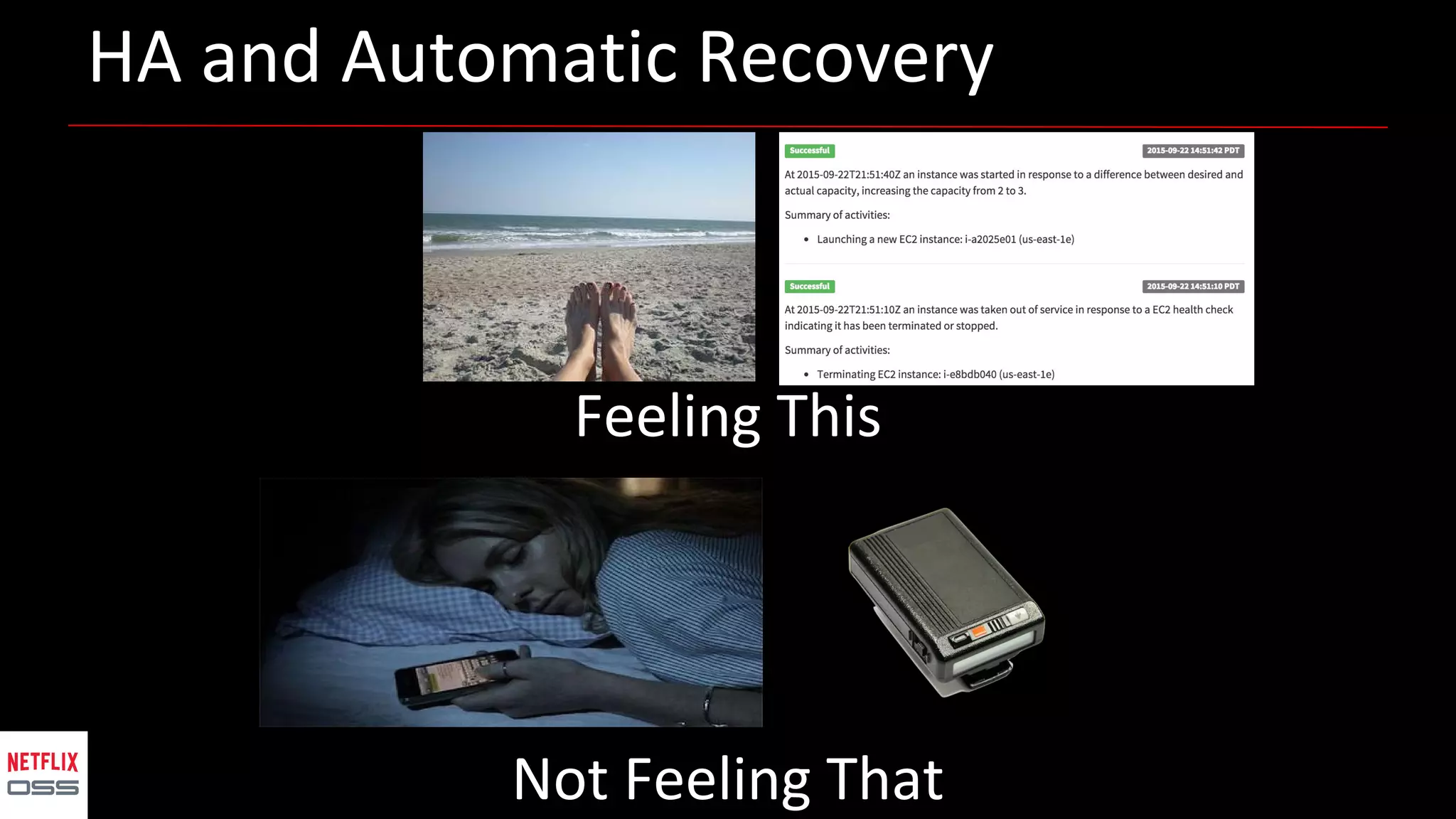

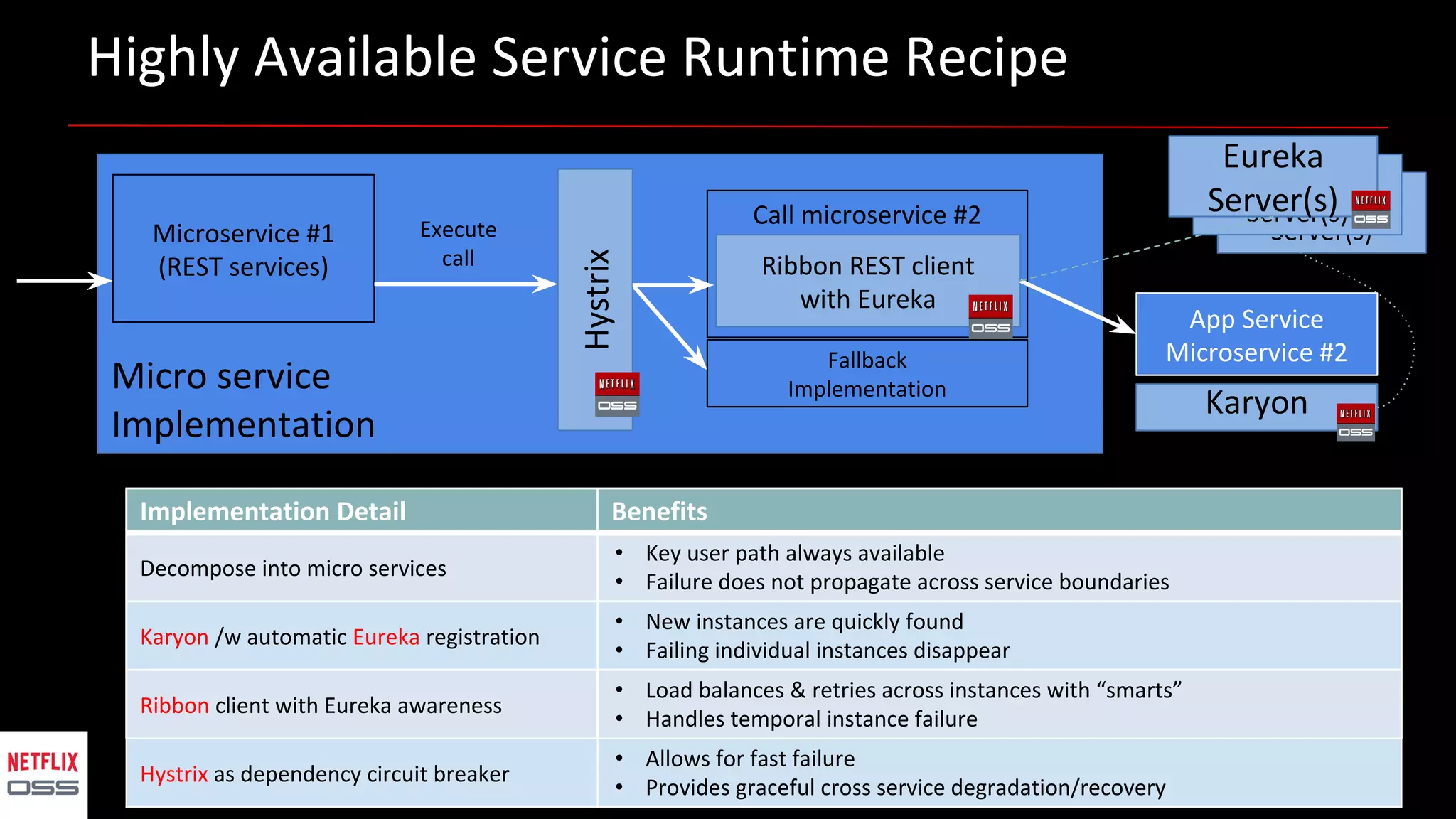

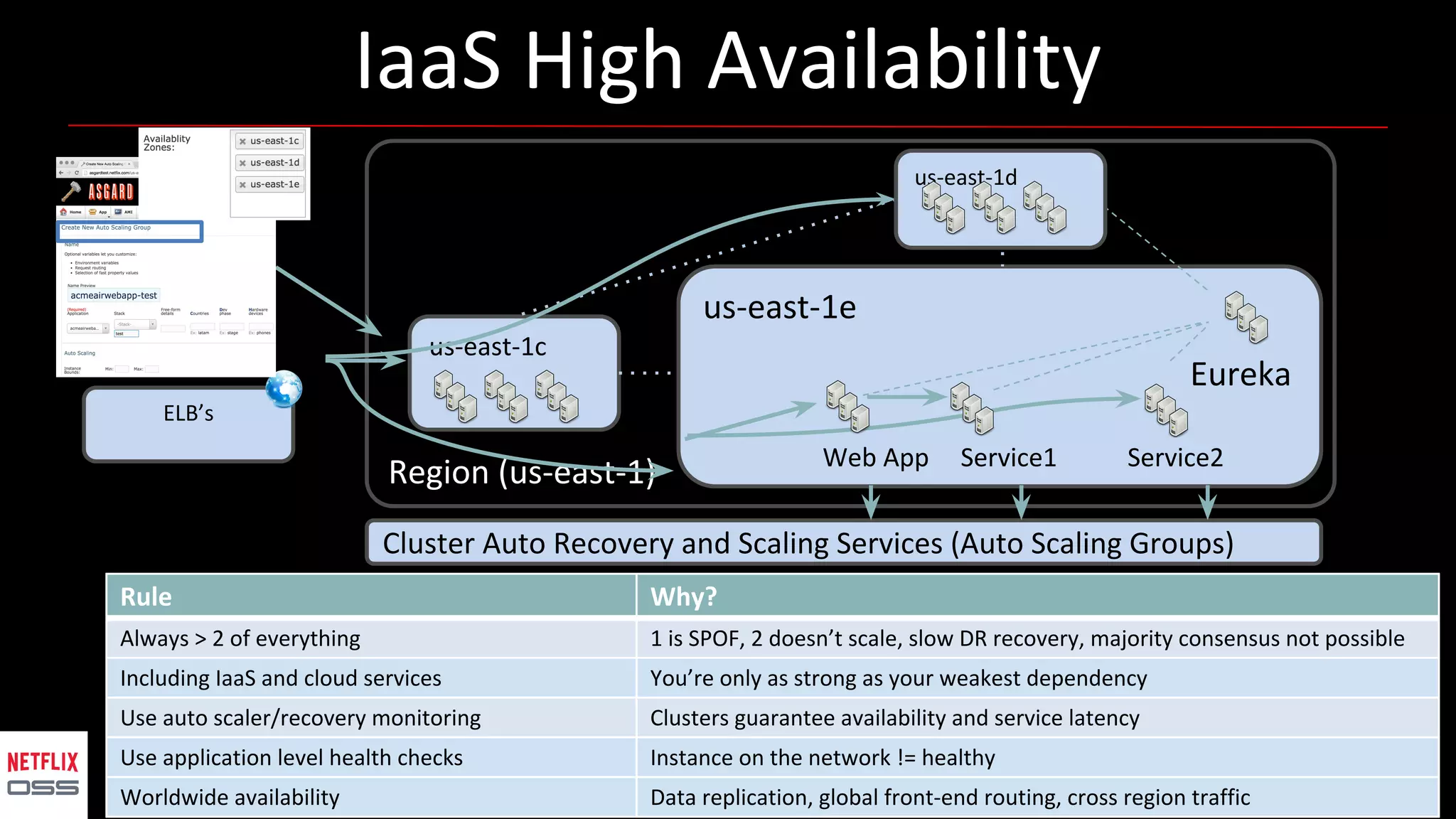

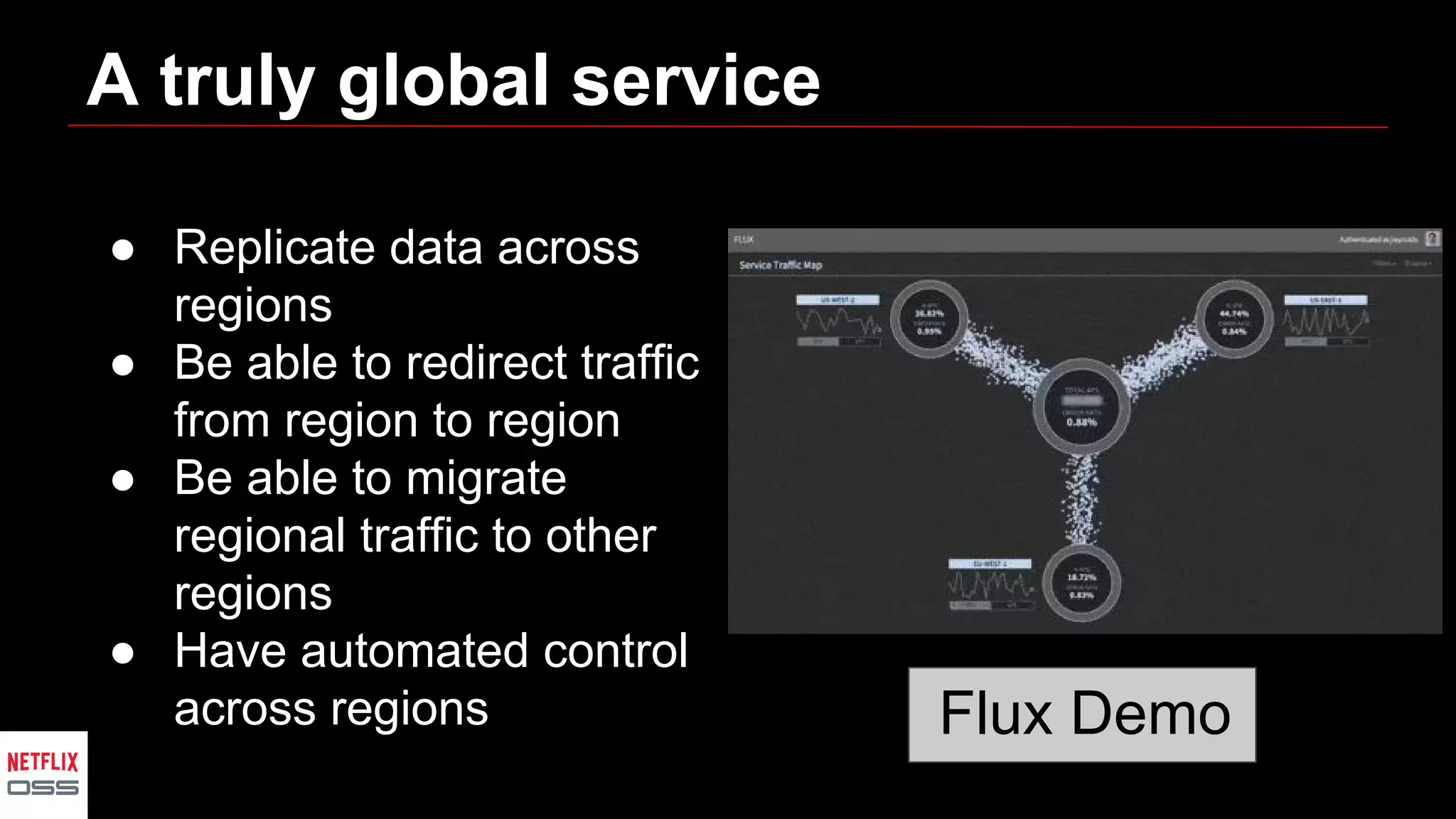

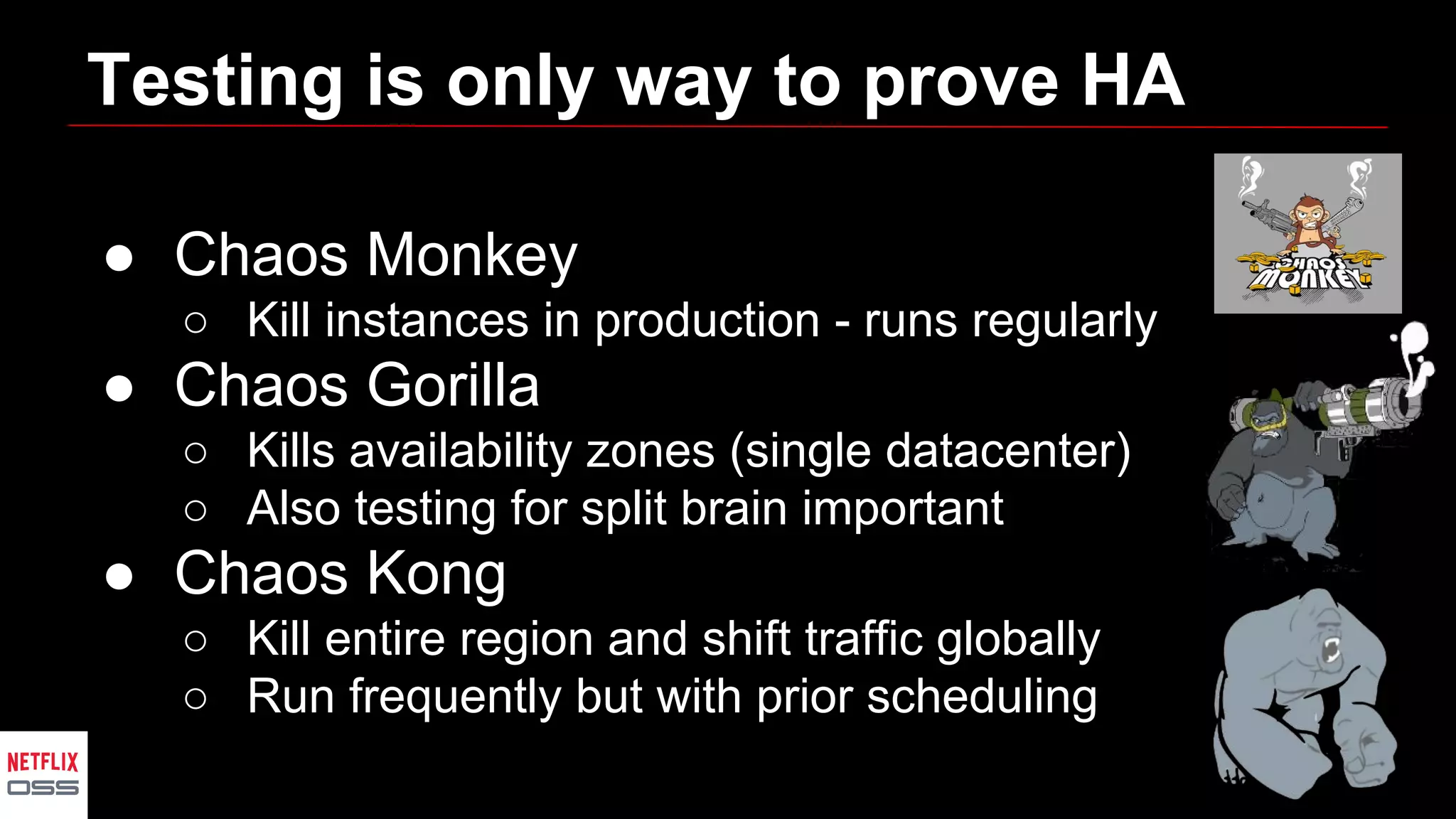

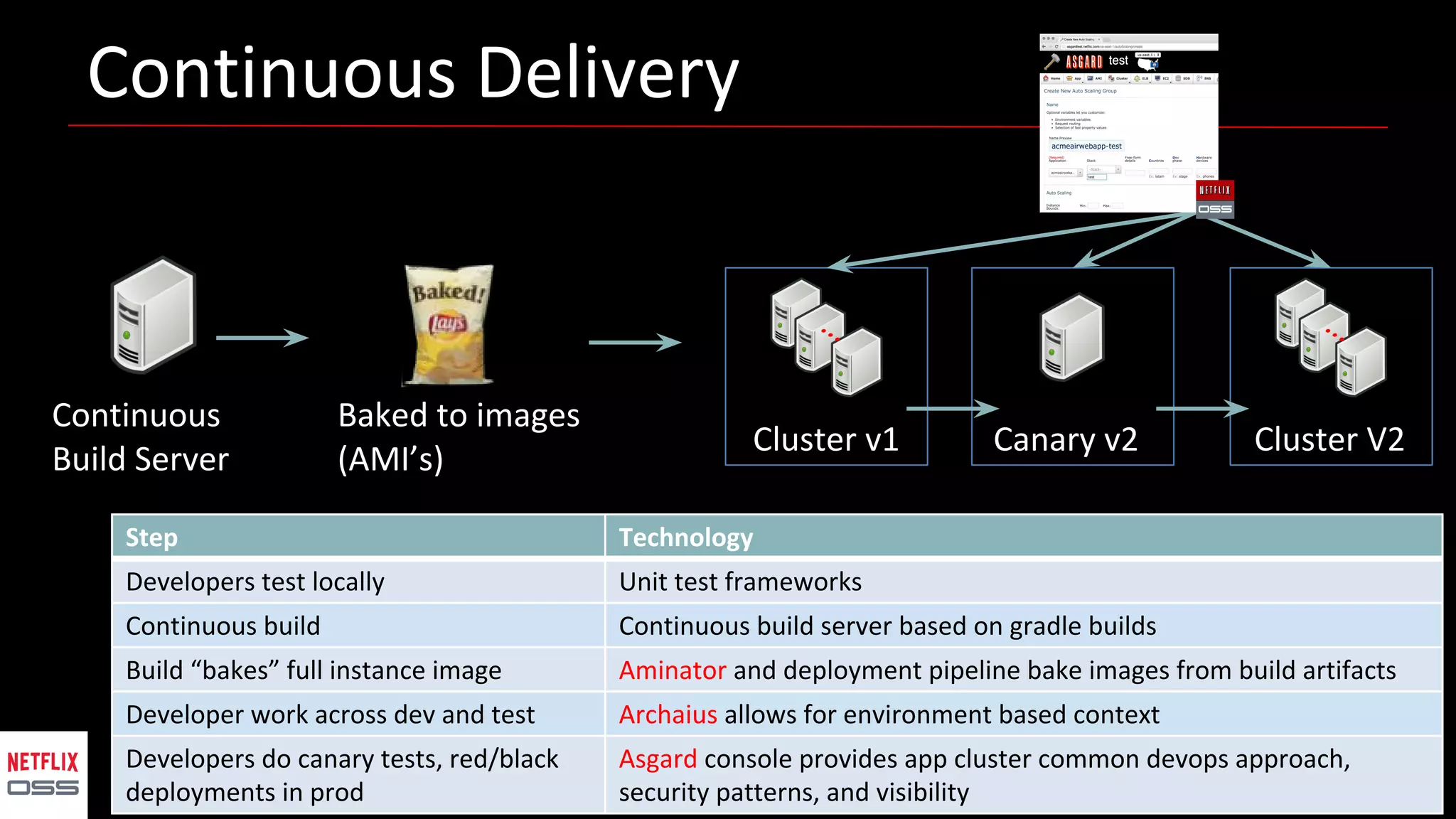

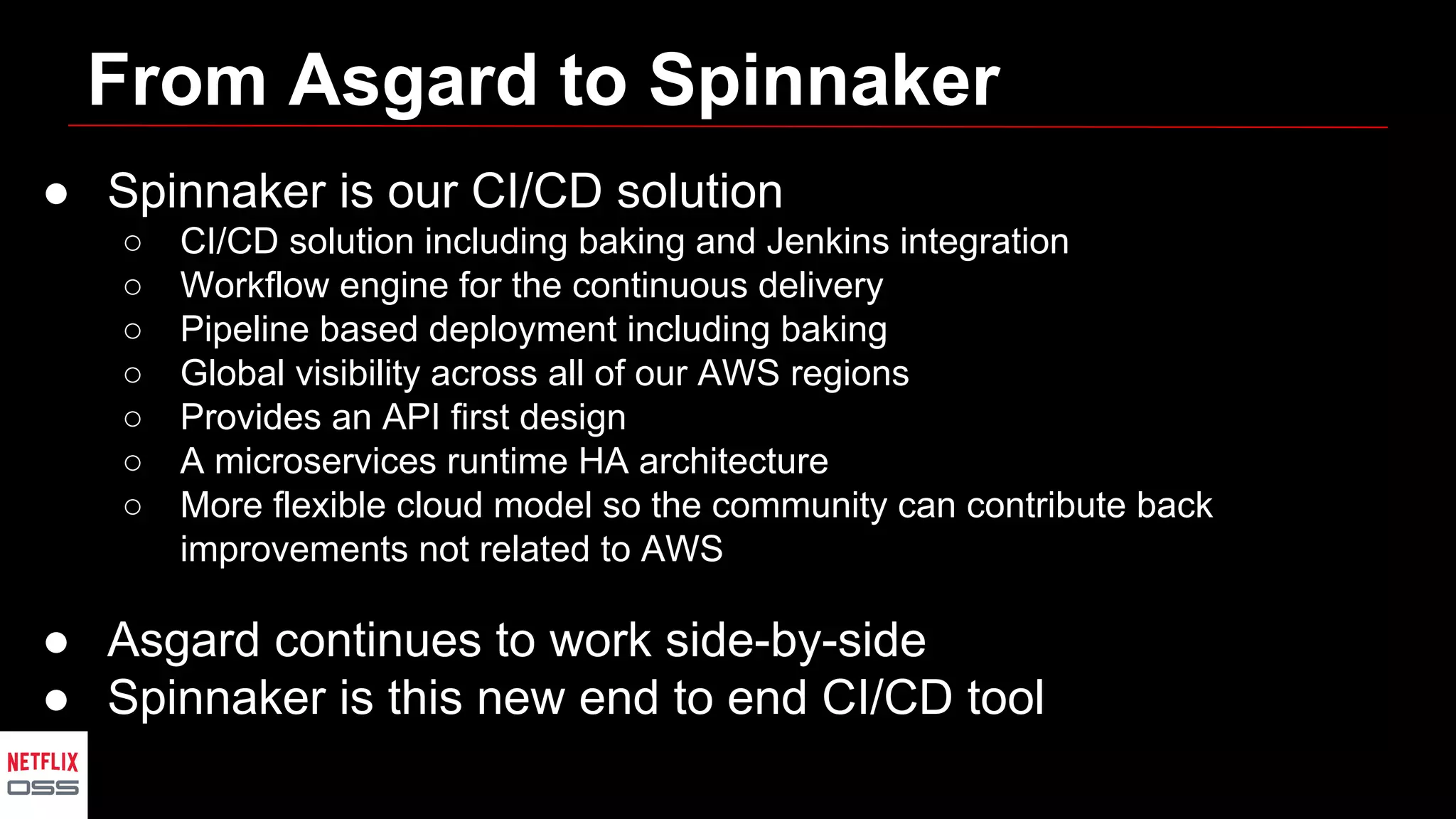

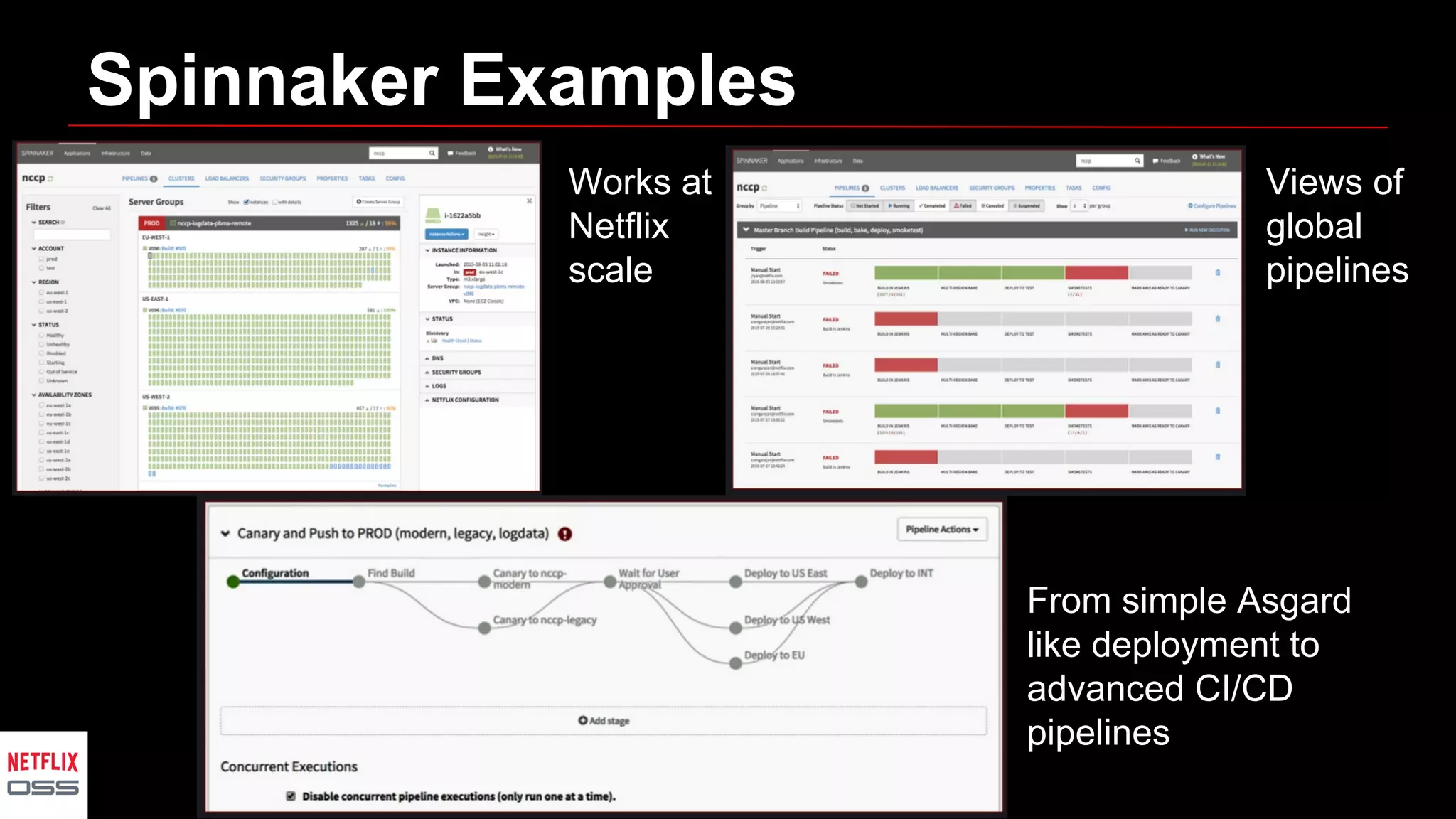

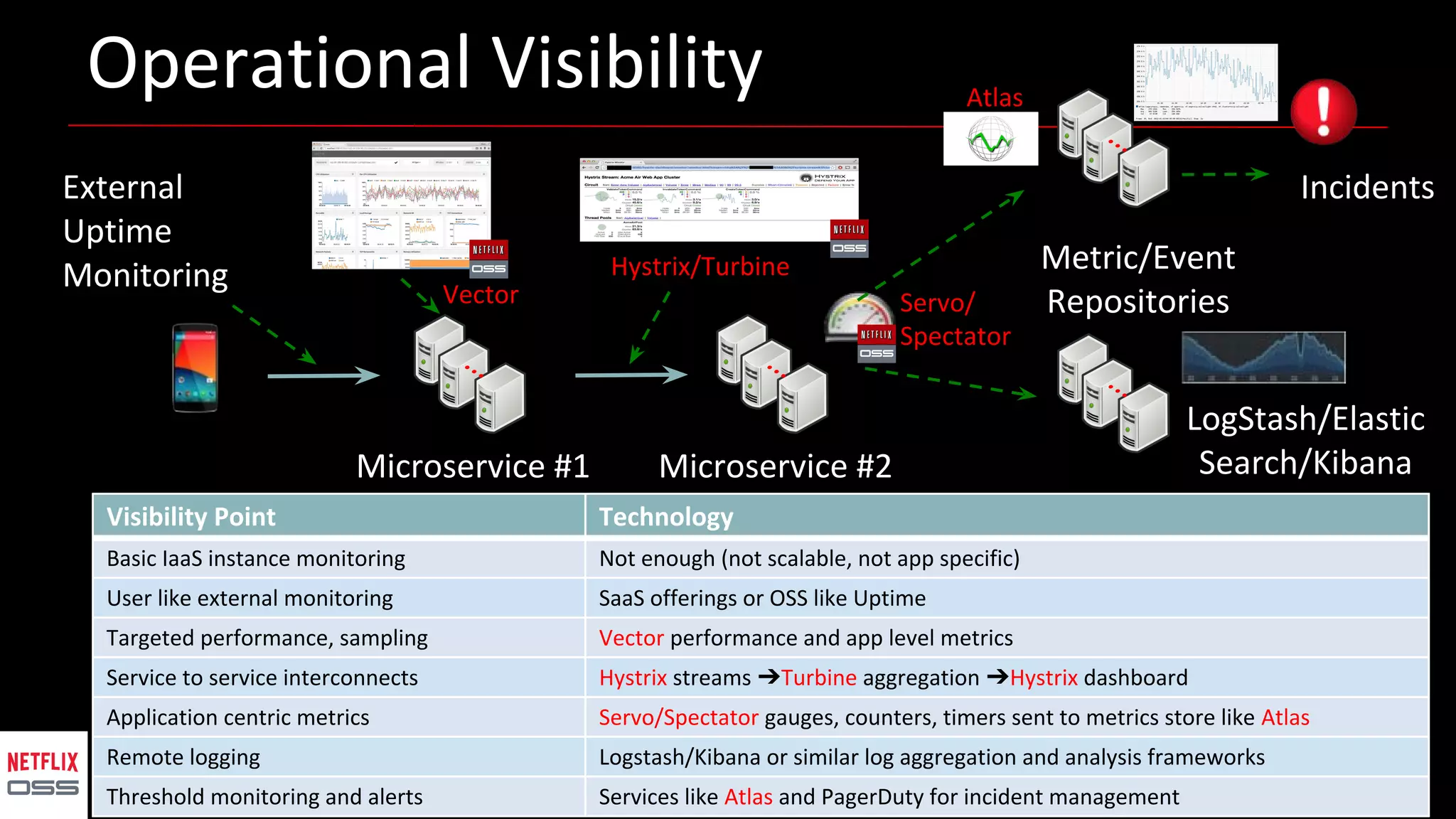

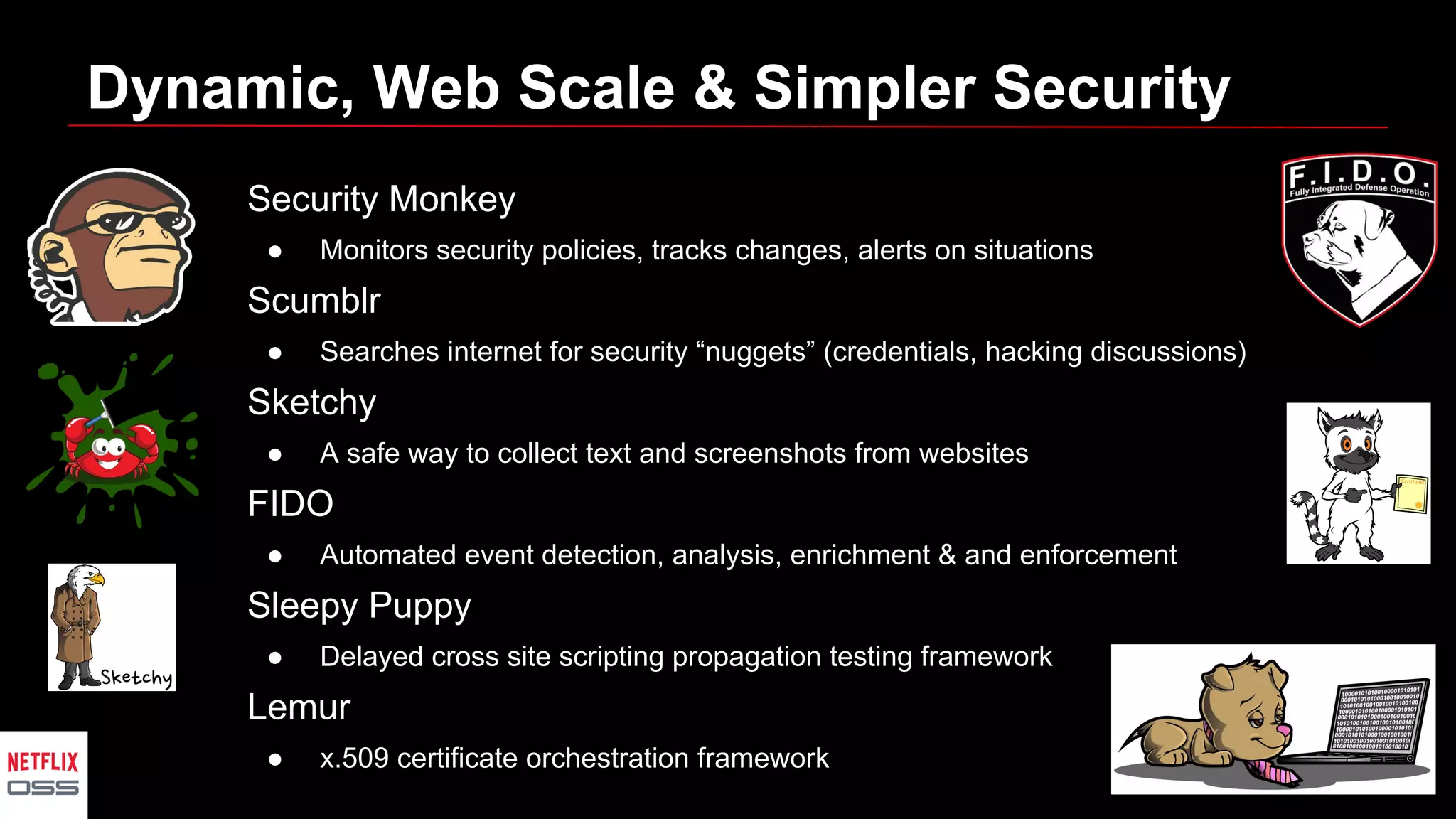

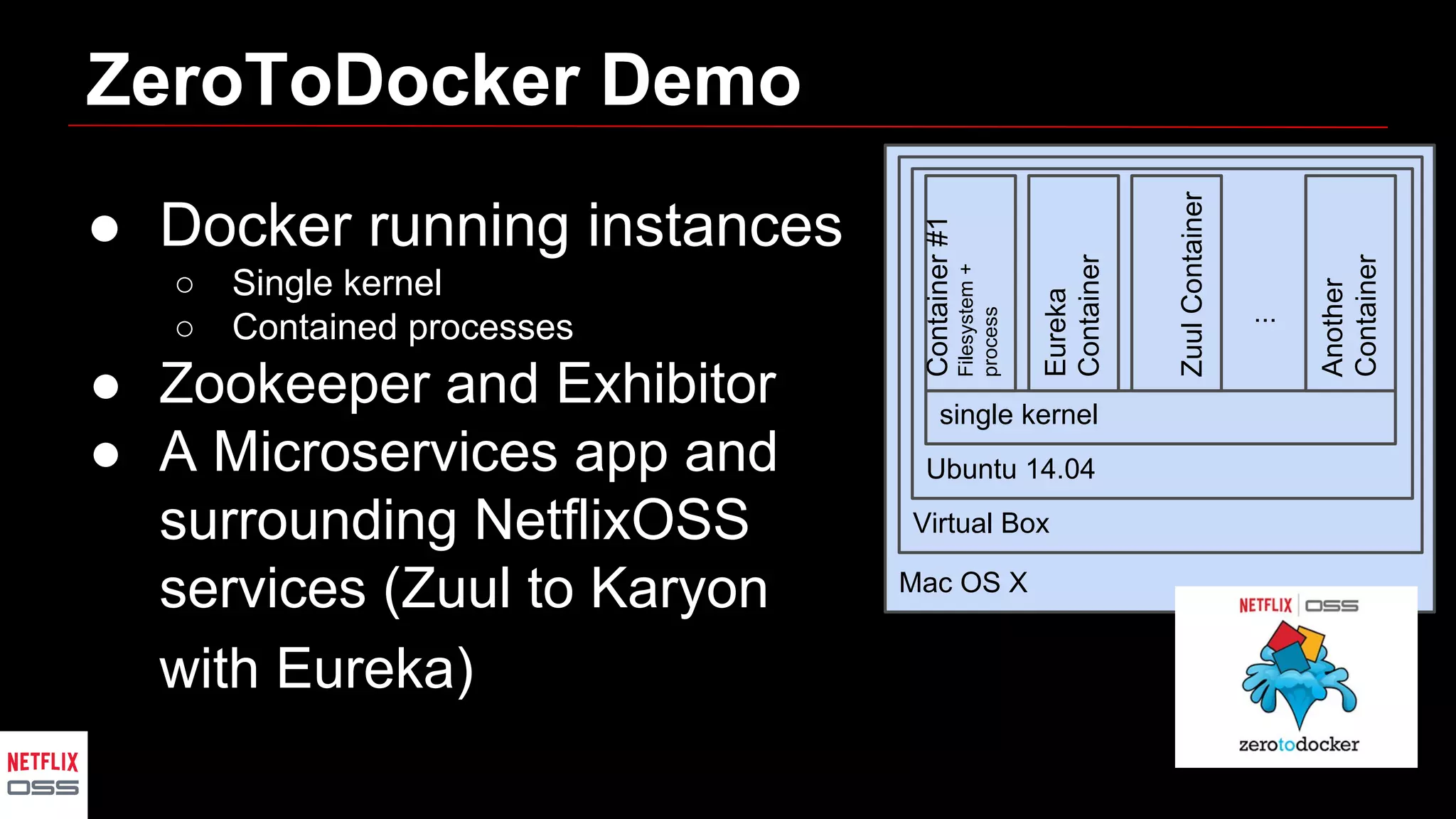

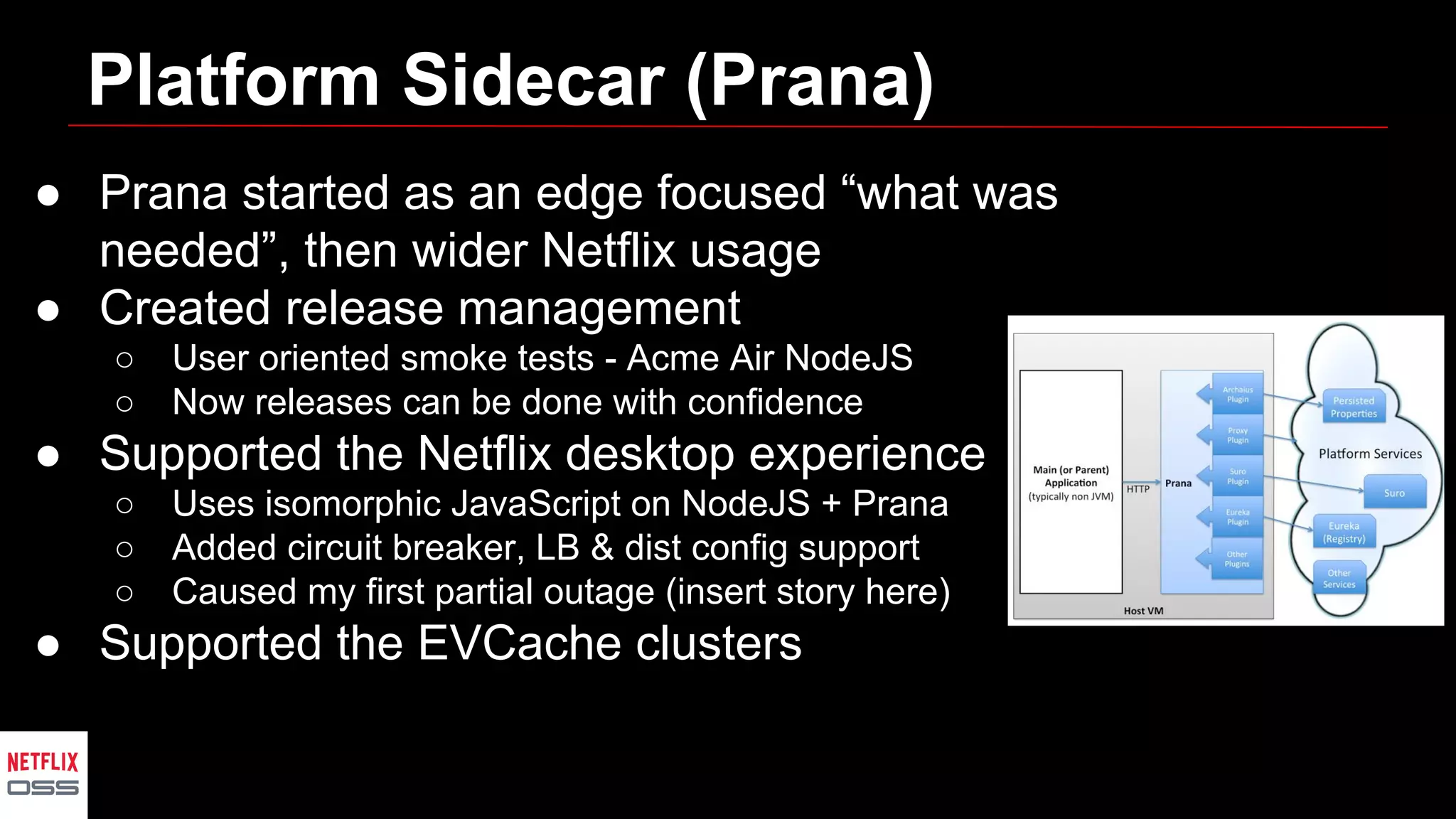

Andrew Spyker's presentation covers Netflix's open source initiatives, detailing the architecture, performance improvements, and cloud infrastructure that support its massive scale. The talk emphasizes a culture of collaboration and the use of proven external open source technologies, along with the development of tools such as Spinnaker for CI/CD. Spyker also highlights ongoing projects and the role of operational visibility and automated performance measurement in strengthening Netflix's technical capabilities.