
## 📘 **SlideShare Description: Time Complexity – A Complete Guide Based on Goodrich, Tamassia & Mount**

---

### **🎓 Overview**

This SlideShare presentation offers a deep dive into the concept of **Time Complexity** in computer science, as introduced in the textbook *Data Structures and Algorithms in Python* by **Goodrich, Tamassia, and Mount**. It explains how to analyze the efficiency of algorithms by understanding how their runtime increases with input size, and is ideal for students, educators, developers, and professionals preparing for technical interviews.

---

## 🔍 **What is Time Complexity?**

Time complexity is a **mathematical representation of how the running time of an algorithm grows with the size of the input (n)**. It’s used to evaluate algorithm performance in a system-independent way. Unlike actual running time, which can vary with hardware or language, time complexity gives a generalized, theoretical estimate.

---

### **Formal Definition**

**Time Complexity** of an algorithm = Number of basic operations executed as a function of input size `n`.

These operations can include:

* Arithmetic operations (`+`, `-`, `*`, `/`)

* Comparisons (`==`, `<`, `>`)

* Assignments (`=`)

* Function calls or returns

* Data access or traversal steps
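
To make the counting idea concrete, here is a minimal sketch that tallies basic operations while finding the largest element of a list. The function name `find_max_counting` and the counting convention (only assignments to `biggest` and comparisons are counted) are assumptions chosen for illustration, not taken from the slides.

```python
def find_max_counting(data):
    """Return (maximum, ops) for a non-empty list.

    ops counts one unit per assignment to `biggest` and one unit per
    comparison. This convention is an illustrative assumption.
    """
    biggest = data[0]
    ops = 1                      # initial assignment
    for i in range(1, len(data)):
        ops += 1                 # comparison: data[i] > biggest
        if data[i] > biggest:
            biggest = data[i]
            ops += 1             # assignment of a new maximum
    return biggest, ops


print(find_max_counting([5, 4, 3, 2, 1]))  # fewest assignments: n operations
print(find_max_counting([1, 2, 3, 4, 5]))  # most assignments: 2n - 1 operations
```

Under this convention the count always lies between `n` and `2n - 1`, so it grows linearly with the input size whichever input is supplied.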

---

### **Why Analyze Time Complexity?**

* To **predict performance** without executing code

* To **compare multiple algorithms**

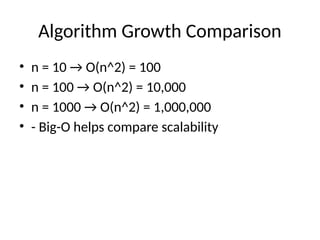

* To **determine scalability**

* To **avoid inefficient solutions** as data grows

* To **choose suitable algorithms** in real-world applications (e.g., search engines, big data, AI)

---

## ⏳ **Measuring Time Complexity**

Time complexity is usually estimated by:

1. Counting the **number of operations**

2. Ignoring **machine-dependent factors**

3. Focusing on the **dominant term** as `n → ∞`

This leads to the use of **asymptotic notation**, which describes behavior for large input sizes.
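
The effect of focusing on the dominant term can be seen numerically. The sketch below uses a hypothetical operation count `T(n) = 3n² + 10n + 50` (an assumption made up for this example) and shows that the ratio `T(n) / n²` settles toward the constant 3 as `n` grows, which is why the lower-order terms are dropped.

```python
def exact_cost(n):
    # Hypothetical operation count, chosen only for illustration.
    return 3 * n**2 + 10 * n + 50


for n in (10, 100, 1_000, 10_000):
    ratio = exact_cost(n) / n**2
    print(f"n={n:>6}  T(n)={exact_cost(n):>12}  T(n)/n^2={ratio:.4f}")
```

Because the ratio stays bounded by a constant, this `T(n)` is described as O(n²).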

---

## 🔢 **Asymptotic Notations**

### **Big-O Notation (O)**

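Gives an **asymptotic upper bound**: the running time grows no faster than the stated function, up to a constant factor. It is most often quoted for the **worst case**, but it can bound any case.

### **Big-Omega (Ω)**

Gives an **asymptotic lower bound**: the running time grows at least as fast as the stated function, up to a constant factor. It is often associated with the **best case**, but the bound and the case being analyzed are separate choices.

### **Theta (Θ)**

Gives a **tight bound**: the running time is both O and Ω of the same function. It does not by itself mean average case.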
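
In the standard definition, `f(n)` is O(`g(n)`) if there are constants `c > 0` and `n0 ≥ 1` such that `f(n) ≤ c · g(n)` for every `n ≥ n0`. The sketch below checks a candidate pair `(c, n0)` over a finite range. The helper `holds_upper_bound` and the example function `f(n) = 3n + 5` are assumptions for illustration, and a finite check is evidence rather than a proof.

```python
def holds_upper_bound(f, g, c, n0, n_max=10_000):
    """Check f(n) <= c * g(n) for every integer n in [n0, n_max]."""
    return all(f(n) <= c * g(n) for n in range(n0, n_max + 1))


def f(n):
    return 3 * n + 5   # hypothetical running-time function


def g(n):
    return n           # candidate growth rate


print(holds_upper_bound(f, g, c=4, n0=5))  # True: 3n + 5 <= 4n once n >= 5
print(holds_upper_bound(f, g, c=3, n0=1))  # False: 3n + 5 > 3n for every n
```

The first call succeeds with the witnesses `c = 4` and `n0 = 5`, which is what justifies writing `3n + 5` as O(n).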

---

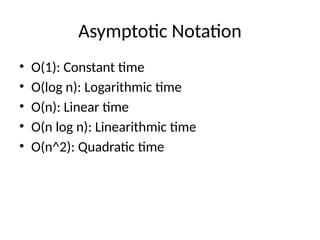

### **Common Big-O Complexities**

| Complexity | Name | Example Use Case |

| ---------- | ------------ | ------------------------------------- |

| O(1) | Constant | Accessing an array element |

| O(log n) | Logarithmic | Binary Search |

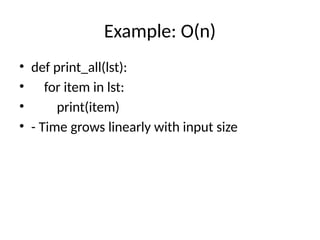

| O(n) | Linear | Iterating through an array |

| O(n log n) | Linearithmic | Merge Sort, Quick Sort (average case) |

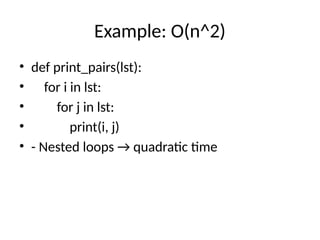

| O(n²) | Quadratic | Bubble Sort, Insertion Sort |

| O(2ⁿ) | Exponential | Recursive F
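
To get a feel for how far apart these growth rates are, the following sketch evaluates each function for a few input sizes. The helper name `growth_row` and the chosen sizes are assumptions for illustration. Base-2 logarithms are used, which only changes the values by a constant factor.

```python
import math


def growth_row(n):
    """Return the value of each common growth function at input size n."""
    return {
        "log n": math.log2(n),
        "n": n,
        "n log n": n * math.log2(n),
        "n^2": n**2,
        "2^n": 2**n,
    }


for n in (8, 16, 32, 64):
    row = growth_row(n)
    print(f"n={n:>3}", "  ".join(f"{name}={value:,.0f}" for name, value in row.items()))
```

Even at `n = 64` the exponential column is already astronomically larger than the quadratic one, which is why exponential-time algorithms are only practical for very small inputs.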

![Example: O(1)](https://image.slidesharecdn.com/timecomplexitygoodrich-250619164446-4588b873/85/Time-Complexity-in-Algorithms-Explained-with-Python-Examples-Goodrich-Tamassia-Mount-6-320.jpg)

### **Example: O(1)**

* `def get_first(lst): return lst[0]`

* Always takes the same time regardless of list size
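
For contrast with the constant-time `get_first` from the slide, here is a minimal sketch that counts loop iterations for a linear search and a binary search over the same sorted list. The functions `linear_search_steps` and `binary_search_steps` are hypothetical helpers written for this illustration.

```python
def get_first(lst):
    # O(1): a single index operation, independent of len(lst).
    return lst[0]


def linear_search_steps(lst, target):
    """Count elements examined by a left-to-right scan: O(n) in the worst case."""
    steps = 0
    for value in lst:
        steps += 1
        if value == target:
            break
    return steps


def binary_search_steps(sorted_lst, target):
    """Count probes made by binary search on a sorted list: O(log n)."""
    low, high = 0, len(sorted_lst) - 1
    steps = 0
    while low <= high:
        steps += 1
        mid = (low + high) // 2
        if sorted_lst[mid] == target:
            break
        if sorted_lst[mid] < target:
            low = mid + 1
        else:
            high = mid - 1
    return steps


data = list(range(1_000_000))
print(get_first(data))                       # one step, whatever the list size
print(linear_search_steps(data, 999_999))    # examines every element
print(binary_search_steps(data, 999_999))    # at most 20 probes for a million items
```

Doubling the list size doubles the worst-case work for the linear scan but adds only one extra probe to the binary search.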