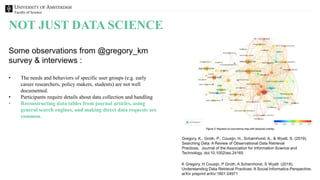

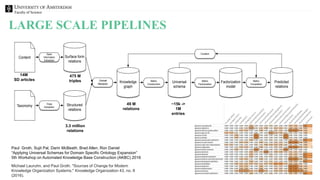

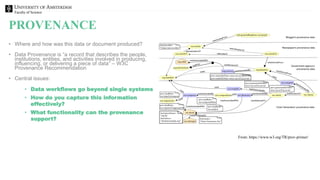

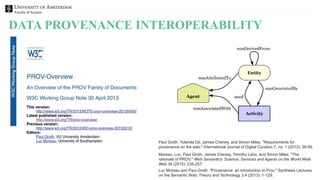

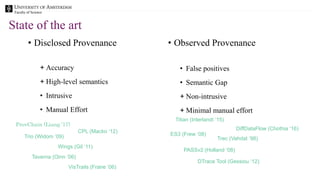

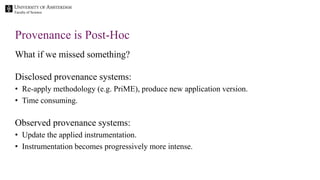

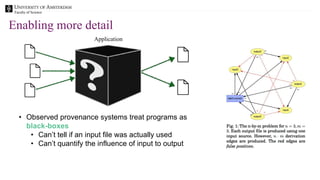

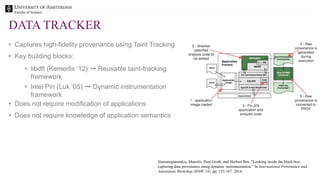

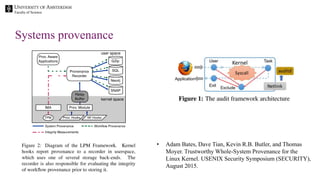

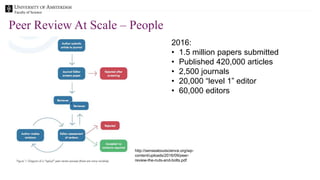

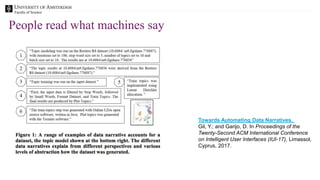

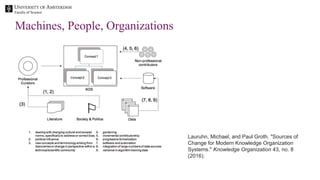

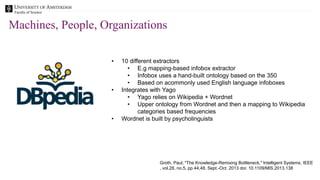

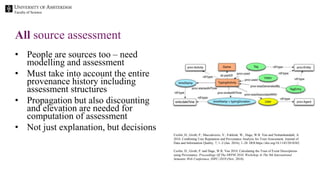

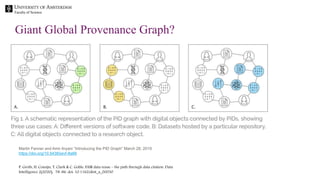

The document discusses the importance of data provenance and knowledge graphs for integrating and assessing data across scientific disciplines to address global challenges. It highlights difficulties in data workflows and transparency, as well as the need for standardized methodologies that capture data provenance effectively. It also examines the respective roles of humans and machines in data assessment, and the necessity of transparency in data handling and decision-making processes.