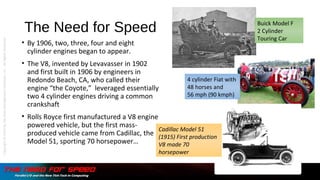

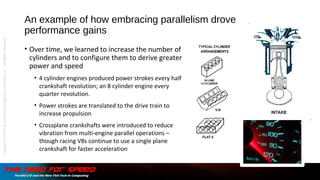

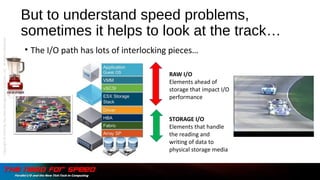

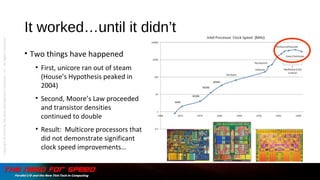

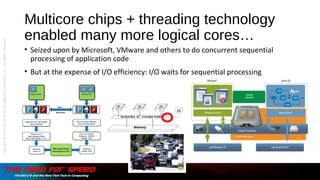

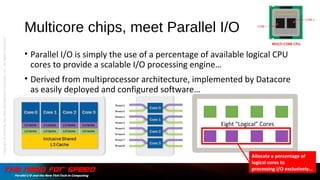

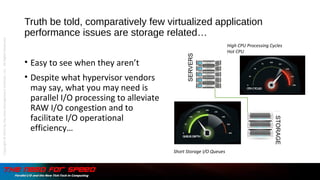

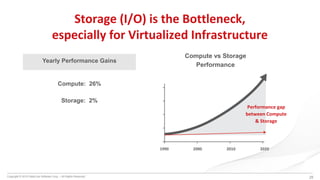

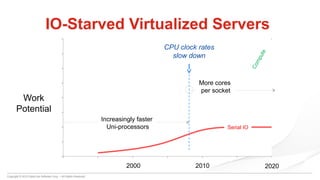

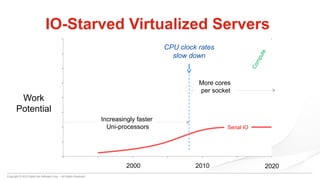

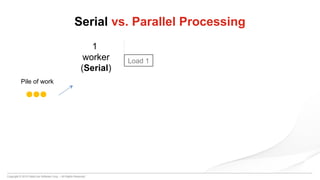

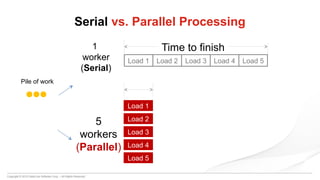

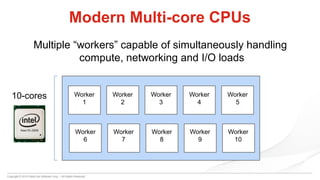

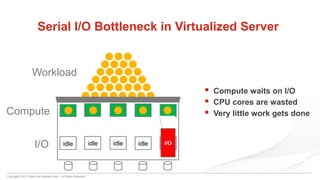

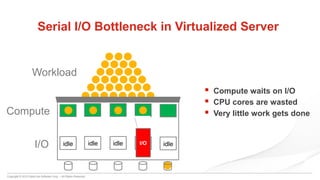

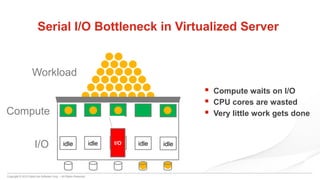

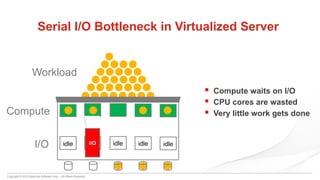

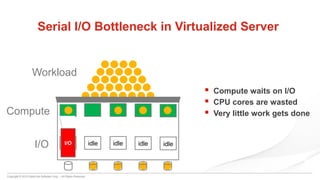

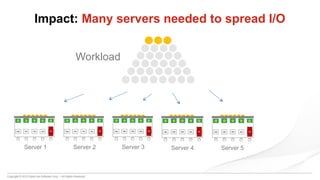

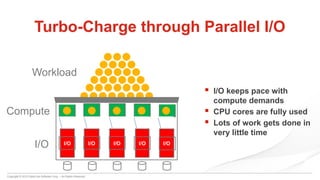

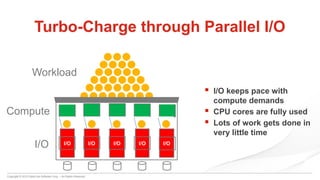

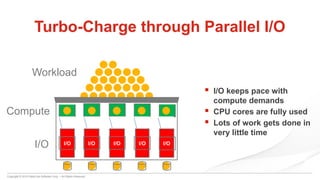

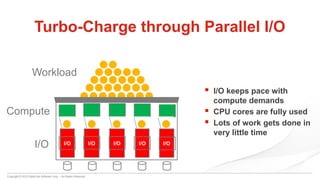

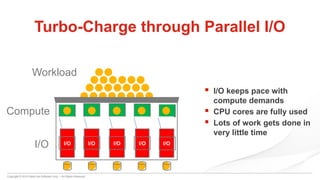

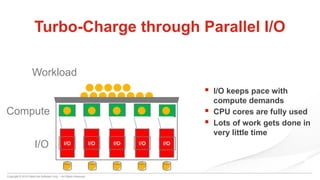

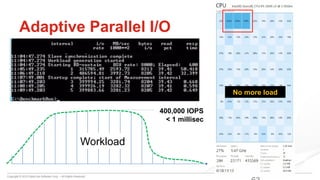

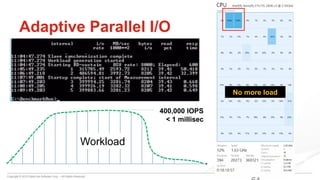

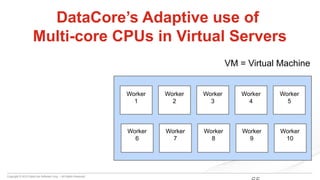

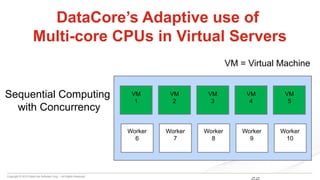

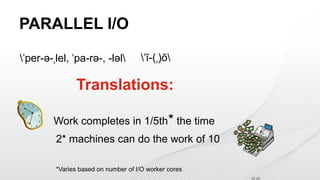

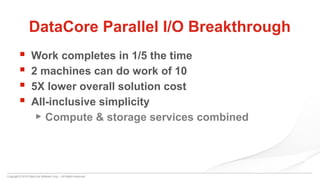

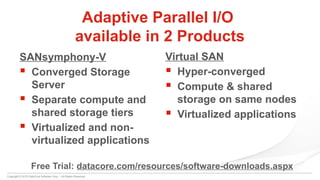

The document traces the evolution of engine performance from early automobiles to modern vehicles and draws a parallel to server virtualization and application performance. It highlights how storage has struggled to keep pace with computing demands and argues that parallel I/O can address the resulting performance problems in virtualized environments. It emphasizes that improving I/O efficiency can improve overall application performance in data management.