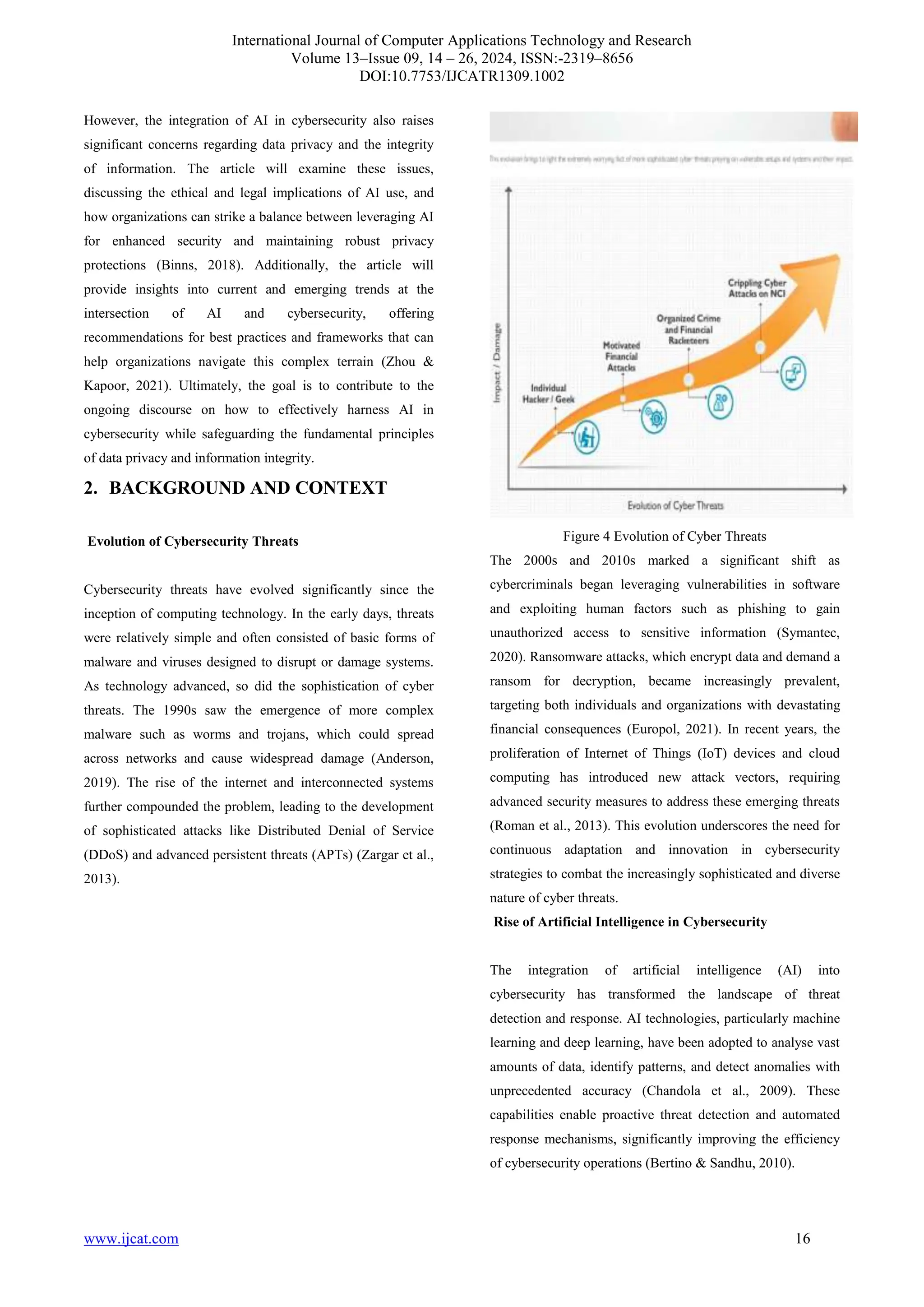

This article examines the intersection of artificial intelligence (AI) and cybersecurity: how AI strengthens data privacy and information integrity while also introducing new challenges. It emphasizes the double-edged nature of AI in cybersecurity, focusing on threat detection, data privacy regulations, and ethical considerations. It explores strategies for balancing AI innovation with the need for robust security and privacy measures in an increasingly interconnected digital landscape.