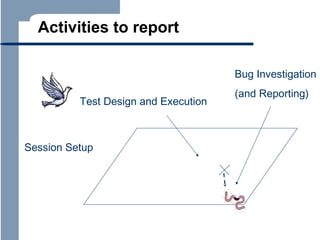

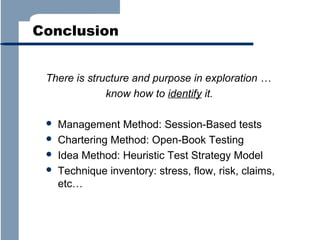

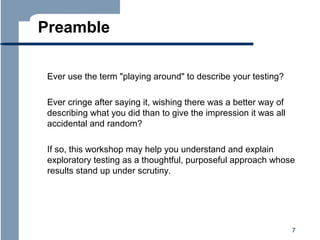

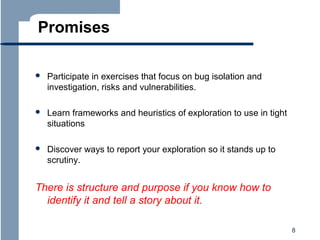

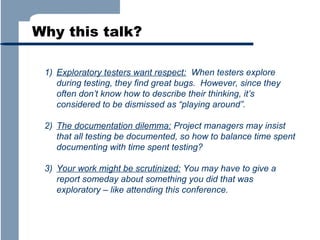

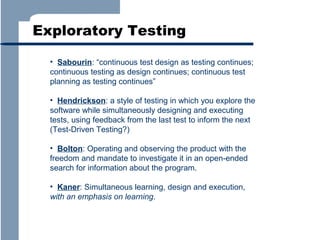

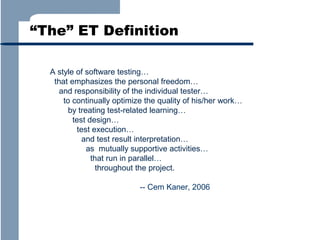

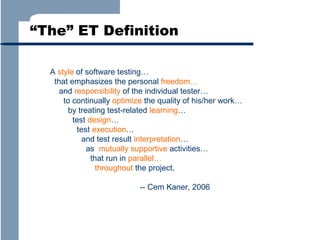

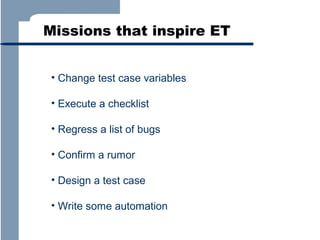

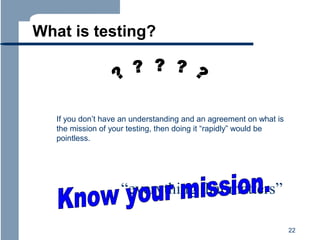

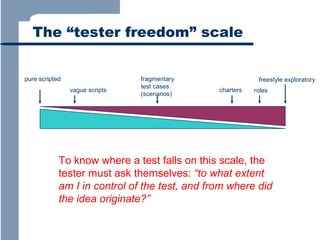

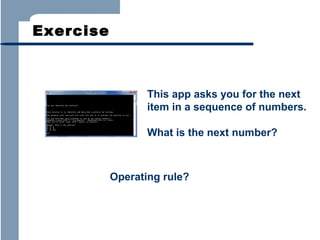

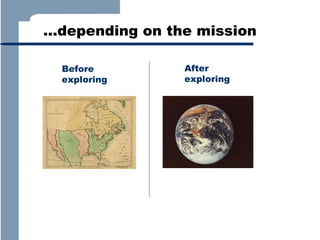

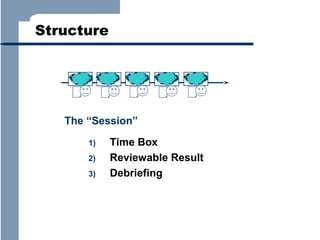

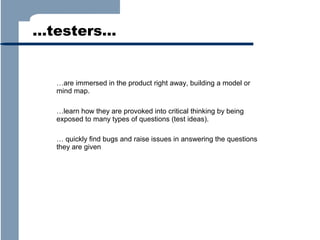

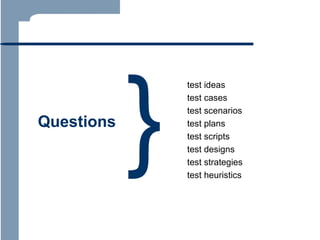

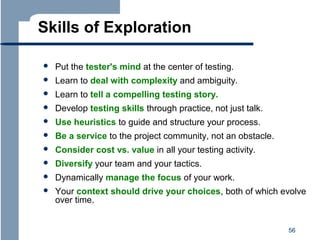

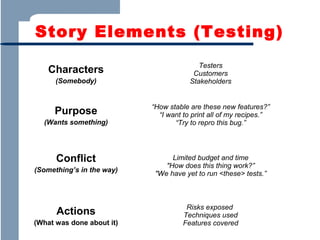

The document discusses exploratory testing and presents it as a structured, purposeful approach rather than mere "playing around." It argues that exploratory testers earn respect by learning techniques for effective bug isolation, documentation, and reporting. Key concepts include understanding the testing mission, session-based exploration, and the need for testers to adapt how they navigate the product under test as they learn more about it.

![Key Idea: Agility is about the freedom to create, learn, and adapt, as we get fast feedback. (Responding to change over following a plan)](https://image.slidesharecdn.com/tfbach-140116112535-phpapp01/85/Exploratory-Testing-Explained-64-320.jpg)