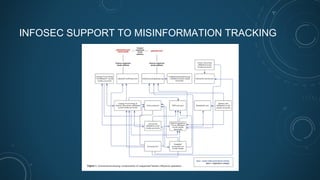

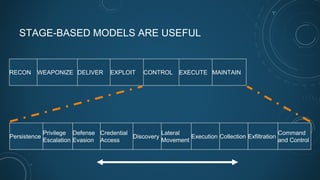

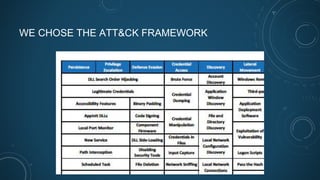

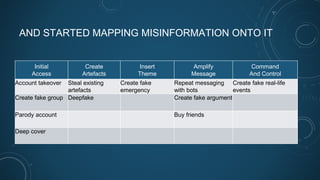

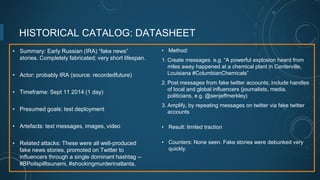

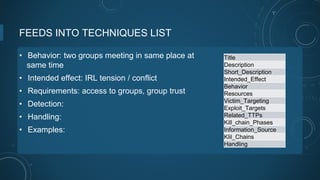

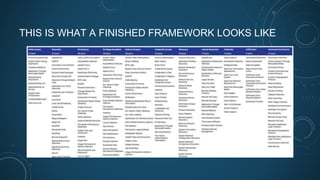

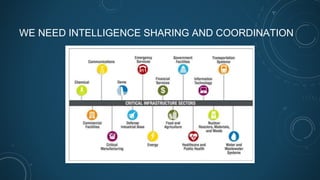

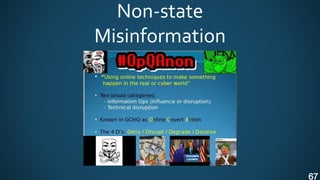

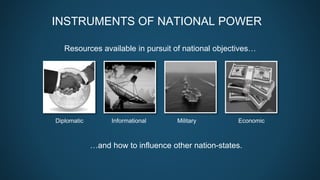

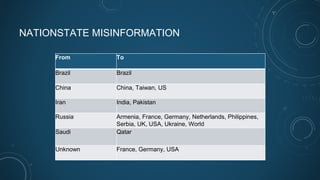

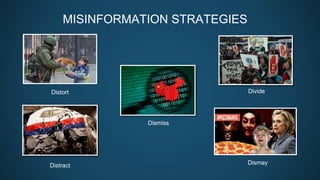

The document discusses frameworks for analyzing misinformation from an information security perspective. It begins by describing the problem of misinformation and how it has evolved. It then introduces a misinformation-focused version of the ATT&CK framework for mapping misinformation techniques, populated with historical misinformation examples and analytical approaches. The goal is to establish a common language and shared methods for understanding, tracking, and responding to misinformation operations.

![Misinformation viewed as…](https://image.slidesharecdn.com/terpbreuermisinfosecframeworkscansecwest2019-190423170608/85/Terp-breuer-misinfosecframeworks_cansecwest2019-17-320.jpg)

MISINFORMATION VIEWED AS…

• Information security (Gordon, Grugq, Rogers)

• Information operations / influence operations (Lin)

• A form of conflict (Singer, Gerasimov)

• [A social problem]

• [News source pollution]