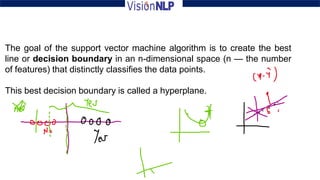

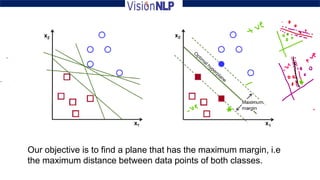

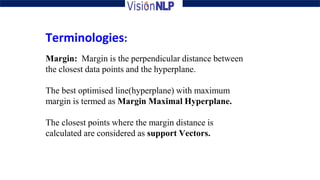

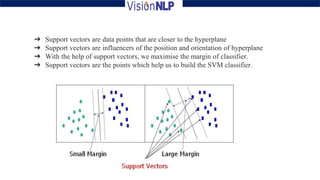

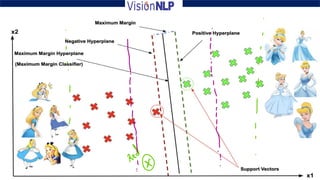

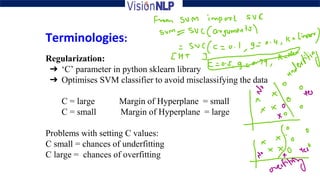

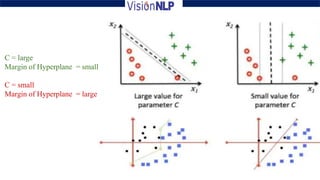

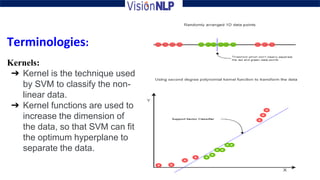

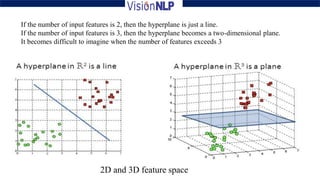

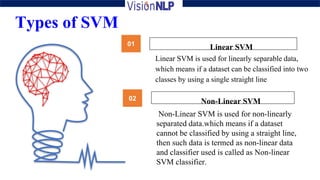

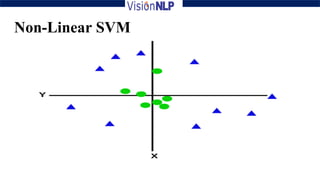

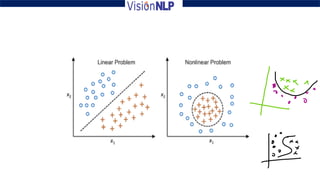

A support vector machine (SVM) is a supervised machine learning algorithm used for both classification and regression tasks. For binary classification, the goal of an SVM is to find the optimal hyperplane: the one that separates the two classes while maximizing the margin, that is, the distance from the hyperplane to the nearest data points of either class. Those nearest points are called support vectors, and they alone determine where the hyperplane lies. SVMs tend to work well on smaller datasets with high-dimensional features.
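To make the idea of a margin concrete, here is a minimal sketch in plain Python. It does not train an SVM. It only compares two hand-picked separating lines on a toy 2-D dataset and reports which one has the larger margin and which points act as its support vectors. The dataset, the candidate lines, and the helper names (`signed_distances`, `margin`, `support_vectors`) are all assumptions made up for this illustration.

```python
import math

# Toy 2-D dataset (hypothetical values chosen for illustration).
X = [(2, 2), (3, 3), (3, 1), (0, 0), (1, 0), (0, 1)]
y = [1, 1, 1, -1, -1, -1]  # class labels: +1 or -1


def signed_distances(w, b):
    """Distance of each point to the line w.x + b = 0, positive if correctly classified."""
    norm = math.hypot(*w)
    return [label * (w[0] * p[0] + w[1] * p[1] + b) / norm
            for p, label in zip(X, y)]


def margin(w, b):
    """Margin of a separating line: the distance to its closest point."""
    return min(signed_distances(w, b))


def support_vectors(w, b, tol=1e-9):
    """Points that lie at exactly the margin distance from the line."""
    dists = signed_distances(w, b)
    m = min(dists)
    return [p for p, d in zip(X, dists) if abs(d - m) <= tol]


# Two hand-picked candidate lines (assumptions, not learned from the data).
candidates = {
    "vertical": ((1, 0), -1.5),   # the line x = 1.5
    "diagonal": ((1, 1), -2.5),   # the line x + y = 2.5
}

# Pick the candidate with the larger margin.
best = max(candidates, key=lambda name: margin(*candidates[name]))

for name, (w, b) in candidates.items():
    print(name, "margin:", round(margin(w, b), 4))
print("larger margin:", best)
print("support vectors:", support_vectors(*candidates[best]))
```

Both lines separate the two classes, but the diagonal one leaves more room on either side, which is the property an SVM optimizes for. A real SVM searches over all possible hyperplanes rather than a fixed list of candidates, typically through a library implementation.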