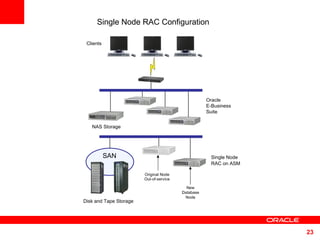

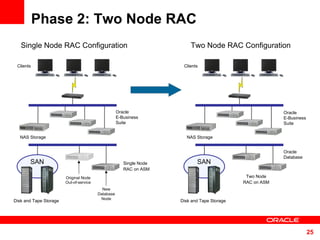

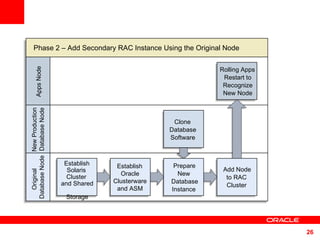

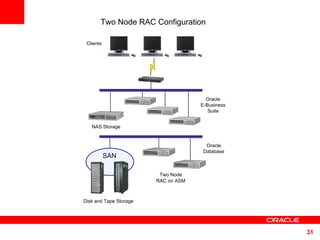

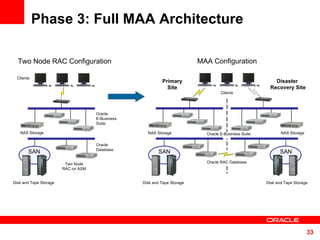

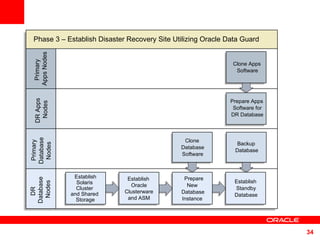

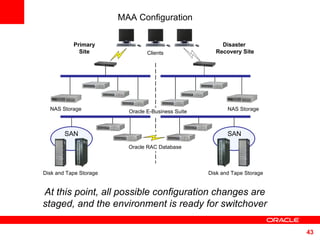

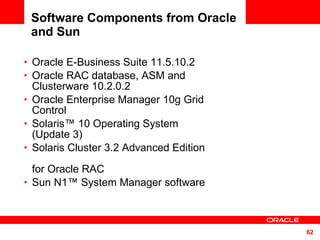

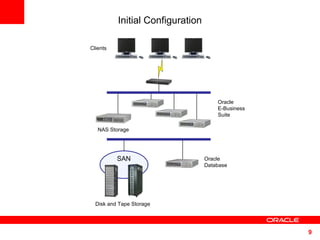

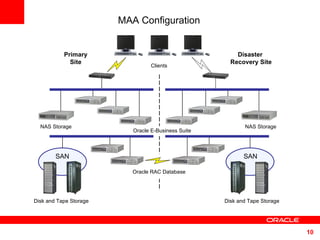

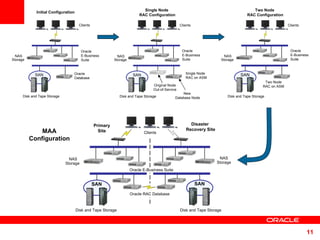

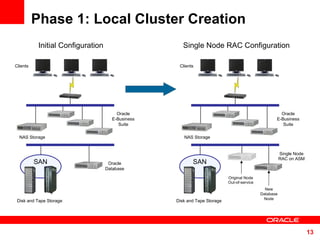

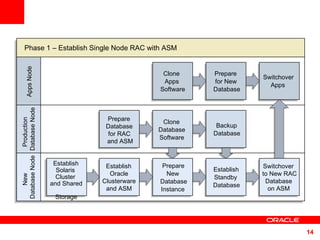

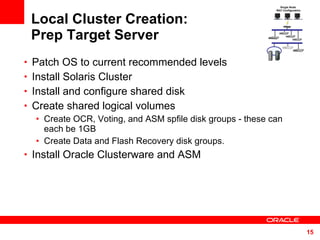

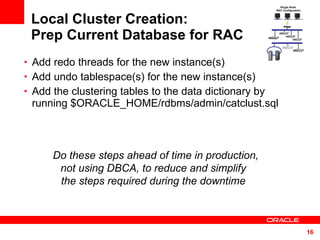

The document outlines Oracle's Maximum Availability Architecture (MAA) approach for transitioning Oracle E-Business Suite applications to a highly available configuration on Sun platforms. It describes a three-phase process: establish a local cluster, expand to a two-node RAC configuration, and finally implement a full disaster recovery site. The goal is to minimize downtime during implementation by cloning and by staging configuration changes ahead of planned switchovers.

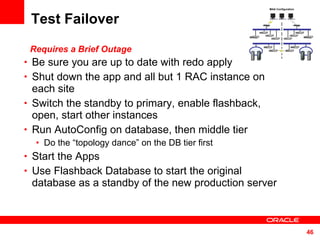

![Switchover to Single Instance RAC (Single Node RAC Configuration)](https://image.slidesharecdn.com/sun-oracle-maa-060407-110406024539-phpapp02/85/Sun-oracle-maa-060407-22-320.jpg)

Switchover to Single Instance RAC:

- Be sure you are up to date with redo apply
- Shut down the apps
- [0:43] Switch to the local standby
- [0:01] Enable flashback
- [0:05] Open the new primary database instance
- [0:02] Remove the old application topology
- [1:34] Run AutoConfig on the database server
- [0:02] Bounce the DB listener to get the correct services
- [2:50] Run AutoConfig on the middle tiers (in parallel)
- Start the application, pointing to your single-node RAC instance
- Add the single instance to the Clusterware configuration
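To get a rough sense of the switchover window, the bracketed timings on this slide can be added up. The sketch below is only illustrative. It assumes the timings are in minutes:seconds (the slide does not state the unit) and that the timed steps run one after another. The helper names are hypothetical and not part of any Oracle tooling.

```python
# Bracketed timings copied from the slide, in the order they appear.
# ASSUMPTION: each value is minutes:seconds; the slide does not state the unit.
SLIDE_TIMINGS = ["0:43", "0:01", "0:05", "0:02", "1:34", "0:02", "2:50"]


def to_seconds(stamp: str) -> int:
    """Convert an 'M:SS' string to a number of seconds."""
    minutes, seconds = stamp.split(":")
    return int(minutes) * 60 + int(seconds)


def format_mss(total: int) -> str:
    """Format a number of seconds back into 'M:SS'."""
    minutes, seconds = divmod(total, 60)
    return f"{minutes}:{seconds:02d}"


# ASSUMPTION: the timed steps are sequential, so their durations simply add.
total_seconds = sum(to_seconds(t) for t in SLIDE_TIMINGS)
print(format_mss(total_seconds))  # prints 5:17
```

Under these assumptions the timed steps total a little over five minutes. The untimed steps, such as shutting down and restarting the application, are not included in that figure.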