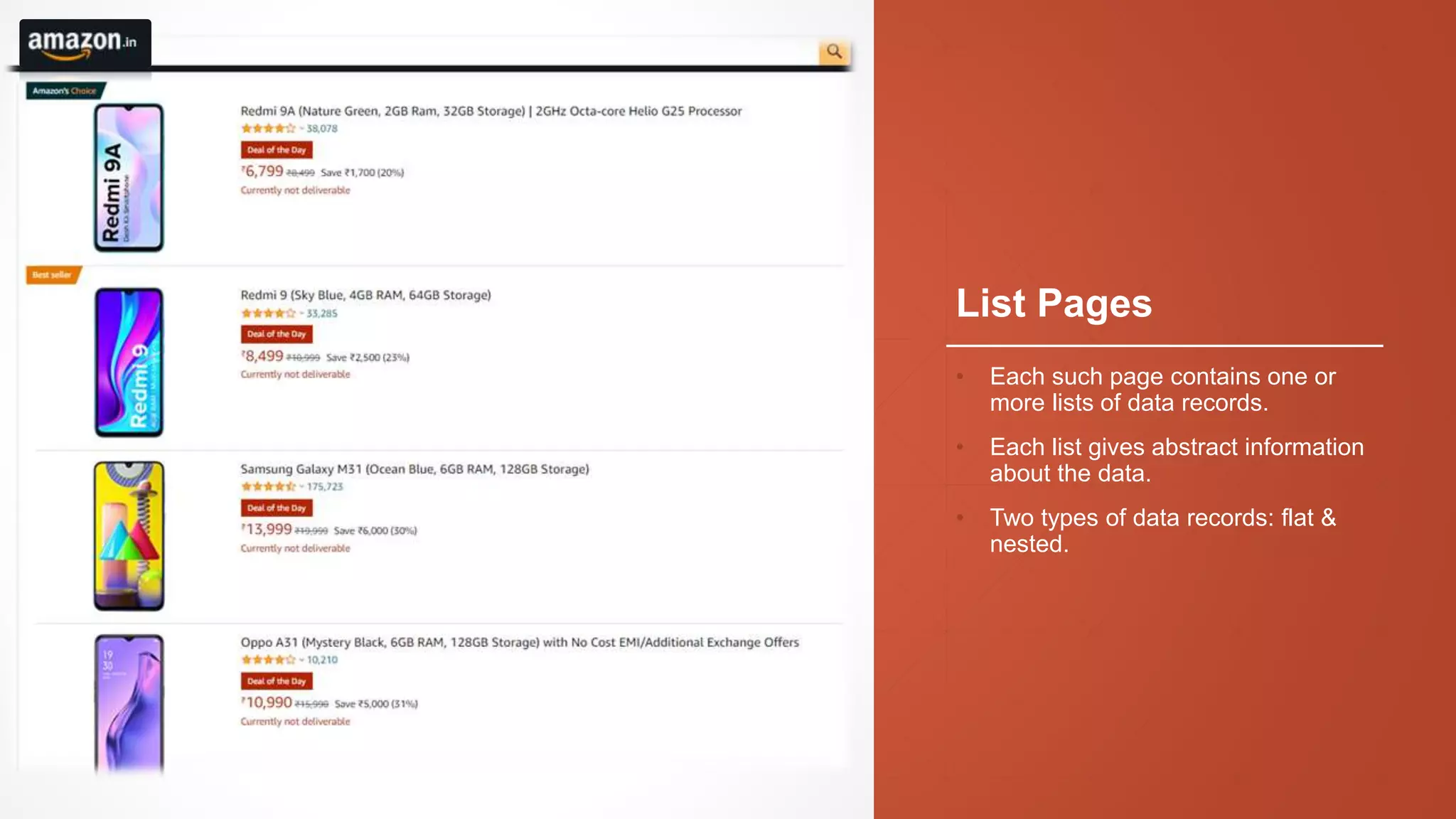

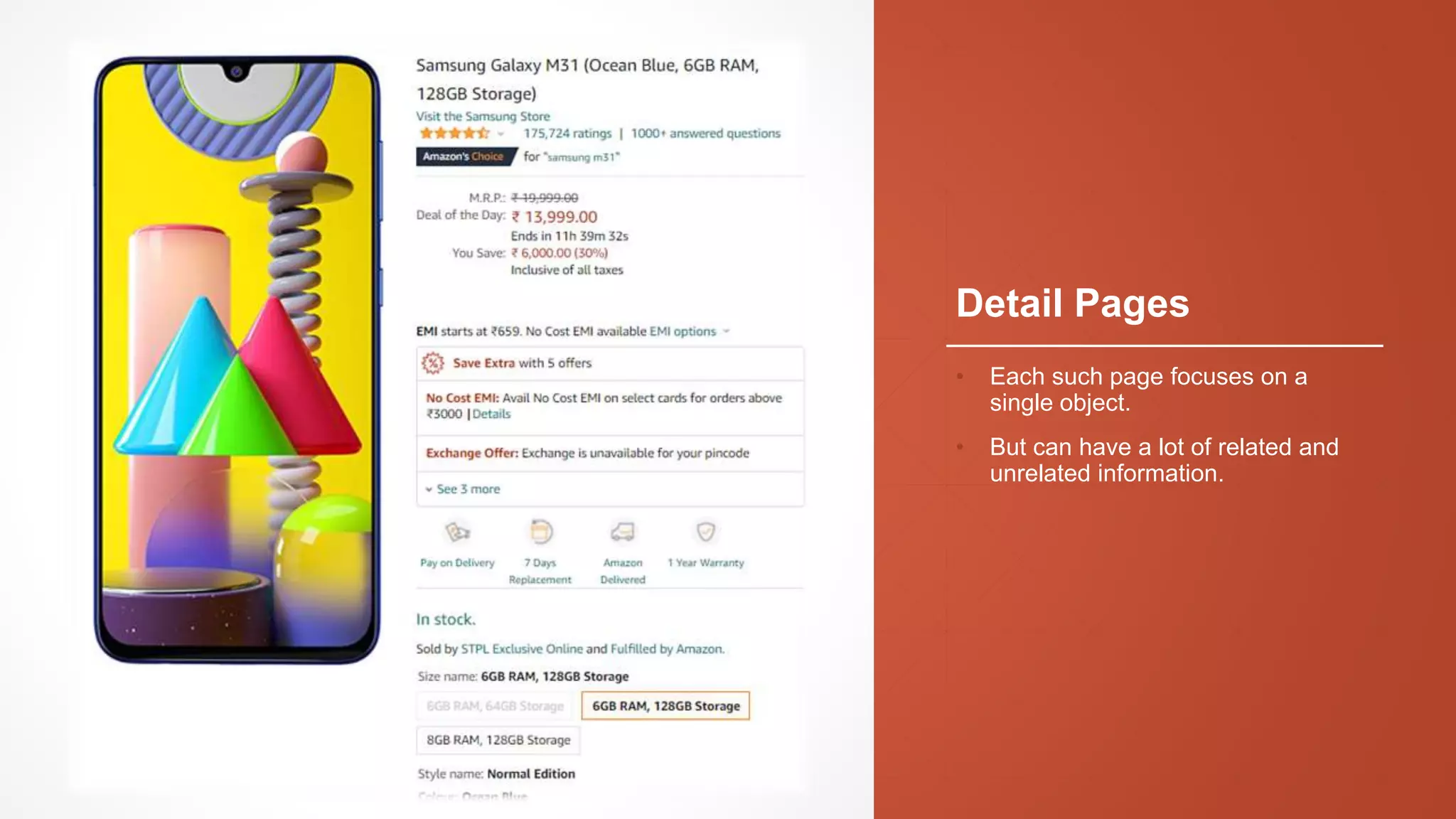

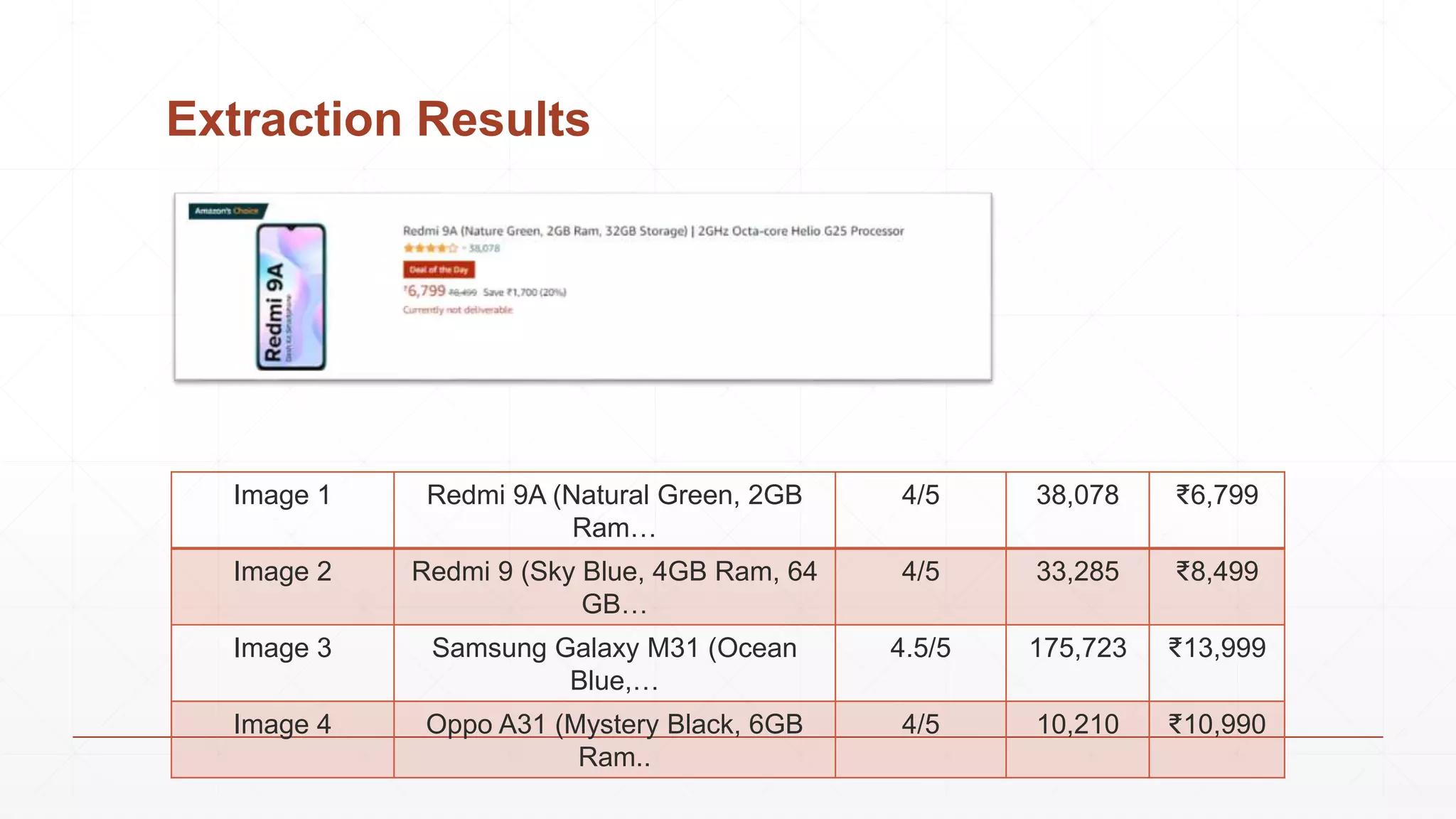

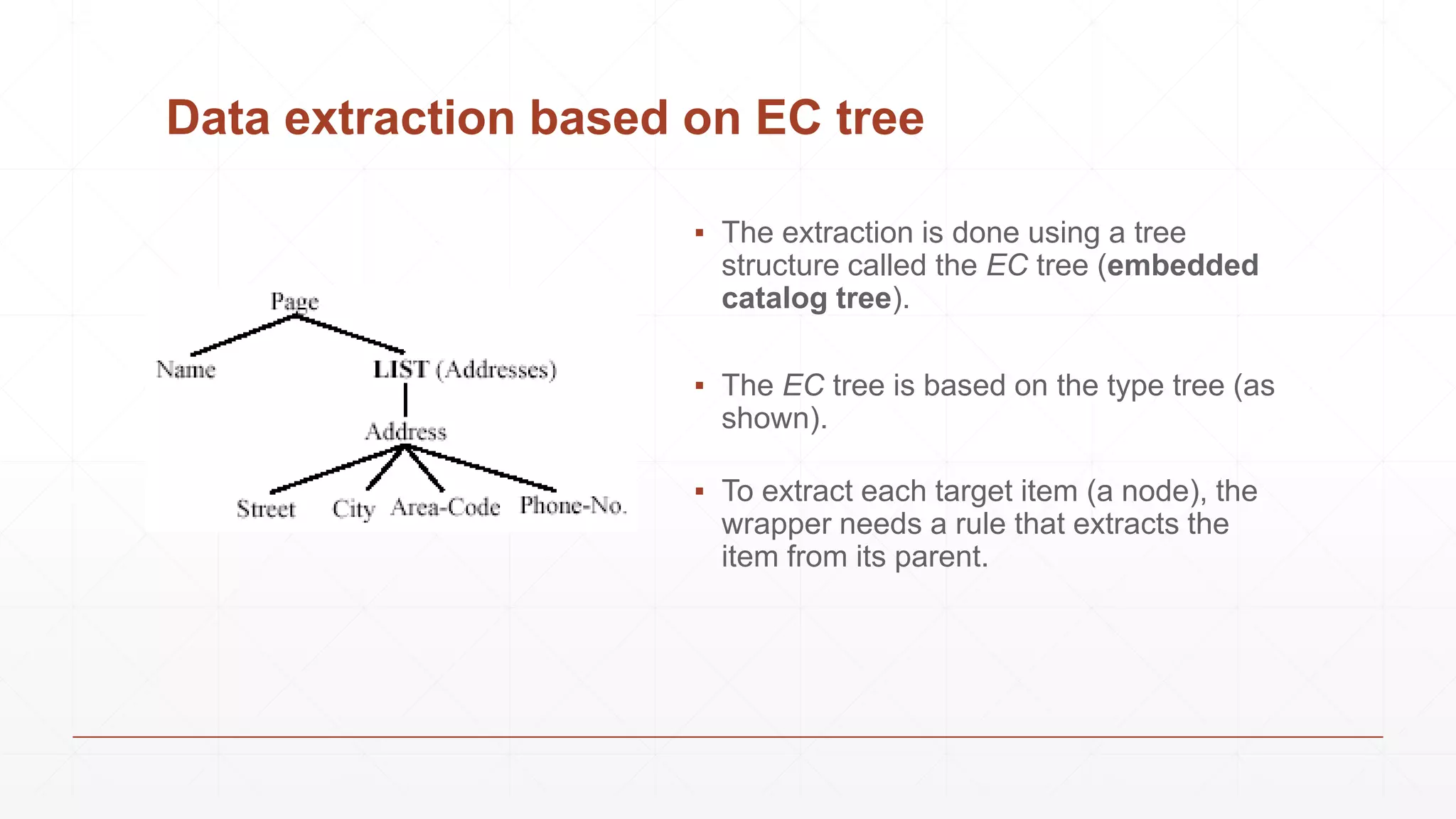

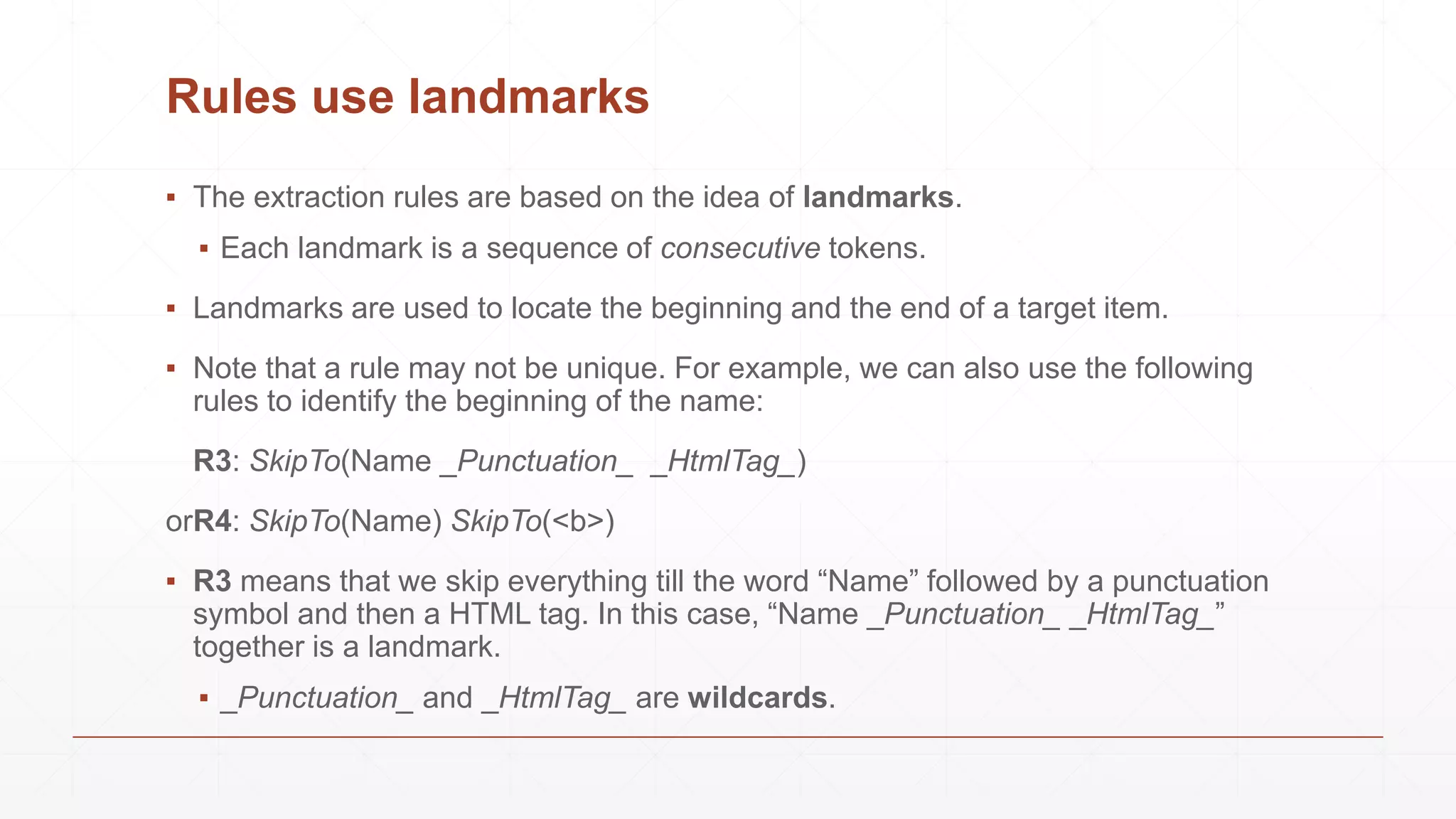

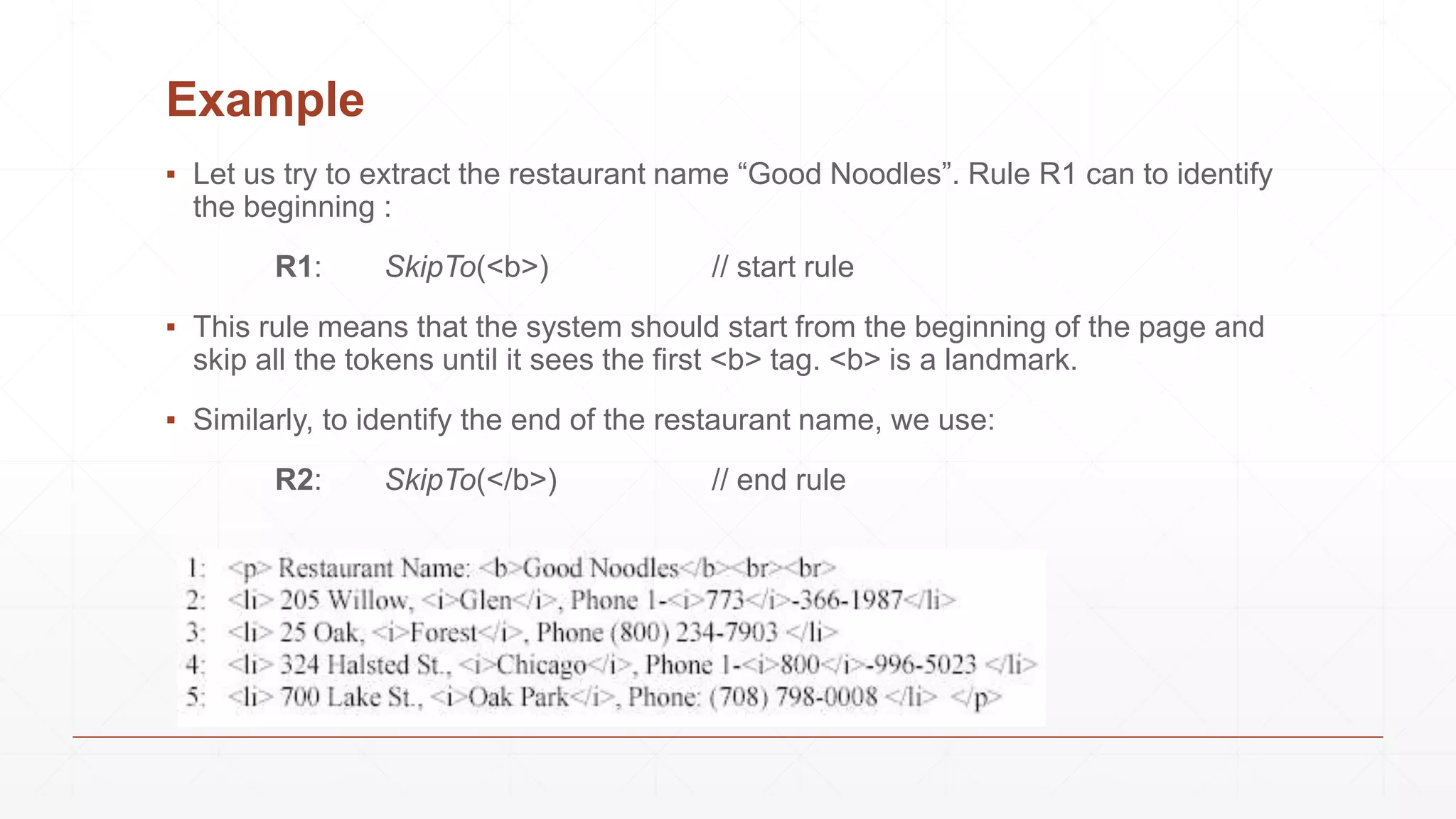

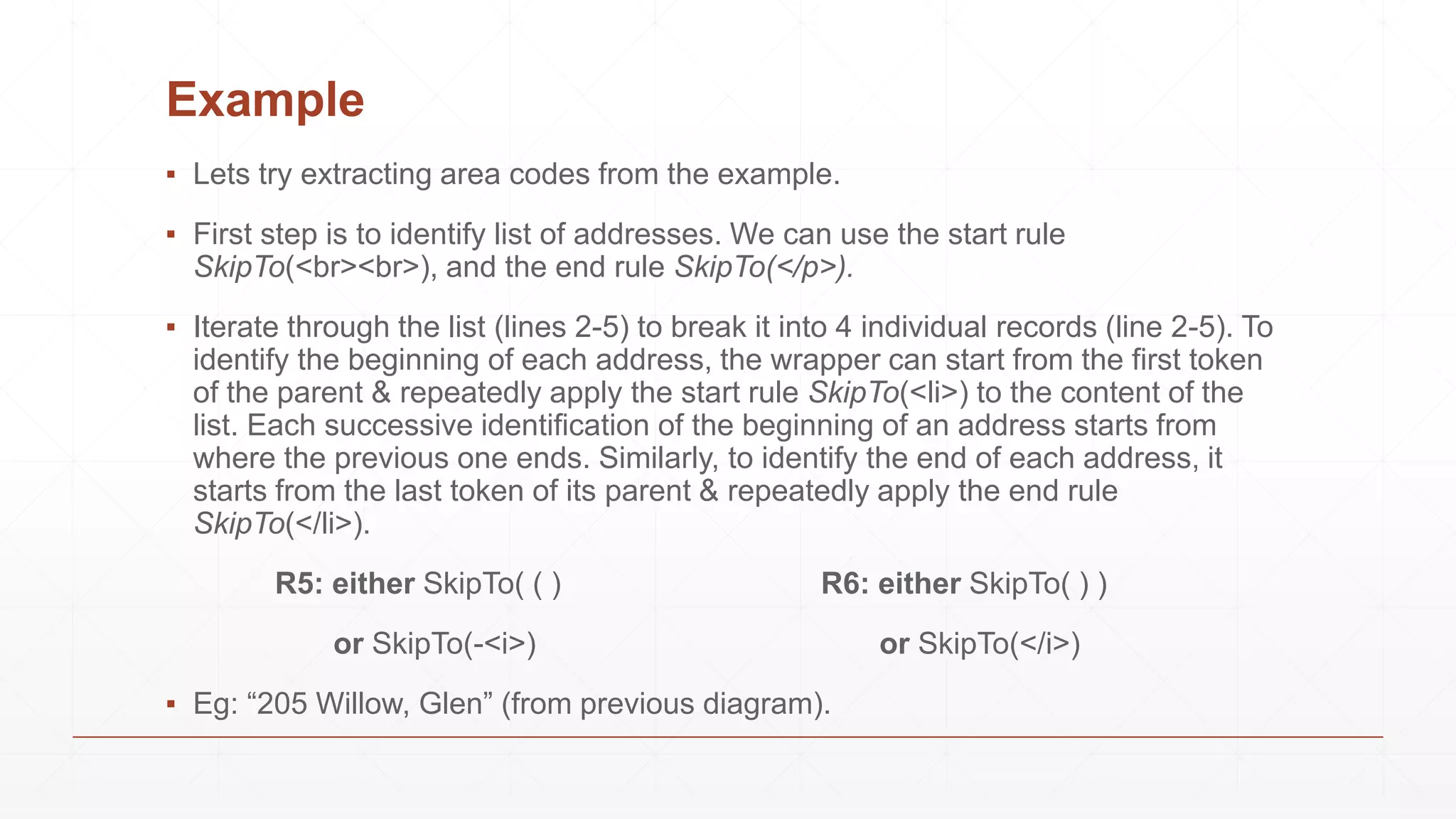

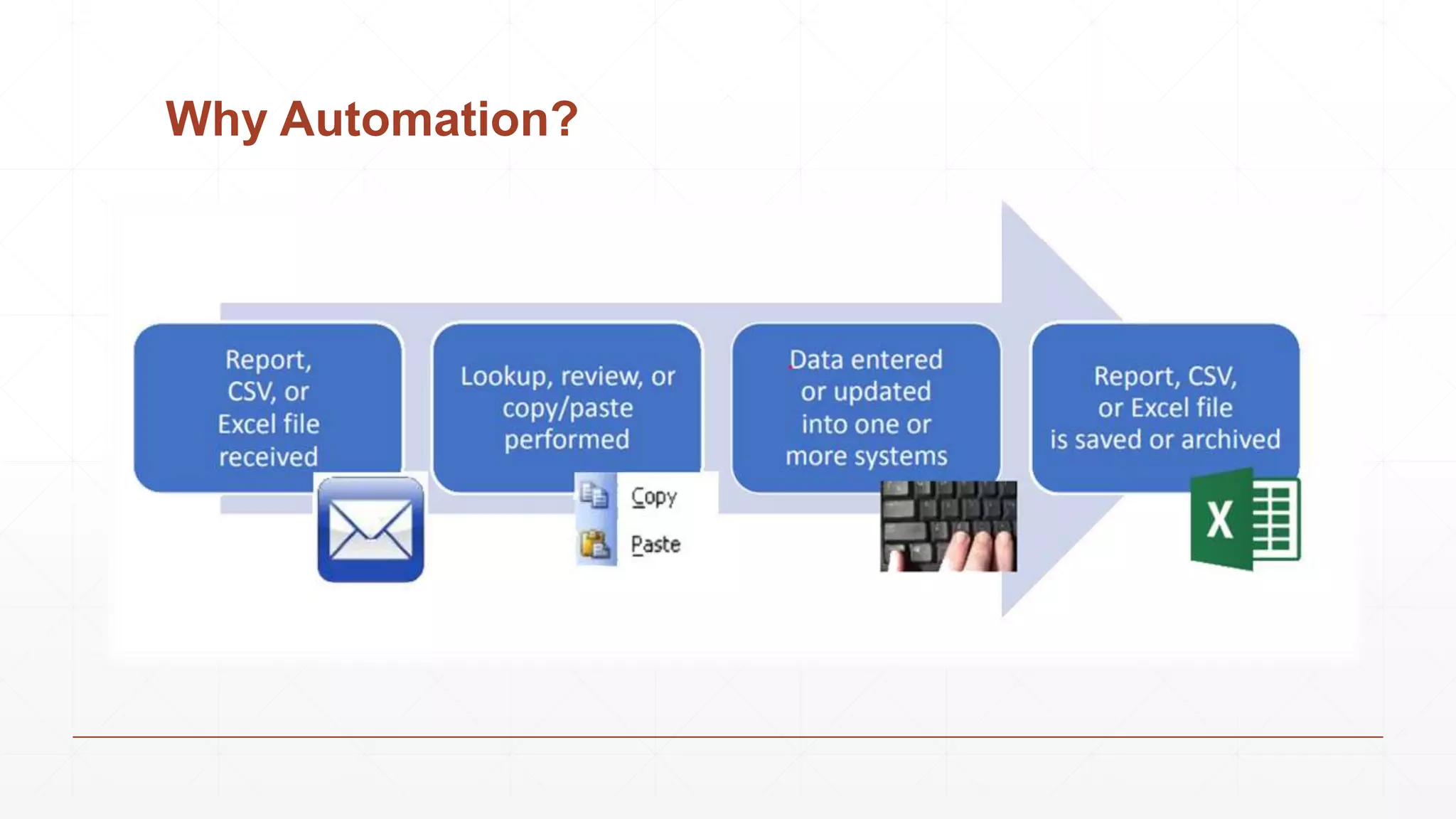

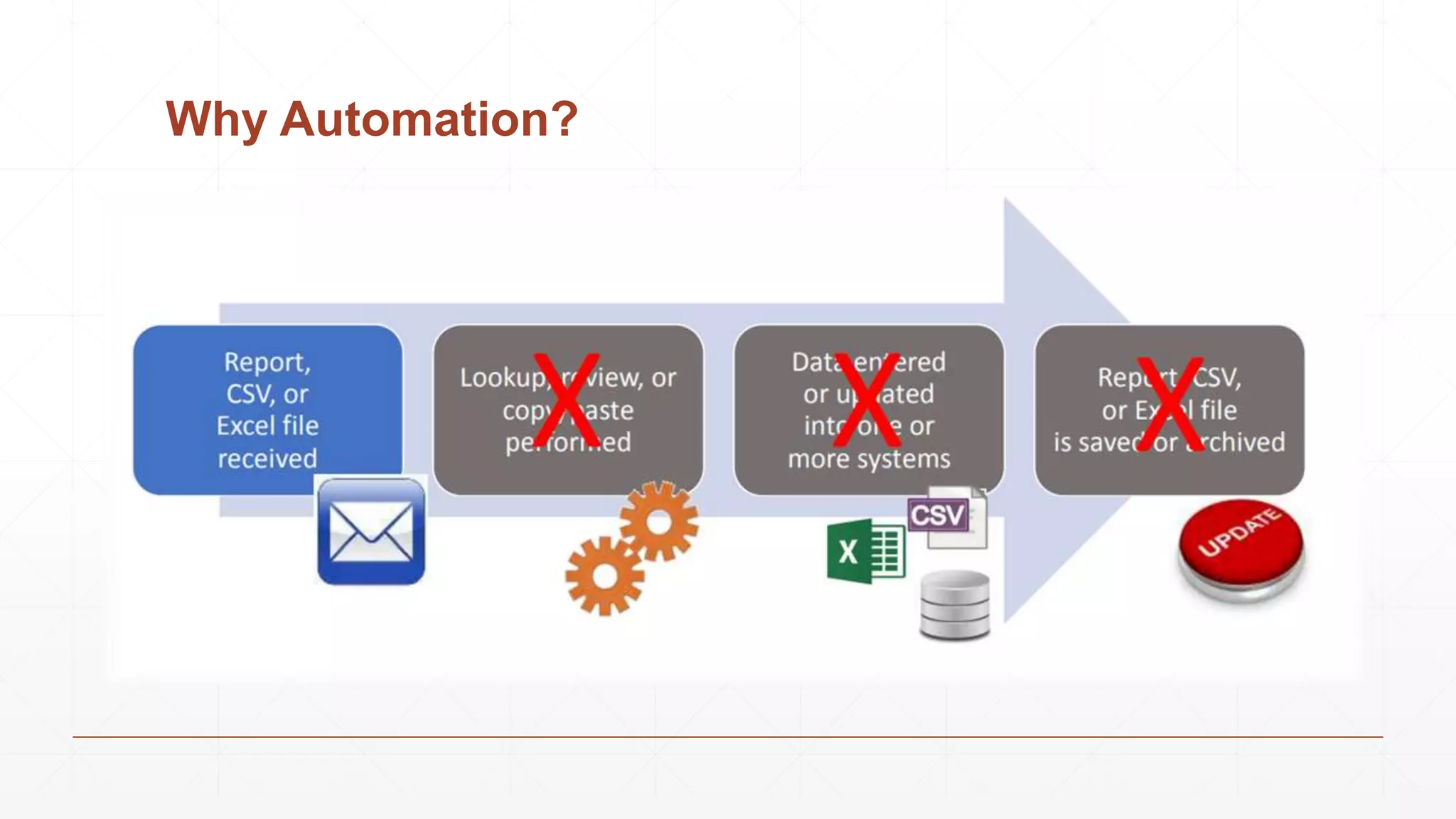

Data extraction is the process of retrieving data from one or more sources for further processing, analysis, or storage. Extraction methods are classified as either logical or physical, and automated techniques make the process more efficient. The document covers the types of extraction, methods such as wrapper induction, and automation approaches that streamline repetitive extraction tasks within organizations.
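
As a rough illustration of wrapper induction, the sketch below learns a simple left/right delimiter pair from a few labeled example pages and then applies it to an unseen page. This is a minimal sketch under stated assumptions, not the method presented in the slides: the function names (`induce_wrapper`, `extract`) and the sample HTML snippets are hypothetical, and the learner only handles a single field whose surrounding markup is identical across examples.

```python
import os


def common_prefix(strings):
    """Longest prefix shared by all strings (character-wise)."""
    return os.path.commonprefix(list(strings))


def common_suffix(strings):
    """Longest suffix shared by all strings."""
    reversed_prefix = os.path.commonprefix([s[::-1] for s in strings])
    return reversed_prefix[::-1]


def induce_wrapper(examples):
    """Learn (left, right) delimiters from (page, target) pairs.

    The left delimiter is the longest text that precedes every target;
    the right delimiter is the longest text that follows every target.
    """
    lefts, rights = [], []
    for page, target in examples:
        start = page.index(target)  # first occurrence; raises ValueError if target is absent
        lefts.append(page[:start])
        rights.append(page[start + len(target):])
    left, right = common_suffix(lefts), common_prefix(rights)
    if not left or not right:
        raise ValueError("examples share no usable delimiters")
    return left, right


def extract(page, wrapper):
    """Return every substring of page enclosed by the learned delimiters."""
    left, right = wrapper
    results, pos = [], 0
    while True:
        start = page.find(left, pos)
        if start == -1:
            break
        start += len(left)
        end = page.find(right, start)
        if end == -1:
            break
        results.append(page[start:end])
        pos = end + len(right)
    return results


# Hypothetical labeled examples: (page text, value a human marked as the target).
examples = [
    ("<li>Name: <b>Alice</b> (HR)</li>", "Alice"),
    ("<li>Name: <b>Bob</b> (IT)</li>", "Bob"),
]
wrapper = induce_wrapper(examples)

# Apply the learned wrapper to a page that was not in the training examples.
new_page = "<li>Name: <b>Carol</b> (Ops)</li><li>Name: <b>Dan</b> (IT)</li>"
print(extract(new_page, wrapper))  # ['Carol', 'Dan']
```

The learned rule is only as general as the examples allow: if the markup around the target varies between pages, the shared delimiters shrink or vanish, which is why practical wrapper learners use richer rule classes than this single delimiter pair.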