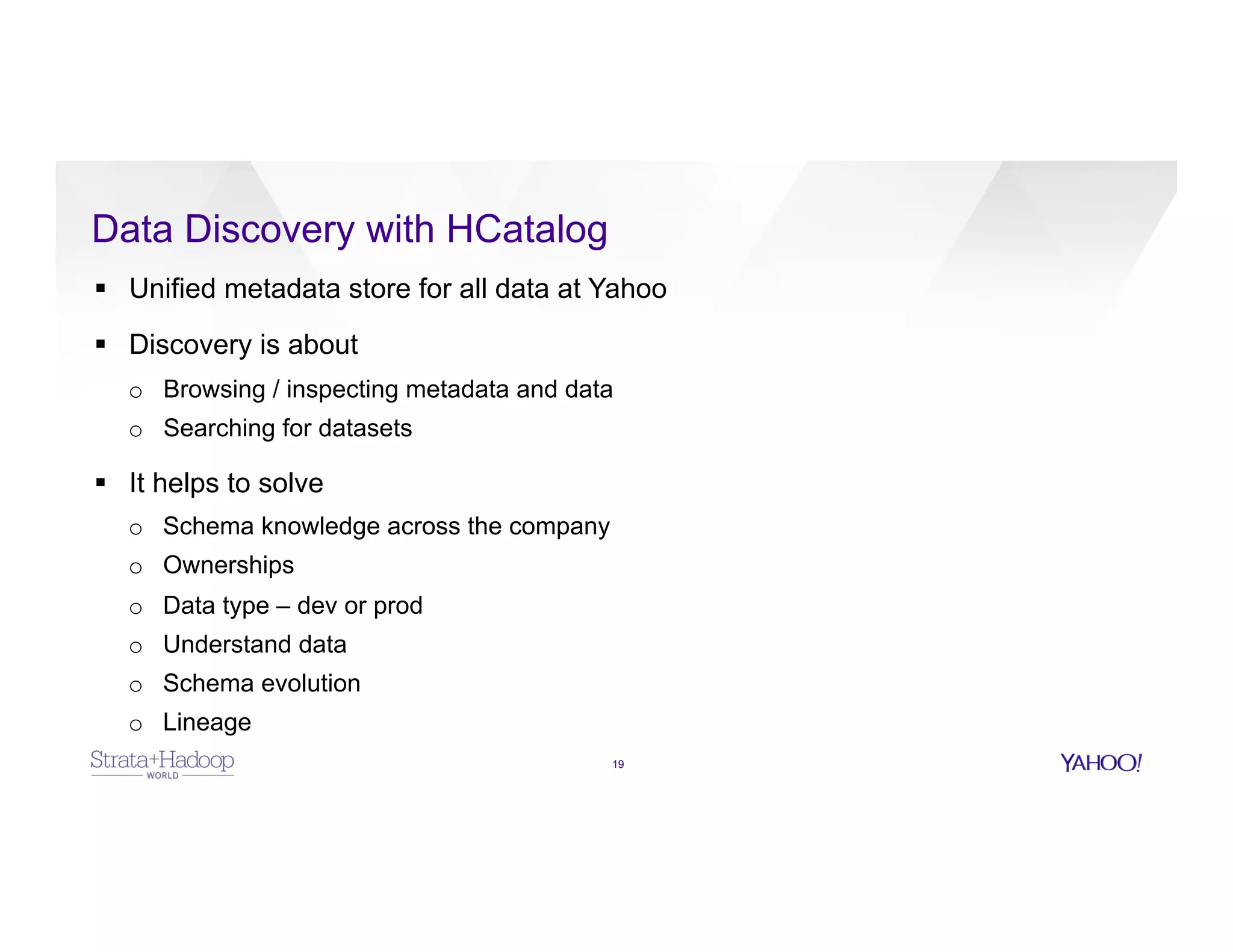

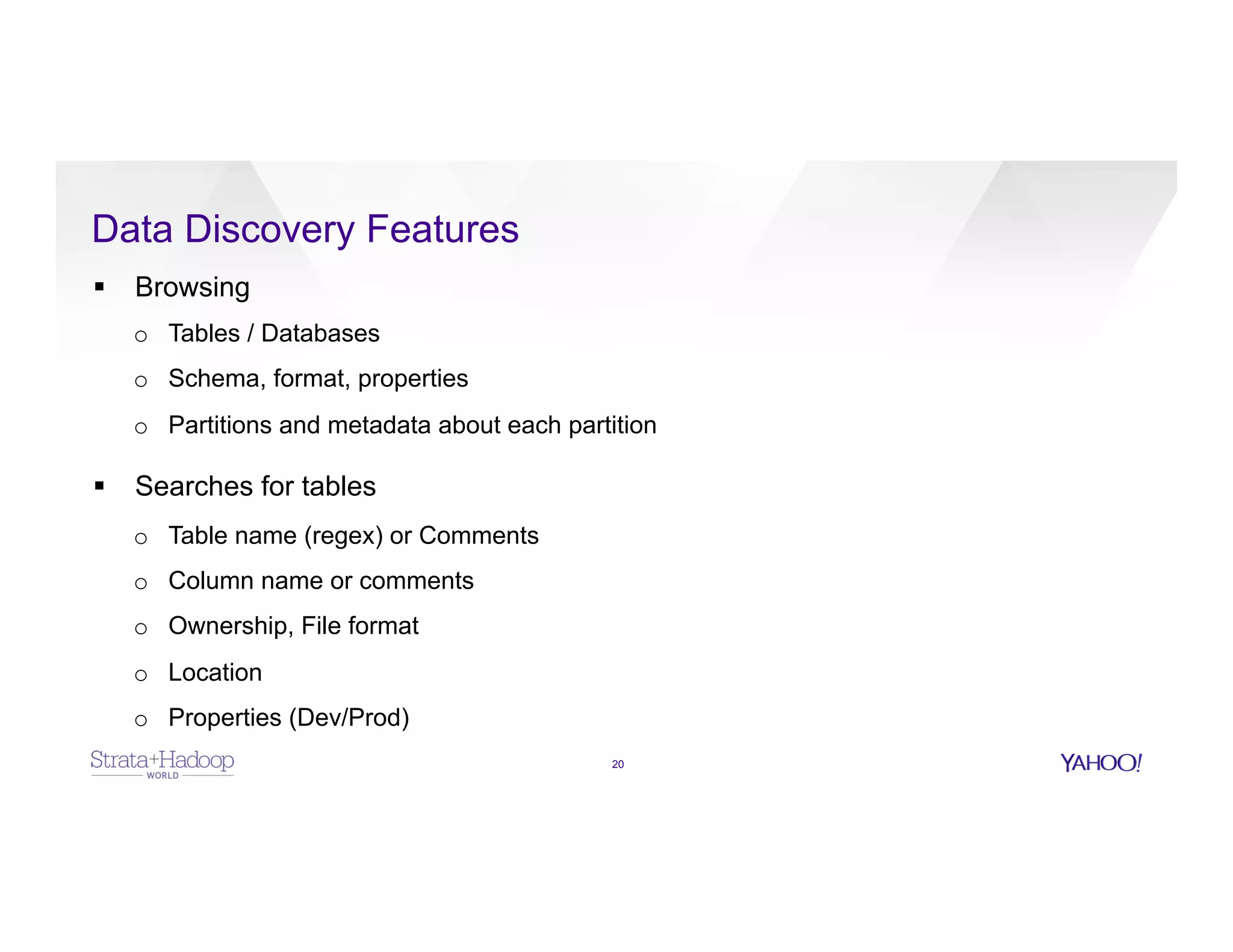

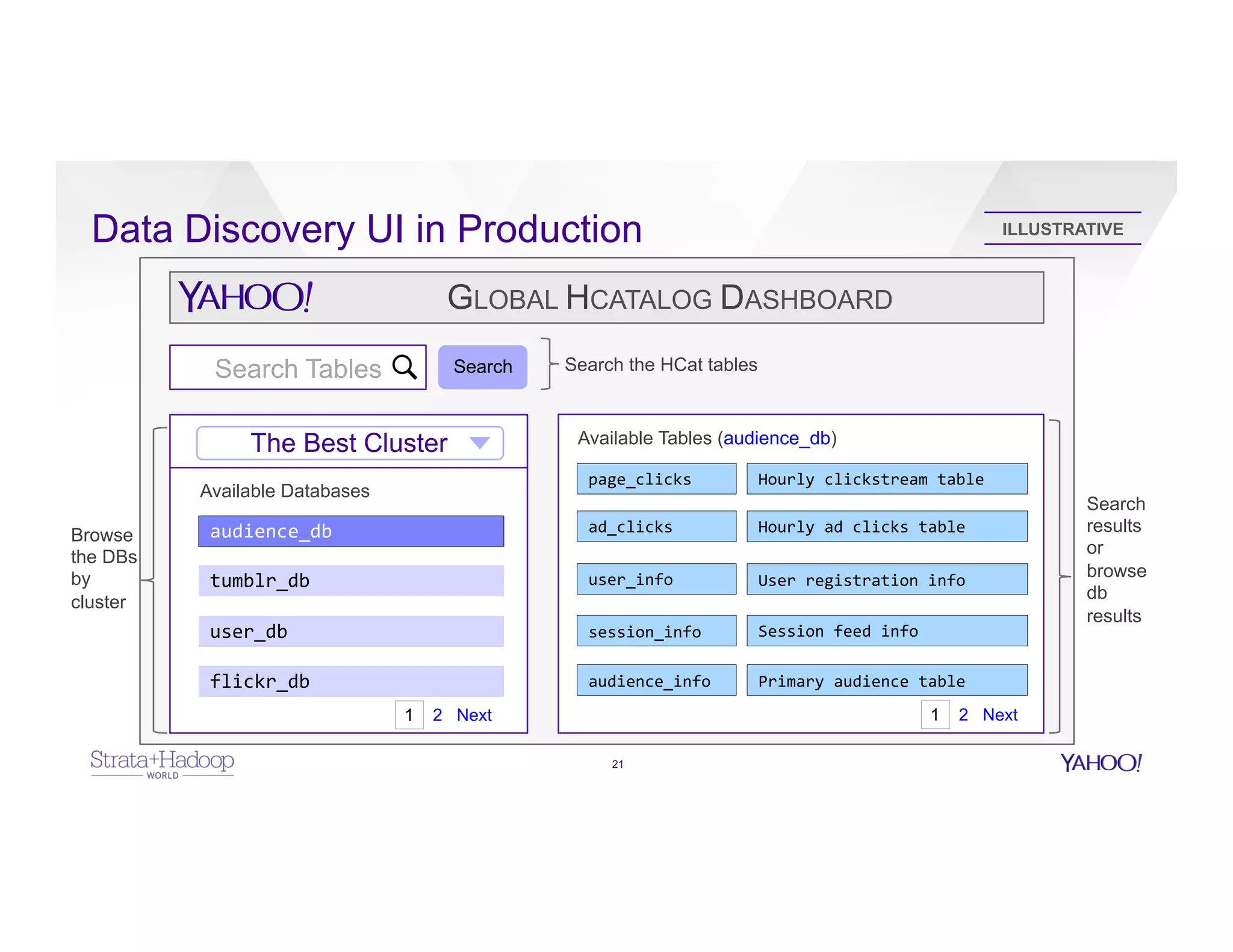

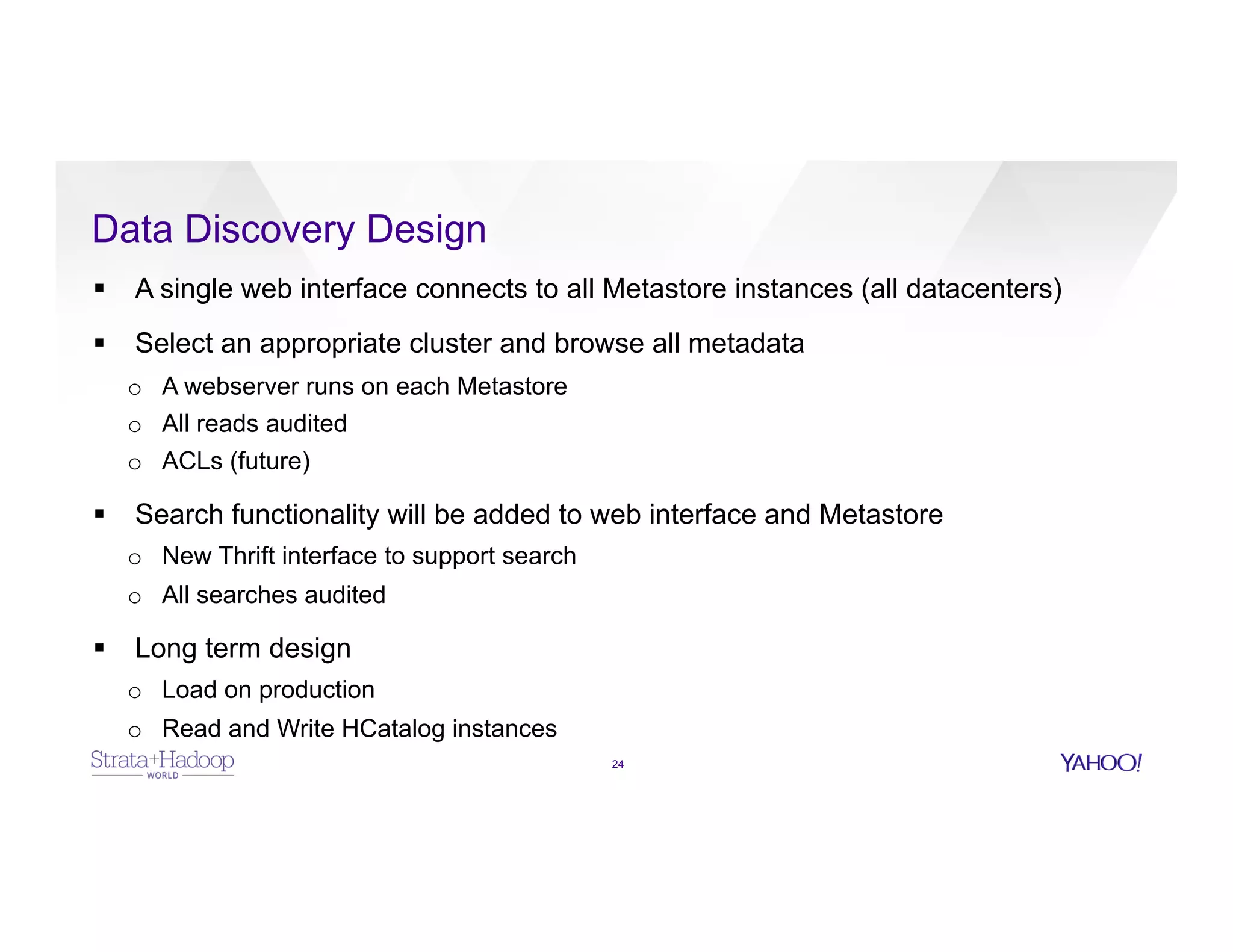

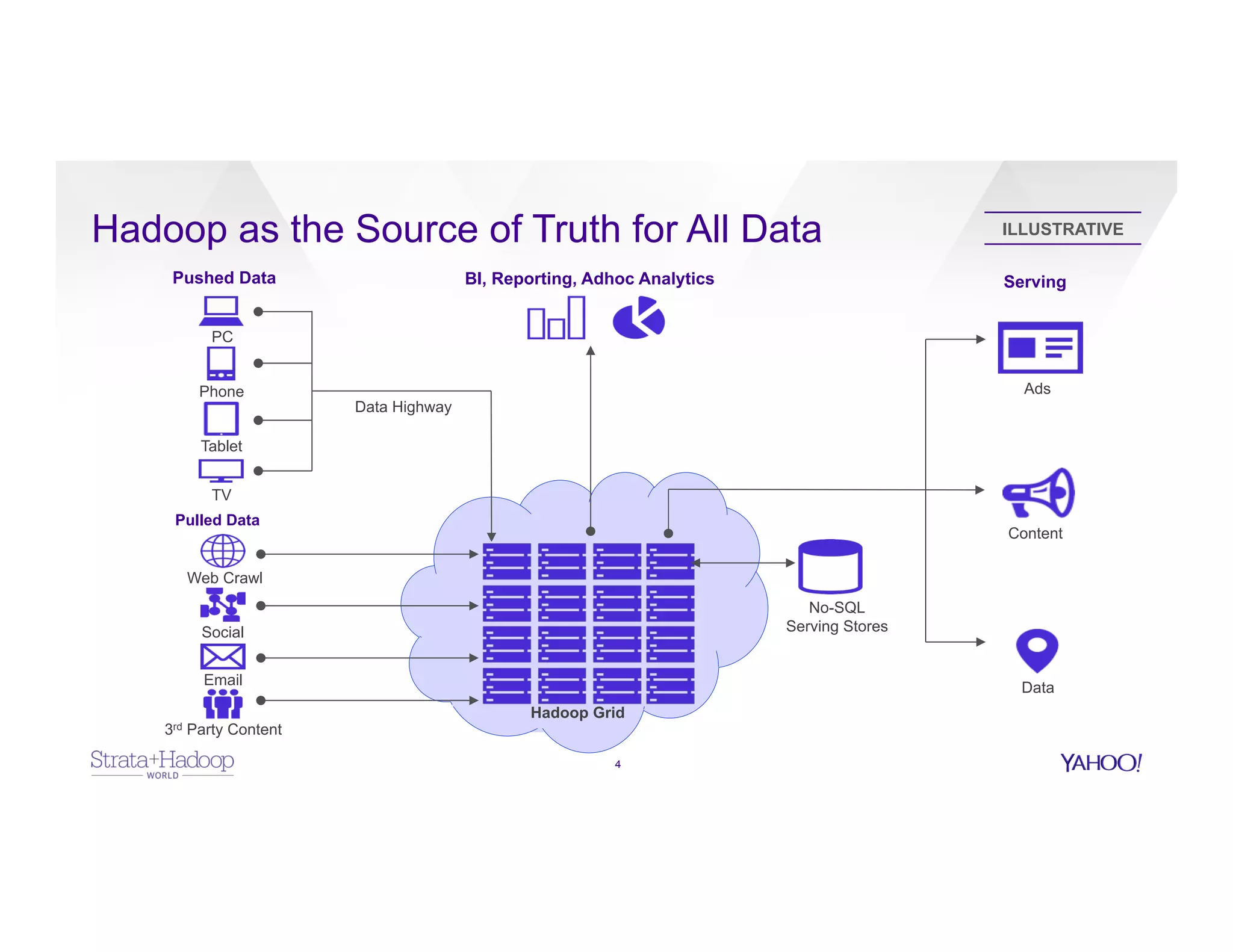

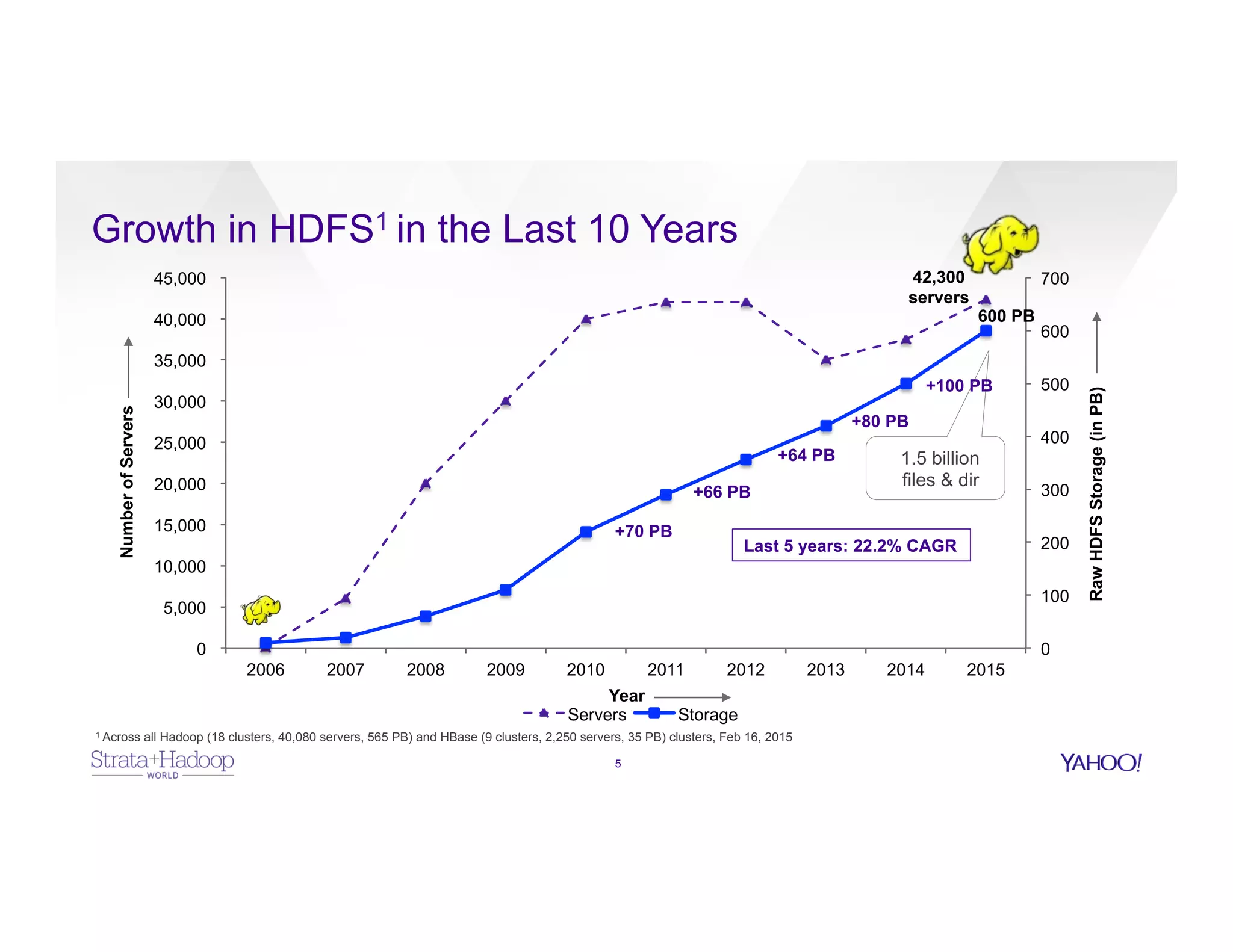

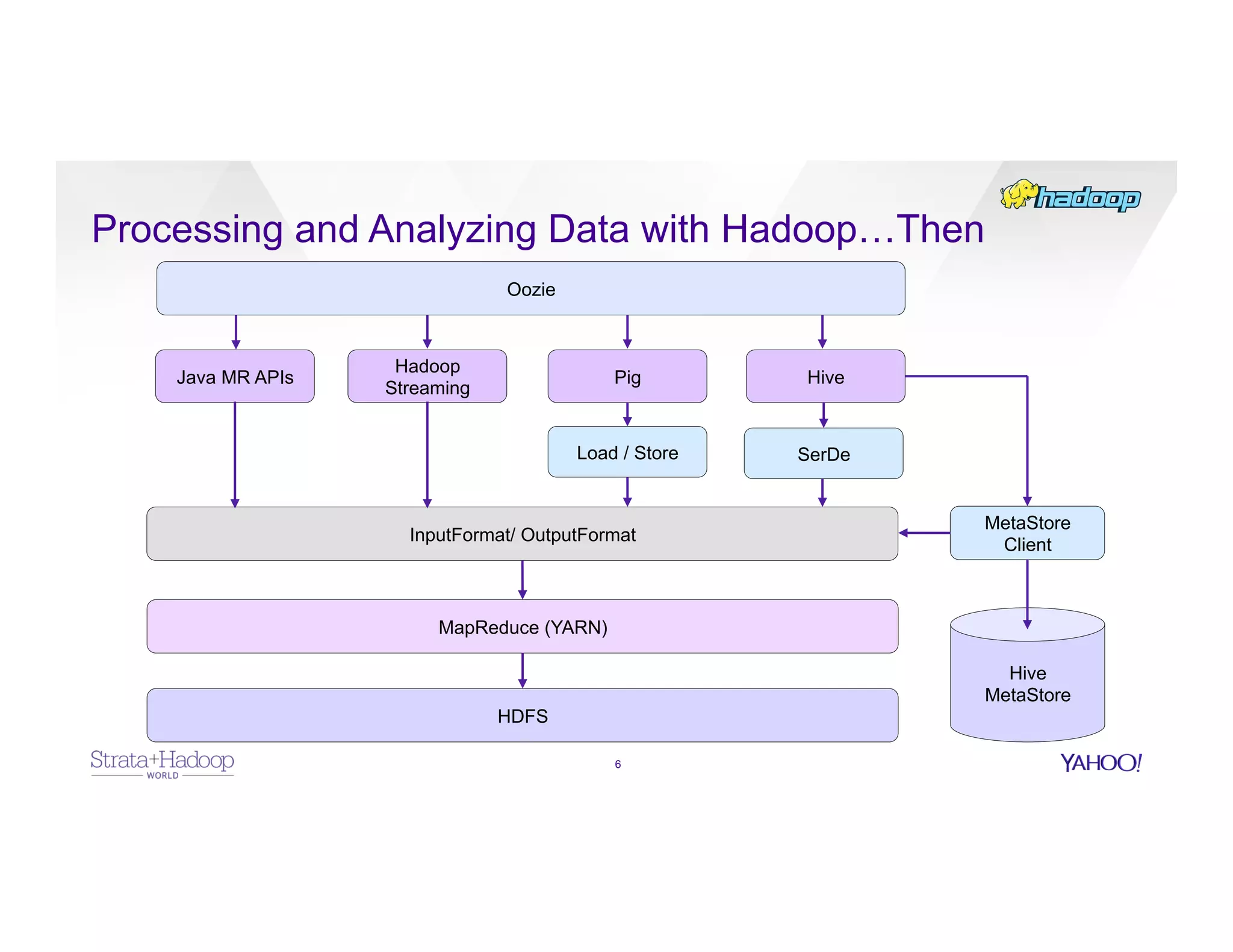

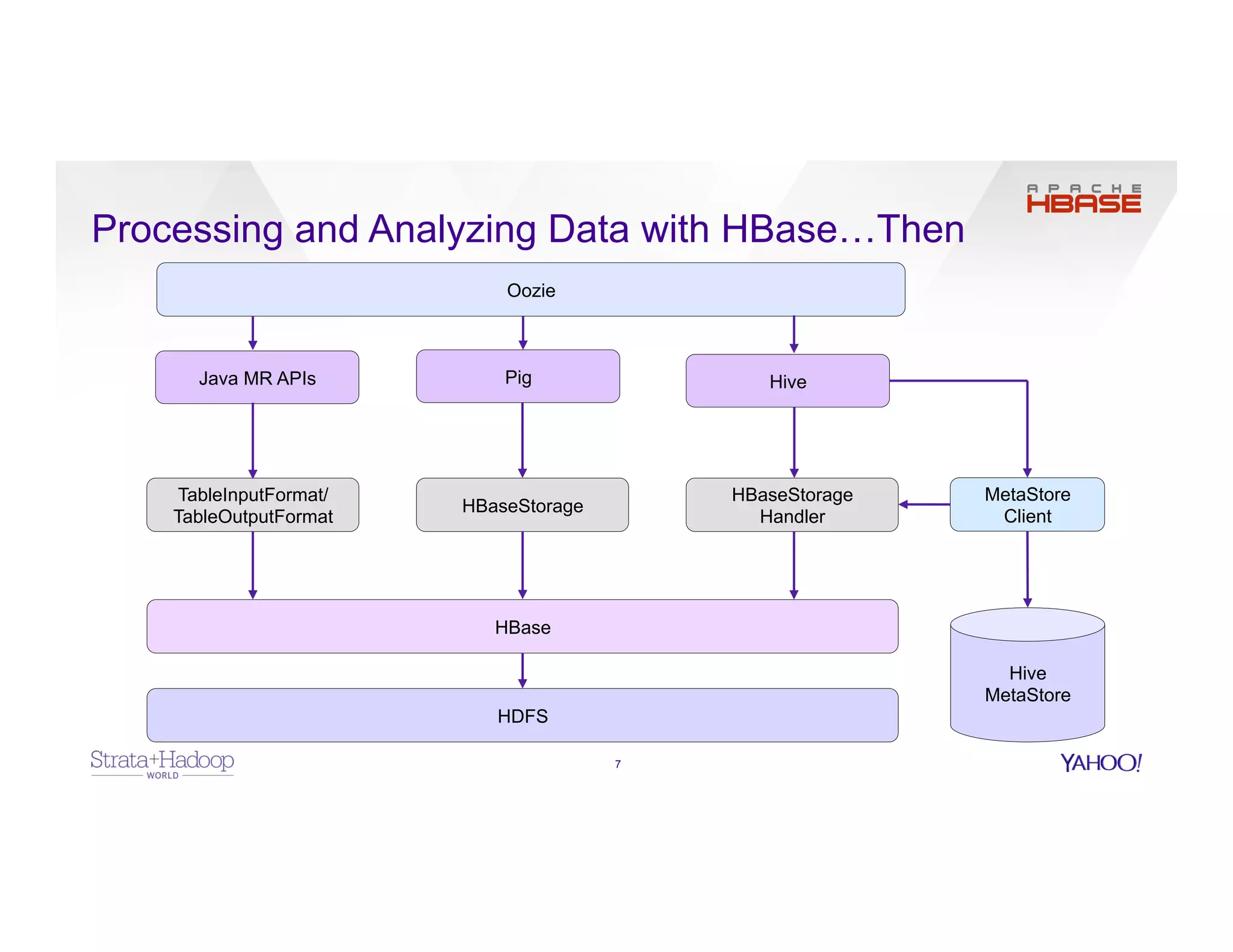

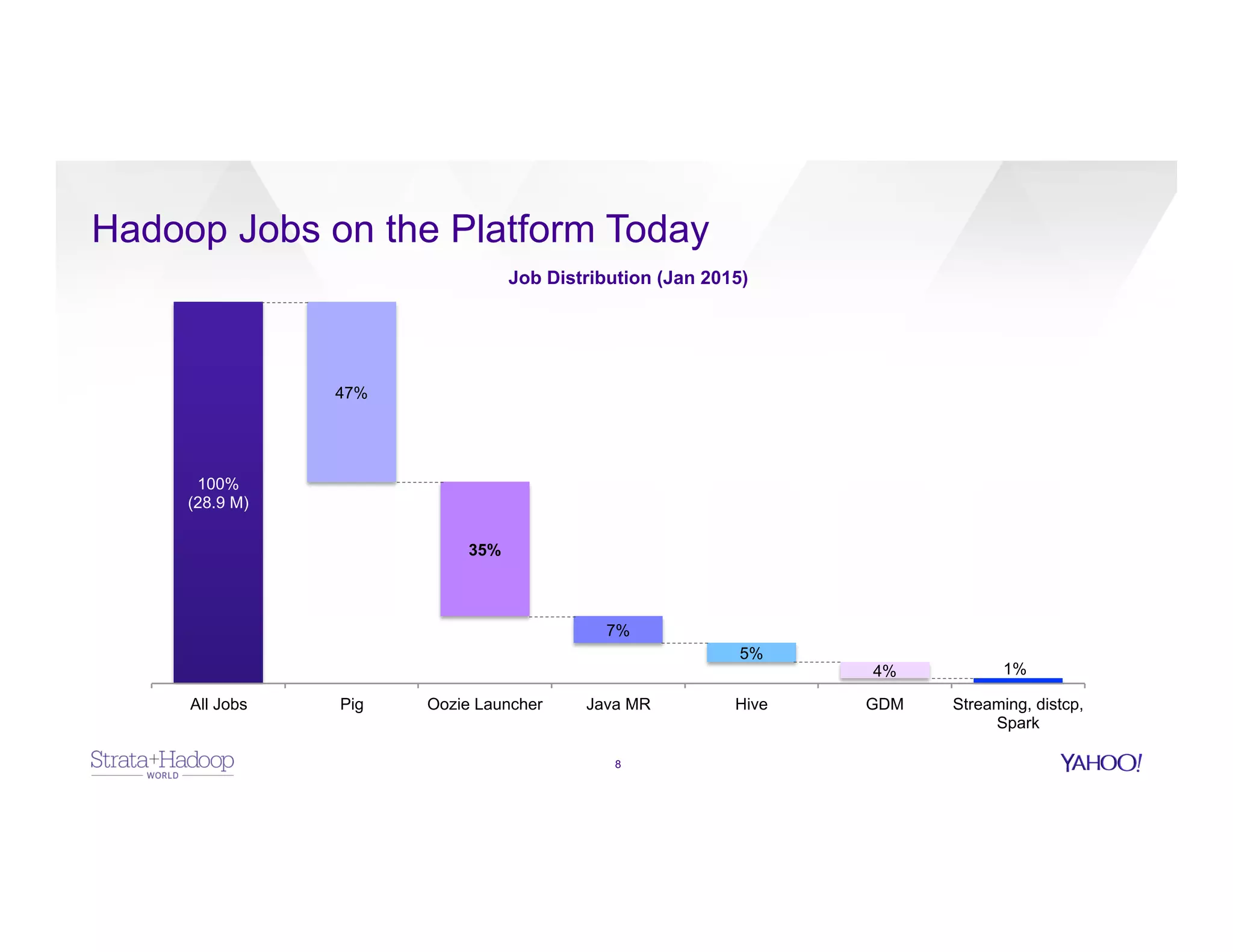

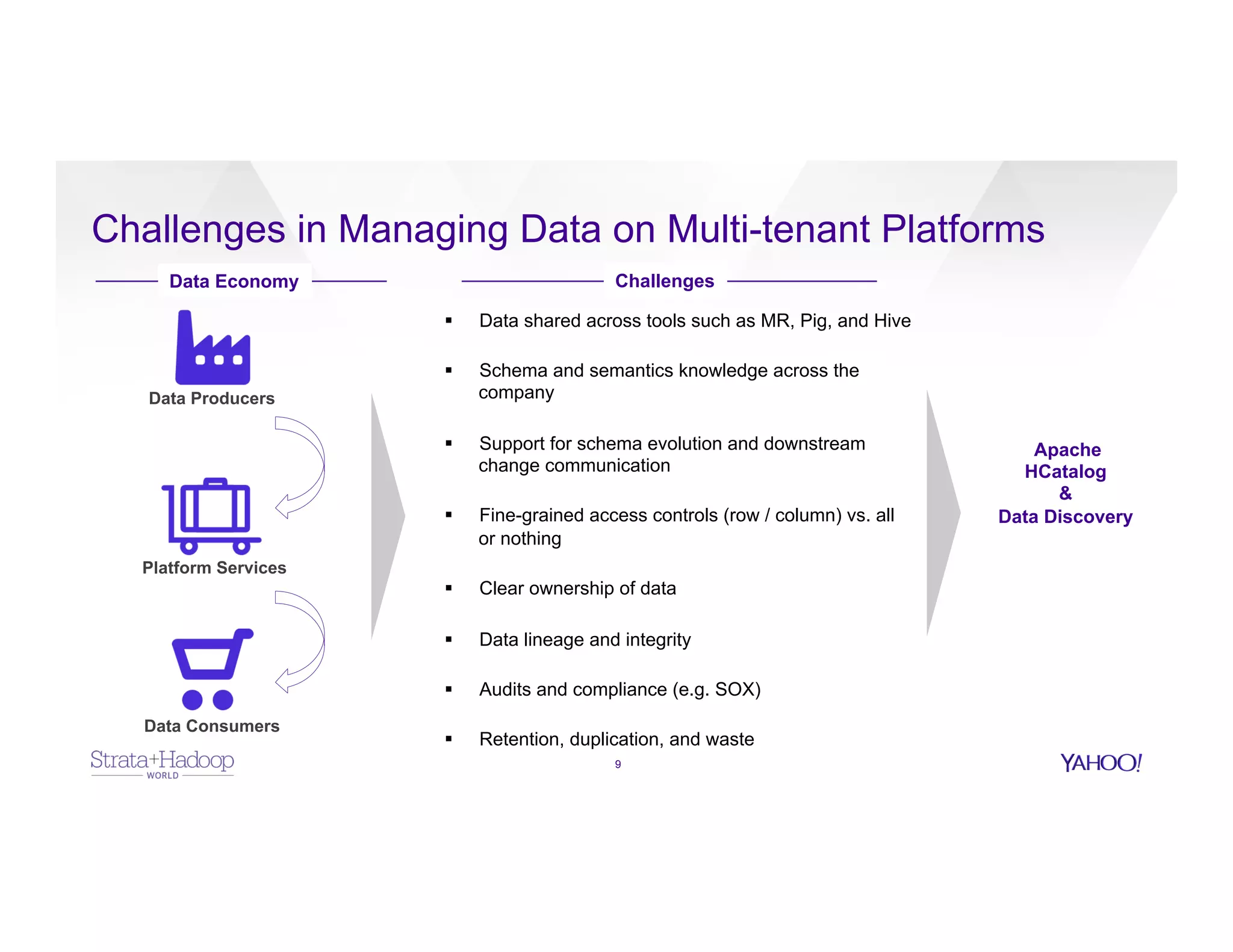

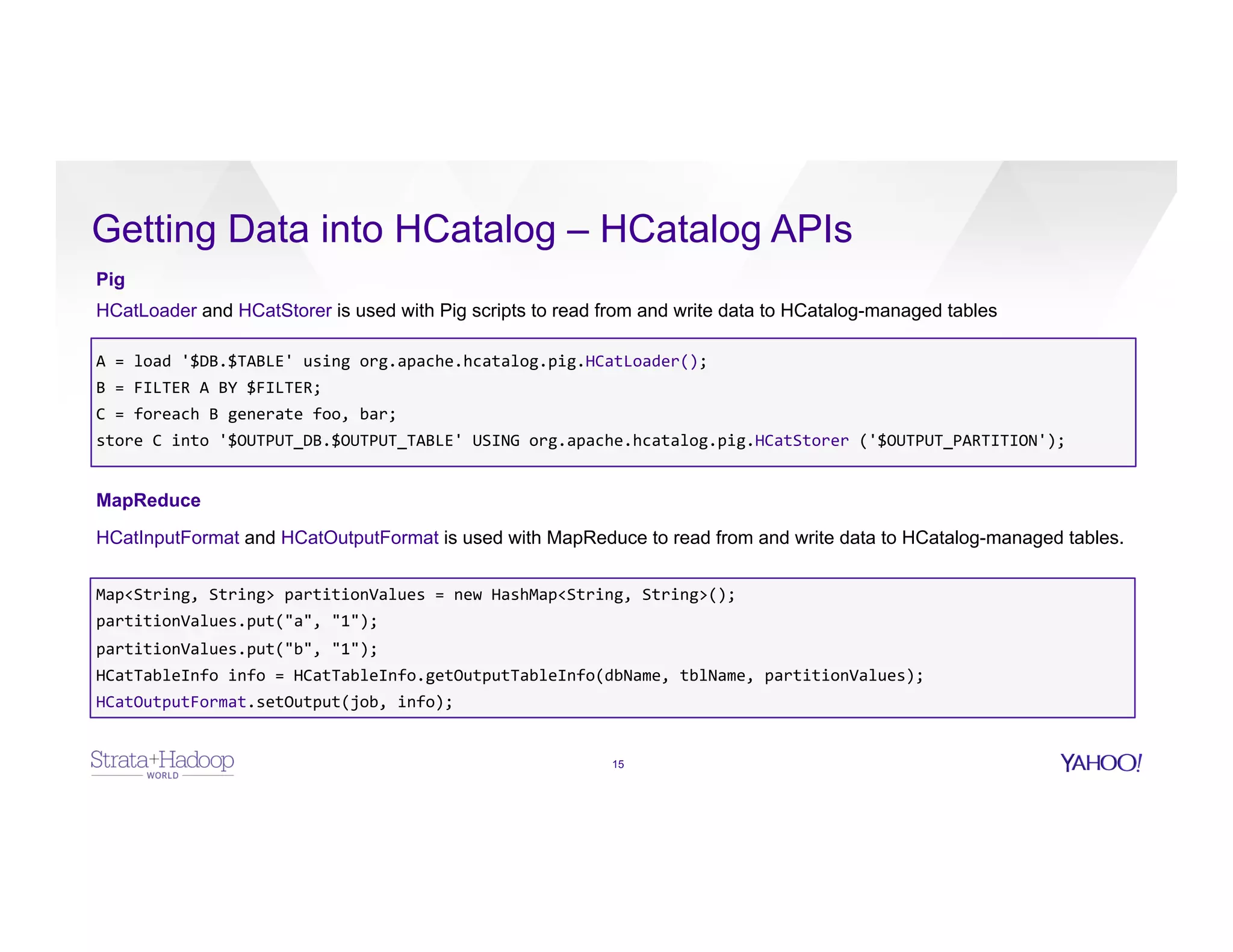

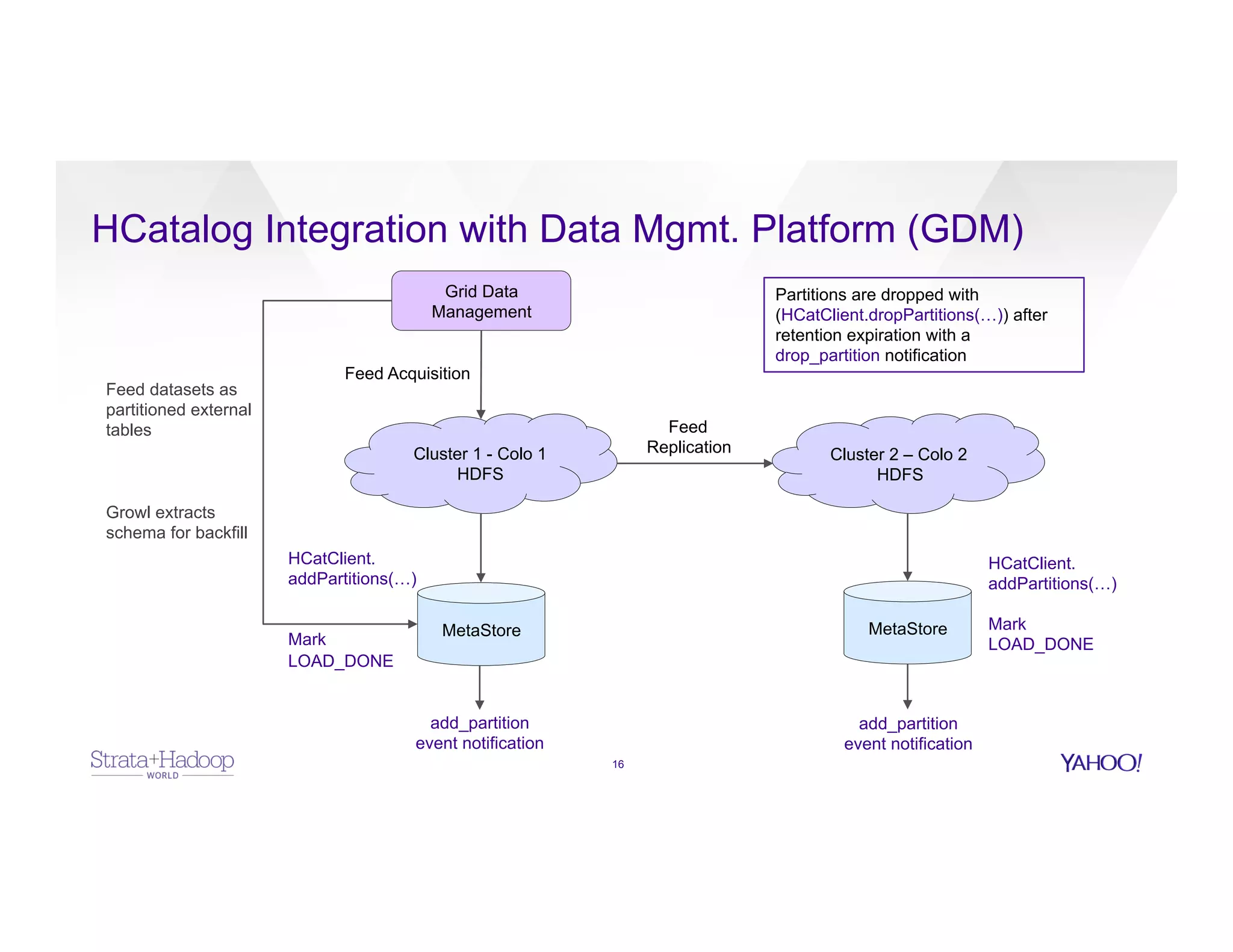

The document discusses data discovery using Apache HCatalog on Hadoop, highlighting the roles of Sumeet Singh and Thiruvel Thirumoolan at Yahoo in managing and analyzing large datasets. It addresses the challenges of data management across multi-tenant platforms, including schema evolution and access control, while proposing HCatalog as a solution for data registration and discovery. Key features of HCatalog are presented, including data management integration, notifications, and a unified metadata store to enhance data accessibility and integrity.

![Getting Data into HCatalog – DML and DDL

14

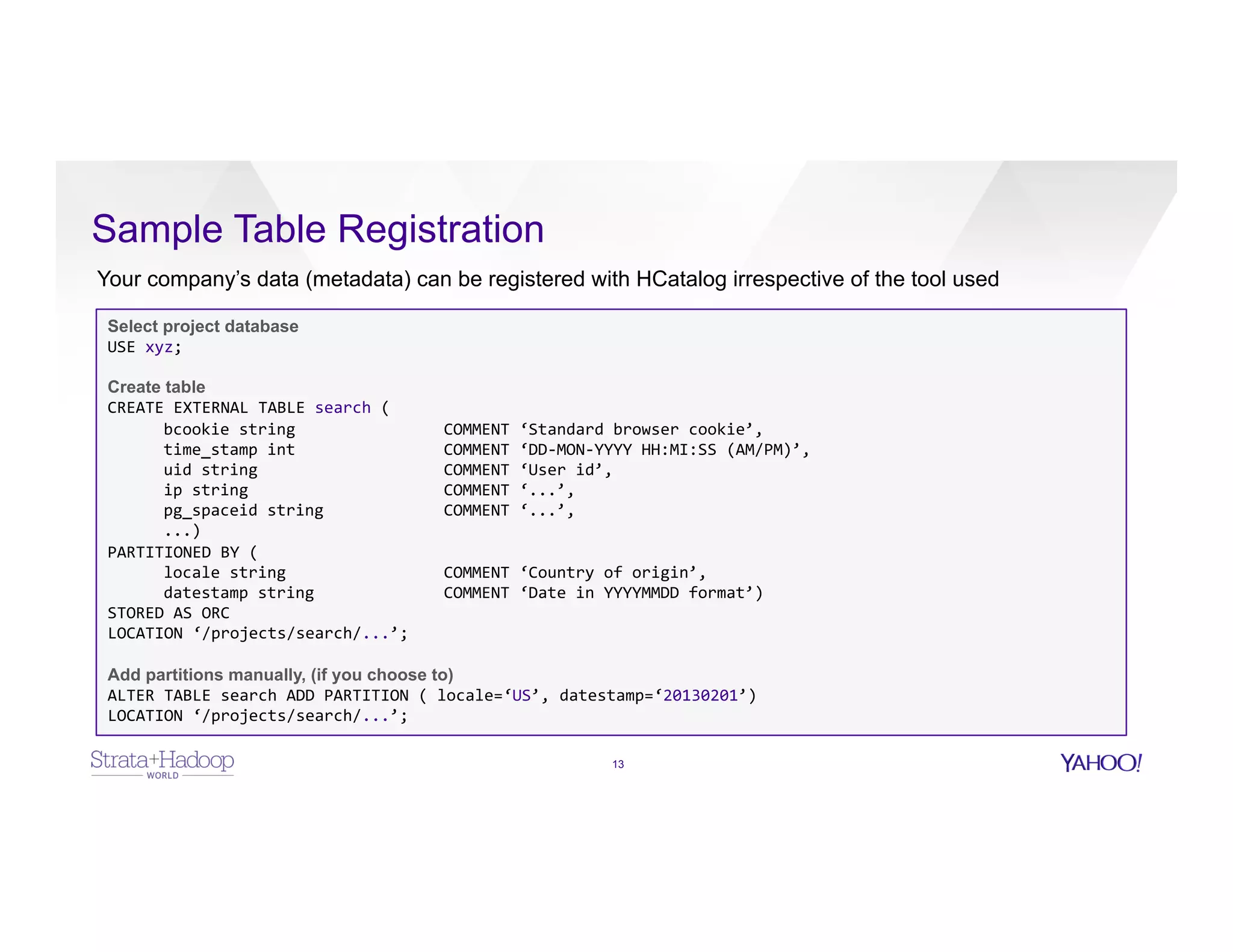

LOAD Files into tables

Copy / move data from HDFS or local filesystem into HCatalog tables

LOAD

DATA

[LOCAL]

INPATH

'filepath'

[OVERWRITE]

INTO

TABLE

tablename

[PARTITION

(partcol1=val1,

partcol2=val2

...)];

INSERT data from a query into tables

Query results can be inserted into tables of file system directories by using the insert clause.

INSERT

OVERWRITE

TABLE

tablename1

[PARTITION

(partcol1=val1,

partcol2=val2

...)

[IF

NOT

EXISTS]]

select_statement1

FROM

from_statement;

INSERT

INTO

TABLE

tablename1

[PARTITION

(partcol1=val1,

partcol2=val2

...)]

select_statement1

FROM

from_statement;

HCatalog also supports multiple inserts in the same statement or dynamic partition inserts.

ALTER TABLE ADD PARTITIONS

ALTER

TABLE

table_name

ADD

PARTITION

(partCol

=

'value1')

location

'loc1’;](https://image.slidesharecdn.com/datadiscoveryonhadoop-150710015723-lva1-app6891/75/Strata-Conference-Hadoop-World-San-Jose-2015-Data-Discovery-on-Hadoop-14-2048.jpg)
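The statement templates on this slide can be filled in programmatically. The following is a minimal Python sketch that renders `LOAD DATA` and `ALTER TABLE ... ADD PARTITION` statements as strings. The helper names (`quote`, `partition_clause`, `load_data`, `add_partition`) and the `/tmp/search/US` path are hypothetical, and the quoting is deliberately simplistic. The sketch only builds text and does not talk to a metastore.

```python
def quote(value):
    """Render a value as a single-quoted HiveQL string literal (simplified escaping)."""
    return "'" + str(value).replace("\\", "\\\\").replace("'", "\\'") + "'"


def partition_clause(spec):
    """Turn {"datestamp": "20140602"} into PARTITION (datestamp='20140602')."""
    pairs = ", ".join(f"{col}={quote(val)}" for col, val in spec.items())
    return f"PARTITION ({pairs})"


def load_data(filepath, table, spec=None, local=False, overwrite=False):
    """Fill in the LOAD DATA template; optional parts mirror the [...] brackets."""
    parts = ["LOAD DATA"]
    if local:
        parts.append("LOCAL")
    parts.append(f"INPATH {quote(filepath)}")
    if overwrite:
        parts.append("OVERWRITE")
    parts.append(f"INTO TABLE {table}")
    if spec:
        parts.append(partition_clause(spec))
    return " ".join(parts) + ";"


def add_partition(table, spec, location):
    """Fill in the ALTER TABLE ... ADD PARTITION ... LOCATION template."""
    return f"ALTER TABLE {table} ADD {partition_clause(spec)} LOCATION {quote(location)};"


if __name__ == "__main__":
    spec = {"datestamp": "20140602", "locale": "US"}  # illustrative partition values
    print(load_data("/tmp/search/US", "xyz.search", spec, overwrite=True))
    print(add_partition("xyz.search", spec, "/tmp/search/US"))
```

Keeping the partition clause in one helper means the `LOAD` and `ALTER TABLE` paths format partition keys identically, which matters when a partition registered by one statement is later addressed by another.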

![HCatalog Notifications](https://image.slidesharecdn.com/datadiscoveryonhadoop-150710015723-lva1-app6891/75/Strata-Conference-Hadoop-World-San-Jose-2015-Data-Discovery-on-Hadoop-17-2048.jpg)

Namespace: e.g. "hcat.thebestcluster"

JMS topic: e.g. "<dbname>.<tablename>"

Sample JMS notification:

    {
      "timestamp": 1360272556,
      "eventType": "ADD_PARTITION",
      "server": "thebestcluster-hcat.dc1.grid.yahoo.com",
      "servicePrincipal": "hcat/thebestcluster-hcat.dc1.grid.yahoo.com@GRID.YAHOO.COM",
      "db": "xyz",
      "table": "search",
      "partitions": [
        { "locale": "US", "datestamp": "20140602" },
        { "locale": "UK", "datestamp": "20140602" },
        { "locale": "IN", "datestamp": "20140602" }
      ]
    }

HCatalog uses JMS (ActiveMQ) notifications, which can be sent for add_database, add_table, add_partition, drop_partition, drop_table, and drop_database. Notifications can be extended to communicate schema changes.

Diagram components: HCat Client, HCat MetaStore, ActiveMQ Server, Subscribers. Diagram labels: "Register Channel", "Publish to listener channels".
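A subscriber that receives a notification like the sample on this slide mostly needs to decode the JSON payload and work out which partitions were affected. The sketch below does only that part in Python, using the standard `json` module. The function names `topic_for` and `summarize` are hypothetical, and joining the namespace with `<dbname>.<tablename>` to form the full topic name is an assumption based on the slide's examples. Connecting to ActiveMQ requires a JMS or STOMP client and is not shown.

```python
import json

# Payload copied from the slide's sample JMS notification.
SAMPLE_MESSAGE = """
{
  "timestamp": 1360272556,
  "eventType": "ADD_PARTITION",
  "server": "thebestcluster-hcat.dc1.grid.yahoo.com",
  "servicePrincipal": "hcat/thebestcluster-hcat.dc1.grid.yahoo.com@GRID.YAHOO.COM",
  "db": "xyz",
  "table": "search",
  "partitions": [
    {"locale": "US", "datestamp": "20140602"},
    {"locale": "UK", "datestamp": "20140602"},
    {"locale": "IN", "datestamp": "20140602"}
  ]
}
"""


def topic_for(namespace, db, table):
    """Assumed topic layout: <namespace>.<dbname>.<tablename>."""
    return f"{namespace}.{db}.{table}"


def summarize(message_text):
    """Decode one notification and list the affected partitions as key=value paths."""
    event = json.loads(message_text)
    partitions = [
        "/".join(f"{key}={value}" for key, value in part.items())
        for part in event.get("partitions", [])  # absent for table/database events
    ]
    return {
        "event_type": event["eventType"],
        "table": f'{event["db"]}.{event["table"]}',
        "partitions": partitions,
    }


if __name__ == "__main__":
    summary = summarize(SAMPLE_MESSAGE)
    print(topic_for("hcat.thebestcluster", "xyz", "search"))
    print(summary["event_type"], summary["table"])
    for path in summary["partitions"]:
        print("  new partition:", path)
```

A downstream job could use the partition list to trigger processing as soon as a partition is registered, instead of polling the metastore or HDFS for new data.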