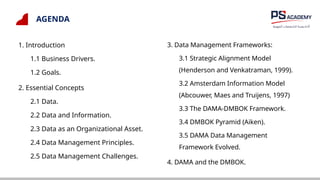

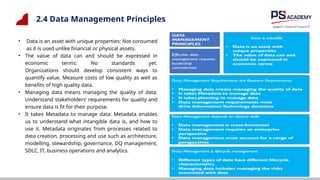

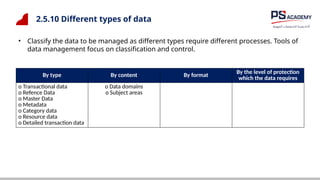

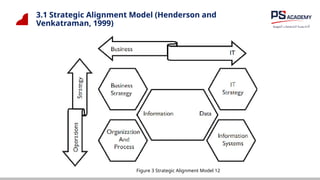

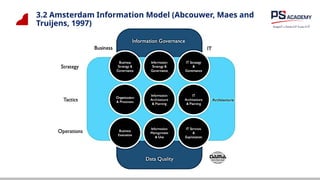

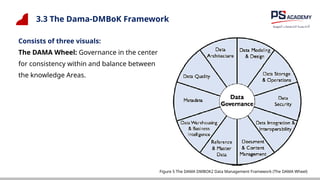

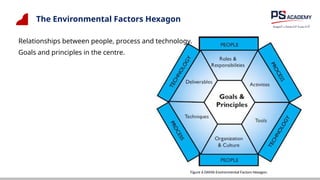

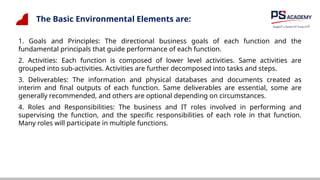

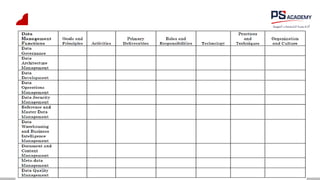

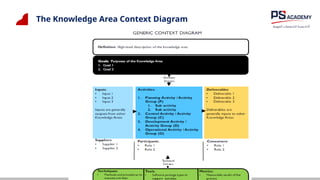

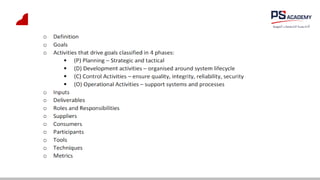

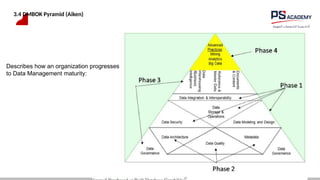

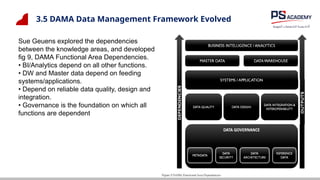

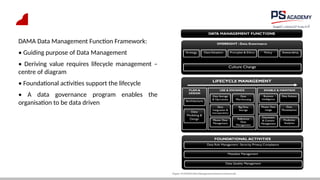

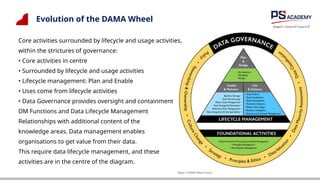

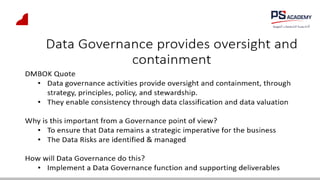

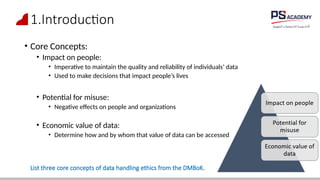

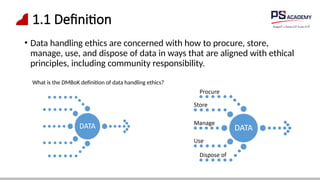

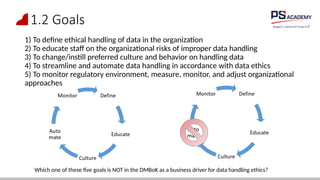

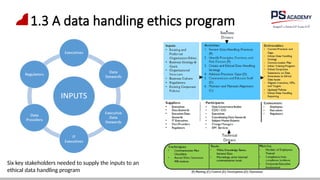

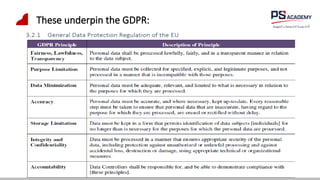

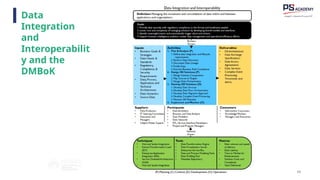

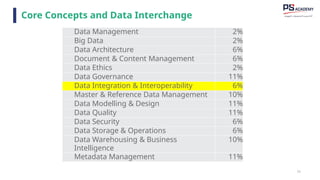

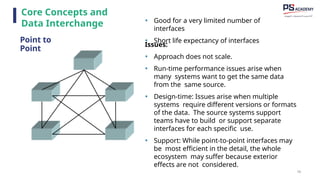

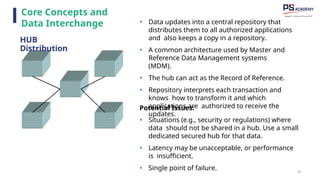

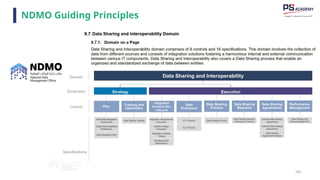

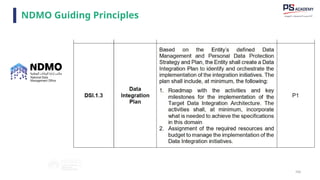

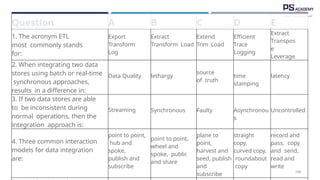

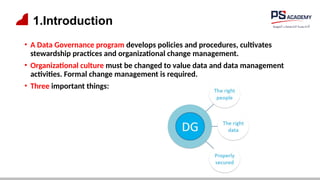

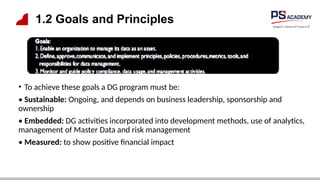

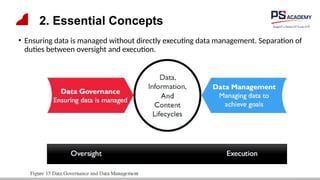

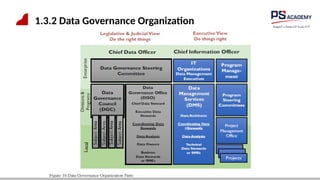

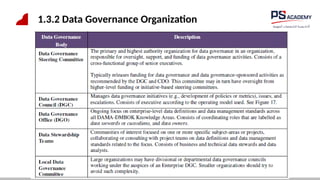

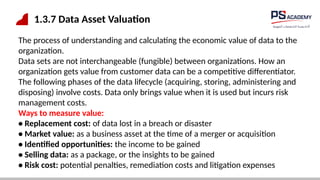

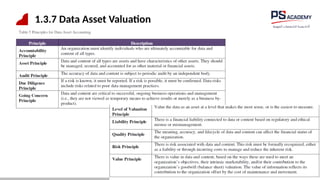

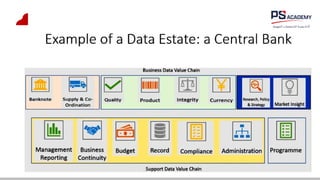

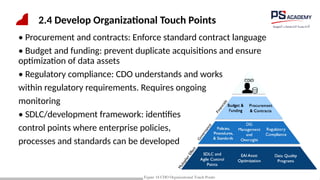

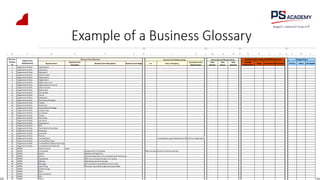

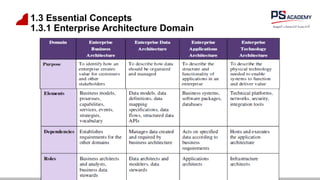

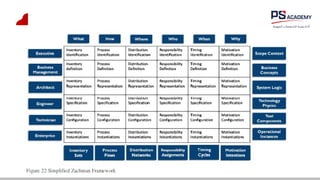

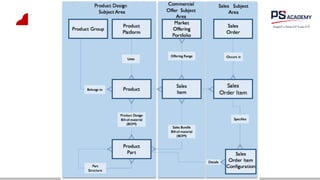

The document outlines the essential concepts, principles, challenges, and frameworks of data management. It treats data as an organizational asset whose effective management requires both technical and non-technical skills. It also discusses the importance of strategic alignment, data lifecycle management, and the value of high-quality data in achieving competitive advantage. Finally, it presents several frameworks and models that guide how enterprises organize their data management practices.